X-E-Speech: Joint Training Framework of Non-Autoregressive Cross-lingual Emotional Text-to-Speech and Voice Conversion

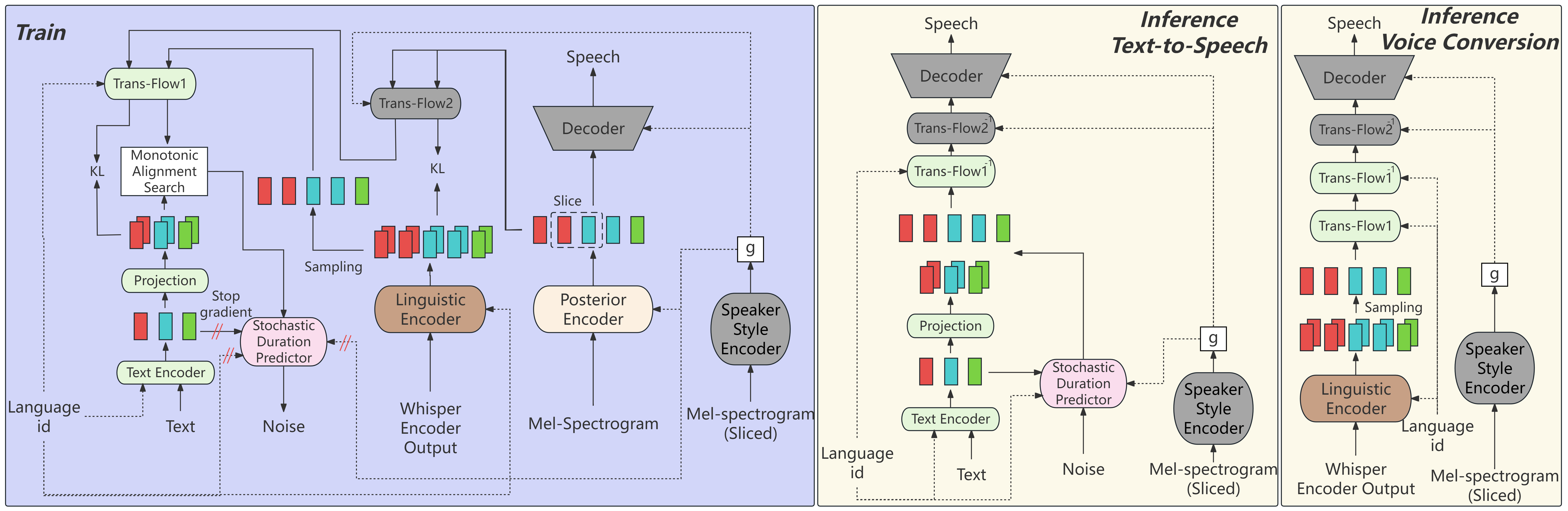

In this paper, we propose X-E-Speech, a cross-lingual emotional speech generation model that disentangles speaker style from cross-lingual content features by jointly training non-autoregressive (NAR) voice conversion (VC) and text-to-speech (TTS) models. For TTS, we freeze the style-related model components and fine-tune the content-related structures, which enables accent-free cross-lingual emotional speech synthesis. For VC, we improve the emotion similarity between the generated speech and the reference speech by introducing a similarity loss between the content features used for VC and the text representations used for TTS.
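One way to picture the VC/TTS similarity loss is as a per-frame distance that pulls the two representations together. The sketch below is a hypothetical illustration, not the repo's code: it assumes the VC content features and the TTS text features are already aligned to the same number of frames, and it uses 1 - cosine similarity as the distance, which may differ from the distance used in the paper.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def similarity_loss(content: Sequence[Sequence[float]],
                    text: Sequence[Sequence[float]]) -> float:
    """Hypothetical loss: mean over frames of (1 - cosine similarity).

    Assumes `content` (VC branch) and `text` (TTS branch) are frame-aligned
    lists of feature vectors with the same length.
    """
    if len(content) != len(text) or not content:
        raise ValueError("expected two non-empty, frame-aligned sequences")
    return sum(1.0 - cosine(c, t) for c, t in zip(content, text)) / len(content)


# Parallel feature vectors give zero loss; orthogonal ones give 1.
print(similarity_loss([[1.0, 0.0], [0.0, 2.0]], [[3.0, 0.0], [0.0, 1.0]]))  # 0.0
print(similarity_loss([[1.0, 0.0]], [[0.0, 1.0]]))  # 1.0
```

A cosine distance ignores feature scale, so the loss only asks the two branches to point in the same direction; an L1 or L2 distance would additionally constrain magnitudes.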

Visit our demo page for audio samples.

We also provide the pretrained models.

TODO:

- Better inference code and instructions

- HuggingFace or Colab

Clone this repo:

git clone https://github.com/X-E-Speech/X-E-Speech-code.git

cd into this repo:

cd X-E-Speech-code

Install the Python requirements:

pip install -r requirements.txt

You may also need to install:

- espeak for English:

apt-get install espeak

- pypinyin and jieba for Chinese:

pip install pypinyin jieba

- pyopenjtalk for Japanese:

pip install pyopenjtalk
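A quick way to confirm that these optional g2p dependencies are present is the hypothetical helper below (not part of the repo). It uses only the standard library: `importlib.util.find_spec` for the Python packages and `shutil.which` for the espeak executable, on the assumption that the English front end relies on an installed espeak.

```python
import importlib.util
import shutil
from typing import List

# Python packages listed above for Chinese and Japanese g2p.
PY_PACKAGES = ["pypinyin", "jieba", "pyopenjtalk"]
# Command-line tool installed above for English g2p.
EXECUTABLES = ["espeak"]


def missing_dependencies() -> List[str]:
    """Return the names of the optional g2p dependencies that are not installed."""
    missing = [name for name in PY_PACKAGES if importlib.util.find_spec(name) is None]
    missing += [exe for exe in EXECUTABLES if shutil.which(exe) is None]
    return missing


gaps = missing_dependencies()
print("all g2p dependencies found" if not gaps else f"missing: {', '.join(gaps)}")
```

You only need the packages for the languages you actually preprocess, so a missing entry is not necessarily an error.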

Download Whisper-large-v2 and put it under the directory 'whisper-pretrain/'.

Download the VCTK, Aishell3, and JVS datasets (for training cross-lingual TTS and VC).

Download the ESD dataset (for training cross-lingual emotional TTS and VC).

Build Monotonic Alignment Search
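Monotonic Alignment Search (MAS) comes from VITS: given a score for every (spectrogram frame, text token) pair, it finds the monotonic, non-skipping alignment with the highest total score. The pure-Python sketch below restates that dynamic program for reading purposes only, assuming the `monotonic_align` module here follows the VITS Cython code; the compiled extension built by the following commands is what training uses.

```python
from typing import List

NEG_INF = -1e9  # stands in for "unreachable", as in the VITS implementation


def maximum_path(value: List[List[float]]) -> List[List[int]]:
    """Most likely monotonic alignment for one utterance.

    value[y][x] is the score of aligning spectrogram frame y to text token x,
    with t_y >= t_x. Returns a 0/1 matrix of the same shape in which every
    frame gets exactly one token, token indices never decrease, and no token
    is skipped.
    """
    t_y, t_x = len(value), len(value[0])
    acc = [row[:] for row in value]  # accumulated best scores (input left untouched)

    # Forward pass: best score of any valid path ending at (y, x).
    for y in range(t_y):
        for x in range(max(0, t_x + y - t_y), min(t_x, y + 1)):
            stay = NEG_INF if x == y else acc[y - 1][x]  # same token as previous frame
            if x == 0:
                advance = 0.0 if y == 0 else NEG_INF
            else:
                advance = acc[y - 1][x - 1]  # moved on from the previous token
            acc[y][x] += max(stay, advance)

    # Backtrack from the last token at the last frame.
    path = [[0] * t_x for _ in range(t_y)]
    index = t_x - 1
    for y in range(t_y - 1, -1, -1):
        path[y][index] = 1
        if index != 0 and (index == y or acc[y - 1][index] < acc[y - 1][index - 1]):
            index -= 1
    return path


print(maximum_path([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))  # [[1, 0], [1, 0], [0, 1]]
```

The double loop costs O(t_y x t_x) per utterance, which is cheap in Cython but slow in pure Python.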

# Cython-version Monotonic Alignment Search

cd monotonic_align

python setup.py build_ext --inplace

To run inference, download the pretrained checkpoints (inference instructions are under construction).

- Preprocess: resample to 16 kHz

Copy the datasets to the dataset folder, then resample the audio to 16 kHz with dataset/downsample.py.

This overwrites the original wav files, so copy your original datasets rather than moving them!
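Because dataset/downsample.py rewrites files in place, it can be worth confirming afterwards that every file in the working copy really is 16 kHz. The checker below is a hypothetical helper (not part of the repo) built on Python's standard `wave` module, so it assumes PCM WAV input.

```python
import wave
from pathlib import Path
from typing import List

TARGET_SR = 16000  # the preprocessing steps expect 16 kHz audio


def files_not_at_target_rate(root: str, target_sr: int = TARGET_SR) -> List[Path]:
    """Return every .wav file under `root` whose sample rate differs from `target_sr`.

    Only PCM WAV files are supported; other encodings raise wave.Error.
    """
    mismatched = []
    for path in sorted(Path(root).rglob("*.wav")):
        with wave.open(str(path), "rb") as wav:
            if wav.getframerate() != target_sr:
                mismatched.append(path)
    return mismatched
```

For example, `files_not_at_target_rate("dataset/vctk")` should return an empty list once resampling has finished.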

- Preprocess: Whisper features

Generate the Whisper encoder outputs:
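Whisper's encoder emits one feature frame per 20 ms of 16 kHz audio (320 samples), accepts at most 30 s of input (1500 frames), and the large-v2 encoder is 1280-dimensional. The sketch below uses those figures to estimate the size of each encoder output and to flag clips longer than 30 s; it is an illustration under those assumptions, not a description of what preprocess_weo.py does internally.

```python
from typing import Tuple

SAMPLE_RATE = 16000       # Whisper expects 16 kHz input
SAMPLES_PER_FRAME = 320   # 10 ms mel hop x stride-2 conv = 20 ms per encoder frame
MAX_FRAMES = 1500         # 30 s input window
LARGE_V2_DIM = 1280       # encoder width of Whisper large-v2


def whisper_output_shape(num_samples: int) -> Tuple[int, int]:
    """Approximate (frames, channels) of the encoder output for one clip.

    Raises ValueError for clips longer than Whisper's 30 s window, which
    would need to be split before encoding.
    """
    frames = num_samples // SAMPLES_PER_FRAME
    if frames > MAX_FRAMES:
        seconds = num_samples / SAMPLE_RATE
        raise ValueError(f"{seconds:.1f} s clip exceeds Whisper's 30 s window")
    return frames, LARGE_V2_DIM


print(whisper_output_shape(3 * SAMPLE_RATE))  # (150, 1280) for a 3 s clip
```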

python preprocess_weo.py -w dataset/vctk/ -p dataset/vctk_largev2

python preprocess_weo.py -w dataset/aishell3/ -p dataset/aishell3_largev2

python preprocess_weo.py -w dataset/jvs/ -p dataset/jvs_largev2

python preprocess_weo.py -w dataset/ESD/ -p dataset/ESD_largev2

- Preprocess: g2p

The g2p results for my datasets are provided in filelist. If you want to run g2p on your own datasets:

- For English, refer to preprocess_en.py and VITS;

- For Japanese, refer to preprocess_jvs.py and VITS-jvs;

- For Chinese, use jieba_all.py to split the text into words, then use preprocess_cn.py to generate the pinyin.

Refer to filelist/train_test_split.py to split the dataset into training and test sets.
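As a hypothetical illustration of one common way to split a filelist (not necessarily what filelist/train_test_split.py does), the sketch below holds out a fixed number of utterances per speaker with a seeded shuffle, so the split is reproducible. It assumes VITS-style lines of the form `path|speaker|text`; check the actual files in filelist before reusing it.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_per_speaker(lines: List[str], n_test: int = 2, seed: int = 1234,
                      speaker_field: int = 1) -> Tuple[List[str], List[str]]:
    """Hold out `n_test` utterances per speaker; return (train, test).

    Assumes '|'-separated filelist lines with the speaker ID in column
    `speaker_field` (a hypothetical layout; adjust to the real filelists).
    """
    by_speaker: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        by_speaker[line.split("|")[speaker_field]].append(line)

    rng = random.Random(seed)  # fixed seed -> same split on every run
    train, test = [], []
    for speaker in sorted(by_speaker):
        utts = by_speaker[speaker][:]
        rng.shuffle(utts)
        test.extend(utts[:n_test])
        train.extend(utts[n_test:])
    return train, test


demo = [f"wavs/{spk}_{i}.wav|{spk}|text" for spk in ("p225", "p226") for i in range(5)]
train_set, test_set = split_per_speaker(demo)
print(len(train_set), len(test_set))  # 6 4
```

Holding out utterances per speaker keeps every training speaker represented in the test set, which suits a multi-speaker VC/TTS model.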

- Train cross-lingual TTS and VC

Train the whole model on the cross-lingual datasets:

python train_whisper_hier_multi_pure_3.py -c configs/cross-lingual.json -m cross-lingual-TTS

Freeze the speaker-related part and fine-tune the content-related part on a monolingual dataset:

python train_whisper_hier_multi_pure_3_freeze.py -c configs/cross-lingual-emotional-freezefinetune-en.json -m cross-lingual-TTS-en

- Train cross-lingual emotional TTS and VC

Train the whole model on the cross-lingual emotional datasets:

python train_whisper_hier_multi_pure_esd.py -c configs/cross-lingual-emotional.json -m cross-lingual-emotional-TTS

Freeze the speaker-related part and fine-tune the content-related part on a monolingual dataset:

python train_whisper_hier_multi_pure_esd_freeze.py -c configs/cross-lingual-emotional-freezefinetune-en.json -m cross-lingual-emotional-TTS-en

There are two SynthesizerTrn classes in models_whisper_hier_multi_pure.py; they differ only in n_langs.

To train this model on more than 3 languages, change n_langs accordingly.
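The freeze-and-fine-tune step (train_whisper_hier_multi_pure_3_freeze.py and train_whisper_hier_multi_pure_esd_freeze.py) keeps the speaker-related part of the model fixed and updates only the content-related part. In PyTorch this is usually done by setting `requires_grad = False` on the frozen parameters and passing only the remaining ones to the optimizer. The dependency-free sketch below shows just the selection step over parameter names; the prefixes in `FROZEN_PREFIXES` are hypothetical placeholders borrowed from VITS naming, not the actual module names in models_whisper_hier_multi_pure.py.

```python
from typing import Iterable, List, Tuple

# Hypothetical prefixes for the speaker/style-related components.
# Replace them with the real submodule names from the model definition.
FROZEN_PREFIXES: Tuple[str, ...] = ("emb_g.", "dec.", "flow.")


def split_parameters(names: Iterable[str],
                     frozen_prefixes: Tuple[str, ...] = FROZEN_PREFIXES
                     ) -> Tuple[List[str], List[str]]:
    """Partition parameter names into (trainable, frozen) by prefix."""
    trainable, frozen = [], []
    for name in names:
        (frozen if name.startswith(frozen_prefixes) else trainable).append(name)
    return trainable, frozen


# With PyTorch, the same rule would typically be applied as:
#   for name, p in model.named_parameters():
#       p.requires_grad = not name.startswith(FROZEN_PREFIXES)
#   optimizer = torch.optim.AdamW(
#       [p for p in model.parameters() if p.requires_grad], lr=...)
names = ["enc_p.emb.weight", "emb_g.weight", "dec.conv_pre.weight", "enc_q.pre.bias"]
print(split_parameters(names))
# (['enc_p.emb.weight', 'enc_q.pre.bias'], ['emb_g.weight', 'dec.conv_pre.weight'])
```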

- https://github.com/jaywalnut310/vits

- https://github.com/PlayVoice/lora-svc

- https://github.com/ConsistencyVC/ConsistencyVC-voive-conversion

- https://github.com/OlaWod/FreeVC/blob/main/README.md

- https://github.com/zassou65535/VITS

- Have questions or need assistance? Feel free to open an issue; I will do my best to help.

- Suggestions are welcome!

- If you like this work, please give me a star on GitHub! ⭐️ It encourages me a lot.