- Text Classification

- Handle an imbalanced dataset

- Training embeddings from scratch is not feasible on such a small dataset, so a pre-trained BERT model is used instead.

- Use the last hidden state of the BERT model as the contextual embedding of each token (word).

- Combine the token embeddings by taking their mean across axis 1 (the sequence axis), which gives a fixed-length vector for every sentence regardless of its length.
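
A minimal sketch of the pooling step, assuming the BERT output is available as a `(batch, seq_len, hidden)` array (for example `outputs.last_hidden_state` from Hugging Face `transformers`, converted to numpy). When sentences are batched to a common length, a plain `.mean(axis=1)` also averages the padding positions, so this sketch uses a masked mean. That is an assumption about how padding should be handled, not necessarily what the original pipeline did; `mean_pool` is a hypothetical helper name.

```python
import numpy as np

def mean_pool(last_hidden_state: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token embeddings over the sequence axis (axis 1), ignoring padding.

    last_hidden_state: (batch, seq_len, hidden) token embeddings
    attention_mask:    (batch, seq_len), 1 for real tokens, 0 for padding
    returns:           (batch, hidden), one fixed-length vector per sentence
    """
    mask = attention_mask[:, :, None].astype(last_hidden_state.dtype)  # (batch, seq_len, 1)
    summed = (last_hidden_state * mask).sum(axis=1)                    # sum over real tokens only
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                     # avoid division by zero
    return summed / counts

# Toy stand-in for BERT output: 2 sentences, 4 token positions, hidden size 3.
hidden = np.arange(24, dtype=float).reshape(2, 4, 3)
mask = np.array([[1, 1, 1, 1],
                 [1, 1, 0, 0]])  # the second sentence has 2 padding positions
vectors = mean_pool(hidden, mask)
print(vectors.shape)  # (2, 3)
```

For the second sentence the masked mean is `[13.5, 14.5, 15.5]`, whereas `hidden.mean(axis=1)` would give `[16.5, 17.5, 18.5]` because it also counts the two padded rows.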

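- Because some classes have very few samples, apply random oversampling (randomly duplicating minority-class samples until every class has as many samples as the largest one) so the classifier does not simply learn to favour the majority classes.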
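
A from-scratch sketch of random oversampling on the sentence vectors, assuming `X` holds one vector per row and `y` holds integer class labels. The `imbalanced-learn` library provides the same idea as `RandomOverSampler(...).fit_resample(X, y)`; the hand-written `random_oversample` below is a hypothetical helper used only to show the mechanics without that dependency.

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority-class rows until every class
    has as many samples as the largest class."""
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X), np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    keep = [np.arange(len(y))]                        # keep every original row once
    for cls, count in zip(classes, counts):
        if count < target:
            idx = np.flatnonzero(y == cls)            # rows belonging to this class
            keep.append(rng.choice(idx, size=target - count, replace=True))
    order = np.concatenate(keep)                      # originals first, then duplicates
    return X[order], y[order]

X = np.arange(12, dtype=float).reshape(6, 2)  # 6 toy sentence vectors of size 2
y = np.array([0, 0, 0, 0, 1, 2])              # classes 1 and 2 have one sample each
X_res, y_res = random_oversample(X, y)
print(np.bincount(y_res))  # [4 4 4]
```

The resampled rows come out grouped (originals first), so shuffle them before batching. Oversampling is usually applied to the training split only, so duplicated samples do not end up in the evaluation data.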

- This yields a fixed-length sentence vector for each sentence.

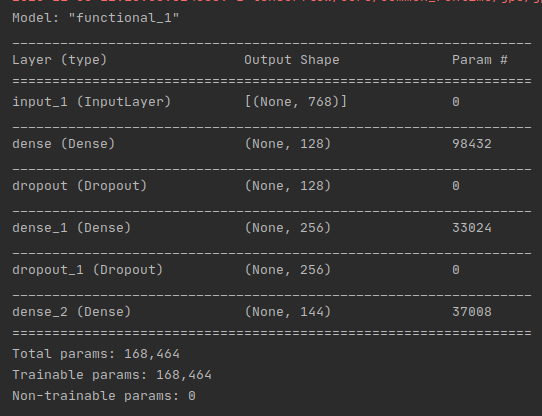

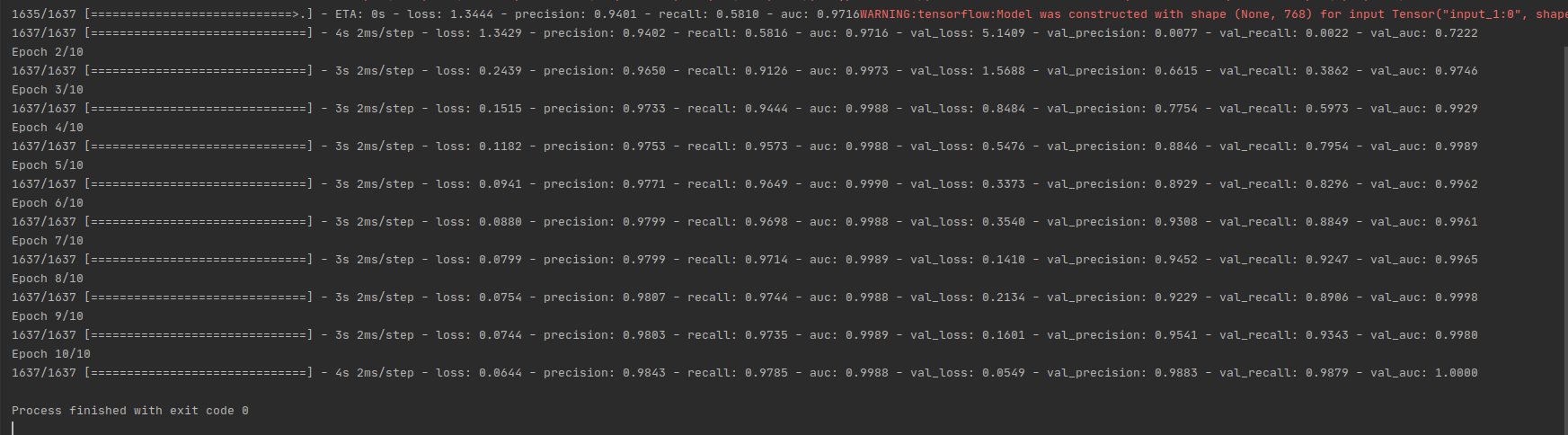

- Feed these sentence vectors into a feed-forward neural network for classification.
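
A minimal forward-pass sketch of such a classifier: one dense hidden layer with ReLU, followed by a softmax over the classes. The hidden size (128) and number of classes (5) are placeholders, 768 is the hidden size of BERT-base, and the weights are untrained. In practice training (cross-entropy loss plus an optimizer) would typically be done in a framework such as PyTorch or Keras; `init_ffn` and `ffn_predict_proba` are hypothetical names.

```python
import numpy as np

def init_ffn(in_dim, hidden_dim, num_classes, seed=0):
    """Randomly initialise a one-hidden-layer classifier (He-style init for the ReLU layer)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / in_dim), (in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, np.sqrt(2.0 / hidden_dim), (hidden_dim, num_classes)),
        "b2": np.zeros(num_classes),
    }

def ffn_predict_proba(params, x):
    """x: (batch, in_dim) sentence vectors -> (batch, num_classes) class probabilities."""
    h = np.maximum(0.0, x @ params["W1"] + params["b1"])  # dense layer + ReLU
    logits = h @ params["W2"] + params["b2"]              # dense output layer
    logits = logits - logits.max(axis=1, keepdims=True)   # stabilise the softmax
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)           # softmax over classes

params = init_ffn(in_dim=768, hidden_dim=128, num_classes=5)
sentence_vectors = np.random.default_rng(1).normal(size=(4, 768))  # placeholder inputs
probs = ffn_predict_proba(params, sentence_vectors)
predicted = probs.argmax(axis=1)  # predicted class index per sentence
```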

- Instead of using the pre-trained BERT model as-is, fine-tune it for our use case:

  - Use the full token-level embedding of each sentence instead of averaging it across axis 1.

  - Fix a maximum sequence length for every sentence.

  - Pad sentences that are shorter than the maximum length.

  - Truncate (clip) sentences that are longer than the maximum length.

  - Add an attention mask so that attention is computed only over the original, non-padded tokens.
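
A sketch of the padding, truncation and masking steps on raw token ids, plus a masked softmax showing how the mask keeps attention off the padded positions (in the spirit of BERT adding a large negative value to the scores of padded positions). With Hugging Face tokenizers the first part is normally done by calling `tokenizer(texts, padding="max_length", truncation=True, max_length=...)`, which returns `input_ids` and `attention_mask`. Assumptions: the token ids are made up, the padding id is 0, and special tokens such as `[CLS]`/`[SEP]` (which a real tokenizer keeps when truncating) are ignored here. `pad_and_truncate` and `masked_softmax` are hypothetical helpers.

```python
import numpy as np

PAD_ID = 0  # assumption: padding token id ([PAD] is id 0 in the bert-base-uncased vocabulary)

def pad_and_truncate(batch, max_len, pad_id=PAD_ID):
    """Clip or pad each list of token ids to max_len and build the attention mask."""
    input_ids, attention_mask = [], []
    for ids in batch:
        ids = list(ids[:max_len])                 # clip longer sentences
        n_pad = max_len - len(ids)                # number of pad tokens needed
        input_ids.append(ids + [pad_id] * n_pad)  # pad shorter sentences
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return np.array(input_ids), np.array(attention_mask)

def masked_softmax(scores, attention_mask):
    """Turn raw attention scores (seq_len, seq_len) into weights that ignore padded keys."""
    scores = np.where(attention_mask[None, :] == 1, scores, -1e9)  # block padded positions
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

ids, mask = pad_and_truncate([[5, 6, 7], [5, 8, 9, 10, 11, 12, 13]], max_len=5)
# ids  -> [[5, 6, 7, 0, 0], [5, 8, 9, 10, 11]]
# mask -> [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
weights = masked_softmax(np.random.default_rng(0).normal(size=(5, 5)), mask[0])
# weights[:, 3:] is all zeros: no position attends to the padding
```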

- Feed this 2D embedding (maximum length × hidden size for each sentence) into a Bi-LSTM for classification.
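
A numpy sketch of a Bi-LSTM classifier head over one sentence's 2D embedding: one LSTM reads the real tokens left to right, another reads them right to left, and the two final hidden states are concatenated and passed to a linear output layer. Reading only the unmasked positions is an assumption about how padding is handled (in PyTorch this would typically be `torch.nn.LSTM(..., bidirectional=True, batch_first=True)` combined with `pack_padded_sequence`). Classifying from the final states is one common choice; pooling over all time steps is another. All sizes are placeholders, the weights are untrained, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_lstm(in_dim, hid, rng):
    """Weights for one LSTM direction; the 4 gates share a single matrix."""
    return {"W": rng.normal(0.0, 0.1, (in_dim + hid, 4 * hid)), "b": np.zeros(4 * hid)}

def lstm_final_state(p, xs, hid):
    """Run an LSTM over xs (steps, in_dim) and return the last hidden state (hid,)."""
    h, c = np.zeros(hid), np.zeros(hid)
    for x in xs:
        z = np.concatenate([x, h]) @ p["W"] + p["b"]
        i, f, g, o = np.split(z, 4)                   # input, forget, candidate, output
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # update the cell state
        h = sigmoid(o) * np.tanh(c)                   # new hidden state
    return h

def bilstm_logits(params, token_embs, mask, hid):
    """token_embs: (max_len, emb_dim) for one sentence; mask: (max_len,) of 1/0."""
    real = token_embs[np.asarray(mask) == 1]                  # drop padded positions
    h_fwd = lstm_final_state(params["fwd"], real, hid)        # left to right
    h_bwd = lstm_final_state(params["bwd"], real[::-1], hid)  # right to left
    features = np.concatenate([h_fwd, h_bwd])                 # (2 * hid,)
    return features @ params["W_out"] + params["b_out"]       # (num_classes,)

rng = np.random.default_rng(0)
emb_dim, hid, num_classes = 768, 32, 5                        # placeholder sizes
params = {"fwd": init_lstm(emb_dim, hid, rng), "bwd": init_lstm(emb_dim, hid, rng),
          "W_out": rng.normal(0.0, 0.1, (2 * hid, num_classes)), "b_out": np.zeros(num_classes)}
sentence = rng.normal(size=(6, emb_dim))                       # stand-in for BERT's 2D output
mask = np.array([1, 1, 1, 1, 0, 0])                            # last two positions are padding
logits = bilstm_logits(params, sentence, mask, hid)
```

Because only the unmasked rows are read, changing the values at the padded positions leaves the logits unchanged.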