Code for A Unified Framework for Domain Adaptive Pose Estimation, ECCV 2022

Donghyun Kim*, Kaihong Wang*, Kate Saenko, Margrit Betke, and Stan Sclaroff.

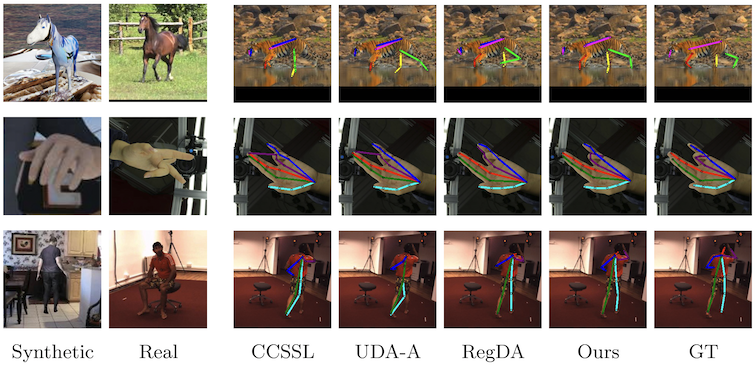

While several domain adaptive pose estimation models have been proposed recently, they are not generic but only focus on either human pose or animal pose estimation, and thus their effectiveness is somewhat limited to specific scenarios. In this work, we propose a unified framework that generalizes well on various domain adaptive pose estimation problems.

We propose a unified framework for domain adaptive pose estimation on various objects, including the human body, human hands, and animals, that requires a (synthetic) labeled source domain dataset and a (real-world) target domain dataset without annotations. The system consists of a style transfer module to mitigate the visual domain gap and a mean-teacher framework to encourage feature-level unsupervised learning from unlabeled images. For further details regarding our method, please refer to our paper.
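As a rough picture of the mean-teacher part: in a typical mean-teacher setup, the teacher's weights are an exponential moving average (EMA) of the student's weights. The sketch below shows that update on plain Python dictionaries. The function name `ema_update`, the momentum values, and the toy parameters are assumptions made for illustration and are not taken from this repository.

```python
def ema_update(teacher, student, momentum=0.999):
    """Return new teacher weights as an EMA of the student weights.

    `teacher` and `student` map parameter names to floats (toy stand-ins
    for tensors). `momentum` is a hypothetical value, not the repo's setting.
    """
    return {
        name: momentum * teacher[name] + (1.0 - momentum) * student[name]
        for name in teacher
    }


# Toy example: the teacher drifts slowly toward the student.
teacher = {"w": 0.0, "b": 1.0}
student = {"w": 1.0, "b": 1.0}
for _ in range(3):
    teacher = ema_update(teacher, student, momentum=0.9)
print(teacher)
```

A momentum close to 1 makes the teacher change slowly, which is what lets it serve as a stable target for the student on unlabeled images.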

Bibtex

@InProceedings{kim2022unified,
  title = {A Unified Framework for Domain Adaptive Pose Estimation},
  author = {Kim, Donghyun and Wang, Kaihong and Saenko, Kate and Betke, Margrit and Sclaroff, Stan},
  booktitle = {The European Conference on Computer Vision (ECCV)},
  year = {2022}
}

Our code is based on the implementations of RegDA and UDA-Animal.

Data Preparation

Human Dataset

As instructed by RegDA, the following datasets can be downloaded automatically:

You need to prepare the following datasets manually if you want to use them:

Animal Dataset

Following UDA-Animal-Pose and CCSSL:

- Create a `./animal_data` directory.
- Download the synthetic dataset by running `bash get_dataset.sh`.
- Download the TigDog dataset and move the folder `behaviorDiscovery2.0` to `./animal_data/`.
- Download a cleaned annotation file of the synthetic dataset for better time performance from here and place it under `./animal_data/`.
- Download the cropped images for the TigDog dataset and move the folder `real_animal_crop_v4` to `./animal_data/`.
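Once the steps above are done, a quick check of the folder layout can catch a misplaced download before training starts. The sketch below is a hypothetical helper, not part of this repository. It only looks for the two folders named in the steps above, because the names of the synthetic dataset folder and the annotation file are not given here.

```python
from pathlib import Path

# Folder names taken from the steps above; the synthetic dataset and the
# annotation file are not checked because their names are not given here.
EXPECTED_DIRS = ["behaviorDiscovery2.0", "real_animal_crop_v4"]


def missing_animal_dirs(root="./animal_data"):
    """Return the expected sub-folders that are absent under `root`."""
    root = Path(root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_animal_dirs()
    if missing:
        print("Missing under ./animal_data:", ", ".join(missing))
    else:
        print("Animal data layout looks complete.")
```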

Pretrained Models

Before training, please make sure the style transfer models are downloaded and saved in the "saved_models" folder under this directory. Pretrained style transfer models for all experiments are available here.
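To confirm that the downloaded models are where the training commands expect them, a small check along these lines may help. It is a hypothetical helper, not part of this repository. The file names are the ones passed to `--decoder-name` in the training commands in this README; whether the download contains additional files is not assumed.

```python
from pathlib import Path

# File names as passed to --decoder-name in this README's training commands.
DECODERS = [
    "decoder_s2h_0_1.pth.tar",
    "decoder_s2l_0_1.pth.tar",
    "decoder_r2h_0_1.pth.tar",
    "decoder_f2r_0_1.pth.tar",
    "decoder_animal_0_1.pth.tar",
]


def missing_decoders(folder="saved_models"):
    """Return the decoder checkpoints that are not present in `folder`."""
    folder = Path(folder)
    return [name for name in DECODERS if not (folder / name).is_file()]


if __name__ == "__main__":
    for name in missing_decoders():
        print("Not found: saved_models/" + name)
```

Only the checkpoints for the experiments you plan to run need to be present.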

To pretrain a style transfer model on your own:

- Go to the `./adain/` directory.
- For pretraining on human datasets (e.g. SURREAL->Human36M), run

python train/train_human.py --source SURREAL --target Human36M_mt --source_root path/to/SURREAL --target_root path/to/Human36M --exp_name s2h_0_1 --style_weight 0.1

- For pretraining on animal datasets (e.g. SyntheticAnimal->TigDog), run

python train/train_animal.py --image-path ../animal_data --source synthetic_animal_sp_all --target real_animal_all --target_ssl real_animal_all_mt --train_on_all_cat --exp_name syn2td_0_1 --style_weight 0.1
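The style transfer model lives in `./adain/`, and AdaIN (adaptive instance normalization) is credited at the end of this README. As a rough illustration of the core AdaIN operation, the sketch below rescales one channel of content features to the mean and standard deviation of a style channel, then blends the result with the original content using a weight `alpha`. It uses plain Python lists instead of tensors. The function name `adain_channel`, the `eps` value, and the toy inputs are assumptions, and how this repository's flags (such as `--style_weight`) map onto the operation is not shown here.

```python
import math


def adain_channel(content, style, alpha=1.0, eps=1e-5):
    """AdaIN on a single channel given as flat lists of floats.

    Shifts and scales `content` so its mean/std match `style`, then
    interpolates with the original content by `alpha` (1.0 = full transfer).
    """
    def mean_std(values):
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        return mean, math.sqrt(var + eps)  # eps avoids division by zero

    c_mean, c_std = mean_std(content)
    s_mean, s_std = mean_std(style)
    stylized = [s_std * (v - c_mean) / c_std + s_mean for v in content]
    return [alpha * t + (1.0 - alpha) * v for t, v in zip(stylized, content)]


content = [0.0, 1.0, 2.0, 3.0]
style = [10.0, 10.5, 11.0, 11.5]
out = adain_channel(content, style)
print(out)
```

With `alpha=1.0` the output carries the style statistics while keeping the relative ordering of the content values; with `alpha=0.0` the content passes through unchanged.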

UDA Human Pose Estimation

SURREAL-to-Human36M

python train_human.py path/to/SURREAL path/to/Human36M -s SURREAL -t Human36M --target-train Human36M_mt --log logs/s2h_exp/syn2real --debug --seed 0 --lambda_t 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 60 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 60 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 -b 32 --mask-ratio 0.5 --k 1 --decoder-name saved_models/decoder_s2h_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9

SURREAL-to-LSP

python train_human.py path/to/SURREAL path/to/LSP -s SURREAL -t LSP --target-train LSP_mt --log logs/s2l_exp/syn2real --debug --seed 0 --lambda_t 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 60 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 60 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 -b 32 --mask-ratio 0.5 --k 1 --decoder-name saved_models/decoder_s2l_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9

UDA Hand Pose Estimation

RHD-to-H3D

python train_human.py path/to/RHD path/to/H3D -s RenderedHandPose -t Hand3DStudio --target-train Hand3DStudio_mt --log logs/r2h_exp/syn2real --debug --seed 0 --lambda_t 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 180 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 180 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 -b 32 --mask-ratio 0.5 --k 1 --decoder-name saved_models/decoder_r2h_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9

FreiHand-to-RHD

python train_human.py path/to/FreiHand path/to/RHD -s FreiHand -t RenderedHandPose --target-train RenderedHandPose_mt --log logs/f2r_exp/syn2real --debug --seed 0 --lambda_t 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 180 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 180 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 -b 32 --mask-ratio 0.5 --k 1 --decoder-name saved_models/decoder_f2r_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9
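The four `train_human.py` commands above share almost all of their flags. They differ only in the dataset roots and names, the experiment tag (`s2h`, `s2l`, `r2h`, `f2r`), and the rotation range (60 for body, 180 for hand). The sketch below assembles the same argument list from those few inputs, which can make the differences easier to see. `build_human_command` is a hypothetical helper written for this README, not a function in the repository.

```python
import shlex


def build_human_command(source_root, target_root, source, target, tag, rotation):
    """Assemble the train_human.py argument list used in this README.

    Only the dataset names, the experiment tag, and the rotation range vary
    between the four human/hand settings; everything else is shared.
    """
    aug = []
    for role in ("stu", "tea"):  # student and teacher use the same values
        aug += [
            f"--rotation_{role}", str(rotation),
            f"--shear_{role}", "-30", "30",
            f"--translate_{role}", "0.05", "0.05",
            f"--scale_{role}", "0.6", "1.3",
            f"--color_{role}", "0.25",
            f"--blur_{role}", "0",
        ]
    return [
        "python", "train_human.py", source_root, target_root,
        "-s", source, "-t", target, "--target-train", f"{target}_mt",
        "--log", f"logs/{tag}_exp/syn2real", "--debug", "--seed", "0",
        "--lambda_t", "0", "--lambda_c", "1", "--pretrain-epoch", "40",
        *aug,
        "-b", "32", "--mask-ratio", "0.5", "--k", "1",
        "--decoder-name", f"saved_models/decoder_{tag}_0_1.pth.tar",
        "--s2t-freq", "0.5", "--s2t-alpha", "0", "1",
        "--t2s-freq", "0.5", "--t2s-alpha", "0", "1",
        "--occlude-rate", "0.5", "--occlude-thresh", "0.9",
    ]


cmd = build_human_command("path/to/SURREAL", "path/to/Human36M",
                          "SURREAL", "Human36M", tag="s2h", rotation=60)
print(shlex.join(cmd))
```

The printed line can be pasted into a shell, or the list can be passed to `subprocess.run(cmd, check=True)` from the repository root.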

UDA Animal Pose Estimation

SyntheticAnimal-to-TigDog

python train_animal.py --image-path animal_data --source synthetic_animal_sp_all --target real_animal_all --target_ssl real_animal_all_mt --train_on_all_cat --log logs/syn2real_animal/syn2real --debug --seed 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 60 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 60 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 --k 1 -b 32 --mask-ratio 0.5 --decoder-name saved_models/decoder_animal_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9

SyntheticAnimal-to-AnimalPose

python train_animal_other.py --image-path animal_data --source synthetic_animal_sp_all_other --target animal_pose --target_ssl animal_pose_mt --train_on_all_cat --log logs/syn2animal_pose/syn2real --debug --seed 0 --lambda_c 1 --pretrain-epoch 40 --rotation_stu 60 --shear_stu -30 30 --translate_stu 0.05 0.05 --scale_stu 0.6 1.3 --color_stu 0.25 --blur_stu 0 --rotation_tea 60 --shear_tea -30 30 --translate_tea 0.05 0.05 --scale_tea 0.6 1.3 --color_tea 0.25 --blur_tea 0 --k 1 -b 32 --mask-ratio 0.5 --decoder-name saved_models/decoder_animal_0_1.pth.tar --s2t-freq 0.5 --s2t-alpha 0 1 --t2s-freq 0.5 --t2s-alpha 0 1 --occlude-rate 0.5 --occlude-thresh 0.9

Code borrowed from RegDA, UDA-Animal and AdaIN.