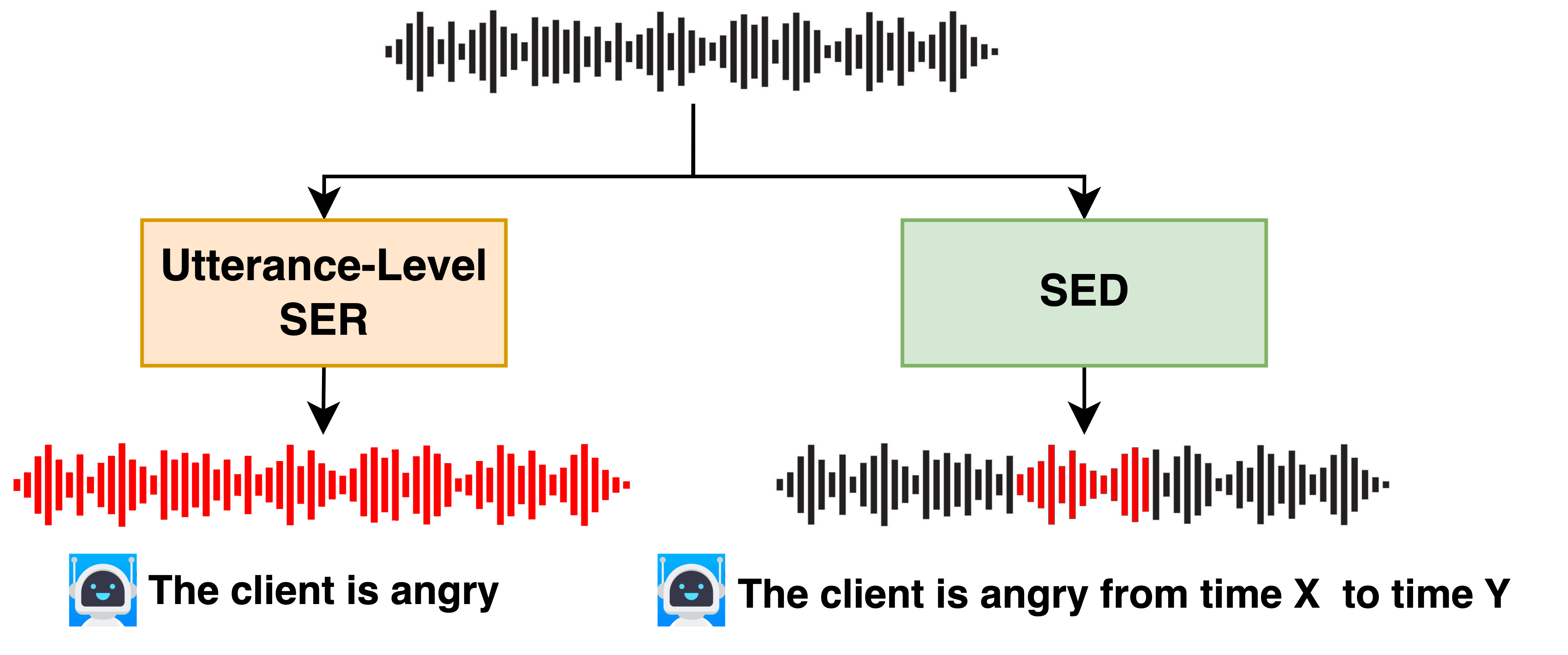

Speech Emotion Diarization is a technique that focuses on predicting emotions and their corresponding time boundaries within a speech recording.

The model was trained on audio samples that each contain one non-neutral emotional event and follow one of four transitional sequences:

- neutral-emotional

- neutral-emotional-neutral

- emotional-neutral

- emotional

The model's output takes the form of a dictionary comprising emotion components (neutral, happy, angry, and sad) along with their respective start and end boundaries, as exemplified below:

    {
        'example.wav': [
            {'start': 0.0, 'end': 1.94, 'emotion': 'n'},  # 'n' denotes neutral
            {'start': 1.94, 'end': 4.48, 'emotion': 'h'}  # 'h' denotes happy
        ]
    }

The implementation is based on the popular speech toolkit SpeechBrain.
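The output dictionary shown above is easy to post-process. The following is a minimal sketch that maps the one-letter codes to full labels and computes each segment's duration. The helper name `summarize` is hypothetical, and the codes `'a'` (angry) and `'s'` (sad) are assumptions, since only `'n'` and `'h'` appear in the example.

```python
# Full names for the one-letter emotion codes. 'n' and 'h' come from the
# example output; 'a' and 's' are ASSUMED codes for angry and sad.
EMOTION_NAMES = {"n": "neutral", "h": "happy", "a": "angry", "s": "sad"}


def summarize(diarization):
    """Return, per file, a list of (label, duration in seconds) tuples."""
    summary = {}
    for filename, segments in diarization.items():
        summary[filename] = [
            (
                # Fall back to the raw code if it is not in the mapping.
                EMOTION_NAMES.get(seg["emotion"], seg["emotion"]),
                round(seg["end"] - seg["start"], 2),
            )
            for seg in segments
        ]
    return summary


# Same structure as the example output above.
output = {
    "example.wav": [
        {"start": 0.0, "end": 1.94, "emotion": "n"},
        {"start": 1.94, "end": 4.48, "emotion": "h"},
    ]
}
print(summarize(output))
```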

Another implementation of this project can be found here as a SpeechBrain recipe.

To install the dependencies, run `pip install -r requirements.txt`.

Testing is based on the Zaion Emotion Dataset (ZED), which can be downloaded here.

- RAVDESS: https://zenodo.org/record/1188976/files/Audio_Speech_Actors_01-24.zip?download=1

  Unzip and rename the folder as "RAVDESS".

- ESD: https://github.com/HLTSingapore/Emotional-Speech-Data

  Unzip and rename the folder as "ESD".

- IEMOCAP: https://sail.usc.edu/iemocap/iemocap_release.htm

  Unzip.

- JL-CORPUS: https://www.kaggle.com/datasets/tli725/jl-corpus?resource=download

  Unzip, keep only `archive/Raw JL corpus (unchecked and unannotated)/JL(wav+txt)`, and rename the folder to "JL_corpus".

- EmoV-DB: https://openslr.org/115/

  Download [bea_Amused.tar.gz, bea_Angry.tar.gz, bea_Neutral.tar.gz, jenie_Amused.tar.gz, jenie_Angry.tar.gz, jenie_Neutral.tar.gz, josh_Amused.tar.gz, josh_Neutral.tar.gz, sam_Amused.tar.gz, sam_Angry.tar.gz, sam_Neutral.tar.gz], unzip, and move all the folders into another folder named "EmoV-DB".

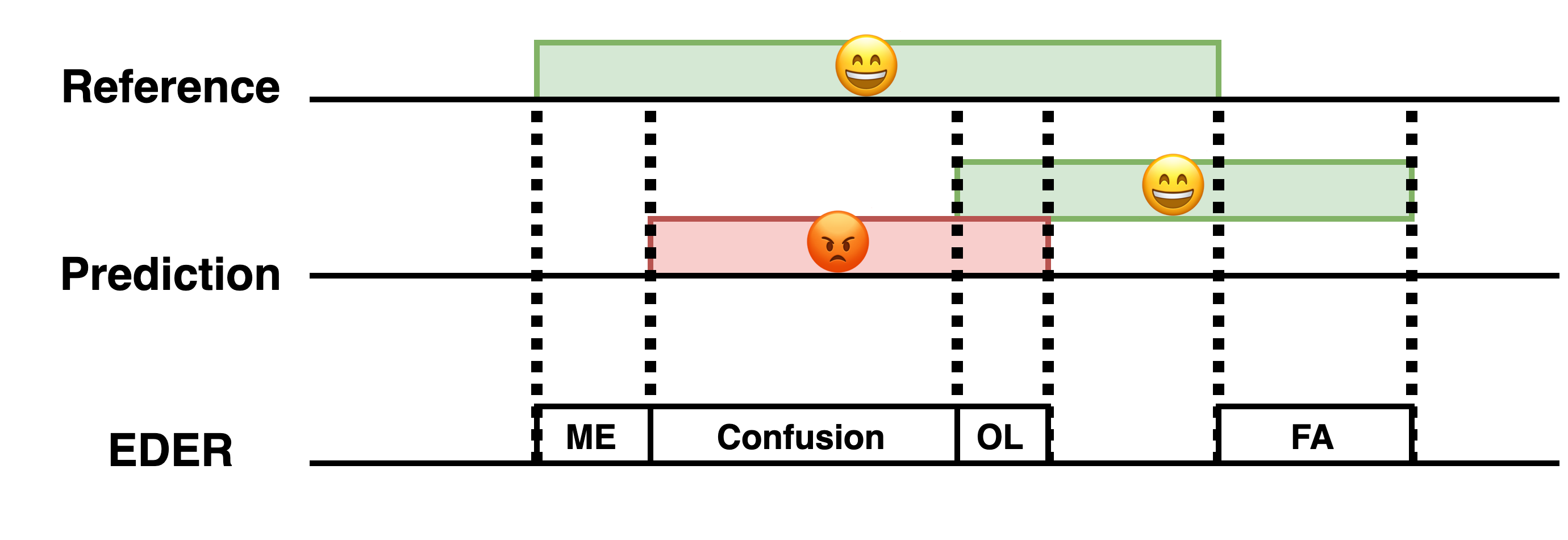

The proposed Emotion Diarization Error Rate (EDER) is used to evaluate the baselines.

The four components are:


- False Alarm (FA): Length of non-emotional segments that are predicted as emotional.

- Missed Emotion (ME): Length of emotional segments that are predicted as non-emotional.

- Emotion Confusion (CF): Length of emotional segments that are assigned to one or more incorrect emotions.

- Emotion Overlap (OL): Length of non-overlapped emotional segments that are predicted to contain other overlapped emotions apart from the correct one.

Frame-wise classification accuracy also reflects the system's capacity, but it is not always convincing because it depends on the frame length (resolution). A higher frame-wise accuracy does not necessarily mean that the model diarizes better. Hence, EDER is a more appropriate metric for the task.
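The four components listed above can be computed directly from reference and predicted segments. The following is a minimal sketch, assuming EDER is the sum of the four components divided by the total utterance duration, and assuming each segment carries a single label so that OL is always zero here. The function name `compute_eder` and the code `'a'` for angry are illustrative assumptions, not taken from the repository.

```python
def compute_eder(reference, hypothesis, neutral="n"):
    """Compute FA, ME, CF and EDER from two lists of labelled segments.

    Each segment is a dict with 'start', 'end' and 'emotion' keys.
    ASSUMPTIONS: both lists cover the same utterance without gaps,
    every segment has exactly one label (so OL = 0), and
    EDER = (FA + ME + CF + OL) / utterance duration.
    """
    fa = me = cf = 0.0
    for ref in reference:
        for hyp in hypothesis:
            # Length of the time interval shared by the two segments.
            overlap = min(ref["end"], hyp["end"]) - max(ref["start"], hyp["start"])
            if overlap <= 0:
                continue
            r, h = ref["emotion"], hyp["emotion"]
            if r == neutral and h != neutral:
                fa += overlap  # non-emotional predicted as emotional
            elif r != neutral and h == neutral:
                me += overlap  # emotional predicted as non-emotional
            elif r != h:
                cf += overlap  # emotional predicted as a different emotion
    duration = sum(seg["end"] - seg["start"] for seg in reference)
    ol = 0.0  # single-label segments cannot overlap in this sketch
    return {"FA": fa, "ME": me, "CF": cf, "OL": ol,
            "EDER": (fa + me + cf + ol) / duration}


reference = [
    {"start": 0.0, "end": 2.0, "emotion": "n"},
    {"start": 2.0, "end": 4.0, "emotion": "h"},
]
hypothesis = [
    {"start": 0.0, "end": 1.5, "emotion": "n"},
    {"start": 1.5, "end": 3.0, "emotion": "h"},
    {"start": 3.0, "end": 4.0, "emotion": "a"},  # 'a' is an assumed code
]
result = compute_eder(reference, hypothesis)
print(result)
```

Because the sketch works on segment boundaries rather than frames, its result does not depend on a frame length, which is the property that motivates EDER over frame-wise accuracy.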

Model configs and experiment settings can be modified in `hparams/train.yaml`.

To run the code, run `python train.py hparams/train.yaml --zed_folder /path/to/ZED --emovdb_folder /path/to/EmoV-DB --esd_folder /path/to/ESD --iemocap_folder /path/to/IEMOCAP --jlcorpus_folder /path/to/JL_corpus --ravdess_folder /path/to/RAVDESS`.
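Since the training command takes six dataset folders, it can help to check them before launching. The following is a small sketch that assembles the argument list and reports folders that do not exist. The flag names come from the command above; the helper `build_train_command` is hypothetical and the paths are placeholders to replace with your own.

```python
from pathlib import Path

# Flag names are from the training command; the paths are placeholders.
DATASET_FLAGS = {
    "--zed_folder": "/path/to/ZED",
    "--emovdb_folder": "/path/to/EmoV-DB",
    "--esd_folder": "/path/to/ESD",
    "--iemocap_folder": "/path/to/IEMOCAP",
    "--jlcorpus_folder": "/path/to/JL_corpus",
    "--ravdess_folder": "/path/to/RAVDESS",
}


def build_train_command(dataset_flags, hparams="hparams/train.yaml"):
    """Return the argument list for train.py and the missing folders."""
    command = ["python", "train.py", hparams]
    missing = []
    for flag, folder in dataset_flags.items():
        command += [flag, folder]
        if not Path(folder).is_dir():
            missing.append(folder)
    return command, missing


command, missing = build_train_command(DATASET_FLAGS)
if missing:
    print("Missing dataset folders:", ", ".join(missing))
else:
    print("Run:", " ".join(command))
```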

The data preparation may take a while.

A results folder containing checkpoints, logs, etc. will be generated. The frame-wise classification result for each utterance can be found in `eder.txt`.

The EDER (Emotion Diarization Error Rate) reported here is averaged over 5 different seeds. Results for other models (wav2vec2.0, HuBERT) can be found in the paper. You can find our training results (model, logs, etc.) here.

| model | EDER |

|---|---|

| WavLM-large | 30.2 ± 1.60 |

Training takes about 40 min/epoch on 1x RTX8000 (40G). Reduce the batch size if you run out of memory (OOM).

The pretrained models and an easy-inference interface can be found on HuggingFace.

    @article{wang2023speech,
      title={Speech Emotion Diarization: Which Emotion Appears When?},
      author={Wang, Yingzhi and Ravanelli, Mirco and Nfissi, Alaa and Yacoubi, Alya},
      journal={arXiv preprint arXiv:2306.12991},
      year={2023}
    }