Conferences and conventions are hotspots for making connections. Professionals in attendance often share the same interests and can make valuable business and personal connections with one another. At the same time, these events draw a large crowd and it's often hard to make these connections in the midst of all of these events' excitement and energy. To help attendees make connections, we are building the infrastructure for a service that can inform attendees if they have attended the same booths and presentations at an event.

- Flask - API webserver

- SQLAlchemy - Database ORM

- PostgreSQL - Relational database

- PostGIS - Spatial plug-in for PostgreSQL enabling geographic queries

- Vagrant - Tool for managing virtual deployed environments

- VirtualBox - Hypervisor allowing you to run multiple operating systems

- K3s - Lightweight distribution of K8s to easily develop against a local cluster

We will be installing the tools needed to set up our environment properly.

- Install Docker

- Set up a DockerHub account

- Set up kubectl

- Install VirtualBox with at least version 6.0

- Install Vagrant with at least version 2.0
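The minimum versions above can be checked with a small helper. The sketch below is hypothetical and not part of the project: it compares dotted version strings numerically, ignoring trailing build suffixes such as the `r153438` that VirtualBox appends. It assumes you pass it the text printed by `VBoxManage --version` or `vagrant --version`.

```python
REQUIREMENTS = {"VirtualBox": "6.0", "Vagrant": "2.0"}  # minimums from the list above


def parse_version(text: str) -> tuple:
    """Turn '6.1.38r153438' into (6, 1, 38); non-digit suffixes are dropped."""
    parts = []
    for piece in text.strip().split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at the first non-digit, e.g. the 'r' build marker
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def version_from_output(output: str) -> str:
    """Take the last token, e.g. 'Vagrant 2.3.4' -> '2.3.4' (assumed output shape)."""
    return output.split()[-1]


def meets_minimum(installed: str, minimum: str) -> bool:
    """True if `installed` is the same as or newer than `minimum`."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so '2' compares equal to '2.0.0'
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b
```

For example, `meets_minimum(version_from_output("Vagrant 2.3.4"), REQUIREMENTS["Vagrant"])` returns `True`.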

To run the application, you will need a K8s cluster running locally and to interface with it via kubectl. We will be using Vagrant with VirtualBox to run K3s.

In this project's root, run vagrant up.

$ vagrant up

The command will take a while; it leverages VirtualBox to load an openSUSE OS and automatically install K3s. When we are taking a break from development, we can run vagrant suspend to conserve some of our system's resources, and vagrant resume when we want to bring them back up. Some useful vagrant commands can be found in this cheatsheet.

With the cluster running, deploy the project's resources and seed the database:

- kubectl apply -f deployment/db-configmap.yaml - Set up environment variables for the pods

- kubectl apply -f deployment/db-secret.yaml - Set up secrets for the pods

- kubectl apply -f deployment/grpc-configmap.yaml - Set up environment variables related to the gRPC server

- kubectl apply -f deployment/postgres.yaml - Set up a Postgres database running PostGIS

- kubectl apply -f deployment/udaconnect-api.yaml - Set up the service and deployment for the API ingress

- kubectl apply -f deployment/udaconnect-app.yaml - Set up the service and deployment for the web app

- kubectl apply -f deployment/udaconnect-connections-api.yaml - Set up the service and deployment for the connections microservice

- kubectl apply -f deployment/udaconnect-locations-api.yaml - Set up the service and deployment for the locations microservice

- kubectl apply -f deployment/udaconnect-persons-api.yaml - Set up the service and deployment for the persons microservice

- kubectl apply -f deployment/udaconnect-swagger.yaml - Set up the service and deployment for the API documentation

- sh scripts/run_db_command.sh <POD_NAME> - Seed your database against the postgres pod (kubectl get pods will give you the POD_NAME)
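If you want to script the `kubectl apply` steps while keeping them in the order listed, a hypothetical helper like the one below builds one command per manifest. Applying config maps and secrets before the workloads that reference them avoids pods briefly failing to start. It only prints the commands unless `dry_run=False`, in which case it shells out to `kubectl`, which must already be configured for the K3s cluster.

```python
import subprocess

# Same order as the kubectl steps listed above.
MANIFESTS = [
    "deployment/db-configmap.yaml",
    "deployment/db-secret.yaml",
    "deployment/grpc-configmap.yaml",
    "deployment/postgres.yaml",
    "deployment/udaconnect-api.yaml",
    "deployment/udaconnect-app.yaml",
    "deployment/udaconnect-connections-api.yaml",
    "deployment/udaconnect-locations-api.yaml",
    "deployment/udaconnect-persons-api.yaml",
    "deployment/udaconnect-swagger.yaml",
]


def apply_commands(manifests):
    """Build one `kubectl apply -f <path>` argument list per manifest."""
    return [["kubectl", "apply", "-f", path] for path in manifests]


def apply_all(manifests, dry_run=True):
    """Print the commands (default) or run them, stopping on the first failure."""
    for cmd in apply_commands(manifests):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

Passing `["deployment/"]` instead of the full list gives the single-directory form, `kubectl apply -f deployment/`.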

Manually applying each of the individual yaml files is cumbersome, but going through each step provides some context on the content of the starter project. In practice, we would reduce the number of steps by running the command against a directory to apply all of its contents: kubectl apply -f deployment/.

Note: The first time you run this project, you will need to seed the database with dummy data. Use the command sh scripts/run_db_command.sh <POD_NAME> against the postgres pod. (kubectl get pods will give you the POD_NAME). Subsequent runs of kubectl apply for making changes to deployments or services shouldn't require you to seed the database again!
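Looking up the pod name by hand is easy to get wrong, so here is a minimal sketch that picks it out of `kubectl get pods` output. It assumes the default column layout (NAME, READY, STATUS, ...) and that the Postgres pod name starts with `postgres`, matching the `svc/postgres` service used below. The `seed_database` wrapper is hypothetical and simply runs the seed script from the note above against the pod it finds.

```python
import subprocess
from typing import Optional


def find_running_pod(get_pods_output: str, prefix: str = "postgres") -> Optional[str]:
    """Return the first Running pod whose name starts with `prefix`, or None."""
    for line in get_pods_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Default columns: NAME READY STATUS RESTARTS AGE
        if len(fields) >= 3 and fields[0].startswith(prefix) and fields[2] == "Running":
            return fields[0]
    return None


def seed_database():
    """Find the Postgres pod and run the project's seed script against it."""
    out = subprocess.run(
        ["kubectl", "get", "pods"], capture_output=True, text=True, check=True
    ).stdout
    pod = find_running_pod(out)
    if pod is None:
        raise RuntimeError("no running postgres pod found")
    subprocess.run(["sh", "scripts/run_db_command.sh", pod], check=True)
```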

These pages should load on your web browser:

- http://localhost/api - OpenAPI documentation and base path for the API

- http://localhost/ - Frontend ReactJS application

The database uses a plug-in named PostGIS that supports geographic queries. It introduces GEOMETRY types and functions that we leverage to calculate the distance between ST_POINTs, which represent latitude and longitude.
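To build intuition for what a distance query between two points computes, the sketch below uses the haversine great-circle formula on a spherical Earth. This is a swapped-in approximation, not what PostGIS runs: `ST_Distance` on the `geography` type uses a spheroid by default, and on a `geometry` in SRID 4326 it returns degrees rather than metres, so results will differ slightly. Also note that PostGIS `ST_Point(x, y)` takes longitude first. The `within` helper and its threshold are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within(lat1, lon1, lat2, lon2, meters):
    """Hypothetical proximity check: were two recorded locations close together?"""
    return haversine_m(lat1, lon1, lat2, lon2) <= meters
```

One degree of latitude comes out to roughly 111.2 km under this model.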

You may find it helpful to be able to connect to the database. In general, most of the database complexity is abstracted from you. The Docker container in the starter should be configured with PostGIS. Seed scripts are provided to set up the database tables and some sample rows.

While the Kubernetes service for postgres is running (you can use kubectl get services to check), you can expose the service to connect locally:

kubectl port-forward svc/postgres 5432:5432

This will enable you to connect to the database at localhost. You should then be able to connect to postgresql://localhost:5432/geoconnections. This assumes you use the default values in the deployment config map.
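With the port-forward running, a connection URL can be assembled from environment variables. This is a sketch under assumptions: the variable names `DB_USERNAME`, `DB_PASSWORD`, `DB_HOST`, `DB_PORT`, and `DB_NAME` are hypothetical stand-ins for whatever the config map and secret define, and the defaults mirror the localhost URL above. Credentials are percent-encoded so characters like `@` do not break the URL. The resulting string is the form SQLAlchemy's `create_engine` expects, and `psql` also accepts a `postgresql://` URI.

```python
import os
from urllib.parse import quote


def database_url(env=None) -> str:
    """Build postgresql://user:password@host:port/dbname from env vars (names assumed)."""
    env = os.environ if env is None else env
    user = quote(env["DB_USERNAME"], safe="")
    password = quote(env["DB_PASSWORD"], safe="")
    host = env.get("DB_HOST", "localhost")  # localhost while port-forwarding
    port = env.get("DB_PORT", "5432")
    name = env.get("DB_NAME", "geoconnections")
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"
```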

To manually connect to the database, you will need software compatible with PostgreSQL.

- CLI users will find psql to be the industry standard.

- GUI users will find pgAdmin to be a popular open-source solution.

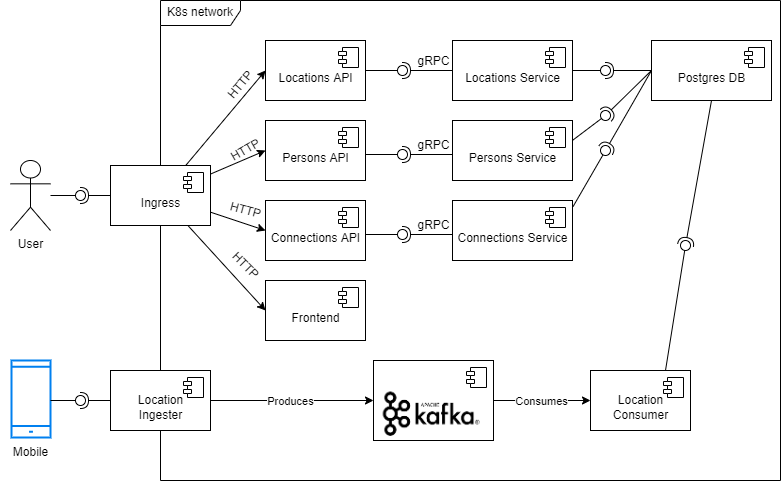

The following diagram shows the architecture of the application using UML component diagram notation.

Note that the "arrows" do not represent directionality, but rather interface provision and consumption.

This architecture was chosen to minimize cost and development time. Dividing the code into multiple small modules improves maintainability: new developers can be onboarded onto, or assigned responsibility for, a particular module without having to know about the others, which reduces development time and cost.

Additionally, the services run in containers, which makes them platform-agnostic, so developers do not need to install many tools before becoming productive. Containers also make it easier to deploy the application to a cloud provider such as AWS, Azure, or GCP.

Lastly, this model allows the application to handle large amounts of incoming data because it is horizontally scalable: it can be scaled out by adding more instances of a service to handle increased load, with a load balancer distributing incoming requests across those instances. It also uses Kafka to buffer incoming location data and gRPC to speed up communication between services.
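The idea of scaling out behind a load balancer can be illustrated with a toy round-robin balancer. This is an illustration only: a Kubernetes Service does not necessarily rotate requests this way (kube-proxy in iptables mode, for example, picks backends at random), and the instance names here are hypothetical. The point is that adding a replica immediately spreads subsequent requests across more instances.

```python
class RoundRobinBalancer:
    """Hands out service instances in a fixed rotation (illustrative only)."""

    def __init__(self, instances):
        self.instances = list(instances)
        self._counter = 0

    def add_instance(self, name):
        # Scaling out: one more replica joins the rotation.
        self.instances.append(name)

    def pick(self):
        if not self.instances:
            raise RuntimeError("no instances available")
        instance = self.instances[self._counter % len(self.instances)]
        self._counter += 1
        return instance
```

With two replicas, requests alternate between them; after `add_instance`, each of three replicas receives one request in every three.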

The frontend was left as a single module rather than split into micro-frontends, because splitting a simple one-page website like this one would be overkill and would incur more cost than benefit. Additionally, it is served under the same ingress as the API, which avoids exposing additional ports and keeps both under the same domain, avoiding CORS issues.
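Serving the frontend and the API under one ingress amounts to routing by URL path. The sketch below mimics prefix-based routing in plain Python: the longest matching prefix wins, and a prefix matches whole path segments only, so `/api` matches `/api/persons` but not `/apis`. The route table is hypothetical; the service names are guessed from the manifest file names. Because the browser sees a single origin (same scheme, host, and port), its API calls are not cross-origin, which is why no CORS configuration is needed.

```python
# Hypothetical route table: (path prefix, backend service).
ROUTES = [
    ("/api", "udaconnect-api"),
    ("/", "udaconnect-app"),
]


def prefix_matches(prefix, path):
    """Segment-aware prefix match: '/api' matches '/api' and '/api/x', not '/apis'."""
    if prefix == "/":
        return True
    base = prefix.rstrip("/")
    return path == base or path.startswith(base + "/")


def route(path, routes=ROUTES):
    """Return the backend for `path` using the longest matching prefix, or None."""
    best = None
    for prefix, service in routes:
        if prefix_matches(prefix, path) and (best is None or len(prefix) > len(best[0])):
            best = (prefix, service)
    return best[1] if best else None
```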

The APIs were split into microservices so that each service can be scaled, developed, and deployed independently, potentially by different teams. Three independent modules were identified: persons, locations, and connections.

The front-facing RESTful API uses gRPC to communicate with the underlying service that handles communication with the database. This allows each module to be developed in the language most familiar to its developers, using that language's best practices and libraries. Additionally, gRPC is typically more efficient than JSON over HTTP/1.1, because it runs over HTTP/2 and serializes messages as compact binary Protocol Buffers.
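Part of gRPC's efficiency comes from binary, schema-based serialization. The sketch below compares a self-describing JSON payload with a fixed binary layout for one location record. It uses Python's `struct` module, not Protocol Buffers (which uses its own tagged, variable-length wire format), so treat it only as an illustration of why a schema known to both sides lets field names be left out of every message. The field layout and sample values are hypothetical.

```python
import json
import struct

# Hypothetical record layout: person_id (int32), latitude and longitude
# (float64), creation time as a Unix timestamp (int64); little-endian, no padding.
LOCATION_FORMAT = "<iddq"


def encode_json(person_id, lat, lon, ts):
    """Self-describing text: field names travel with every message."""
    return json.dumps(
        {"person_id": person_id, "latitude": lat, "longitude": lon, "creation_time": ts}
    ).encode()


def encode_binary(person_id, lat, lon, ts):
    """Schema-based binary: both sides agree on the layout, so only values are sent."""
    return struct.pack(LOCATION_FORMAT, person_id, lat, lon, ts)


def decode_binary(data):
    return struct.unpack(LOCATION_FORMAT, data)
```

The binary form is always 28 bytes here, while the JSON form is several times larger.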

Note: services here denotes modules that handle connections to the database and are not to be confused with Kubernetes services or microservices. Also, the *_pb2.py and *_pb2_grpc.py files are gitignored because they are generated in a Docker image build step, and it is generally considered a good practice to not push auto-generated files to git.
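For reference, the gRPC Python stubs are usually generated with the `grpc_tools.protoc` module from the `grpcio-tools` package, which is the kind of command a Docker build step would run. The helper below is a hypothetical sketch that builds that command and predicts the generated file names, which are exactly the names matched by the gitignored `*_pb2.py` and `*_pb2_grpc.py` patterns. It assumes the `.proto` file sits directly in `proto_dir`.

```python
import sys
from pathlib import Path


def protoc_command(proto_file, proto_dir=".", out_dir="."):
    """Command that generates message (_pb2) and service (_pb2_grpc) modules."""
    return [
        sys.executable, "-m", "grpc_tools.protoc",
        f"--proto_path={proto_dir}",
        f"--python_out={out_dir}",       # writes <name>_pb2.py
        f"--grpc_python_out={out_dir}",  # writes <name>_pb2_grpc.py
        str(proto_file),
    ]


def generated_files(proto_file, out_dir="."):
    """Files the command above is expected to produce for `proto_file`."""
    stem = Path(proto_file).stem
    return [Path(out_dir) / f"{stem}_pb2.py", Path(out_dir) / f"{stem}_pb2_grpc.py"]
```

In a Dockerfile, the same arguments would typically appear in a `RUN python -m grpc_tools.protoc ...` step after installing `grpcio-tools`.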

The database was not split into multiple databases because it contains only two tables that are linked together. Splitting it would make it harder to maintain the database and to keep the foreign keys and constraints consistent, and it would provide no benefit, since PostgreSQL is already highly efficient and scalable.
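Keeping both tables in one database lets the database itself enforce the link between them. The sketch below uses an in-memory SQLite database as a stand-in for PostgreSQL, with a hypothetical `person`/`location` schema: the real tables use PostGIS types, while these use plain REAL latitude and longitude columns. SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; PostgreSQL enforces them by default. A location for a person who does not exist is rejected, a guarantee that would need extra application code if the tables lived in separate databases.

```python
import sqlite3

SCHEMA = """
CREATE TABLE person (
    id INTEGER PRIMARY KEY,
    first_name TEXT NOT NULL,
    last_name TEXT NOT NULL,
    company_name TEXT NOT NULL
);
CREATE TABLE location (
    id INTEGER PRIMARY KEY,
    person_id INTEGER NOT NULL REFERENCES person(id),
    latitude REAL NOT NULL,
    longitude REAL NOT NULL,
    creation_time TEXT NOT NULL
);
"""


def connect():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite
    conn.executescript(SCHEMA)
    return conn


def add_person(conn, person_id, first_name, last_name, company_name):
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO person (id, first_name, last_name, company_name) VALUES (?, ?, ?, ?)",
            (person_id, first_name, last_name, company_name),
        )


def add_location(conn, person_id, latitude, longitude, creation_time):
    """Insert a location; return False if the foreign key constraint rejects it."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO location (person_id, latitude, longitude, creation_time) "
                "VALUES (?, ?, ?, ?)",
                (person_id, latitude, longitude, creation_time),
            )
        return True
    except sqlite3.IntegrityError:
        return False
```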

The Kafka module acts as a locations ingestion service. It manages a topic to which the locations producer (the service exposed via gRPC) publishes locations as they arrive from users; a separate consumer module then reads them from the topic and stores them in the database.
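The producer/consumer flow can be sketched with the standard library, using an in-memory `queue.Queue` in place of the Kafka topic and a list in place of the database table. This is only an analogy: a Kafka topic is a durable, partitioned log with consumer offsets, whereas this queue disappears with the process, and real code would use a Kafka client library instead. The stop sentinel and function names are hypothetical. What it shows is the decoupling: the producer enqueues and returns immediately, while the consumer persists records at its own pace.

```python
import queue
import threading

_STOP = object()  # sentinel telling the consumer no more messages will arrive


def produce(topic, locations):
    """Stand-in for the gRPC-facing producer: enqueue each location and move on."""
    for location in locations:
        topic.put(location)
    topic.put(_STOP)


def consume(topic, store):
    """Stand-in for the consumer module: read messages and persist them."""
    while True:
        item = topic.get()
        if item is _STOP:
            break
        store.append(item)  # a real consumer would INSERT into the location table


def run_pipeline(locations):
    topic = queue.Queue()
    stored = []
    consumer = threading.Thread(target=consume, args=(topic, stored))
    consumer.start()
    produce(topic, locations)
    consumer.join()
    return stored
```

With a single consumer, records are stored in the order they were produced.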