Neural Machine Translation by Jointly Learning to Align and Translate https://arxiv.org/pdf/1409.0473.pdf

Effective Approaches to Attention-based Neural Machine Translation https://arxiv.org/pdf/1508.04025.pdf

Massive Exploration of Neural Machine Translation Architectures https://arxiv.org/pdf/1703.03906.pdf

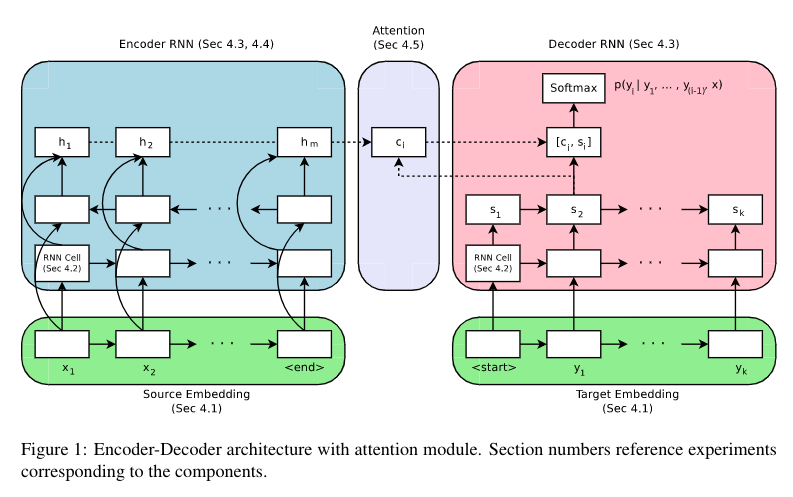

- Encoder --> Attention --> Decoder Architecture.

- Luong Attention.

- Training on Multi30k German to English translation task.
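For readers new to Luong attention, here is a minimal sketch of a single decoder step using the "dot" score from the Luong et al. paper linked above. It is written in plain Python so the arithmetic is visible. The function names and toy vectors are illustrative assumptions, and the score variant and tensor layout used in this repository may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def luong_dot_attention(decoder_state, encoder_states):
    """Return (weights, context) for one decoder step.

    decoder_state:  list of d floats, the current decoder hidden state h_t
    encoder_states: list of d-float lists, one per source position (h_s)
    """
    # Dot score: score(h_t, h_s) = h_t . h_s for every source position.
    scores = [
        sum(a * b for a, b in zip(decoder_state, h_s))
        for h_s in encoder_states
    ]
    # Alignment weights: softmax over source positions.
    weights = softmax(scores)
    # Context vector: attention-weighted average of the encoder states.
    dim = len(decoder_state)
    context = [
        sum(w * h_s[i] for w, h_s in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Toy example (made-up values): three source positions, hidden size 2.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = luong_dot_attention(decoder_state, encoder_states)
```

In the paper, the context vector is then concatenated with the decoder state and passed through a tanh layer to produce the attentional hidden state. The `weights` list for each target step is what an attention heatmap plots.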

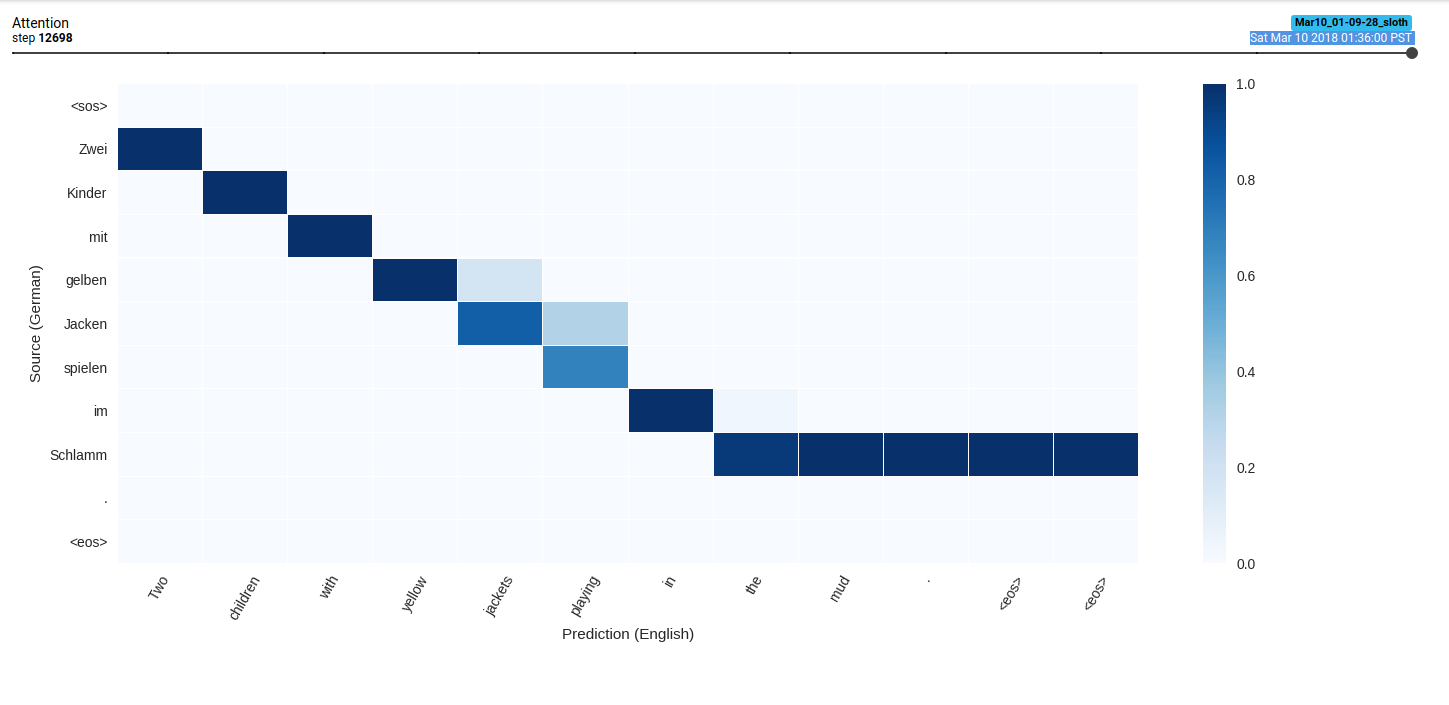

- Attention Visualization.

- Teacher Forcing.

- Greedy Decoding.

- NMT tutorial notebook.

- Minimal beam search decoding.
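To illustrate how greedy decoding differs from beam search, the sketch below runs both over a hypothetical toy "decoder" that maps the previous token to log-probabilities for the next token. The table `TOY_LOG_PROBS`, the `step` function, and the token names are assumptions made up for this example. They stand in for a trained model and are not the decoding code in this repository.

```python
import math

# Hypothetical toy model: previous token -> log-probabilities of the next token.
TOY_LOG_PROBS = {
    "<sos>": {"a": math.log(0.6), "b": math.log(0.4)},
    "a": {"x": math.log(0.5), "y": math.log(0.5)},
    "b": {"x": math.log(0.9), "y": math.log(0.1)},
    "x": {"<eos>": 0.0},
    "y": {"<eos>": 0.0},
}


def step(prev_token):
    """Stand-in for one decoder step; returns {token: log_prob}."""
    return TOY_LOG_PROBS[prev_token]


def greedy_decode(max_len=10):
    """Pick the single most likely next token at every step."""
    tokens, score = ["<sos>"], 0.0
    while tokens[-1] != "<eos>" and len(tokens) < max_len:
        dist = step(tokens[-1])
        best = max(dist, key=dist.get)
        tokens.append(best)
        score += dist[best]
    return tokens, score


def beam_search(beam_size=2, max_len=10):
    """Keep the beam_size best partial hypotheses by total log-probability."""
    beams = [(["<sos>"], 0.0)]
    for _ in range(max_len - 1):
        candidates = []
        for tokens, score in beams:
            if tokens[-1] == "<eos>":
                # Finished hypotheses are carried over unchanged.
                candidates.append((tokens, score))
                continue
            for tok, log_p in step(tokens[-1]).items():
                candidates.append((tokens + [tok], score + log_p))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_size]
        if all(tokens[-1] == "<eos>" for tokens, _ in beams):
            break
    return beams[0]


greedy_tokens, greedy_score = greedy_decode()
beam_tokens, beam_score = beam_search(beam_size=2)
```

In this toy table, greedy decoding commits to `a` at the first step because it is locally most likely, while beam search also keeps `b` alive and ends with the higher-probability sequence. Practical implementations often add length normalization, which this sketch omits.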

- Install PyTorch:

conda install pytorch -c pytorch

- Install the other requirements:

pip install -r requirements.txt

Training with a batch size of 32 takes about 3 GB of GPU memory.

If this is too much, lower the batch size or reduce network dimensionality in hyperparams.py.

python train.py

View the logs in TensorBoard. Decent alignments should be visible after 2-3 epochs.

tensorboard --logdir runs

(partially trained attention heatmap)