MultiLayer Perceptron

Fully connected artificial neural network simulation project.

I highly recommend taking a look at the flowchart before using the program.

Features

- Single/Multi layer support
- Supported methods
  - Stochastic gradient descent
  - Mini-batch gradient descent
  - Batch gradient descent
- Supported activation functions
  - ReLU
  - Leaky ReLU
  - ELU
  - Tanh
  - Sigmoid
  - Bipolar sigmoid
  - Sine (experimental)
  - None (without activation function)
- Supported optimizers
  - Momentum
- Supported preprocessing operations
  - Data shuffling
  - Z-score normalization
- Supported output layer activation functions
  - Softmax function
- Supported loss functions
  - Cross-entropy

Guide

The weights

When you train the network repeatedly, training continues from where it left off. The "clear weight" button re-initializes the weights randomly, which discards everything the network has learned so far.

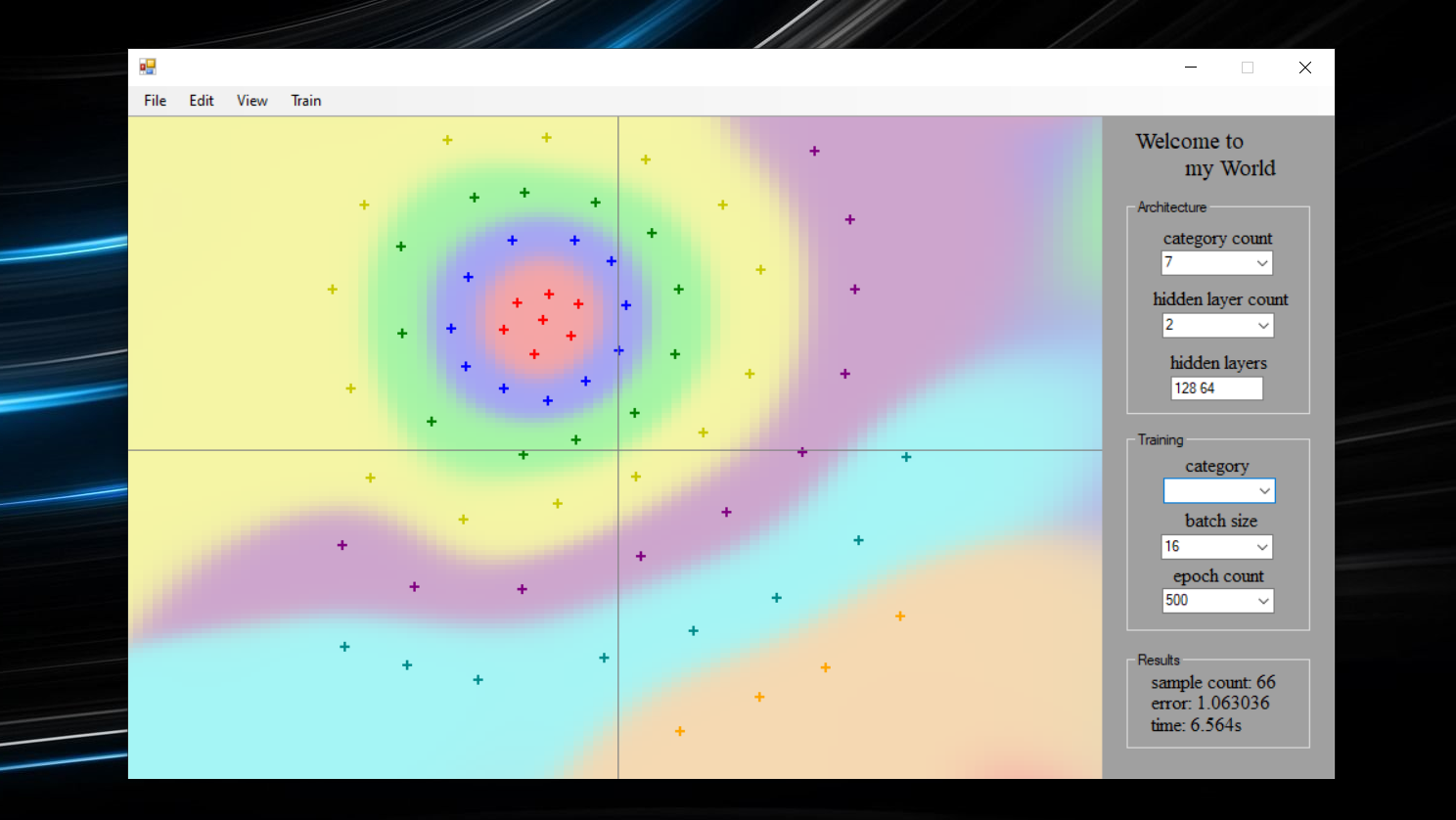

Continuous color

You can find some visually useful options in the view tab. The continuous color option is not an interpolation; it is the probability map that the softmax function produces.

Animated training

There are two training modes: classical training and animated training. Animated training splits the epochs into 10 equal pieces and keeps the visual output of each piece. When training is done, it shows the 10 outputs one after another at 0.25-second intervals.
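The idea behind animated training can be sketched roughly as follows. This is a minimal illustration, not the program's actual code: `train_fn`, `snapshot_fn` and `show_fn` are hypothetical callbacks standing in for the training routine, the rendering of the visual output and the display step, and the handling of epoch counts that are not divisible by 10 is an assumption.

```python
import time


def split_epochs(total_epochs, pieces=10):
    """Split total_epochs into `pieces` chunks that are as equal as possible."""
    base, extra = divmod(total_epochs, pieces)
    # Assumption: any remainder is spread over the first chunks.
    return [base + (1 if i < extra else 0) for i in range(pieces)]


def animated_training(train_fn, snapshot_fn, total_epochs, pieces=10):
    """Train chunk by chunk and keep one visual snapshot per chunk.

    train_fn(epochs) and snapshot_fn() are hypothetical callbacks.
    """
    frames = []
    for chunk in split_epochs(total_epochs, pieces):
        train_fn(chunk)                # continue training for this chunk
        frames.append(snapshot_fn())   # keep the visual output of this piece
    return frames


def play(frames, show_fn, interval=0.25):
    """Show the stored frames one after another with a fixed delay."""
    for frame in frames:
        show_fn(frame)                 # show_fn is a hypothetical display callback
        time.sleep(interval)
```

With 100 epochs, `split_epochs(100)` yields ten chunks of 10 epochs each, and `animated_training` returns ten frames that `play` can then display.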

Verbose

This option saves training information to a CSV file named "record.csv". The file has two columns, "epoch" and "error". It can be examined with external tools such as pandas or plotly.
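As a small sketch of how the file could be read back, the snippet below uses only Python's standard `csv` module. It assumes the file is comma-separated with a header row containing "epoch" and "error", that epochs are integers and that errors are floats; `load_record` and `best_epoch` are hypothetical helper names.

```python
import csv


def load_record(path="record.csv"):
    """Read the 'epoch' and 'error' columns into two parallel lists."""
    epochs, errors = [], []
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            epochs.append(int(row["epoch"]))    # assumption: integer epochs
            errors.append(float(row["error"]))  # assumption: float errors
    return epochs, errors


def best_epoch(epochs, errors):
    """Return the (epoch, error) pair with the lowest recorded error."""
    index = min(range(len(errors)), key=errors.__getitem__)
    return epochs[index], errors[index]
```

The two lists can be handed to any plotting tool to draw the error curve over the epochs.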

Report

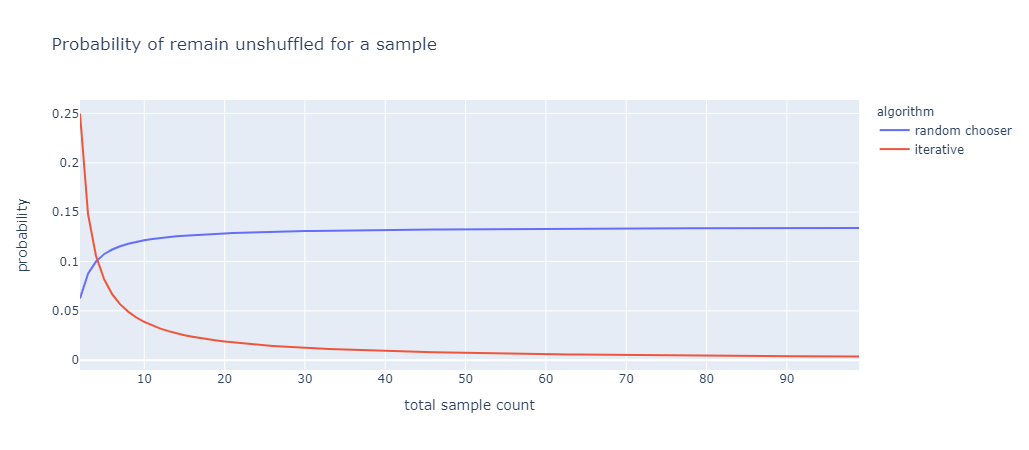

Data shuffling

When I first implemented data shuffling, the algorithm looked like the old algorithm below. One day I realised that calling the training function 10 times with n epochs was not the same as calling it once with 10n epochs. There was a huge performance gap between the two, and the problem was in the shuffling phase. The shuffling algorithm does not modify the original data, so if you split the training phase into multiple function calls, the shuffling starts all over again from the original order on every call. The performance gap means that the algorithm was not shuffling enough and that some data remained unshuffled.

Old algorithm

    for i in sample count
        pick random sample A
        pick random sample B
        swap A and B
    end

Then I upgraded the algorithm:

New algorithm

    for i in sample count
        pick ith sample A
        pick random sample B
        swap A and B
    end
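The two procedures can be sketched in Python as follows. This is an illustration under assumptions rather than the project's code: both functions work on a copy (matching the remark that the original data is not affected), the random pick is assumed to be uniform over all samples, and `fraction_in_place` is a hypothetical helper that measures how often a sample ends up at its original index.

```python
import random


def old_shuffle(samples, rng=random):
    """Old algorithm: swap two randomly chosen samples, once per sample."""
    data = list(samples)  # work on a copy, the original stays untouched
    n = len(data)
    for _ in range(n):
        a = rng.randrange(n)  # random sample A
        b = rng.randrange(n)  # random sample B
        data[a], data[b] = data[b], data[a]
    return data


def new_shuffle(samples, rng=random):
    """New algorithm: swap the i-th sample with a randomly chosen sample."""
    data = list(samples)
    n = len(data)
    for i in range(n):
        b = rng.randrange(n)  # random sample B; A is always the i-th sample
        data[i], data[b] = data[b], data[i]
    return data


def fraction_in_place(shuffle, n=100, trials=200, seed=0):
    """Estimate how often a sample is still at its original index."""
    rng = random.Random(seed)
    original = list(range(n))
    in_place = 0
    for _ in range(trials):
        shuffled = shuffle(original, rng)
        in_place += sum(1 for i, value in enumerate(shuffled) if value == i)
    return in_place / (n * trials)
```

Calling `fraction_in_place(old_shuffle)` and `fraction_in_place(new_shuffle)` gives a quick empirical comparison of how many samples each variant leaves in place.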

Let's consider a single sample from the dataset. What is the probability that it remains unshuffled after the shuffling phase?

For the old algorithm:

For the new algorithm:

As you can see, the new algorithm is far better.
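One way to make this comparison concrete, assuming that "unshuffled" means a given sample is never moved by any swap and that every random pick is uniform over all $n$ samples (a sketch under these assumptions, not necessarily the exact formulas behind the comparison):

$$
P_{\text{old}} = \left(1 - \frac{2(n-1)}{n^{2}}\right)^{n} \approx e^{-2} \approx 0.135
$$

$$
P_{\text{new}} = \frac{1}{n}\left(1 - \frac{1}{n}\right)^{n-1} \approx \frac{1}{e\,n}
$$

In the old algorithm, one iteration moves the sample only when exactly one of A and B is that sample, which happens with probability $2(n-1)/n^{2}$, and there are $n$ independent iterations. In the new algorithm, the sample is picked as A exactly once, so it stays put only if B is the same sample in that iteration (probability $1/n$) and it is never picked as B in the other $n-1$ iterations. For $n = 100$ this gives roughly 0.135 for the old algorithm and roughly 0.0037 for the new one, and the second value keeps shrinking as $n$ grows.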

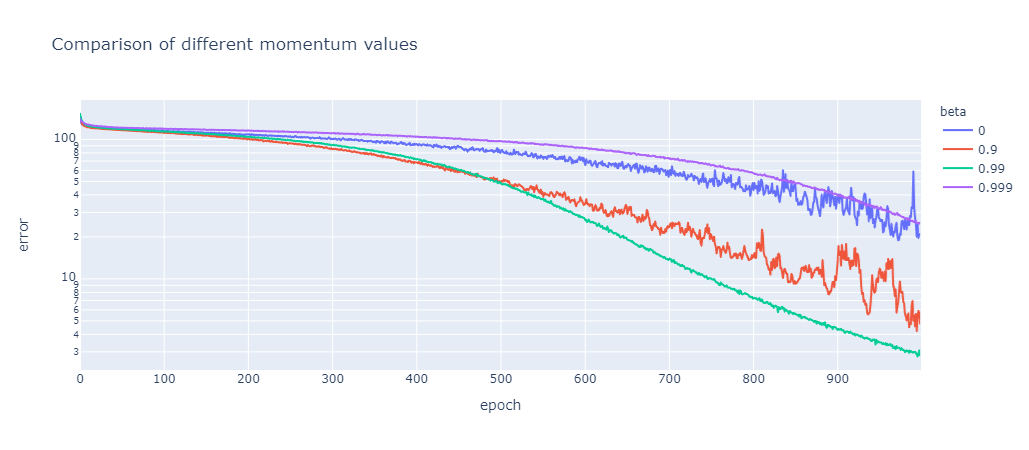

Momentum optimization

Momentum is a method for optimizing the training phase. It has one parameter called "beta". I found that the best value for beta is 0.99 for my neural network. You can see my tests below. Every line represents the mean of 10 tests.
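For reference, a momentum update can be sketched as below. This assumes the exponentially weighted average form of momentum; the exact formula used in this project is not stated here, and `momentum_step`, `lr` and the list-based weights are hypothetical choices made for illustration.

```python
def momentum_step(weights, grads, velocity, lr=0.01, beta=0.99):
    """One momentum update over flat lists of weights and gradients.

    Assumed form: v = beta * v + (1 - beta) * grad, then w = w - lr * v.
    """
    new_velocity = [beta * v + (1.0 - beta) * g
                    for v, g in zip(velocity, grads)]
    new_weights = [w - lr * v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity
```

With beta close to 1, each new gradient changes the velocity only slightly, so the update direction is dominated by the running average of past gradients.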

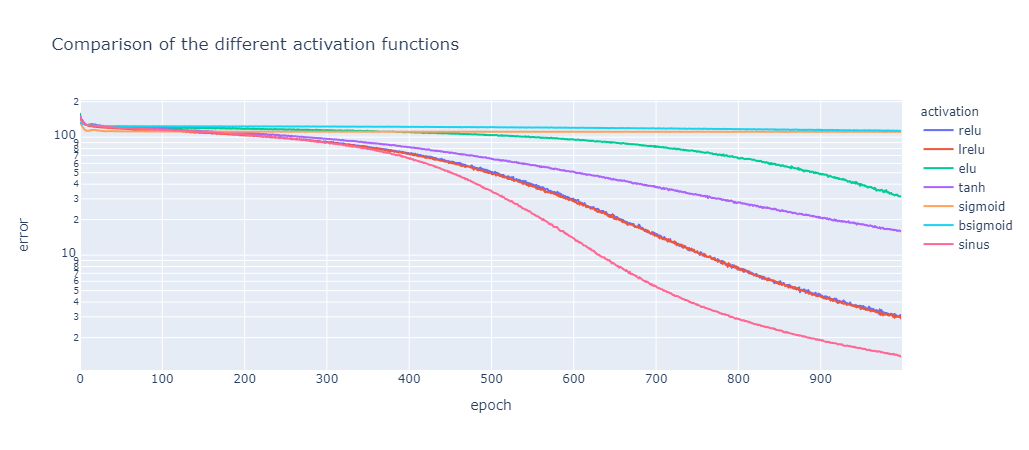

Activation functions

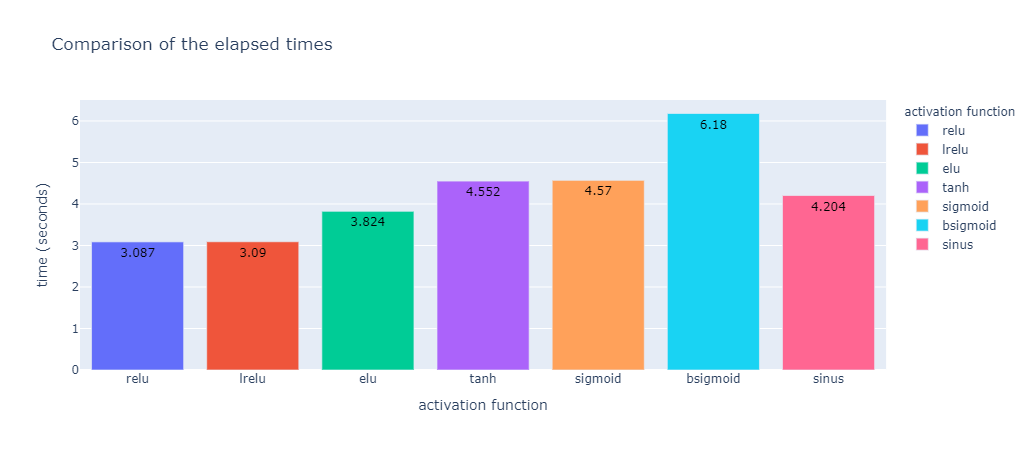

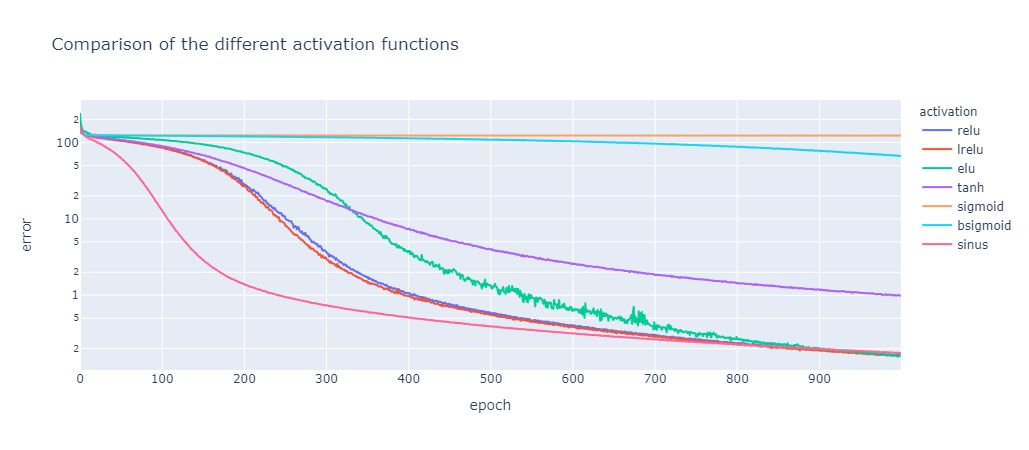

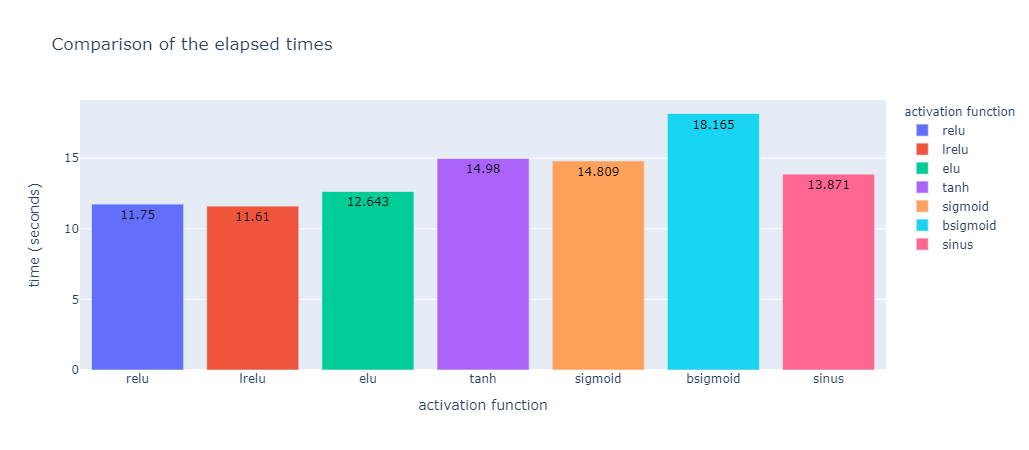

I added the sine function just out of curiosity. I wasn't even expecting the neural network to train with it. Ironically, it achieved one of the best scores. The error values are calculated as the sum of the errors per epoch. The "random.csv" dataset is used for the tests.
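For reference, the supported activation functions can be written as scalar Python functions using their textbook definitions. The `alpha` defaults for Leaky ReLU and ELU are assumptions, since the values used by the program are not stated here.

```python
import math


def relu(x):
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    # alpha is an assumed default slope for negative inputs
    return x if x > 0 else alpha * x


def elu(x, alpha=1.0):
    # alpha is an assumed default scale for negative inputs
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def tanh(x):
    return math.tanh(x)


def sigmoid(x):
    # Two branches so that math.exp never receives a large positive argument
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def bipolar_sigmoid(x):
    # Sigmoid rescaled to the range (-1, 1); equal to tanh(x / 2)
    return 2.0 * sigmoid(x) - 1.0


def sine(x):
    return math.sin(x)


def identity(x):
    # "None": the input is passed through without an activation function
    return x
```

Unlike ReLU, Leaky ReLU and ELU, the sine function is bounded to [-1, 1], just like tanh and the bipolar sigmoid.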

For 2 hidden layers [64, 32]

For 3 hidden layers [128, 64, 32]

Note

There is no batch normalization in the intermediate layers. If you try to train a multi-layer network with ReLU, Leaky ReLU or ELU, the program might exceed the maximum floating-point value and produce NaN values. These activations are unbounded for positive inputs, so large values can reach the softmax function, whose exponential terms grow very quickly.
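The overflow can be illustrated with a small sketch. The second function shows one common mitigation, subtracting the largest input before exponentiating; this is a general technique and not something the program is stated to do. Both function names are hypothetical.

```python
import math


def softmax_naive(logits):
    """Direct softmax; exp() overflows for large inputs."""
    # In pure Python, math.exp raises OverflowError for very large arguments;
    # array libraries typically return inf instead, which then turns into nan.
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_stable(logits):
    """Softmax with the maximum subtracted first (same result mathematically)."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]  # every argument is <= 0
    total = sum(exps)
    return [e / total for e in exps]
```

Shifting all inputs by the same constant does not change the softmax output, because the factor cancels between numerator and denominator, but it keeps every exponent at or below zero.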