Please check the recent paper index in PaperList.

We also present a bird’s-eye view of hallucinations in AGI and compile a comprehensive survey.

LightHouse: A Survey of AGI Hallucination

We call it LightHouse for AGI.

✨ We currently define hallucinations as model outputs that do not align with the contemporary empirical realities of our world.

Definition of AGI Hallucination

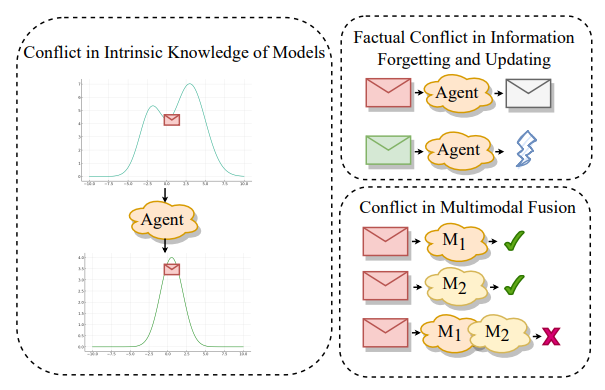

- Conflict in Intrinsic Knowledge of Models

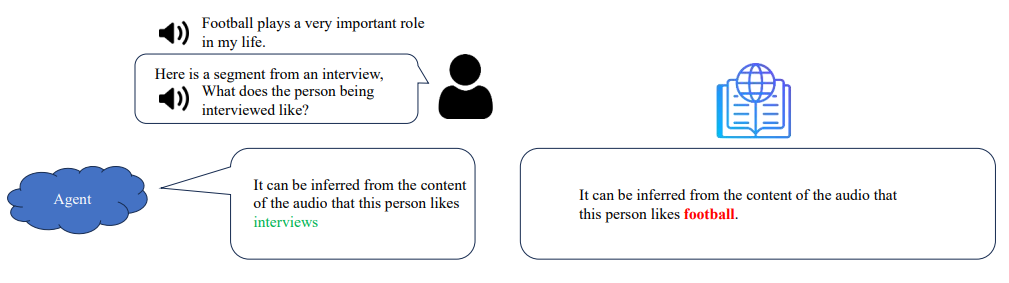

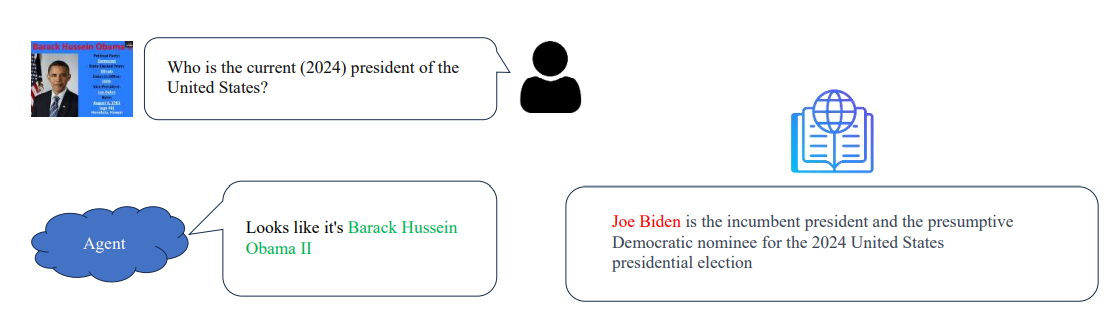

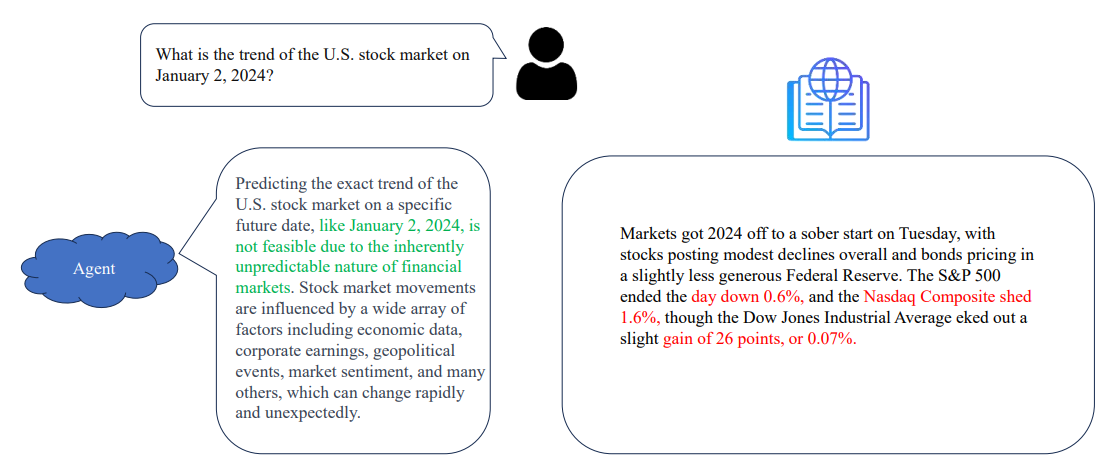

- Factual Conflict in Information Forgetting and Updating

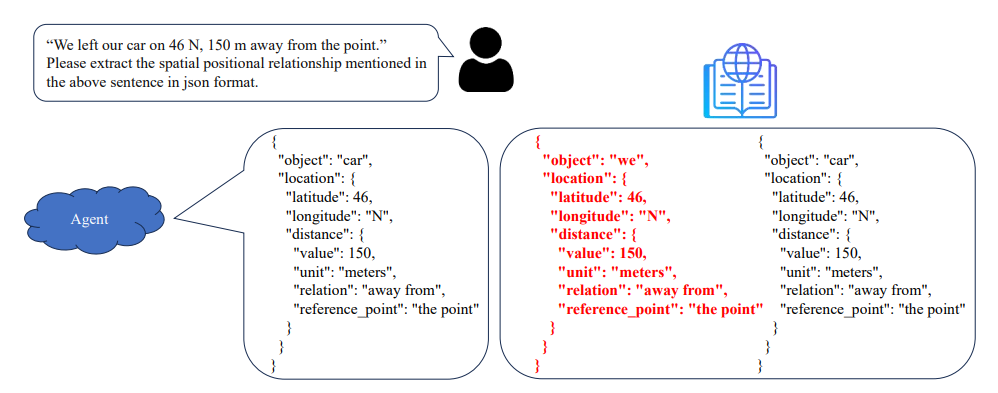

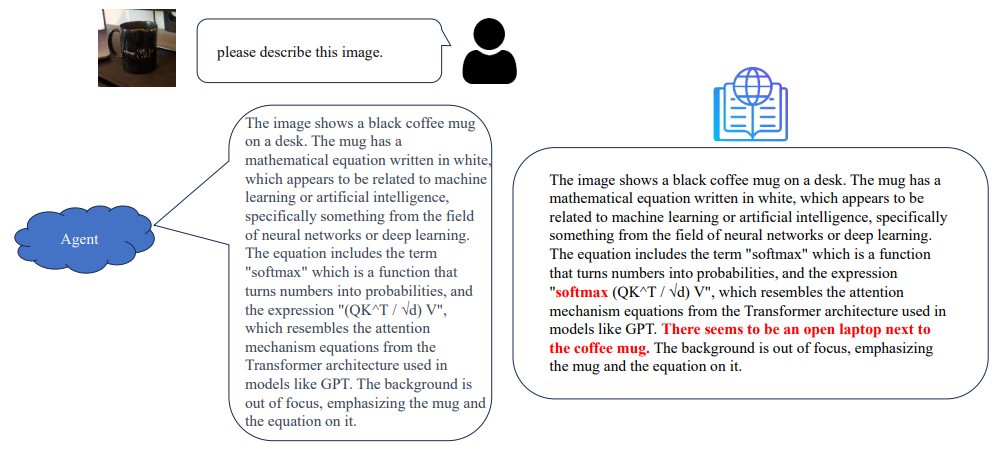

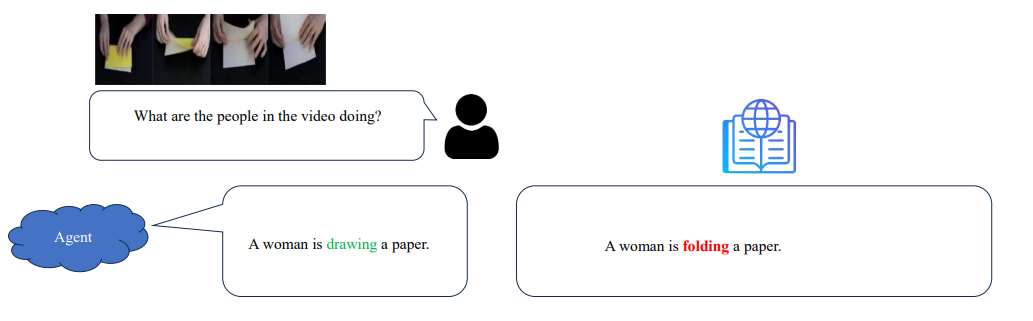

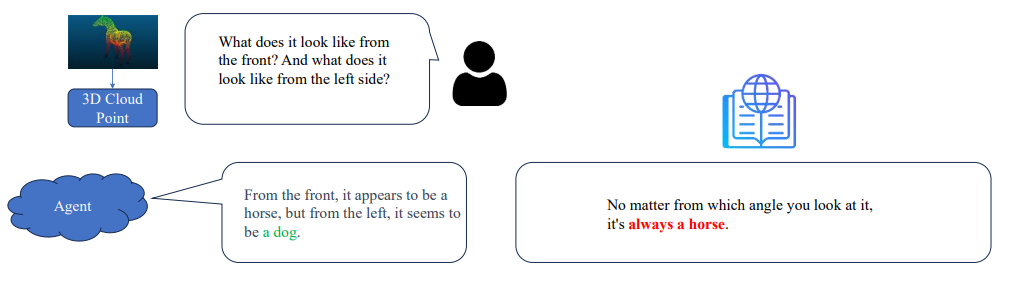

- Conflict in Multimodal Fusion

Emergence of AGI Hallucination

- Training Data Distribution

- Timeliness of Information

- Ambiguity in Different Modalities

Mitigation of AGI Hallucination

- Data

- Training, SFT & RLHF

- Inference & Post-hoc

Evaluation of AGI Hallucination

- Benchmark

- Rule-Based

- Large Model-Based

- Human-Based

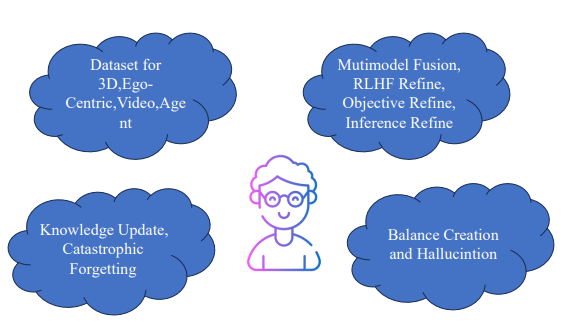

Future Directions

- Dataset for 3D, Ego-Centric, Video, and Agent

- Multimodal Fusion, RLHF Refinement, Objective Refinement, Inference Refinement

- Knowledge Update, Catastrophic Forgetting

- Balancing Creativity and Hallucination

We warmly welcome any useful suggestions or contributions. Feel free to open an issue or contact Rain at this e-mail.