Official implementation of 'Point-Bind & Point-LLM: Aligning Point Cloud with Multi-modality for 3D Understanding, Generation, and Instruction Following'.

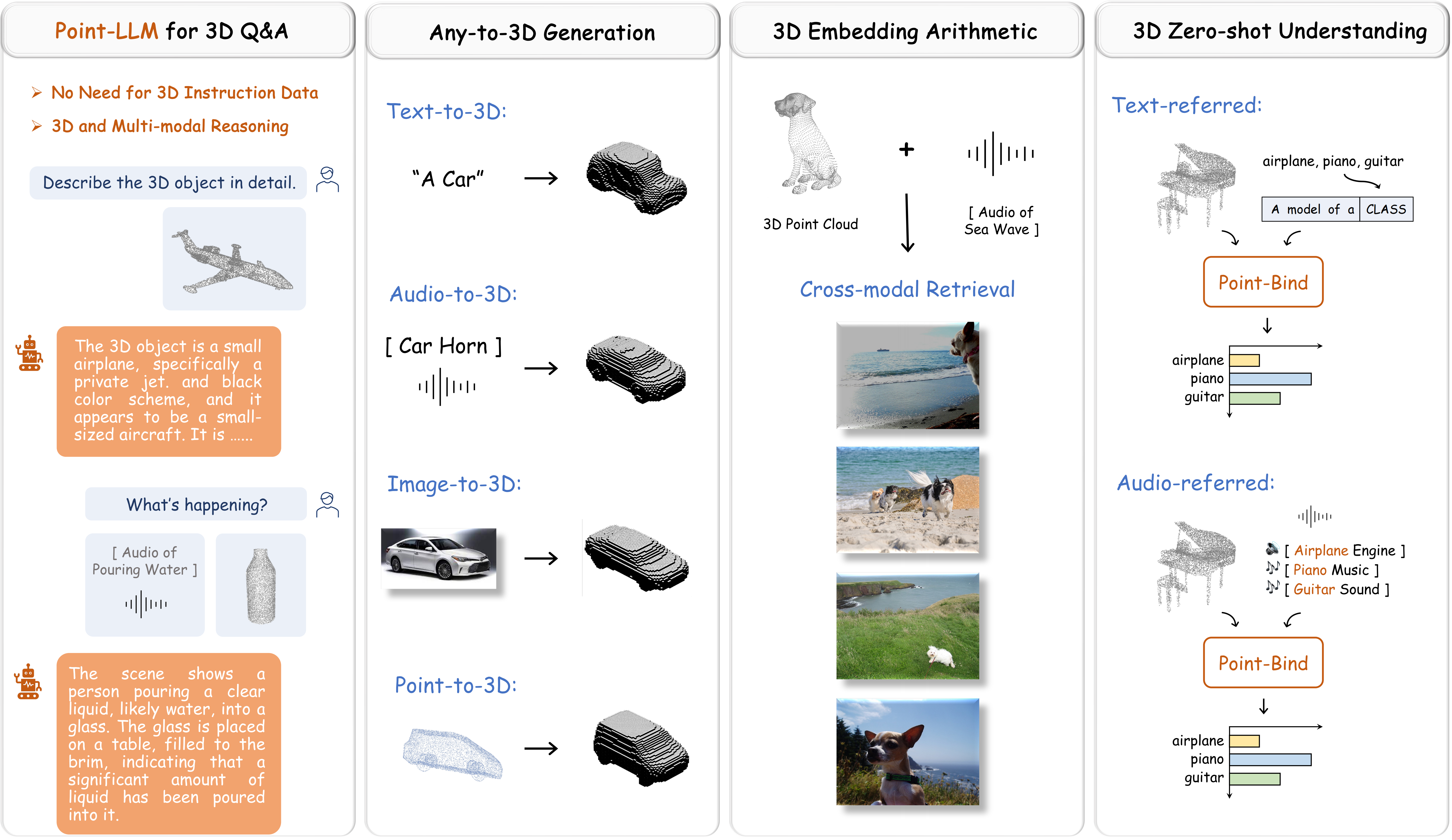

- 🔥 Point-Bind is a 3D multi-modality model with a joint embedding space among 3D point clouds, images, language, audio, and video.

- 🔥 Point-LLM is the first 3D large language model that requires no 3D instruction data 🌟 and reasons over 3D multi-modality input 🌟.

- Try our 💥 Online Demo, which is integrated into ImageBind-LLM.

- [2023-09-04] The paper of this project is available on arXiv 🚀.

- [2023-05-20] The inference code of Point-Bind and Point-LLM is released 📌.

With a joint embedding space of 3D and multi-modality, our Point-Bind empowers four promising applications:

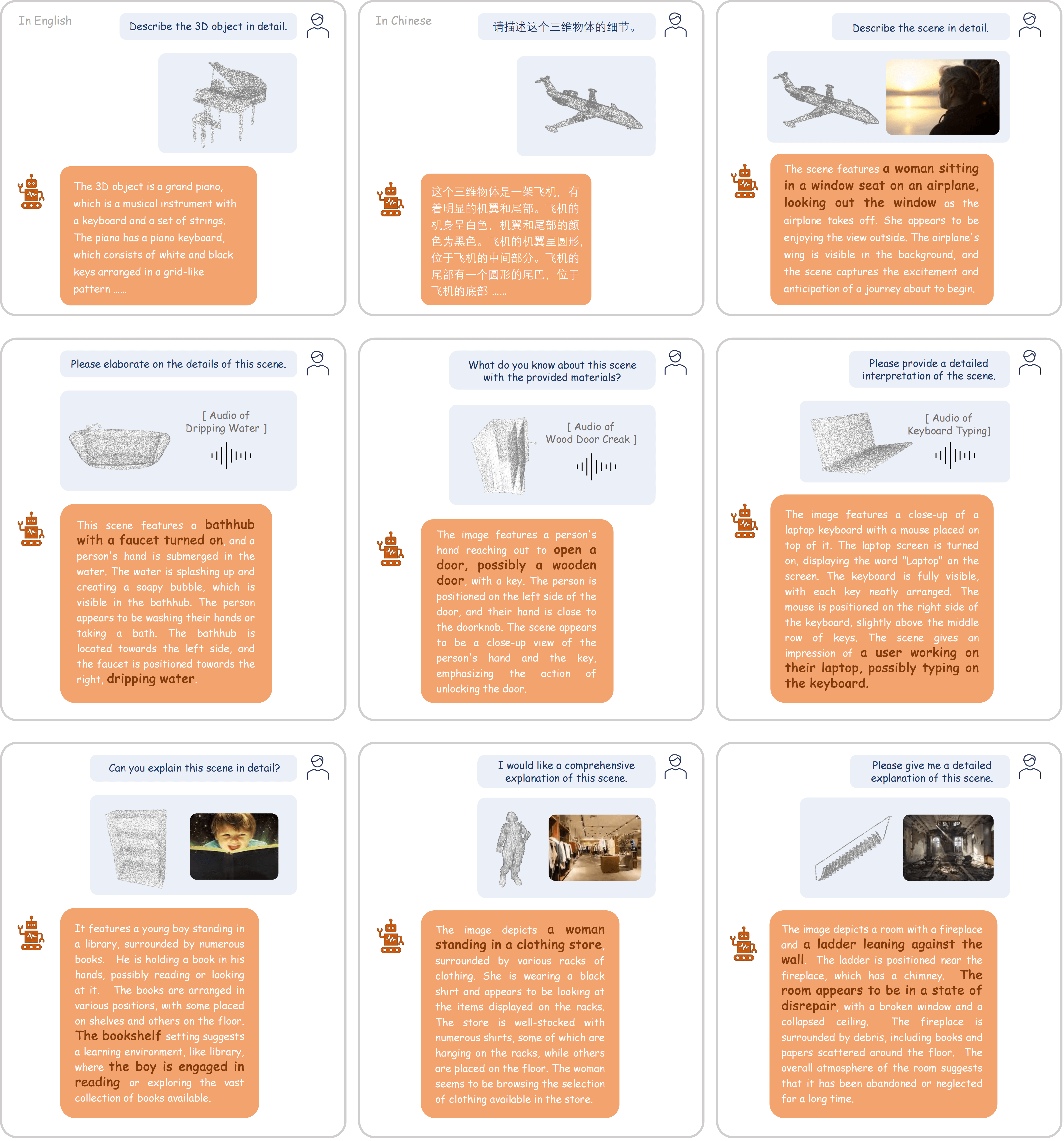

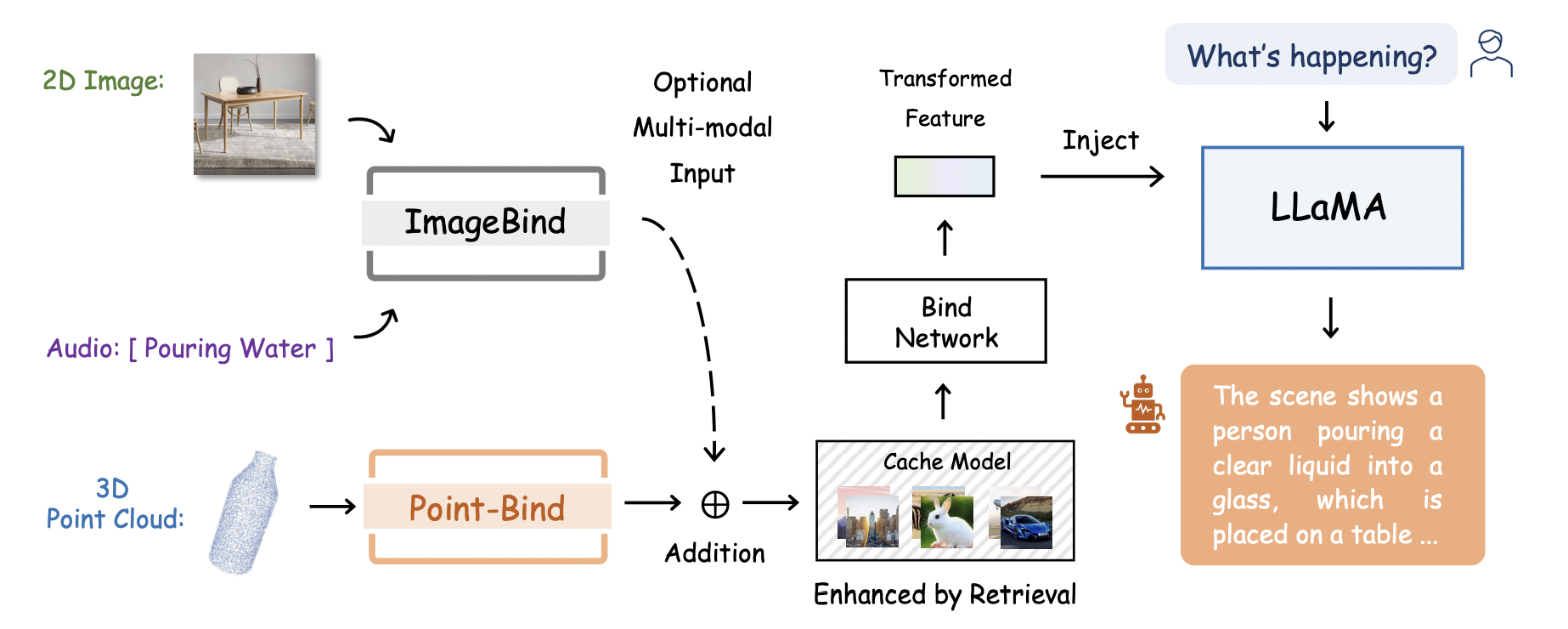

Using Point-Bind, we introduce Point-LLM, the first 3D LLM that responds to instructions conditioned on 3D point clouds, supporting both English and Chinese. Our Point-LLM exhibits two main characteristics:

- $\color{darkorange}{Data\ and\ Parameter\ Efficiency.}$ We use only public vision-language data for tuning, without any 3D instruction data, and adopt parameter-efficient fine-tuning techniques, saving extensive resources.

- $\color{darkorange}{3D\ and\ Multimodal\ Reasoning.}$ Via the joint embedding space, Point-LLM can generate descriptive responses by reasoning over a combination of 3D and multimodal input, e.g., a point cloud together with an image or an audio clip.
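Reasoning over a combination of inputs ultimately means conditioning the LLM on features drawn from one shared embedding space. The snippet below is a minimal sketch under our own assumptions, not Point-LLM's actual code: each modality contributes an L2-normalized embedding, and the per-modality weights (compare the `inputs['Point'] = [point, 1]` pair in the inference script) are normalized into a weighted average. `fuse_modalities`, `l2_normalize`, and the toy vectors are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (returns a copy unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_modalities(inputs, eps=1e-6):
    """Fuse per-modality embeddings into a single conditioning vector.

    `inputs` maps a modality name to (embedding, weight).
    Assumption (hypothetical): embeddings are L2-normalized before fusion and
    the fused feature is their weight-normalized sum.
    """
    total = sum(weight for _, weight in inputs.values()) + eps
    dim = len(next(iter(inputs.values()))[0])
    fused = [0.0] * dim
    for embedding, weight in inputs.values():
        unit = l2_normalize(embedding)
        for i, x in enumerate(unit):
            fused[i] += (weight / total) * x
    return fused


# Hypothetical 3-d embeddings standing in for joint-space features
example_inputs = {
    "Point": ([1.0, 0.0, 0.0], 1.0),
    "Audio": ([0.0, 2.0, 0.0], 1.0),
}
print(fuse_modalities(example_inputs))
```

Normalizing before averaging keeps one modality from dominating merely because its raw embedding has a larger norm; the weights then control each modality's influence explicitly.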

The overall pipeline of Point-LLM is as follows. Following LLaMA-Adapter and ImageBind-LLM, we efficiently fine-tune LLaMA 7B to give it 3D instruction-following capability.
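LLaMA-Adapter and ImageBind-LLM keep LLaMA frozen and train only a small number of added parameters, using zero-initialized gating so that the injected condition has no effect at the start of training. The sketch below is a simplified, hypothetical illustration of that idea (a scalar gate and an additive injection of one feature into every word token), not the actual Point-LLM layer; `gated_inject` and the toy vectors are our own.

```python
def gated_inject(word_tokens, visual_feature, gate):
    """Add a gated conditioning feature to every word token.

    Sketch of zero-initialized gating: with gate == 0.0 (its value at the
    start of fine-tuning) the frozen LLM's token representations pass
    through unchanged, so training starts from the original LLaMA behavior.
    """
    return [
        [t + gate * v for t, v in zip(token, visual_feature)]
        for token in word_tokens
    ]


tokens = [[0.1, 0.2], [0.3, 0.4]]  # hypothetical hidden states of two word tokens
visual = [1.0, -1.0]               # hypothetical projected 3D feature

print(gated_inject(tokens, visual, gate=0.0))  # identical to `tokens`
print(gated_inject(tokens, visual, gate=0.5))  # 3D signal blended into each token
```

Because the gate is learned, the model can increase the influence of the 3D feature gradually instead of disrupting the pre-trained language model at the first update.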

Please refer to Install.md to set up the environment and prepare the pre-trained checkpoints.

We provide simple inference scripts to verify the embedding alignment for 3D and other modalities in Point-Bind.

Run `python demo_text_3d.py` with the following input:

```python
text_list = ['An airplane', 'A car', 'A toilet']
point_paths = ["examples/airplane.pt", "examples/car.pt", "examples/toilet.pt"]
```

Output similarity matrix:

```
Text x Point Cloud
tensor([[1.0000e+00, 6.5731e-09, 6.5958e-10],
        [1.7373e-06, 9.9998e-01, 1.7816e-05],
        [2.1133e-10, 3.4070e-08, 1.0000e+00]])
```
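Each row of this matrix sums to 1 (up to rounding), which is consistent with a softmax over the point clouds for each text query. A minimal sketch of how such a matrix can be produced from joint-space embeddings, assuming L2-normalized features, cosine similarity, and a fixed temperature `scale` (our assumption; the demo script's exact scaling may differ). The embeddings below are hypothetical toy vectors, not Point-Bind outputs.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)  # subtract the max before exponentiating
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_matrix(query_embs, point_embs, scale=100.0):
    """Row-wise softmax over scaled cosine similarities.

    Row i shows how strongly query i (e.g. a text prompt) matches each
    point cloud; every row sums to 1. `scale` is an assumed temperature.
    """
    q = [l2_normalize(v) for v in query_embs]
    p = [l2_normalize(v) for v in point_embs]
    return [
        softmax([scale * sum(a * b for a, b in zip(qi, pj)) for pj in p])
        for qi in q
    ]


# Hypothetical 3-d embeddings for ['An airplane', 'A car', 'A toilet']
text = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.1, 0.9]]
points = [[1.0, 0.0, 0.1], [0.0, 1.0, 0.0], [0.1, 0.0, 1.0]]
for row in similarity_matrix(text, points):
    print([round(x, 4) for x in row])
```

A large `scale` sharpens the softmax, which is why well-aligned pairs can show values such as `1.0000e+00` on the diagonal and near-zero values elsewhere.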

Run `python demo_audio_3d.py` with the following input:

```python
audio_paths = ["examples/airplane_audio.wav", "examples/car_audio.wav", "examples/toilet_audio.wav"]
point_paths = ["examples/airplane.pt", "examples/car.pt", "examples/toilet.pt"]
```

Output similarity matrix:

```
Audio x Point Cloud:
tensor([[0.9907, 0.0041, 0.0051],
        [0.0269, 0.9477, 0.0254],
        [0.0057, 0.0170, 0.9773]])
```
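Since the i-th audio clip and the i-th point cloud describe the same object, alignment can be checked by taking the arg-max of each row and comparing it with the diagonal. A small sketch that does this on the matrix printed above; `retrieve` and `top1_accuracy` are hypothetical helpers, not part of the repository.

```python
# Audio x Point Cloud similarities, copied from the demo output above
audio_x_point = [
    [0.9907, 0.0041, 0.0051],
    [0.0269, 0.9477, 0.0254],
    [0.0057, 0.0170, 0.9773],
]
labels = ["airplane", "car", "toilet"]


def argmax(row):
    """Index of the largest entry in a row."""
    return max(range(len(row)), key=row.__getitem__)


def retrieve(sim, labels):
    """For each query row, return the label of the best-matching point cloud."""
    return [labels[argmax(row)] for row in sim]


def top1_accuracy(sim):
    """Fraction of rows whose maximum lies on the diagonal (query i <-> point i)."""
    hits = sum(1 for i, row in enumerate(sim) if argmax(row) == i)
    return hits / len(sim)


print(retrieve(audio_x_point, labels))  # ['airplane', 'car', 'toilet']
print(top1_accuracy(audio_x_point))     # 1.0
```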

For 3D zero-shot classification, please follow DATASET.md to download ModelNet40 and place it under `data/modelnet40_normal_resampled/`. Then run `bash scripts/pointbind_i2pmae.sh` or `bash scripts/pointbind_pointbert.sh` to evaluate Point-Bind with the I2P-MAE or Point-BERT encoder, respectively.
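Zero-shot classification is possible because class names can be embedded as text in the same space as the point clouds: each shape is assigned the class whose text embedding is most similar. A minimal sketch of that decision rule, with hypothetical toy embeddings standing in for Point-Bind's encoders; the evaluation scripts may differ in prompt templates, ensembling, and scaling, and all names here are our own.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(point_emb, class_text_embs):
    """Return the index of the class whose text embedding is closest to the point cloud."""
    scores = [cosine(point_emb, t) for t in class_text_embs]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(point_embs, targets, class_text_embs):
    """Top-1 accuracy in percent."""
    preds = [zero_shot_classify(p, class_text_embs) for p in point_embs]
    return 100.0 * sum(p == t for p, t in zip(preds, targets)) / len(targets)


# Hypothetical: one text embedding per class name, e.g. from a prompt such as
# "a point cloud of a {class}" (the prompt template is an assumption).
class_names = ["airplane", "car", "toilet"]
class_text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical 3D embeddings of three test shapes and their ground-truth labels
point_embs = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.6, 0.1, 0.5]]
targets = [0, 1, 2]

print([class_names[zero_shot_classify(p, class_text_embs)] for p in point_embs])
# ['airplane', 'car', 'airplane']
print(f"{accuracy(point_embs, targets, class_text_embs):.1f}%")  # 66.7%
```

No classifier is trained on ModelNet40 here: adding a new class only requires embedding its name, which is what makes the setting zero-shot.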

Zero-shot classification accuracy comparison:

| Model | Encoder | ModelNet40 Acc. (%) |
|---|---|---|
| PointCLIP | 2D CLIP | 20.2 |
| ULIP | Point-BERT | 60.4 |
| PointCLIP V2 | 2D CLIP | 64.2 |
| ULIP 2 | Point-BERT | 66.4 |
| Point-Bind | Point-BERT | 76.3 |
| Point-Bind | I2P-MAE | 78.0 |

- Obtain the LLaMA backbone weights using this form. Please note that checkpoints from unofficial sources (e.g., BitTorrent) may contain malicious code and should be used with care. Organize the downloaded files in the following structure:

  ```
  /path/to/llama_model_weights
  ├── 7B
  │   ├── checklist.chk
  │   ├── consolidated.00.pth
  │   └── params.json
  └── tokenizer.model
  ```

- Other dependent resources will be downloaded automatically at runtime.

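- Here is a simple script for 3D inference with Point-LLM, using the point cloud samples provided in `examples/`. Alternatively, you can run `python demo.py` under `./Point-LLM`.

  ```python
  import ImageBind.data as data
  import llama

  # Directory containing the LLaMA backbone weights
  llama_dir = "/path/to/LLaMA"

  # Load the "7B-beta" model on top of the LLaMA backbone and switch to inference mode
  model = llama.load("7B-beta", llama_dir, knn=True)
  model.eval()

  # Load a sample point cloud onto the GPU and register it as the 'Point' input
  inputs = {}
  point = data.load_and_transform_point_cloud_data(["../examples/airplane.pt"], device='cuda')
  inputs['Point'] = [point, 1]

  # Generate a description conditioned on the point cloud
  results = model.generate(
      inputs,
      [llama.format_prompt("Describe the 3D object in detail:")],
      max_gen_len=256
  )
  result = results[0].strip()
  print(result)
  ```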
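Before running the inference script, a quick check of the LLaMA weights layout can save a failed load. A minimal sketch using only the standard library; `missing_llama_files` is a hypothetical helper, and the expected file list simply mirrors the directory tree given for the 7B weights (other checkpoint variants may use different file names).

```python
from pathlib import Path

# Files expected under the LLaMA weights directory, mirroring the tree above
EXPECTED_FILES = [
    "7B/checklist.chk",
    "7B/consolidated.00.pth",
    "7B/params.json",
    "tokenizer.model",
]


def missing_llama_files(llama_dir):
    """Return the expected files absent from `llama_dir` (an empty list means complete)."""
    root = Path(llama_dir)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


# Replace the placeholder with your actual weights directory
print(missing_llama_files("/path/to/llama_model_weights"))
```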

Try out our web demo, powered by ImageBind-LLM, which supports multiple modalities including 3D point clouds.

- Run the following command to host the demo locally:

  ```bash
  python gradio_app.py --llama_dir /path/to/llama_model_weights
  ```

Ziyu Guo, Renrui Zhang, Xiangyang Zhu, Yiwen Tang, Peng Gao

Other excellent works that incorporate 3D point clouds into LLMs:

- PointLLM: Collects a novel dataset of point-text instruction data to tune LLMs in 3D.

- 3D-LLM: Renders 3D scenes into multi-view images to enable various 3D-related tasks.

If you have any questions about this project, please feel free to contact zhangrenrui@pjlab.org.cn and zyguo@cse.cuhk.edu.hk.