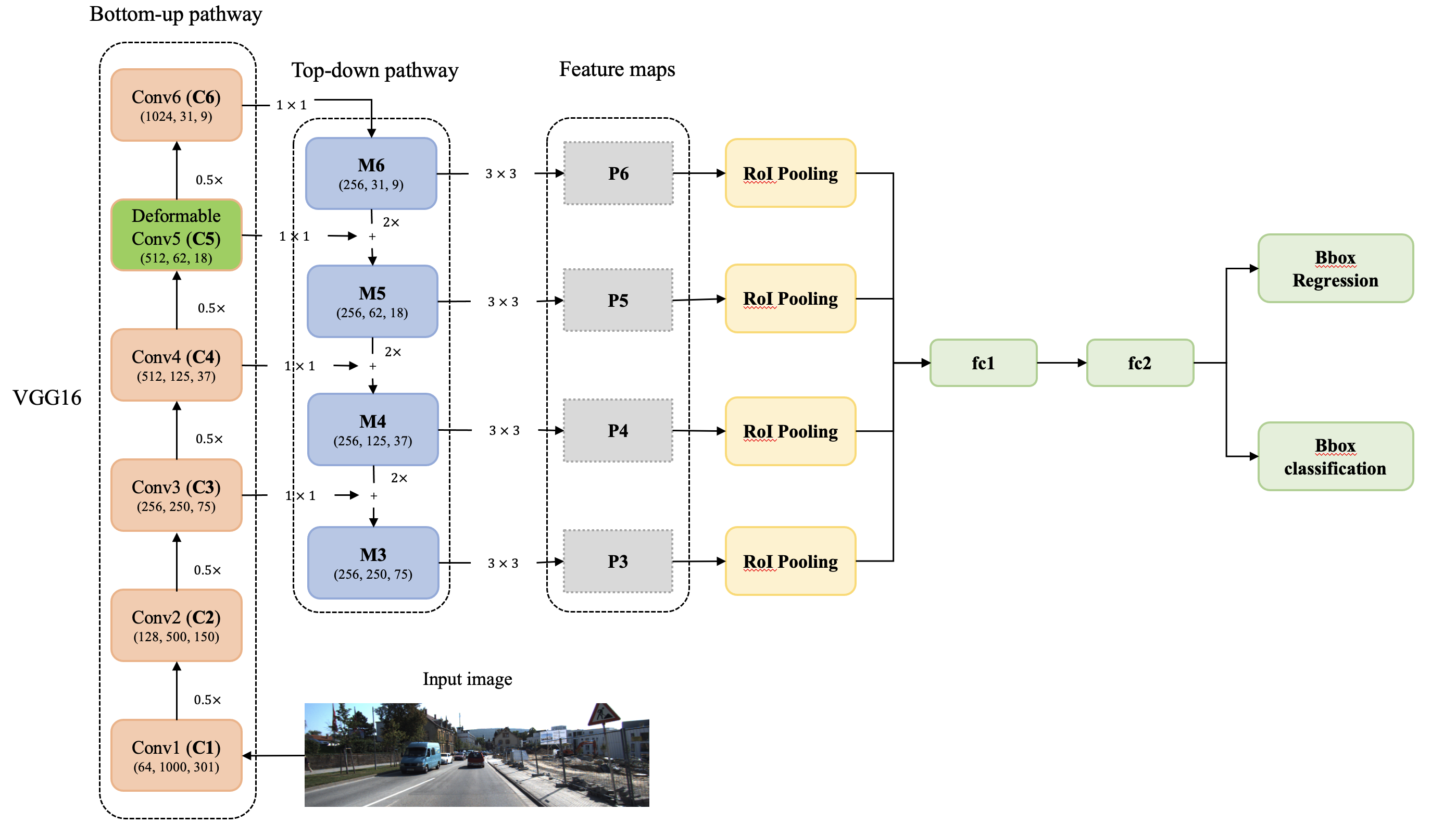

Object Detector for Autonomous Vehicles Based on Improved Faster RCNN

Zirui Wang

- PyTorch implementation of Faster R-CNN based on VGG16.

- Supports Feature Pyramid Network (FPN).

- Supports Deformable Convolution (DCNv1).

- Pretrained model can be found here

1. Introduction

This repository implements a framework that combines a Feature Pyramid Network (FPN) and Deformable Convolution (DCNv1) to improve Faster RCNN on object detection tasks. The whole model is implemented in PyTorch. Trained on the VOC 2007 training set and evaluated on the VOC 2007 test set, it improves on plain Faster RCNN by 1.1% in mAP@[.5,.95] and by 3.95% in mAP@[.75,.95], which demonstrates the effectiveness of the model. The model also supports the KITTI 2D Object Detection dataset: trained on the KITTI 2D object detection training set and evaluated on the validation set, it shows a surprisingly large 11.96% increase in mAP@[.5,.95] and a 23.35% increase in mAP@[.75,.95].
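To make the deformable convolution idea more concrete, here is a minimal pure-Python sketch of how a single 3x3 deformable convolution response can be computed. It is not the code used in this repository: the function names `bilinear_sample` and `deform_conv_at` are hypothetical, and the offsets are passed in by hand, whereas in DCNv1 they are predicted by an additional convolution layer.

```python
import math


def bilinear_sample(feat, y, x):
    """Sample a 2-D grid (list of rows) at a fractional position (y, x).

    Positions outside the grid contribute 0, similar to zero padding.
    """
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Bilinear weight: shrinks linearly with distance to the neighbor.
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                total += weight * feat[yy][xx]
    return total


def deform_conv_at(feat, kernel, offsets, cy, cx):
    """Response of a 3x3 deformable convolution centered at (cy, cx).

    offsets[i][j] is a (dy, dx) shift applied to kernel tap (i, j).
    With all offsets equal to (0, 0) this is an ordinary 3x3 convolution.
    """
    out = 0.0
    for i in range(3):
        for j in range(3):
            dy, dx = offsets[i][j]
            sample_y = cy + (i - 1) + dy
            sample_x = cx + (j - 1) + dx
            out += kernel[i][j] * bilinear_sample(feat, sample_y, sample_x)
    return out


# Illustrative 4x4 feature map, an all-ones kernel, and two offset settings.
feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
ones_kernel = [[1.0] * 3 for _ in range(3)]
zero_offsets = [[(0.0, 0.0)] * 3 for _ in range(3)]
shifted_offsets = [[(0.0, 0.5)] * 3 for _ in range(3)]

regular_response = deform_conv_at(feature, ones_kernel, zero_offsets, 1, 1)
shifted_response = deform_conv_at(feature, ones_kernel, shifted_offsets, 1, 1)
```

With zero offsets the sampling grid is the regular 3x3 neighborhood; non-zero offsets let each tap read from a shifted, possibly fractional location, which is what allows the receptive field to adapt to object shape.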

2. Experimental Results

- Detection results on PASCAL VOC 2007 test set

- All models were evaluated using COCO-style detection evaluation metrics.

| Training dataset | Model | mAP@[.5,.95] | mAP@[.75,.95] |
|---|---|---|---|
| VOC 07 | Faster RCNN | 69.65 | 31.14 |
| VOC 07 | FPN + Faster RCNN | 69.83 | 34.02 |
| VOC 07 | Deform + Faster RCNN | 69.93 | 30.85 |
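As a rough illustration of what the COCO-style metrics measure, the sketch below computes the intersection over union (IoU) of two boxes and lists the IoU thresholds over which average precision is averaged. It assumes that mAP@[.5,.95] follows the COCO convention of thresholds from 0.50 to 0.95 in steps of 0.05, and that mAP@[.75,.95] uses the analogous range starting at 0.75. The helper names are hypothetical and this is not the evaluation code of this repository.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (0 if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_thresholds(start, stop=0.95, step=0.05):
    """Evenly spaced IoU thresholds from start to stop, inclusive."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(count)]


# A detection counts as correct at a threshold t only if IoU >= t,
# so higher thresholds reward tighter localization.
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
coco_range = iou_thresholds(0.5)
strict_range = iou_thresholds(0.75)
```

Under this reading, the second metric column only rewards detections that overlap the ground truth tightly, which is why it is much lower than the first column for every model.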

- Detection results on KITTI 2d Object Detection validation set

- All models were evaluated using COCO-style detection evaluation metrics.

| Training dataset | Model | mAP@[.5,.95] | mAP@[.75,.95] |
|---|---|---|---|
| KITTI 2d | Faster RCNN | 71.58 | 32.40 |
| KITTI 2d | FPN + Faster RCNN | 82.76 | 56.02 |
| KITTI 2d | Deform + Faster RCNN | 71.73 | 33.16 |
| KITTI 2d | FPN + Deform + Faster RCNN | 82.59 | 56.30 |
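For readers who want to compare the KITTI variants quickly, the following small sketch computes the absolute difference (in points) of each variant against the Faster RCNN row, using only the numbers from the table above. The dictionary layout and the function name `gains_over_baseline` are illustrative choices.

```python
# (mAP@[.5,.95], mAP@[.75,.95]) per model, copied from the KITTI table.
kitti_results = {
    "Faster RCNN": (71.58, 32.40),
    "FPN + Faster RCNN": (82.76, 56.02),
    "Deform + Faster RCNN": (71.73, 33.16),
    "FPN + Deform + Faster RCNN": (82.59, 56.30),
}


def gains_over_baseline(results, baseline="Faster RCNN"):
    """Absolute score differences of every model relative to the baseline row."""
    base = results[baseline]
    return {
        name: tuple(round(score - ref, 2) for score, ref in zip(scores, base))
        for name, scores in results.items()
        if name != baseline
    }


kitti_gains = gains_over_baseline(kitti_results)
for model, (gain_50, gain_75) in kitti_gains.items():
    print(f"{model}: {gain_50:+.2f} / {gain_75:+.2f}")
```

In this table the variants that include FPN account for almost all of the gain, while adding deformable convolution alone changes the scores by less than one point.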

3. Requirements

- numpy

- six

- torch

- torchvision

- tqdm

- cv2

- defaultdict

- itertools

- namedtuple

- skimage

- xml

- pascal_voc_writer

- PIL
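The names above are Python import names rather than package names. As a convenience, the sketch below checks which of them can be found in the current environment. It is a hypothetical helper, not part of the repository. Note that `defaultdict` and `namedtuple` are provided by the standard-library `collections` module (so that module is checked instead), `itertools` and `xml` are also part of the standard library, and `cv2`, `skimage` and `PIL` are installed by the `opencv-python`, `scikit-image` and `Pillow` packages respectively.

```python
import importlib.util

# Import names taken from the list above; "collections" stands in for
# defaultdict and namedtuple, which are not modules themselves.
REQUIRED_MODULES = [
    "numpy", "six", "torch", "torchvision", "tqdm", "cv2",
    "collections", "itertools", "skimage", "xml",
    "pascal_voc_writer", "PIL",
]


def find_missing(modules):
    """Return the module names that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```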

4. Usage

4.1 Data preparation

- Download the training, validation, and test data.

# VOC 2007 trainval and test datasets

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

# KITTI 2d Object Detection training set and groundtruth labels

wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_object_image_2.zip

wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_object_label_2.zip

- Extract the archives into two separate directories named `VOCdevkit` and `KITTIdevkit`.

# VOC 2007 trainval and test datasets

mkdir VOCdevkit && cd VOCdevkit

tar xvf VOCtrainval_06-Nov-2007.tar

tar xvf VOCtest_06-Nov-2007.tar

# KITTI 2d Object Detection trainset and labels (following last command)

cd ..

mkdir KITTIdevkit && cd KITTIdevkit

unzip data_object_image_2.zip

unzip data_object_label_2.zip

- The KITTI dataset needs to be rearranged to match the following structure.

dataset
├── KITTIdevkit
│   └── training
│       ├── image_2
│       └── label_2
└── VOCdevkit
    └── VOC2007
        ├── Annotations
        ├── ImageSets
        ├── JPEGImages
        ├── SegmentationClass
        └── SegmentationObject

- Convert the KITTI dataset into the PASCAL VOC 2007 dataset format using the dataset format conversion script.

# return to the root directory of the project code

cd ./improved_faster_rcnn

# change to the directory that contains the format conversion script

cd data

# run the dataset format conversion script

python kitti2voc.py

- After running the above command, the KITTI data should have the same dataset structure as VOC 2007 and is ready to be loaded into the model.
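To illustrate what such a conversion involves, here is a minimal sketch that parses one KITTI label line and writes a VOC-style XML annotation using only the standard library. It is not the repository's `kitti2voc.py` (which may work differently, for example by using `pascal_voc_writer`). The function names, the sample label line, and the image size of 1242 x 375 are assumptions made for illustration. The sketch relies on the KITTI label layout in which the first field is the object type and fields 5 to 8 are the left, top, right and bottom box coordinates in pixels.

```python
import xml.etree.ElementTree as ET


def parse_kitti_line(line):
    """Extract the class name and 2-D box from one KITTI label line."""
    fields = line.split()
    left, top, right, bottom = (float(value) for value in fields[4:8])
    # VOC annotations store integer pixel coordinates; truncation is an
    # illustrative choice here.
    return {"name": fields[0], "bbox": (int(left), int(top), int(right), int(bottom))}


def to_voc_xml(filename, width, height, objects):
    """Build a minimal PASCAL VOC annotation as an XML string."""
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", 3)):
        ET.SubElement(size, tag).text = str(value)
    for obj in objects:
        node = ET.SubElement(root, "object")
        ET.SubElement(node, "name").text = obj["name"]
        ET.SubElement(node, "difficult").text = "0"
        box = ET.SubElement(node, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), obj["bbox"]):
            ET.SubElement(box, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")


# Illustrative label line in KITTI format (15 whitespace-separated fields).
sample_line = ("Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 "
               "1.89 0.48 1.20 1.84 1.47 8.41 0.01")
sample_object = parse_kitti_line(sample_line)
sample_xml = to_voc_xml("000000.png", 1242, 375, [sample_object])
```

The directory layout expected after the actual conversion script has run is listed next.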

dataset
├── KITTI2VOC
│   ├── Annotations
│   ├── ImageSets
│   └── JPEGImages
└── VOCdevkit
    └── VOC2007
        ├── Annotations
        ├── ImageSets
        ├── JPEGImages
        ├── SegmentationClass
        └── SegmentationObject

4.2 Train models

- You can easily modify the training parameters in `utils/config.py` and run the following script to train the model.

# if you are in a local environment, run:

python3 ./train.py

# if you are in a conda environment, run:

python ./train.py

4.3 Test models

- You can easily modify the testing parameters in `utils/config.py` and run the script below to test the model.

- You can visualize the test images by setting `visualize=True` in the configuration file.

- The output images are placed under `save_dir/visuals`, as specified in the configuration file.

- Ex 1) FPN based on VGG16 (file name: "fpn_vgg16_1.pth")

# if you are in local environemnt, run:

python3 ./test.py

# if you are in conda environment, run:

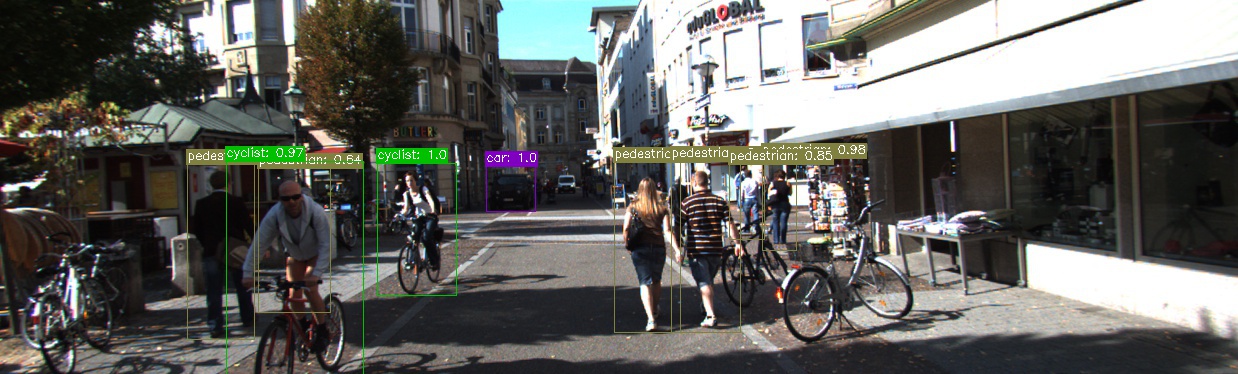

python ./test.py4.4 Example output images

- Below are some of the resulting images from visualization