TDS

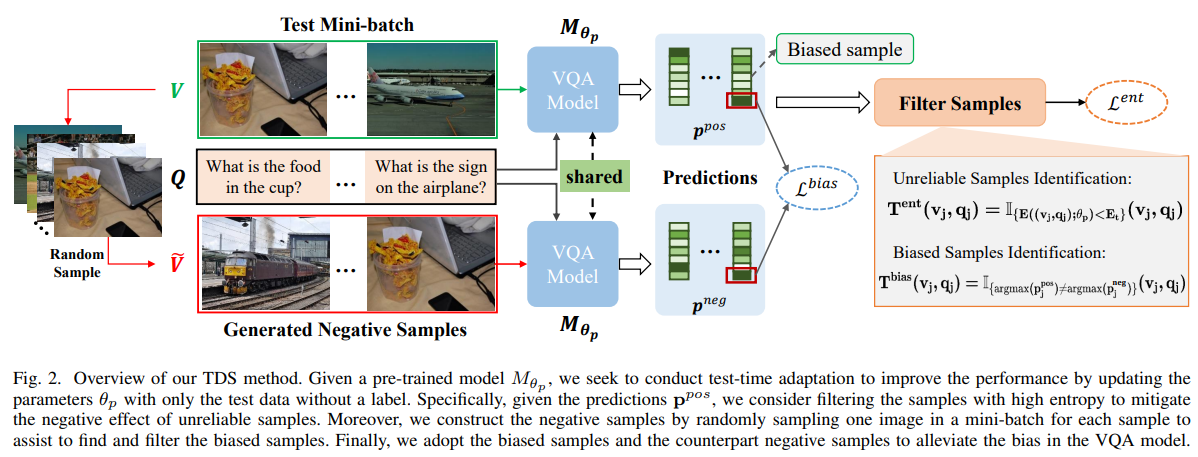

We provide the PyTorch implementation of "Test-Time Model Adaptation for Visual Question Answering with Debiased Self-Supervisions" (IEEE TMM 2023).

Dependencies

- Python 3.6

- PyTorch 1.1.0

- Other dependencies listed in requirements.txt

- We train and evaluate all models on a single TITAN Xp GPU.

Getting Started

Installation

- Clone this repository:

git clone https://github.com/Zhiquan-Wen/TDS.git
cd TDS

- Install PyTorch and other dependencies:

pip install -r requirements.txt

- Download and preprocess the data:

cd data

bash download.sh

python preprocess_features.py --input_tsv_folder xxx.tsv --output_h5 xxx.h5

python feature_preprocess.py --input_h5 xxx.h5 --output_path trainval

python create_dictionary.py --dataroot vqacp2/

python preprocess_text.py --dataroot vqacp2/ --version v2

cd ..
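The region features consumed by preprocess_features.py come as .tsv files. Assuming they follow the common bottom-up-attention layout (one row per image: image id, width, height, number of boxes, then base64-encoded float32 arrays for the boxes and the features), a minimal stdlib-only sketch of decoding them looks like this. The column names, the 2048-d feature size, and the helpers `decode_row`/`read_tsv` are assumptions for illustration, not code taken from this repository.

```python
import base64
import csv
from array import array

# Assumed bottom-up-attention column layout; not read from preprocess_features.py.
FIELDNAMES = ["image_id", "image_w", "image_h", "num_boxes", "boxes", "features"]


def decode_row(row, feature_dim=2048):
    """Decode one TSV row into per-box box coordinates and feature vectors (hypothetical helper)."""
    num_boxes = int(row["num_boxes"])

    def decode(field, dim):
        # Assumed: base64 of a row-major float32 buffer of shape (num_boxes, dim),
        # in native byte order (little-endian on x86).
        flat = array("f")
        flat.frombytes(base64.b64decode(row[field]))
        if len(flat) != num_boxes * dim:
            raise ValueError(f"{field}: expected {num_boxes * dim} floats, got {len(flat)}")
        return [flat[i * dim:(i + 1) * dim].tolist() for i in range(num_boxes)]

    return {
        "image_id": int(row["image_id"]),
        "image_w": int(float(row["image_w"])),
        "image_h": int(float(row["image_h"])),
        "boxes": decode("boxes", 4),
        "features": decode("features", feature_dim),
    }


def read_tsv(path, feature_dim=2048):
    """Yield decoded rows from a single TSV file (hypothetical helper)."""
    # Feature columns are far longer than csv's default 128 KB field limit.
    csv.field_size_limit(2**31 - 1)
    with open(path, newline="") as f:
        reader = csv.DictReader(f, delimiter="\t", fieldnames=FIELDNAMES)
        for row in reader:
            yield decode_row(row, feature_dim)
```

Decoding into plain lists keeps the sketch dependency-free; a real converter would typically write the arrays into the .h5 file with h5py and NumPy instead.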

- Download the baseline models pre-trained on the VQA-CP v2 training set.

Test-Time Adaptation

- Evaluate and train our model simultaneously:

CUDA_VISIBLE_DEVICES=0 python TDS.py --dataroot data/vqacp2/ --img_root data/coco/trainval_features --output saved_models_cp2/test.json --batch_size 512 --learning_rate 0.01 --rate 0.2 --checkpoint_path path_for_pretrained_UpDn_model
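Because evaluation and adaptation happen in the same pass, each test batch is both scored and used for an update. The toy sketch below illustrates only that online protocol in plain Python: the prediction for a batch is recorded before the model is updated on it. As a stand-in self-supervised objective it uses plain entropy minimization over a hypothetical learnable per-answer logit offset; this is NOT the debiased self-supervision proposed in the paper, and `adapt_online`, `model_logits`, `offset`, and `lr` are illustrative names, not options of TDS.py.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def adapt_online(batches, model_logits, num_answers, lr=0.1):
    """Toy online test-time adaptation with an entropy-minimization stand-in (not TDS).

    model_logits(x) returns frozen logits for input x; only an additive
    per-answer offset on those logits is adapted.
    """
    offset = [0.0] * num_answers
    predictions = []
    for batch in batches:
        probs = [softmax([a + b for a, b in zip(model_logits(x), offset)]) for x in batch]
        # 1) Evaluate first: record each answer before this batch's update.
        predictions.extend(max(range(num_answers), key=p.__getitem__) for p in probs)
        # 2) Then adapt: dH/dz_j = -p_j * (log p_j + H), averaged over the batch.
        grad = [0.0] * num_answers
        for p in probs:
            h = entropy(p)
            for j, pj in enumerate(p):
                if pj > 0:
                    grad[j] += -pj * (math.log(pj) + h) / len(probs)
        offset = [o - lr * g for o, g in zip(offset, grad)]
    return predictions, offset
```

Recording predictions before each update keeps the reported accuracy honest for an online setting: no test sample is scored after the model has already been adapted on it.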

Evaluation

- Compute the detailed accuracy for each answer type from a JSON results file:

python comput_score.py --input saved_models_cp2/test.json --dataroot data/vqacp2/
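For reference, the commonly used simplified VQA accuracy gives a predicted answer a score of min(number of matching human answers / 3, 1), and per-type scores average it over the yes/no, number, and other questions. The sketch below assumes predictions as a mapping from question_id to answer and annotations as a mapping from question_id to (answer type, list of human answers); these structures, the simplified formula, and the lower-case/strip normalization are assumptions and may differ from what comput_score.py does (the official VQA evaluation additionally averages over subsets of annotators and normalizes punctuation and number words).

```python
from collections import defaultdict


def vqa_accuracy(pred, human_answers):
    """Simplified VQA accuracy: full credit if at least 3 annotators gave the answer."""
    pred = pred.strip().lower()
    matches = sum(1 for a in human_answers if a.strip().lower() == pred)
    return min(matches / 3.0, 1.0)


def score_by_type(predictions, annotations):
    """Return the mean accuracy per answer type plus an overall 'all' entry."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for qid, (answer_type, human_answers) in annotations.items():
        # Unanswered questions are scored as wrong (empty prediction).
        acc = vqa_accuracy(predictions.get(qid, ""), human_answers)
        for key in (answer_type, "all"):
            totals[key] += acc
            counts[key] += 1
    return {key: totals[key] / counts[key] for key in counts}
```

If the results file uses the standard VQA results format (a list of objects with "question_id" and "answer" keys), it can be turned into the assumed predictions mapping with a dictionary comprehension after json.load.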

Quick Reproduce

- Prepare the environment: we provide a Docker image (built from the Dockerfile) that includes the above dependencies. You can pull it from Docker Hub or from the Aliyun registry:

docker pull zhiquanwen/debias_vqa:v1

docker pull registry.cn-shenzhen.aliyuncs.com/wenzhiquan/debias_vqa:v1

docker tag registry.cn-shenzhen.aliyuncs.com/wenzhiquan/debias_vqa:v1 zhiquanwen/debias_vqa:v1

- Start the Docker container, mounting the dataset into it:

docker run --gpus all -it --ipc=host --network=host --shm-size 32g -v /host/path/to/data:/xxx:ro zhiquanwen/debias_vqa:v1

- Running: follow the Download and preprocess the data, Test-Time Adaptation, and Evaluation steps in Getting Started.

Reference

If you find this code useful, please cite the following paper:

@inproceedings{TDS,
  title = {Test-Time Model Adaptation for Visual Question Answering with Debiased Self-Supervisions},
  author = {Zhiquan Wen and Shuaicheng Niu and Ge Li and Qingyao Wu and Mingkui Tan and Qi Wu},
  booktitle = {IEEE TMM},
  year = {2023}
}