Neural Style Transfer

📄 PyTorch implementation of "A Neural Algorithm of Artistic Style" (arXiv:1508.06576)

Background

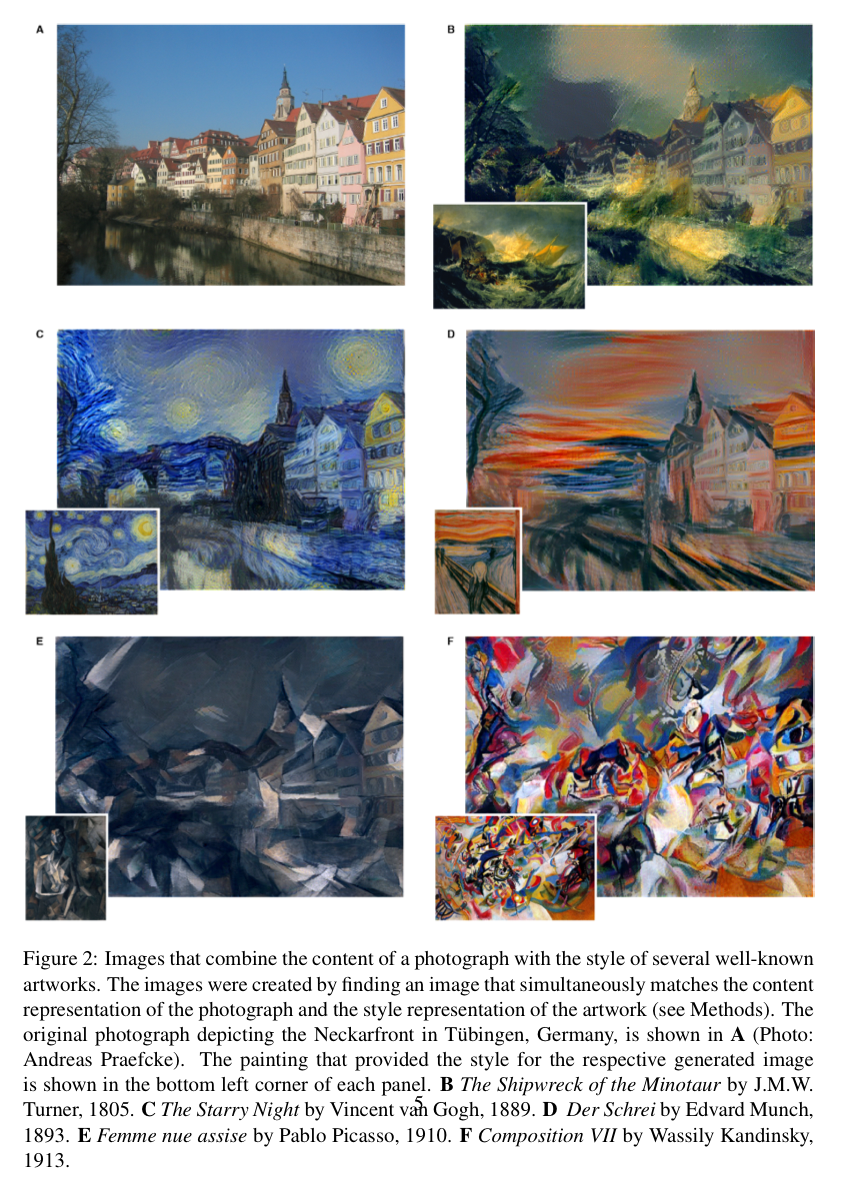

To generate an image, we use backpropagation to minimize a loss value. The input image that the art style will be transferred to is called the content image, and the image that supplies the art style is called the style image.

The generated image is initialized to random noise. We then pass the generated image, the style image, and the content image through a neural network whose parameters were pre-trained on ImageNet, as described in the paper.
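A minimal sketch of the noise initialization described above, assuming images are float tensors of shape (1, 3, H, W). The helper name `init_generated_image` and the uniform noise range are illustrative assumptions, not taken from this repository.

```python
import torch


def init_generated_image(content_img: torch.Tensor, seed: int = 0) -> torch.Tensor:
    """Return a random-noise image shaped like `content_img` (hypothetical helper)."""
    generator = torch.Generator().manual_seed(seed)
    # Uniform noise in [0, 1); the generated image matches the content image's shape.
    noise = torch.rand(content_img.shape, generator=generator)
    # The image itself is what gets optimized, so it must track gradients.
    return noise.requires_grad_(True)


content_img = torch.zeros(1, 3, 64, 64)  # stand-in for a loaded content image
generated_img = init_generated_image(content_img)
```

Because the returned tensor has `requires_grad` set, backpropagation can deliver gradients with respect to the pixels rather than the network weights.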

Model architecture

The feature space is provided by the 16 convolutional and 5 pooling layers of the 19-layer VGG network, without any of the fully connected layers.

For image synthesis, replacing the max-pooling operation with average pooling improves the gradient flow and yields slightly more appealing results.
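One way to perform the pooling swap is sketched below on a tiny stand-in network. The helper `replace_max_with_avg_pool` is hypothetical; with torchvision installed, one would presumably pass the `features` module of a pre-trained VGG-19 instead of the toy block used here.

```python
import torch
from torch import nn


def replace_max_with_avg_pool(features: nn.Sequential) -> nn.Sequential:
    """Build a new Sequential that reuses the layers but swaps MaxPool2d for AvgPool2d."""
    layers = []
    for layer in features:
        if isinstance(layer, nn.MaxPool2d):
            # Keep the same window geometry so feature-map sizes are unchanged.
            layers.append(nn.AvgPool2d(kernel_size=layer.kernel_size,
                                       stride=layer.stride,
                                       padding=layer.padding))
        else:
            layers.append(layer)
    return nn.Sequential(*layers)


# Tiny stand-in for a VGG-style block (conv, ReLU, pool), not the real VGG-19.
toy_features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
)
avg_features = replace_max_with_avg_pool(toy_features)
out = avg_features(torch.zeros(1, 3, 32, 32))
```

The convolution layers are shared with the original module rather than copied, so any pre-trained weights carry over unchanged.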

Optimization method

- For the content image, perform gradient descent on a white noise image to find another image that matches the feature responses of the original image.

- For the style image, perform gradient descent on a white noise image to find another image that matches the style representation of the original image.
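The content case in the list above can be sketched as plain gradient descent on the pixels. This toy uses a single fixed random convolution as the feature extractor instead of a trained VGG, and the learning rate and step count are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in feature extractor: one fixed random conv layer, not a trained VGG.
extractor = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3, padding=1), nn.ReLU())
for param in extractor.parameters():
    param.requires_grad_(False)  # network weights stay fixed

target_img = torch.rand(1, 3, 16, 16)  # plays the role of the original image
target_features = extractor(target_img)

x = torch.rand(1, 3, 16, 16, requires_grad=True)  # white-noise starting image
learning_rate = 0.05  # arbitrary choice for this toy problem
losses = []
for _ in range(100):
    loss = 0.5 * ((extractor(x) - target_features) ** 2).sum()
    (grad,) = torch.autograd.grad(loss, x)
    with torch.no_grad():
        x -= learning_rate * grad  # plain gradient-descent step on the pixels
    losses.append(loss.item())
```

The style case follows the same loop, with the feature difference replaced by a difference of style representations.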

Loss function

Content loss function

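Let $\overrightarrow{p}$ and $\overrightarrow{x}$ be the original image and the generated image, and let $P^{l}$ and $F^{l}$ be their respective feature representations in layer $l$. The squared-error loss between the two feature representations is

$$ \mathcal{L}_{\text{content}}(\overrightarrow{p}, \overrightarrow{x}, l) = \frac{1}{2} \sum \limits_{i,j} (F_{ij}^{l} - P_{ij}^{l})^2, $$

where $F_{ij}^{l}$ is the activation of the $i$-th filter at position $j$ in layer $l$.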
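A small sketch of the content loss formula, assuming the feature maps of the generated and original images are already available as tensors of the same shape. The function name `content_loss` is illustrative.

```python
import torch


def content_loss(generated_features: torch.Tensor,
                 content_features: torch.Tensor) -> torch.Tensor:
    """Half the summed squared difference between F^l and P^l."""
    return 0.5 * ((generated_features - content_features) ** 2).sum()


F = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # toy F^l: 2 filters, 2 positions
P = torch.tensor([[0.0, 2.0], [3.0, 2.0]])  # toy P^l
loss = content_loss(F, P)  # 0.5 * (1 + 0 + 0 + 4) = 2.5
```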

Style loss function

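The style representation computes the correlations between the different filter responses, where the expectation is taken over the spatial extent of the input image.

The feature correlations are given by the Gram matrix $G^{l}$, where $G_{ij}^{l}$ is the inner product between the vectorized feature maps $i$ and $j$ in layer $l$:

$$ G_{ij}^{l} = \sum \limits_{k} F_{ik}^{l} F_{jk}^{l}. $$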
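As a rough illustration, the Gram matrix can be computed by flattening each feature map into a row vector and taking all pairwise inner products. The sketch assumes a single image with features of shape (N_l, H, W); the function name is illustrative.

```python
import torch


def gram_matrix(features: torch.Tensor) -> torch.Tensor:
    """Gram matrix G^l of a feature map with shape (N_l, H, W)."""
    num_filters = features.shape[0]
    flat = features.reshape(num_filters, -1)  # F^l with shape (N_l, M_l)
    return flat @ flat.T                      # G_ij = sum_k F_ik * F_jk


features = torch.tensor([[[1.0, 2.0]], [[3.0, 4.0]]])  # N_l = 2, H = 1, W = 2
G = gram_matrix(features)
```

For this toy input the result is a symmetric 2 x 2 matrix: the diagonal holds each filter's squared norm and the off-diagonal entries hold the correlation between the two filters.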

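We minimize the mean-squared distance between the entries of the Gram matrix of the original image and the Gram matrix of the image to be generated. Let $\overrightarrow{a}$ and $\overrightarrow{x}$ be the original image and the generated image, and let $A^{l}$ and $G^{l}$ be their respective style representations in layer $l$.

The contribution of that layer to the total loss is then

$$ E_l = \frac{1}{4 N_l^2 M_l^2} \sum \limits_{i,j} (G_{ij}^{l} - A_{ij}^{l})^2 $$

and the total style loss is

$$ \mathcal{L}_{\text{style}} (\overrightarrow{a}, \overrightarrow{x}) = \sum \limits_{l=0}^{L} w_l E_l, $$

where $N_l$ is the number of feature maps in layer $l$, $M_l$ is the size (height times width) of each feature map, and $w_l$ is the weighting factor of the contribution of layer $l$ to the total loss.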
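A hedged sketch of the style loss, assuming the per-layer term is the squared Gram-matrix difference normalized by $4 N_l^2 M_l^2$ as in the paper, with $N_l$ the number of feature maps and $M_l$ the number of spatial positions. All function names here are illustrative.

```python
import torch


def gram_matrix(features):
    """Gram matrix of a feature map with shape (N_l, H, W)."""
    flat = features.reshape(features.shape[0], -1)
    return flat @ flat.T


def layer_style_loss(generated_features, style_features):
    """E_l for one layer; both inputs have shape (N_l, H, W)."""
    n_l = generated_features.shape[0]     # number of feature maps
    m_l = generated_features[0].numel()   # height * width of one map
    G = gram_matrix(generated_features)
    A = gram_matrix(style_features)
    return ((G - A) ** 2).sum() / (4.0 * n_l ** 2 * m_l ** 2)


def style_loss(generated_layers, style_layers, weights):
    """Weighted sum of E_l over the chosen layers."""
    return sum(w * layer_style_loss(g, a)
               for g, a, w in zip(generated_layers, style_layers, weights))


generated = torch.tensor([[[1.0, 2.0]], [[3.0, 4.0]]])  # N_l = 2, M_l = 2
style = torch.zeros_like(generated)
total = style_loss([generated, generated], [style, style], weights=[0.5, 0.5])
```

Here the same toy layer is used twice with weights of 0.5 each, so the total equals the single-layer value.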

Total loss function

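We jointly minimize the distance of a white noise image from the content representation of the photograph in one layer of the network and from the style representation of the painting in a number of layers of the CNN. Let $\overrightarrow{p}$ be the photograph, $\overrightarrow{a}$ the artwork, and $\overrightarrow{x}$ the generated image. The loss function to minimize is

$$ \mathcal{L}_{\text{total}}(\overrightarrow{p}, \overrightarrow{a}, \overrightarrow{x}) = \alpha \mathcal{L}_{\text{content}}(\overrightarrow{p}, \overrightarrow{x}) + \beta \mathcal{L}_{\text{style}} (\overrightarrow{a}, \overrightarrow{x}), $$

where $\alpha$ and $\beta$ are the weighting factors for content and style reconstruction, respectively.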
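The weighted combination can be sketched as follows. The two loss terms are toy scalar functions of a two-element "image", and the default values of `alpha` and `beta` are placeholders rather than settings used in this repository.

```python
import torch


def total_loss(content_term, style_term, alpha=1.0, beta=1e3):
    """Weighted sum of the content and style terms (weights are placeholders)."""
    return alpha * content_term + beta * style_term


x = torch.tensor([1.0, 2.0], requires_grad=True)  # toy stand-in for the image
content_term = 0.5 * (x ** 2).sum()               # toy content term: 2.5
style_term = ((x - 1.0) ** 2).sum()               # toy style term: 1.0
loss = total_loss(content_term, style_term, alpha=1.0, beta=10.0)
loss.backward()  # gradients of both terms reach the image through one backward pass
```

Raising `beta` relative to `alpha` makes the style term dominate the gradient, which pushes the result toward the style image's texture at the expense of content fidelity.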

Dependencies

torch

PIL

scipy

Experiments

Hyper-parameters

Optimizer

I use the Adam optimizer to minimize the total loss. The output image is updated iteratively so that it minimizes the loss: the weights of the network are not updated; instead, the input image itself is trained to minimize the loss. If you are familiar with it, the L-BFGS optimizer is recommended instead.
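The update loop might look like the sketch below, which optimizes the pixels of an image with either Adam or L-BFGS. The helper `optimize_image`, the toy loss, and the Adam learning rate are assumptions for illustration. A closure is used because `torch.optim.LBFGS` requires one, and Adam accepts it as well.

```python
import torch
from torch import optim


def optimize_image(image, loss_fn, optimizer_name="adam", steps=20):
    """Minimize loss_fn(image) over the pixels of `image` (hypothetical helper)."""
    image.requires_grad_(True)
    # Only the image is handed to the optimizer, so no network weights are updated.
    if optimizer_name == "adam":
        optimizer = optim.Adam([image], lr=0.05)
    elif optimizer_name == "lbfgs":
        optimizer = optim.LBFGS([image])
    else:
        raise ValueError(f"unknown optimizer: {optimizer_name}")

    def closure():
        # Recompute the loss and its gradient; L-BFGS may call this several times per step.
        optimizer.zero_grad()
        loss = loss_fn(image)
        loss.backward()
        return loss

    history = []
    for _ in range(steps):
        loss = optimizer.step(closure)
        history.append(loss.item())
    return history


torch.manual_seed(0)
target = torch.full((1, 3, 8, 8), 0.5)


def toy_loss(img):
    """Toy objective: squared distance to a constant gray image."""
    return ((img - target) ** 2).sum()


adam_history = optimize_image(torch.rand(1, 3, 8, 8), toy_loss, "adam", steps=20)
lbfgs_history = optimize_image(torch.rand(1, 3, 8, 8), toy_loss, "lbfgs", steps=5)
```

In a real run, `toy_loss` would be replaced by the total style-transfer loss evaluated through the frozen network.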

    import torch.optim as optim

    def _init_optimizer(self, input_img):
        """Initialize LBFGS optimizer."""
        # Optimize the pixels of the input image, not the network weights.
        self.optimizer = optim.LBFGS([input_img.requires_grad_()])

Generated Artwork

| Content Image | Style Image | Output Image |
|---|---|---|
| | | |
