The code for the ICME 2023 paper “Image-text Retrieval via Preserving Main Semantics of Vision” [pdf].

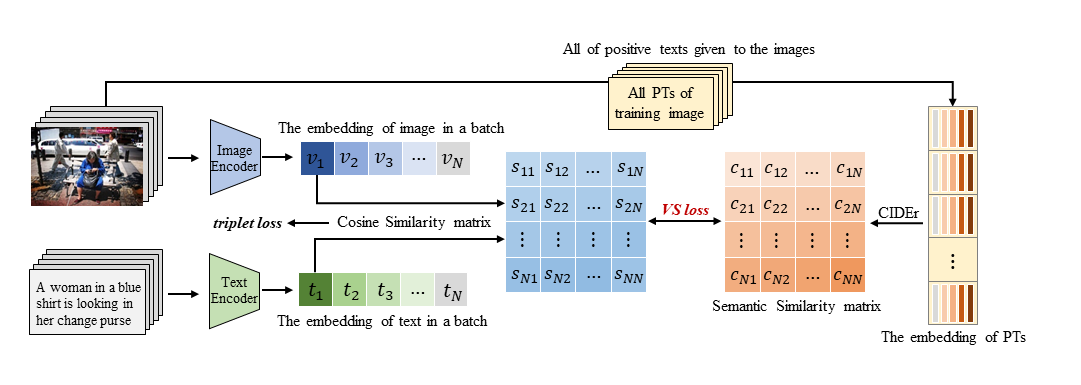

We propose Visual Semantic Loss (VSL), a semantic alignment strategy for image-text retrieval, and verify its effectiveness on top of the two models proposed in SGRAF (SGR and SAF).

The framework of VSL:

Experimental results:

| Dataset | Method | Image to Text | Text to Image | ||||

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| MSCOCO1K | SGR+VSL | 78.5 | 96.2 | 98.6 | 63.0 | 89.9 | 95.3 |

| SAF+VSL | 78.3 | 96.0 | 98.6 | 63.0 | 89.9 | 95.3 | |

| SGRAF+VSL | 80.1 | 96.5 | 98.8 | 64.8 | 90.7 | 95.9 | |

| MSCOCO5K | SGR+VSL | 57.7 | 84.3 | 91.0 | 41.4 | 70.5 | 80.8 |

| SAF+VSL | 56.2 | 84.4 | 91.3 | 41.4 | 70.4 | 81.0 | |

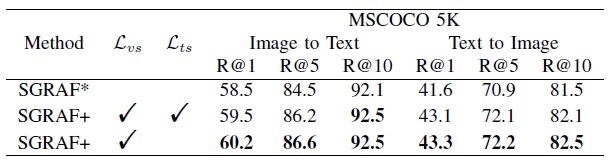

| SGRAF+VSL | 60.2 | 86.6 | 92.5 | 43.3 | 72.2 | 82.5 | |

| Flickr30K | SGR+VSL | 75.7 | 93.5 | 96.5 | 56.5 | 80.9 | 85.9 |

| SAF+VSL | 75.9 | 93.9 | 97.5 | 57.9 | 82.7 | 88.9 | |

| SGRAF+VSL | 79.5 | 95.3 | 97.9 | 60.2 | 84.3 | 89.4 | |
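For readers unfamiliar with the metric in the table above, R@K (Recall at K) is the percentage of queries for which a correct match appears among the top K retrieved items. The following is a minimal pure-Python sketch of that definition, not the evaluation code of this repository; the function name `recall_at_k` and the toy similarity matrix are made up for illustration.

```python
def recall_at_k(sims, gt, ks=(1, 5, 10)):
    """Compute R@K in percent.

    sims[i][j]: similarity between query i and candidate j.
    gt[i]: set of candidate indices that are correct for query i.
    """
    hits = {k: 0 for k in ks}
    for i, row in enumerate(sims):
        # Rank candidates from most to least similar.
        order = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        # Best (smallest) rank position reached by any correct candidate.
        best = min(order.index(j) for j in gt[i])
        for k in ks:
            if best < k:
                hits[k] += 1
    n = len(sims)
    return {k: 100.0 * hits[k] / n for k in ks}


# Toy example (hypothetical values): 3 image queries, 3 captions,
# caption i is the correct match for image i.
sims = [
    [0.9, 0.1, 0.2],  # correct caption ranked 1st
    [0.8, 0.5, 0.1],  # correct caption ranked 2nd
    [0.3, 0.2, 0.1],  # correct caption ranked 3rd
]
gt = [{0}, {1}, {2}]
scores = recall_at_k(sims, gt, ks=(1, 2, 3))
print(scores)
```

In the real benchmarks each image has several ground-truth captions, which is why `gt[i]` is a set rather than a single index.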

- Python 3.7

- PyTorch==1.10.1

- CUDA==11.1

- NumPy==1.21.6

- TensorBoard

- h5py==3.1.0

- Punkt Sentence Tokenizer:

      import nltk
      nltk.download()
      > d punkt

We follow SCAN and SGRAF to obtain image features and vocabularies, which can be downloaded using:

    wget https://iudata.blob.core.windows.net/scan/data.zip
    wget https://iudata.blob.core.windows.net/scan/vocab.zip

Another download link is provided by SGRAF:

https://drive.google.com/drive/u/0/folders/1os1Kr7HeTbh8FajBNegW8rjJf6GIhFqC

Put the pretrained models into `./checkpoint`.
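Before running evaluation, it may help to confirm that the checkpoints are where the scripts expect them. The snippet below is a small optional pre-flight check, not part of this repository; the helper `missing_checkpoints` is hypothetical, and the two file names are the MSCOCO ones used in the evaluation examples of this README (the Flickr30K file names are not stated here, so they are not listed).

```python
import os


def missing_checkpoints(checkpoint_dir, filenames):
    """Return the expected checkpoint files that are not present."""
    return [
        name
        for name in filenames
        if not os.path.isfile(os.path.join(checkpoint_dir, name))
    ]


# File names taken from the evalrank(...) examples in this README.
expected = ["SGR+VSL_COCO.pth.tar", "SAF+VSL_COCO.pth.tar"]

missing = missing_checkpoints("./checkpoint", expected)
if missing:
    print("Missing checkpoints:", ", ".join(missing))
else:
    print("All expected checkpoints found.")
```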

Modify `model_path`, `data_path`, and `vocab_path` in `eval_single.py`, then run it.

For example:

    evalrank(model_path="./checkpoint/SGR+VSL_COCO.pth.tar", data_path='./data', split="testall", fold5=True)

For SGR+VSL and SAF+VSL:

    python eval_single.py

Note that `fold5=True` is only for evaluation on MSCOCO1K (averaged over 5 folds), while `fold5=False` is for MSCOCO5K and Flickr30K. Pretrained models and log files can be downloaded from:
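To illustrate what the `fold5` switch refers to: in the SCAN/SGRAF evaluation protocol, the 5K MSCOCO test images are split into five 1K folds, metrics are computed per fold, and the results are averaged. The sketch below shows only that bookkeeping under this assumption; `fold_slices`, `average_over_folds`, and the per-fold numbers are hypothetical and are not taken from `eval_single.py`.

```python
def fold_slices(num_images=5000, fold_size=1000):
    """Yield (start, end) image index ranges, one per fold."""
    for start in range(0, num_images, fold_size):
        yield start, min(start + fold_size, num_images)


def average_over_folds(per_fold_metrics):
    """Average a list of metric dicts key by key."""
    keys = per_fold_metrics[0].keys()
    n = len(per_fold_metrics)
    return {k: sum(m[k] for m in per_fold_metrics) / n for k in keys}


folds = list(fold_slices())
print(folds)

# Made-up per-fold R@1 values, for illustration only (not real results).
fake_metrics = [{"r1": v} for v in (70.0, 72.0, 74.0, 76.0, 78.0)]
avg = average_over_folds(fake_metrics)
print(avg)
```

With `fold5=False`, the same metrics would instead be computed once over all 5,000 test images, which is a harder retrieval setting because the candidate pool is five times larger.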

Modify `sgr_model_path`, `saf_model_path`, `data_path`, and `vocab_path` in `eval_overall.py`, then run it.

For example:

    evalrank(sgr_model_path="./checkpoint/SGR+VSL_COCO.pth.tar", saf_model_path="./checkpoint/SAF+VSL_COCO.pth.tar", data_path='./data', split="testall", fold5=True)

For SGRAF+VSL:

    python eval_overall.py

Note that `fold5=True` is only for evaluation on MSCOCO1K (averaged over 5 folds), while `fold5=False` is for MSCOCO5K and Flickr30K. Pretrained models and log files can be downloaded from:
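As background on the SGRAF+VSL rows: the original SGRAF evaluation combines the SGR and SAF models by averaging their image-text similarity matrices before ranking. Assuming `eval_overall.py` follows the same scheme (this has not been checked against the script), the combination step looks roughly like the sketch below. The function name and the toy matrices are hypothetical.

```python
def average_similarities(sims_a, sims_b):
    """Element-wise mean of two equally shaped similarity matrices."""
    same_rows = len(sims_a) == len(sims_b)
    same_cols = all(len(a) == len(b) for a, b in zip(sims_a, sims_b))
    if not (same_rows and same_cols):
        raise ValueError("similarity matrices must have the same shape")
    return [
        [(x + y) / 2 for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(sims_a, sims_b)
    ]


# Toy 2x2 similarity matrices (made-up values).
sims_sgr = [[0.75, 0.25], [0.5, 0.5]]
sims_saf = [[0.25, 0.25], [0.0, 1.0]]

sims_sgraf = average_similarities(sims_sgr, sims_saf)
print(sims_sgraf)
```

The averaged matrix is then ranked exactly like a single model's similarity matrix to obtain R@K.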

Modify the data_path, vocab_path, model_name, logger_name in the opts.py file. Then run train.py:

For MSCOCO:

(For SGR+VSL) python train.py --data_name coco_precomp --batch_size 128 --num_epochs 25 --lr_update 10 --learning_rate 0.0003 --module_name SGR

(For SAF+VSL) python train.py --data_name coco_precomp --batch_size 128 --num_epochs 25 --lr_update 10 --learning_rate 0.0003 --module_name SAFFor Flickr30K:

(For SGR+VSL) python train.py --data_name f30k_precomp --batch_size 128 --num_epochs 40 --lr_update 25 --learning_rate 0.0003 --module_name SGR

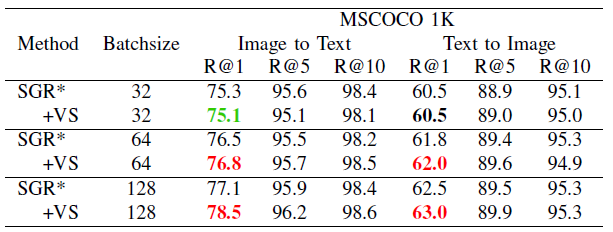

(For SAF+VSL) python train.py --data_name f30k_precomp --batch_size 128 --num_epochs 30 --lr_update 15 --learning_rate 0.0003 --module_name SAFModify the --batch_size to 32, 64, and 128. The results on MSCOCO1K shows below.
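The batch-size comparison mentioned above can be scripted rather than run by hand. The sketch below only builds and prints the three MSCOCO commands, reusing the flags from the training commands in this README. It assumes the other hyperparameters stay fixed across the sweep, which the README does not state explicitly, and `build_train_command` is a hypothetical helper.

```python
def build_train_command(batch_size, module_name="SGR"):
    """Build the MSCOCO training command for one batch size."""
    return [
        "python", "train.py",
        "--data_name", "coco_precomp",
        "--batch_size", str(batch_size),
        "--num_epochs", "25",
        "--lr_update", "10",
        "--learning_rate", "0.0003",
        "--module_name", module_name,
    ]


commands = [build_train_command(bs) for bs in (32, 64, 128)]

for cmd in commands:
    # Printed only; to launch a run, pass the list to
    # subprocess.run(cmd, check=True) from the repository root.
    print(" ".join(cmd))
```

When launching several runs, it is probably worth giving each one its own `model_name` and `logger_name` in `opts.py` so that checkpoints and logs do not overwrite each other.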

Modify the code in lines 501-530 of `model.py`. The results on MSCOCO5K are shown below.

If Visual Semantic Loss (VSL) is useful for you, please cite the following paper.

Since the paper has been published at ICME 2023, please cite the official version. :)

    @inproceedings{10219570,
      author={Zhang, Xu and Niu, Xinzheng and Fournier-Viger, Philippe and Dai, Xudong},
      booktitle={2023 IEEE International Conference on Multimedia and Expo (ICME)},
      title={Image-text Retrieval via Preserving Main Semantics of Vision},
      year={2023},
      pages={1967-1972},
      doi={10.1109/ICME55011.2023.00337}
    }