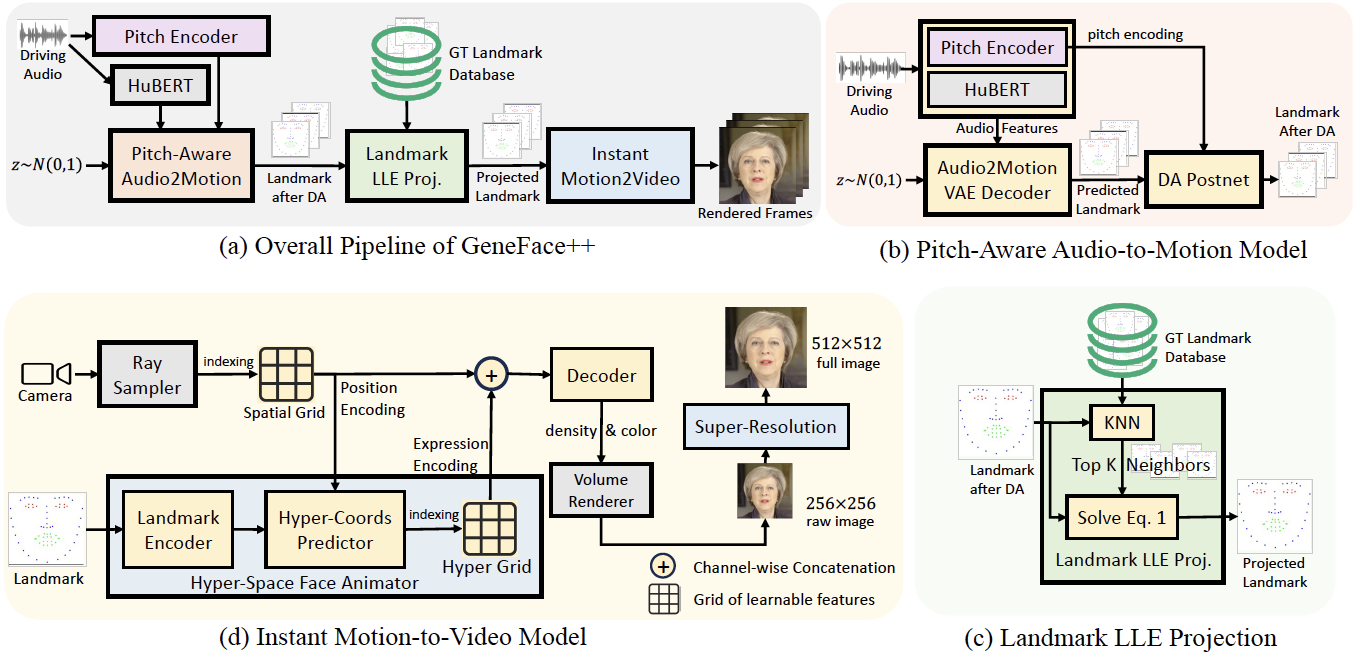

This is the official PyTorch implementation of the GeneFace++ paper, which enables 3D talking face generation with high lip-sync accuracy, high video realism, and high system efficiency. You can visit our Demo Page to watch demo videos and learn more details.

- We have released Real3D-portrait (ICLR 2024 Spotlight, https://github.com/yerfor/Real3DPortrait), a NeRF-based one-shot talking face system. Just upload a single image and enjoy a realistic talking face!

We provide a quick-start guide for GeneFace++.

- Step 1: Follow the steps in `docs/prepare_env/install_guide.md` to create a new Python environment named `geneface`, and download the 3DMM files into `deep_3drecon/BFM`.

- Step 2: Download the pre-processed dataset of May (Google Drive or BaiduYun Disk with password e1a3) and place it at `data/binary/videos/May/trainval_dataset.npy`.

- Step 3: Download the pre-trained audio-to-motion model (Google Drive or BaiduYun Disk with password 9cqp) and the motion-to-video model specific to May (in this Google Drive or in this BaiduYun Disk, password: exwd), and unzip them into `./checkpoints/`.

After these steps, your `checkpoints` and `data` directories should look like this:

- `checkpoints/`
  - `audio2motion_vae/`
  - `motion2video_nerf/`
    - `may_head/`
    - `may_torso/`
- `data/`
  - `binary/`
    - `videos/`
      - `May/`
        - `trainval_dataset.npy`
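Before moving on to Step 4, you may want to confirm that the downloads ended up in the expected places. The following is a minimal sketch of a hypothetical helper (call it `check_layout.py`; it is not part of the GeneFace++ repo). It only checks the paths from the layout above, relative to the repository root, and does not validate file contents.

```python
"""Hypothetical helper (not part of GeneFace++): check the quick-start layout."""
import sys
from pathlib import Path

# Paths taken from the expected layout in this README, relative to the repo root.
EXPECTED_DIRS = [
    "checkpoints/audio2motion_vae",
    "checkpoints/motion2video_nerf/may_head",
    "checkpoints/motion2video_nerf/may_torso",
]
EXPECTED_FILES = [
    "data/binary/videos/May/trainval_dataset.npy",
]


def missing_paths(root="."):
    """Return the expected paths that are absent under `root` (dirs first, then files)."""
    root = Path(root)
    missing = [p for p in EXPECTED_DIRS if not (root / p).is_dir()]
    missing += [p for p in EXPECTED_FILES if not (root / p).is_file()]
    return missing


def report(root="."):
    """Print each missing path and return True only if nothing is missing."""
    problems = missing_paths(root)
    for p in problems:
        print(f"missing: {p}")
    if not problems:
        print("layout looks complete")
    return not problems


if __name__ == "__main__":
    # Optional first argument: the repository root (defaults to the current directory).
    report(sys.argv[1] if len(sys.argv) > 1 else ".")
```

For example, run `python check_layout.py` from the repository root; each missing path is printed on its own line.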

- Step 4: Activate the `geneface` Python environment and execute:

  `export PYTHONPATH=./`

  `python inference/genefacepp_infer.py --a2m_ckpt=checkpoints/audio2motion_vae --head_ckpt= --torso_ckpt=checkpoints/motion2video_nerf/may_torso --drv_aud=data/raw/val_wavs/MacronSpeech.wav --out_name=may_demo.mp4`

  Or you can play with our Gradio WebUI:

  `export PYTHONPATH=./`

  `python inference/app_genefacepp.py --a2m_ckpt=checkpoints/audio2motion_vae --head_ckpt= --torso_ckpt=checkpoints/motion2video_nerf/may_torso`

For more details, please refer to `docs/process_data` and `docs/train_and_infer`.
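If you want to drive May with several audio clips, the Step 4 inference command can be wrapped in a short script. This is a hypothetical sketch, not part of the repo: it reuses only the flags shown in Step 4, keeps `--head_ckpt=` empty as in the README, and sets `PYTHONPATH=./` for the child process instead of exporting it. It assumes it runs inside the activated `geneface` environment, so `sys.executable` points to that interpreter; the output naming scheme (`may_<wav stem>.mp4`) is an arbitrary choice.

```python
"""Hypothetical batch wrapper (not part of GeneFace++) around the Step 4 command."""
import os
import subprocess
import sys
from pathlib import Path

# Flags copied from the Step 4 command; --head_ckpt is left empty as in the README.
BASE_ARGS = [
    "--a2m_ckpt=checkpoints/audio2motion_vae",
    "--head_ckpt=",
    "--torso_ckpt=checkpoints/motion2video_nerf/may_torso",
]


def build_infer_cmd(drv_aud, out_name, python=sys.executable):
    """Return the argv list for one inference run (does not execute it)."""
    return [python, "inference/genefacepp_infer.py", *BASE_ARGS,
            f"--drv_aud={drv_aud}", f"--out_name={out_name}"]


def run_batch(wav_paths, repo_root="."):
    """Run inference once per driving audio, naming each output after its wav file."""
    # Equivalent to `export PYTHONPATH=./`; "./" resolves against cwd=repo_root.
    env = dict(os.environ, PYTHONPATH="./")
    for wav in wav_paths:
        out_name = f"may_{Path(wav).stem}.mp4"
        cmd = build_infer_cmd(wav, out_name)
        # check=True stops the batch at the first failing run.
        subprocess.run(cmd, cwd=repo_root, env=env, check=True)
```

For example, calling `run_batch(["data/raw/val_wavs/MacronSpeech.wav"])` from the repository root runs the same inference as the Step 4 command, with the output named `may_MacronSpeech.mp4`.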

- Release Inference Code of Audio2Motion and Motion2Video.

- Release Pre-trained weights of Audio2Motion and Motion2Video.

- Release Training Code of Motion2Video Renderer.

- Release Gradio Demo.

- Release Training Code of Audio2Motion and Post-Net.

If you find this repo helpful in your work, please consider citing us:

@article{ye2023geneface,
  title={GeneFace: Generalized and High-Fidelity Audio-Driven 3D Talking Face Synthesis},
  author={Ye, Zhenhui and Jiang, Ziyue and Ren, Yi and Liu, Jinglin and He, Jinzheng and Zhao, Zhou},
  journal={arXiv preprint arXiv:2301.13430},
  year={2023}
}

@article{ye2023geneface++,
  title={GeneFace++: Generalized and Stable Real-Time Audio-Driven 3D Talking Face Generation},
  author={Ye, Zhenhui and He, Jinzheng and Jiang, Ziyue and Huang, Rongjie and Huang, Jiawei and Liu, Jinglin and Ren, Yi and Yin, Xiang and Ma, Zejun and Zhao, Zhou},
  journal={arXiv preprint arXiv:2305.00787},
  year={2023}
}