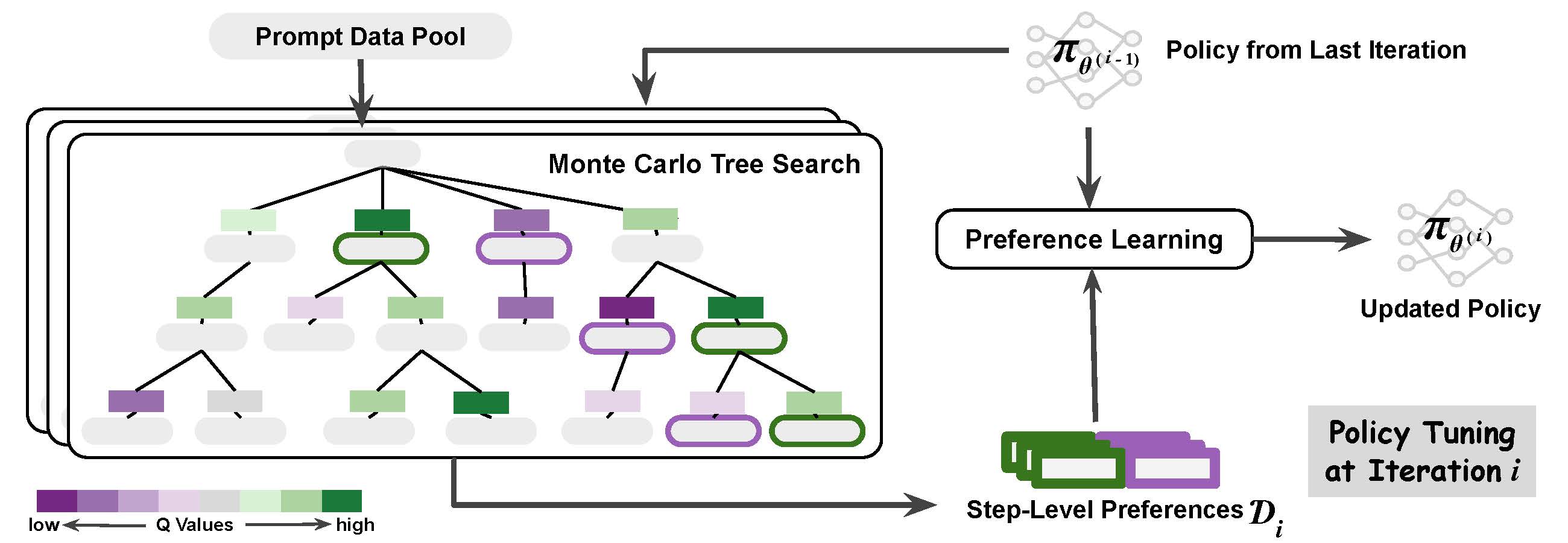

This repository contains code and analysis for the paper: Monte Carlo Tree Search Boosts Reasoning via Iterative Preference Learning. Below is the framework of our proposed method.
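MCTS-DPO couples tree search with DPO-style preference learning. To illustrate the preference-learning half, the sketch below computes the standard DPO loss for a single preference pair from log-probabilities. It assumes the preferred and dispreferred responses (or reasoning steps) have already been collected, for example by the tree search. It is not taken from this repository. The function name `dpo_loss` and the default `beta=0.1` are illustrative assumptions.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Standard DPO loss for a single (chosen, rejected) preference pair.

    Each argument is the summed log-probability of a response under either
    the policy being trained or the frozen reference model.
    (Hypothetical helper for illustration, not this repository's API.)
    """
    # Log-ratio margin: how much more the policy (relative to the reference)
    # prefers the chosen response over the rejected one.
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    x = beta * margin
    # -log(sigmoid(x)) == softplus(-x), computed in a numerically stable way.
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


# The policy already favours the chosen response slightly more than the
# reference does, so the loss falls below log(2) (the zero-margin value).
print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

Minimising this loss pushes the policy's log-ratio margin in favour of the chosen response. `beta` controls how strongly the policy is kept close to the reference model.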

To set up the environment:

```bash
conda env create --file conda-recipe.yaml
pip install -r requirements.txt
```

Our main code is in `./mcts_rl/algorithms/mcts` and `./mcts_rl/trainers/tsrl_trainer.py`.
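The tree-search code lives under `./mcts_rl/algorithms/mcts`. For readers new to MCTS, the sketch below shows the textbook UCT selection rule and value backup over a search tree. It is a generic illustration, not the selection or evaluation rule used in this repository, which may differ (for example, by adding a policy-prior term). `Node`, `uct_score`, `select_child`, `backup` and the exploration constant `c` are hypothetical names.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical tree node, e.g. one partial reasoning trace."""
    visits: int = 0
    value_sum: float = 0.0
    children: list[Node] = field(default_factory=list)

    @property
    def q(self) -> float:
        # Mean backed-up value; 0 for unvisited nodes.
        return self.value_sum / self.visits if self.visits else 0.0


def uct_score(parent: Node, child: Node, c: float = 1.0) -> float:
    # Unvisited children are tried before any visited child.
    if child.visits == 0:
        return math.inf
    # Exploitation (mean value) plus an exploration bonus that shrinks
    # as the child is visited more often.
    explore = c * math.sqrt(math.log(parent.visits) / child.visits)
    return child.q + explore


def select_child(parent: Node, c: float = 1.0) -> Node:
    # Pick the child with the highest UCT score.
    return max(parent.children, key=lambda ch: uct_score(parent, ch, c))


def backup(path: list[Node], value: float) -> None:
    # Propagate a leaf evaluation to every node on the visited path.
    for node in path:
        node.visits += 1
        node.value_sum += value
```

The exploration constant `c` trades off revisiting high-value branches against trying less-visited ones.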

To run MCTS-DPO for MathQA on Mistral (SFT):

```bash
bash scripts/mcts_mathqa.sh
```

To run MCTS-DPO for CSR on Mistral (SFT):

```bash
bash scripts/mcts_csr.sh
```

To cite this work:

```bibtex
@article{xie2024monte,
  title={Monte Carlo Tree Search Boosts Reasoning via Iterative Preference Learning},
  author={Xie, Yuxi and Goyal, Anirudh and Zheng, Wenyue and Kan, Min-Yen and Lillicrap, Timothy P and Kawaguchi, Kenji and Shieh, Michael},
  journal={arXiv preprint arXiv:2405.00451},
  year={2024}
}
```

This repository is adapted from the code of Safe-RLHF.