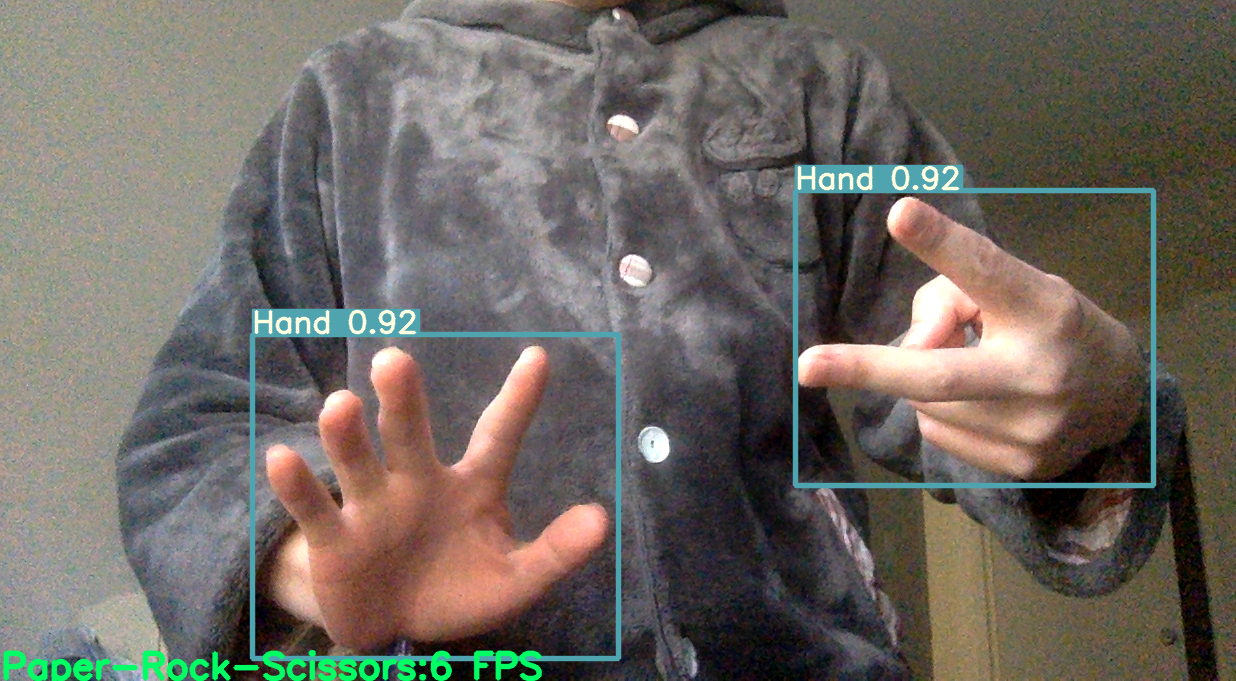

This is the code for the final project titled rock-paper-scissors. It detects hand gestures in live video input and classifies each one into one of three classes: {paper, rock, scissors}.

The hand detection feature is realized by modifying the general-purpose object detection model YOLOv5.

Gesture classification is realized by training convolutional networks. Specifically, we tried a network with 3 Conv2D layers and 4 dense layers, and ResNet50.

From the root of the project directory, run:

cd test && python3 video_gesture_detect.py

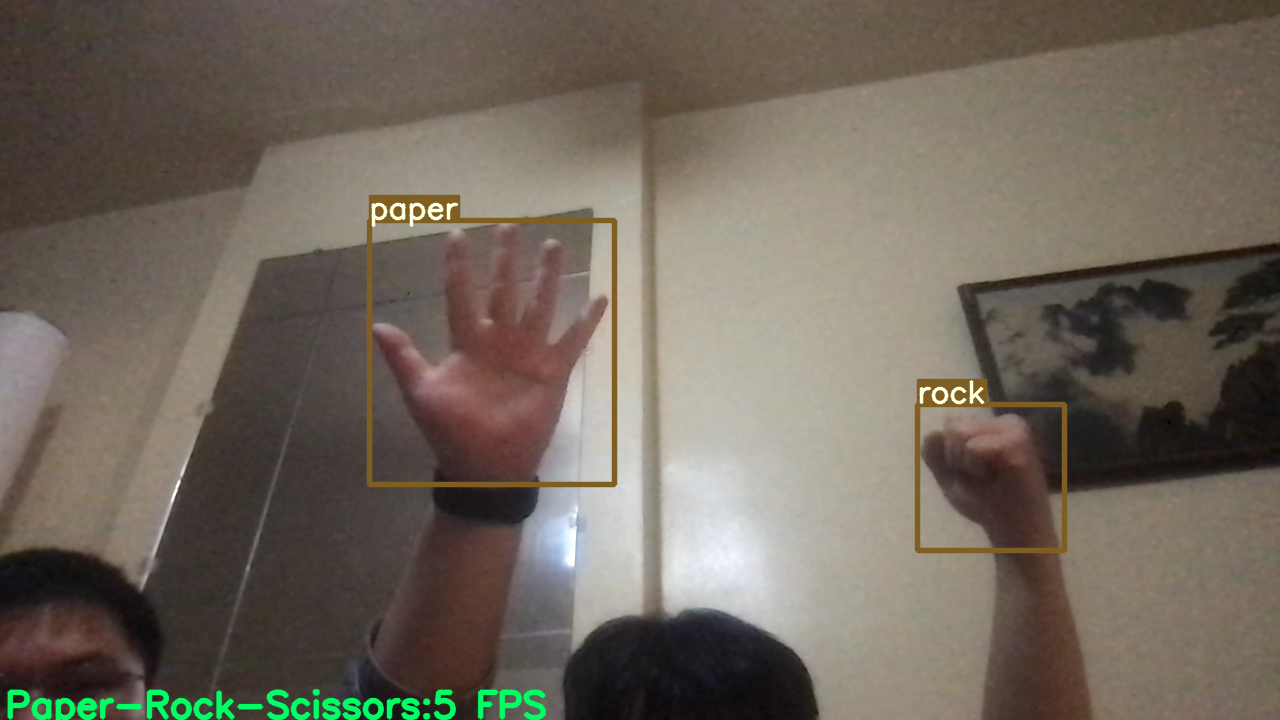

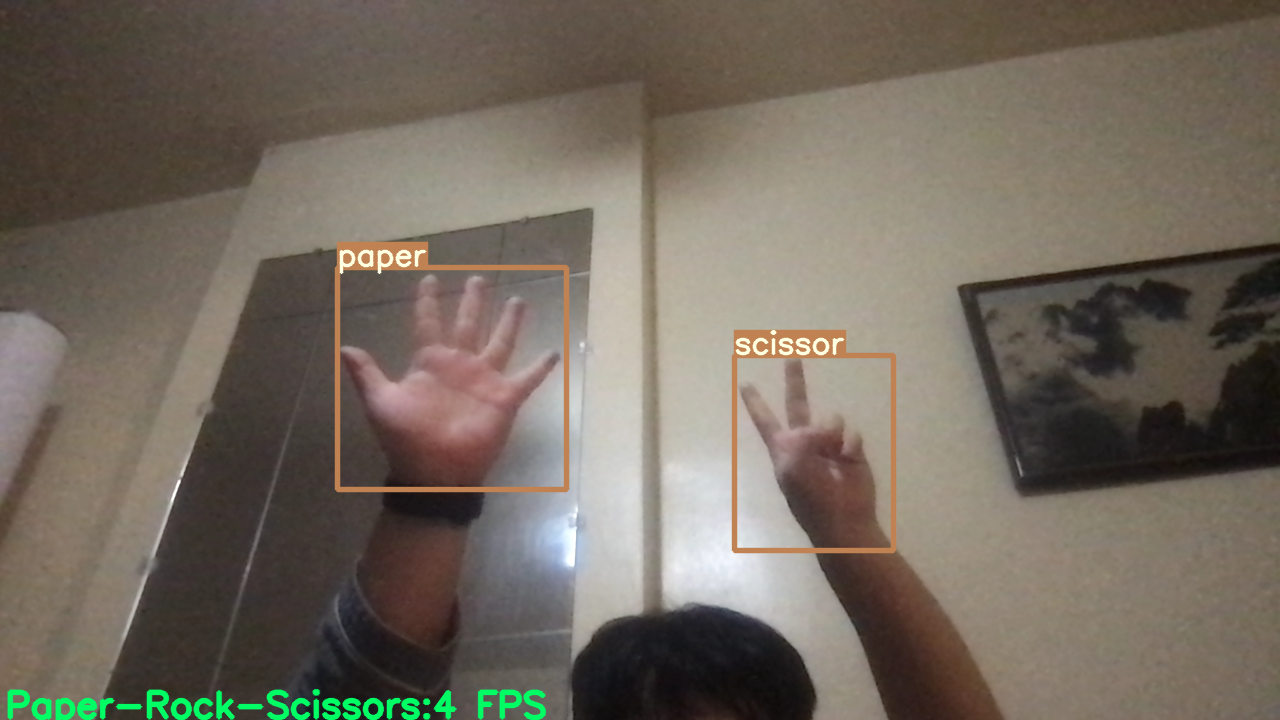

A demo is shown.

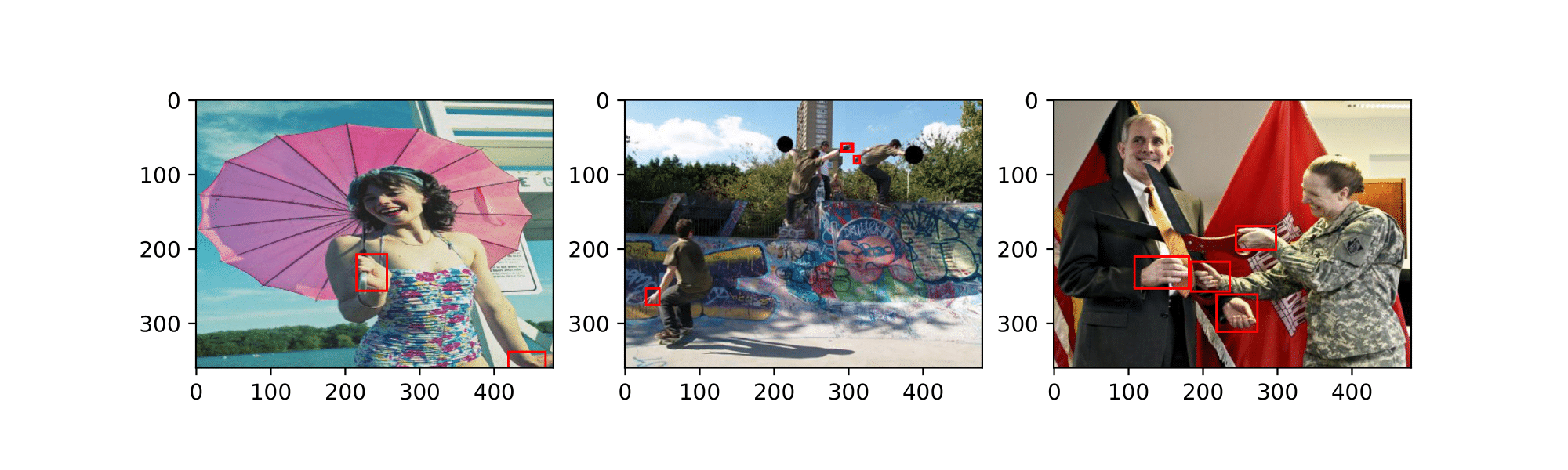

Hand Detector: The training data for the hand detector combines two datasets: TV-Hand, which contains hand annotations for 9,500 images extracted from the ActionThread dataset, and COCO-Hand, which contains annotations for 25,000 images from Microsoft's COCO dataset.

We provide a glance at the training samples. Each annotation follows the format

# class x y w h

0 0.36354166666666665 0.5166666666666667 0.07291666666666667 0.08333333333333333

where all coordinates are normalized by the size of the image they belong to. (x, y) specifies the center of the bounding box and (w, h) specifies its width and height.
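The normalization can be sketched in a few lines of Python. This is only an illustration, not code from the repository: the function name `to_yolo` is hypothetical, and the 960x540 image size is an assumption chosen so that the numbers line up with the sample annotation above.

```python
def to_yolo(x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space corner box to a normalized (x, y, w, h) tuple."""
    x = (x_min + x_max) / 2 / img_w  # box center as a fraction of image width
    y = (y_min + y_max) / 2 / img_h  # box center as a fraction of image height
    w = (x_max - x_min) / img_w      # box width as a fraction of image width
    h = (y_max - y_min) / img_h      # box height as a fraction of image height
    return x, y, w, h


# Assumed 960x540 frame with a 70x45 pixel box centered at (349, 279).
box = to_yolo(314, 256.5, 384, 301.5, img_w=960, img_h=540)
# Class 0 (hand) followed by the four normalized values.
print("0 " + " ".join(f"{v:.6f}" for v in box))
# prints: 0 0.363542 0.516667 0.072917 0.083333
```

Because every value is a fraction of the image size, the same label stays valid if the image is resized.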

We merge the two datasets and use 25,974 images for training and 7,459 for validation.

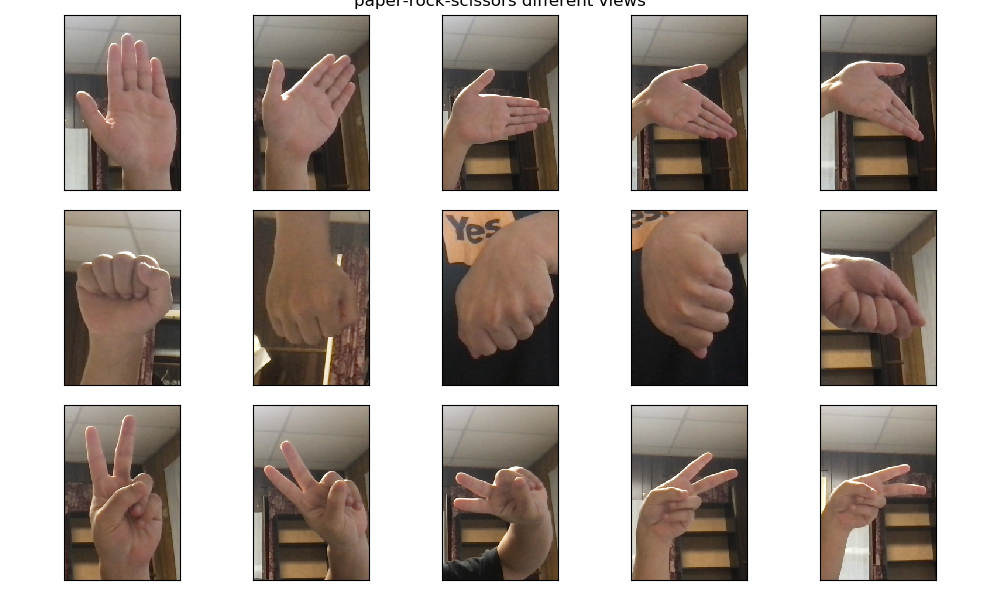

Gesture Classifier: To train a classifier that can recognize paper, rock, and scissors from a hand gesture, we use three datasets. The Kaggle-RPC dataset is generated by a Raspberry Pi device with a webcam, and its images are of dimension

We provide a glance at the training samples from our own dataset.

To train a hand detector with the YOLOv5 model, run the following commands in a terminal. They start from the pre-trained YOLOv5-nano weights and use an image size of 640.

# assumes you are at the root directory of the project

cd src/yolohand/ && python train.py --data hand.yaml --weights yolov5n.pt --img 640

It is highly recommended to train the model on a GPU instead of a CPU. I use one GPU (Tesla T4) offered by Lehigh Research Computing Systems. If you happen to have access, you can use the following Slurm script to start training on your own.

#!/bin/tcsh

#SBATCH --partition=hawkgpu

#SBATCH --time=24:00:00

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=4

#SBATCH --gres=gpu:1

#SBATCH --job-name yolov5

#SBATCH --output="yolov5.%j.%N.out"

cd ${SLURM_SUBMIT_DIR}

python train.py --data hand.yaml --weights yolov5n.pt --img 640

The trained model weights can be found at test/yolohand/runs/train/exp/weights/best.pt

A caveat here is that you need to modify hand.yaml to specify the correct paths to the train/val/test datasets.
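Before launching a long training job, it can help to check that those paths exist. The sketch below is a hypothetical helper, not part of the repository. It assumes hand.yaml follows the usual YOLOv5 dataset layout with `path`, `train`, `val`, and `test` keys given as directory strings, and it takes the already-parsed config as a plain dict so that it needs nothing beyond the standard library.

```python
from pathlib import Path


def missing_dataset_dirs(cfg):
    """Return the names of the configured splits whose paths do not exist."""
    root = Path(cfg.get("path", "."))  # dataset root; assumed key name
    missing = []
    for split in ("train", "val", "test"):
        rel = cfg.get(split)
        if rel is None:  # split not configured, nothing to check
            continue
        if not (root / rel).exists():
            missing.append(split)
    return missing


# Hypothetical values; replace them with the entries from your hand.yaml.
cfg = {"path": "../datasets/hands", "train": "images/train", "val": "images/val"}
print(missing_dataset_dirs(cfg))
```

An empty list means every configured split was found. Any name in the list points at the entry in hand.yaml that still needs fixing.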

To test the trained hand detector, run the script test/video.py. You should see the following result.

cd src/gestures

Open cnn_train.ipynb or resnet50.ipynb and run the cells one by one.

- src
  - yolohand: Contains the code for training a hand detector. The majority of the code is cloned from https://github.com/ultralytics/yolov5, with customization specific to this project.
    - data: hyper-parameters + YOLO-format files specifying the train/val data + sample training data
    - ./models: model architecture; specifically, we adopt the smallest YOLOv5 model
    - ./utils: helper functions
    - train.py: training pipeline
    - val.py: validation
  - gestures: Contains the code for training a classifier to do the gesture classification.
    - cnn_train.ipynb: Train a shallow CNN.
    - resnet50.ipynb: Use the pretrained ResNet50 to do transfer learning.
- test
  - ./yolohand: Contains all training logs and the final trained model.
  - ./test/gestures/saved_models: Stores all the trained models that are used in the real-time detection. (This folder does not appear on GitHub due to file size limits. Running the notebooks under src/gestures/ creates it.)
  - video.py: Test the trained model's hand detection performance.
  - video_gesture_detect.py: Do real-time gesture classification.

- environment.yml: dependencies
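The two stages described above (hand detection, then gesture classification) have to be connected by cropping the detected hand out of each frame. The sketch below shows one plausible way to compute that crop; it is not taken from video_gesture_detect.py. The function name `crop_region` and the `pad` margin are hypothetical, and it assumes the detector reports boxes as a normalized center (x, y) plus width and height (w, h).

```python
def crop_region(x, y, w, h, img_w, img_h, pad=0.1):
    """Map a normalized center-format box to pixel bounds clamped to the frame."""
    bw, bh = w * (1 + pad), h * (1 + pad)  # enlarge the box by the margin
    left = max(0, round((x - bw / 2) * img_w))
    top = max(0, round((y - bh / 2) * img_h))
    right = min(img_w, round((x + bw / 2) * img_w))
    bottom = min(img_h, round((y + bh / 2) * img_h))
    return left, top, right, bottom


# A box covering the central half of a 200x200 frame, with no extra margin.
print(crop_region(0.5, 0.5, 0.5, 0.5, img_w=200, img_h=200, pad=0.0))
# prints: (50, 50, 150, 150)
```

With a frame stored as a height-by-width array, the crop passed to the classifier would then be `frame[top:bottom, left:right]`.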