Supplementary materials for the paper: Attention-based cross-modal fusion for audio-visual voice activity detection in musical video streams

The proposed attention-based AVVAD (ATT-AVVAD) framework consists of three parts: an audio-based module (audio branch), an image-based module (visual branch), and an attention-based fusion module. The audio-based module produces acoustic representation vectors for four target audio events: Silence, Speech of the anchor, Singing voice of the anchor, and Others. The image-based module estimates the probability that the anchor is vocalizing from facial parameters. Finally, the attention-based fusion module combines the audio and visual information so that the final decisions are made jointly on both modalities.

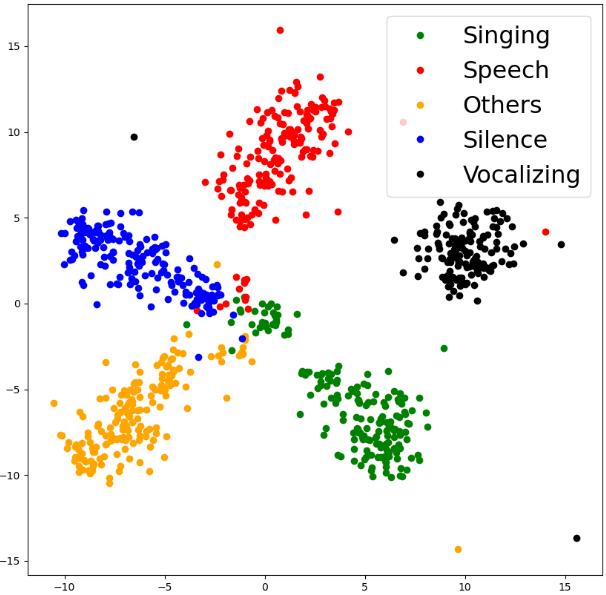

2. t-SNE visualization of the distribution of core representation vectors from a test sample after attention-based fusion.

Vectors in subfigure (a) come from the audio branch; vectors in subfigure (b) come from the audio-visual module after attention-based fusion.

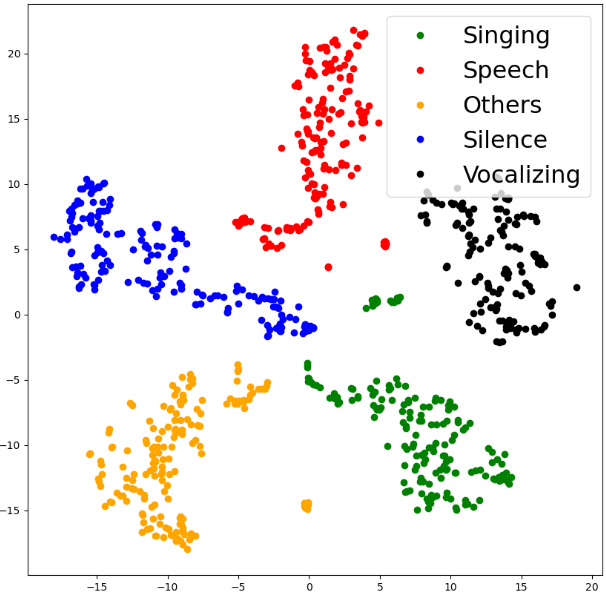

3. t-SNE visualization of the distribution of acoustic representation vectors and visual vocalization vectors from a test sample.

The visual vectors representing anchor vocalization (black dots) lie on the side of the space occupied by the anchor's voice (green dots for singing, red dots for speech).
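Because t-SNE preserves local neighbourhoods but distorts global distances, the observation above can also be checked in the original representation space. The sketch below is an illustrative assumption, not part of the paper's method: assuming the visual and acoustic vectors share the same dimensionality (as the joint t-SNE plot suggests), it ranks the four event centroids by cosine similarity to a visual vocalization vector. The helper names (`cosine`, `centroid`, `rank_events`) and the toy 2-D vectors are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def centroid(vectors):
    """Element-wise mean of a list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def rank_events(visual_vec, acoustic_by_event):
    """Rank events by cosine similarity between the visual vector and each event centroid."""
    scores = {
        event: cosine(visual_vec, centroid(vectors))
        for event, vectors in acoustic_by_event.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical toy data: 2-D acoustic vectors for the four target events.
acoustic = {
    "Silence": [[-1.0, 0.0], [-0.9, 0.1]],
    "Speech": [[0.1, 1.0], [0.0, 0.9]],
    "Singing": [[1.0, 0.2], [0.9, 0.1]],
    "Others": [[0.0, -1.0], [0.1, -0.9]],
}
visual = [0.7, 0.7]  # hypothetical visual vocalization vector

ranking = rank_events(visual, acoustic)
print(ranking[:2])  # for this toy input, the two anchor-voice events rank highest
```

With real data, `acoustic` would hold the audio-branch vectors of a test sample grouped by their labels, and `visual` the corresponding visual-branch vector.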

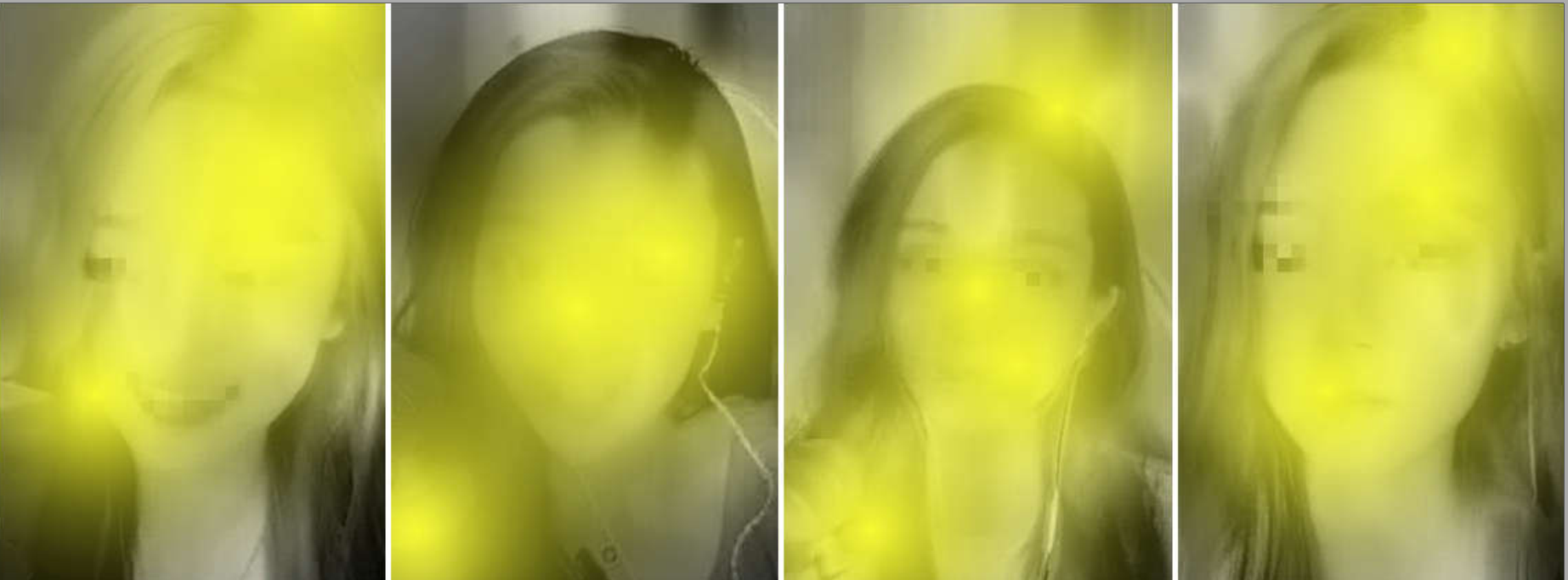

To inspect intuitively which regions the model focuses on, we use class activation mapping (CAM), a visualization method for deep networks. As shown in Figure 8, the visual branch mainly attends to the contours of the anchor's eyes and lips, and uses the high-level representation of these facial parameters to judge whether the anchor is vocalizing. This indicates that the visual branch designed in this paper behaves as intended.
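For reference, the original CAM formulation computes, for a target class c, a weighted sum of the last convolutional feature maps f_k, using the weights w_k^c of the linear layer that follows global average pooling: M_c(x, y) = Σ_k w_k^c f_k(x, y). The pure-Python sketch below assumes that formulation; the paper's exact variant is not specified here, the function name and toy inputs are hypothetical, and upsampling the map to the input image size is omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """Compute a class activation map for one target class.

    feature_maps: K feature maps from the last conv layer, each an H x W list of lists.
    class_weights: K weights w_k^c connecting the pooled features to the target class.
    Returns an H x W map min-max normalised to [0, 1].
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    # Weighted sum over channels: M_c(x, y) = sum_k w_k^c * f_k(x, y)
    for weight, fmap in zip(class_weights, feature_maps):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    # Min-max normalisation so the map can be overlaid on the face image as a heatmap.
    low = min(min(row) for row in cam)
    high = max(max(row) for row in cam)
    if high == low:
        return [[0.0] * width for _ in range(height)]
    return [[(v - low) / (high - low) for v in row] for row in cam]

# Hypothetical toy input: two 2 x 2 feature maps (e.g. one responding to the eyes, one to the lips).
maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
weights = [2.0, 1.0]
print(class_activation_map(maps, weights))  # [[1.0, 0.0], [0.0, 0.5]]
```

High values in the normalised map mark the spatial positions that contribute most to the target class score.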

In the proposed ATT-AVVAD framework, the audio branch roughly separates the target sound events in the latent space, but not accurately enough. The visual branch predicts the probability that the anchor is vocalizing from the facial information in the image sequence; this prediction is used to correct the representations learned by the audio branch, which are then passed to the classification layer of the audio-visual module for the final decisions. The above results show that each branch achieves its intended goal and that the fusion based on semantic similarity is reasonable and effective.
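One common way to realise similarity-based fusion is scaled dot-product attention, with the visual vector as the query and the per-event acoustic vectors as both keys and values. The sketch below uses that technique as a stand-in under stated assumptions; it is not the exact ATT-AVVAD formulation, and all names and toy values are hypothetical. The fused vector (attended acoustic context concatenated with the visual vector) would then be fed to a classification layer, which is omitted here.

```python
import math

def softmax(scores):
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fuse(visual_query, acoustic_vectors):
    """Scaled dot-product attention with the visual vector as query.

    acoustic_vectors: one vector per target event, used as both keys and values.
    Returns (attention weights, fused vector = attended context + visual query).
    """
    dim = len(visual_query)
    # Similarity between the visual query and each event's acoustic vector.
    scores = [
        sum(q * k for q, k in zip(visual_query, key)) / math.sqrt(dim)
        for key in acoustic_vectors
    ]
    weights = softmax(scores)
    # Weighted sum of the acoustic vectors (values).
    context = [
        sum(w * vec[j] for w, vec in zip(weights, acoustic_vectors))
        for j in range(dim)
    ]
    # Concatenate context and visual query as input for a downstream classifier.
    return weights, context + list(visual_query)

events = ["Silence", "Speech", "Singing", "Others"]
# Hypothetical one-hot acoustic vectors and a visual vector that favours the anchor's voice.
acoustic = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
visual = [0.0, 2.0, 2.0, 0.0]
weights, fused = attention_fuse(visual, acoustic)
for event, w in zip(events, weights):
    print(f"{event}: {w:.3f}")  # Speech and Singing receive the largest, equal weights
```

Dividing by the square root of the dimension keeps the softmax from saturating as the representation size grows; a learned projection of the query and keys would normally precede the dot product.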

For a more intuitive demonstration, please see https://yuanbo2020.github.io/Attention-based-AV-VAD/

Please feel free to use the open dataset MAVC100, and consider citing our paper:

@inproceedings{hou21_interspeech,

author={Yuanbo Hou and Zhesong Yu and Xia Liang and Xingjian Du and Bilei Zhu and Zejun Ma and Dick Botteldooren},

title={{Attention-Based Cross-Modal Fusion for Audio-Visual Voice Activity Detection in Musical Video Streams}},

year=2021,

booktitle={Proc. Interspeech 2021},

pages={321--325},

doi={10.21437/Interspeech.2021-37}

}