This is our implementation for the paper: MH-DETR: Video Moment and Highlight Detection with Cross-modal Transformer

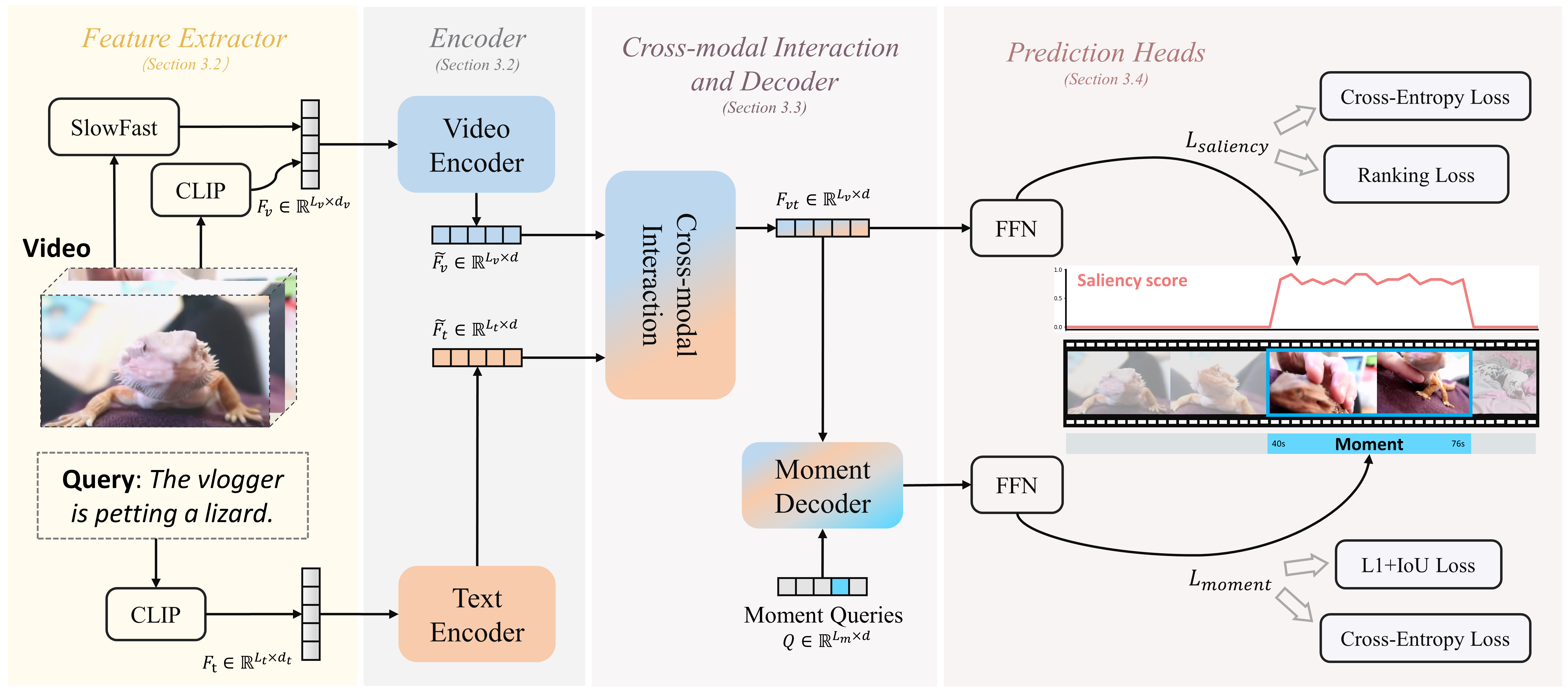

With the increasing demand for video understanding, video moment and highlight detection (MHD) has emerged as a critical research topic. MHD aims to localize all moments and predict clip-wise saliency scores simultaneously. Despite progress made by existing DETR-based methods, we observe that these methods coarsely fuse features from different modalities, which weakens the temporal intra-modal context and results in insufficient cross-modal interaction. To address this issue, we propose MH-DETR (Moment and Highlight DEtection TRansformer) tailored for MHD. Specifically, we introduce a simple yet efficient pooling operator within the uni-modal encoder to capture global intra-modal context. Moreover, to obtain temporally aligned cross-modal features, we design a plug-and-play cross-modal interaction module between the encoder and decoder, seamlessly integrating visual and textual features. Comprehensive experiments on QVHighlights, Charades-STA, Activity-Net, and TVSum datasets show that MH-DETR outperforms existing state-of-the-art methods, demonstrating its effectiveness and superiority. Our code is available at -.

The released code consists of the following files.

    MH-DETR
    ├── data
    │   ├── activitynet
    │   │   └── {train,val}.pkl
    │   ├── charades
    │   │   └── {train,val}.pkl
    │   ├── tvsum
    │   │   └── tvsum_{train,val}.jsonl
    │   └── highlight_{train,val,test}_release.jsonl
    ├── features
    │   ├── activitynet
    │   │   └── c3d.hdf5
    │   ├── charades
    │   │   ├── vgg.hdf5
    │   │   └── i3d.hdf5
    │   ├── clip_features
    │   ├── clip_text_features
    │   ├── slowfast_features
    │   └── tvsum
    ├── mh_detr
    ├── standalone_eval
    ├── utils
    ├── results
    ├── README.md
    └── ···
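Before training, it can help to confirm that the expected files and folders are in place. The following is a minimal sketch and not part of the released code: the helper name `missing_paths` and the selection of entries to check (QVHighlights only) are assumptions made for illustration.

```python
from pathlib import Path

# Relative paths taken from the directory tree above (QVHighlights only).
# Which entries to check is an assumption; extend the list for other datasets.
EXPECTED = [
    "data/highlight_train_release.jsonl",
    "data/highlight_val_release.jsonl",
    "data/highlight_test_release.jsonl",
    "features/clip_features",
    "features/clip_text_features",
    "features/slowfast_features",
]


def missing_paths(root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]
```

Calling `missing_paths(".")` from the project root returns an empty list when every listed entry exists.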

Set up the conda environment and install the dependencies:

    # create conda env
    conda create --name mh_detr python=3.9
    # activate env
    conda activate mh_detr
    # install pytorch 1.13.1
    conda install pytorch=1.13.1 torchvision torchaudio -c pytorch
    # install other python packages
    pip install tqdm ipython easydict tensorboard tabulate scikit-learn pandas timm fvcore

Download QVHighlights annotations.
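The QVHighlights annotations are stored as `.jsonl` files (one JSON object per line). As a quick sanity check after downloading, they can be read with a few lines of Python. This is a sketch only: `load_jsonl` is a hypothetical helper, not a function from this repository, and no particular field names are assumed.

```python
import json


def load_jsonl(path):
    """Read a JSON Lines file: one JSON object per non-empty line."""
    with open(path, "r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Example usage (run from the project root; the path follows the directory tree):
#   records = load_jsonl("data/highlight_val_release.jsonl")
#   print(len(records), "annotations; fields:", sorted(records[0].keys()))
```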

Download QVHighlights_features.tar.gz (8GB) from the Moment-DETR repo and extract it under the project root directory:

    tar -xf path/to/moment_detr_features.tar.gz
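Where the `tar` command is not available, the same extraction can be done from Python with the standard-library `tarfile` module. The sketch below makes that assumption explicit; `extract_features` is a hypothetical helper name and is not part of this repository.

```python
import tarfile
from pathlib import Path


def extract_features(archive, dest="."):
    """Extract a .tar or .tar.gz archive into `dest`; return its top-level entries."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:*") as tar:  # "r:*" auto-detects the compression
        names = tar.getnames()
        tar.extractall(dest)
    # Collect the first path component of every member, e.g. "features".
    return sorted({Path(n).parts[0] for n in names if Path(n).parts})


# Example usage (same placeholder path as the tar command above):
#   extract_features("path/to/moment_detr_features.tar.gz", dest=".")
```

Only extract archives obtained from a source you trust, since `extractall` writes whatever paths the archive contains.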

Download VGG features for Charades-STA from the official server.

Download I3D features (password: 1234) for Charades-STA.

Download C3D features for ActivityNet.

Download TVSum features from the UMT repo.

Train on QVHighlights:

    bash mh_detr/scripts/train.sh

Run inference on the `val` split:

    bash mh_detr/scripts/inference.sh ${Your_Path}/MH-DETR/results/qvhighlights/model_best.ckpt val

Checkpoint download link. Please replace ${Your_Path} with your path. The results are as follows:

| MR R1@0.5 | MR R1@0.7 | MR mAP Avg. | HD ( | HD ( | Params | GFLOPs |
|---|---|---|---|---|---|---|
| 60.84 | 44.90 | 39.26 | 38.77 | 61.74 | 8.2M | 0.34 |
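For readers unfamiliar with the moment retrieval (MR) columns, R1@0.5 and R1@0.7 are conventionally read as Recall@1 at temporal IoU thresholds of 0.5 and 0.7. The sketch below illustrates that conventional definition under a simplifying assumption of one ground-truth window per query. It is not the code in `standalone_eval`, and the function names are hypothetical.

```python
def temporal_iou(pred, gt):
    """IoU of two time windows given as (start, end) in seconds."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_1(top1_preds, gts, iou_thd):
    """Percentage of queries whose top-1 window has IoU >= iou_thd with the ground truth."""
    hits = sum(temporal_iou(p, g) >= iou_thd for p, g in zip(top1_preds, gts))
    return 100.0 * hits / len(gts)


# Toy data (made up for illustration): the IoUs are 0.6 and 0.2.
preds = [(0.0, 10.0), (5.0, 15.0)]
gts = [(0.0, 6.0), (12.0, 20.0)]
print(recall_at_1(preds, gts, 0.5))  # 50.0
print(recall_at_1(preds, gts, 0.7))  # 0.0
```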

Run inference on the `test` split:

    bash mh_detr/scripts/inference.sh ${Your_Path}/MH-DETR/results/qvhighlights/model_best.ckpt test

Train on the other datasets:

    bash mh_detr/scripts/train_charades.sh --dset_name ${Dataset_Name}

Please replace ${Dataset_Name} with one of {activitynet, charades, tvsum}.

Train in debug mode:

    bash mh_detr/scripts/train.sh --debug