This repository contains the code to compute the baseline and run the metrics used in the SPEAR challenge. To download the data, go here.

Terminal commands to download the data are also provided below. If a download aborts too early, re-run the same command with curl's `--continue-at -` flag to resume it from where it stopped.
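curl's `-C -` option (equivalent to `--continue-at -`) tells curl to work out the resume offset from the partially downloaded file already on disk. As a minimal sketch, assuming a hypothetical helper `spear_fetch` that is not part of spear-tools, the download commands could be wrapped so that an interrupted transfer is resumed and retried a few times:

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of spear-tools: download one SPEAR file,
# resuming a partial download and retrying a few times before giving up.
BASE_URL="https://spear2022data.blob.core.windows.net/spear-data"

spear_fetch() {
    local file="$1"        # name of the file on the server
    local out="${2:-$1}"   # local output path (defaults to the server name)
    local tries=0
    # -C - resumes from the size of any partial $out already on disk.
    # --fail returns a non-zero exit code on HTTP errors instead of
    # writing the server's error page into $out.
    # --create-dirs creates any missing parent folders of $out.
    until curl --fail -C - --create-dirs "$BASE_URL/$file" -o "$out"; do
        tries=$((tries + 1))
        if [ "$tries" -ge 3 ]; then
            echo "giving up on $file after $tries attempts" >&2
            return 1
        fi
        sleep 5
    done
}
```

For example, `spear_fetch CoreTrainDataset1_v1.1.tar` or `spear_fetch Device_ATFs.h5 Miscellaneous/Array_Transfer_Functions/Device_ATFs.h5`. The retry count (3) and the 5-second pause are arbitrary choices.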

```shell
cd <your-spear-directory>

# All core Train data. Size of 14, 20, 20 and 24GB compressed for D1, D2, D3 and D4 respectively.
curl https://spear2022data.blob.core.windows.net/spear-data/CoreTrainDataset1_v1.1.tar -o CoreTrainDataset1_v1.1.tar
curl https://spear2022data.blob.core.windows.net/spear-data/CoreTrainDataset2_v1.1.tar -o CoreTrainDataset2_v1.1.tar
curl https://spear2022data.blob.core.windows.net/spear-data/CoreTrainDataset3_v1.1.tar -o CoreTrainDataset3_v1.1.tar
curl https://spear2022data.blob.core.windows.net/spear-data/CoreTrainDataset4_v1.1.tar -o CoreTrainDataset4_v1.1.tar

# ATFs
curl https://spear2022data.blob.core.windows.net/spear-data/Device_ATFs.h5 --create-dirs -o Miscellaneous/Array_Transfer_Functions/Device_ATFs.h5

# All core Dev data (24GB compressed, 35GB decompressed)
curl https://spear2022data.blob.core.windows.net/spear-data/CoreDev_1.1.tar -o CoreDev_v1.1.tar
```

Note that, for the example bash script to run, the ATFs must be downloaded into a directory called `Miscellaneous/Array_Transfer_Functions` at the same level as the extracted `Main` and `Extra` folders. The ATF file is already included in CoreDev but not in the CoreTrain archives.
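Before running the example script, it can help to confirm this layout. A minimal sketch, assuming a hypothetical function `check_spear_layout` (not part of spear-tools) that is given the dataset root, i.e. the folder that contains `Main` and `Extra`:

```shell
#!/usr/bin/env bash
# Hypothetical check, not part of spear-tools: confirm that a SPEAR data root
# holds the extracted Main and Extra folders and, next to them,
# Miscellaneous/Array_Transfer_Functions/Device_ATFs.h5.
check_spear_layout() {
    local root="${1:-.}"   # dataset root, defaults to the current folder
    local status=0
    local d
    for d in Main Extra; do
        if [ ! -d "$root/$d" ]; then
            echo "missing folder: $root/$d" >&2
            status=1
        fi
    done
    local atf="$root/Miscellaneous/Array_Transfer_Functions/Device_ATFs.h5"
    if [ ! -f "$atf" ]; then
        echo "missing ATF file: $atf" >&2
        status=1
    fi
    # 0 if everything is in place, 1 if anything is missing.
    return "$status"
}
```

For example, `check_spear_layout <dataset-folder-full-path> && echo "layout OK"`. All missing items are reported before the function returns, rather than stopping at the first one.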

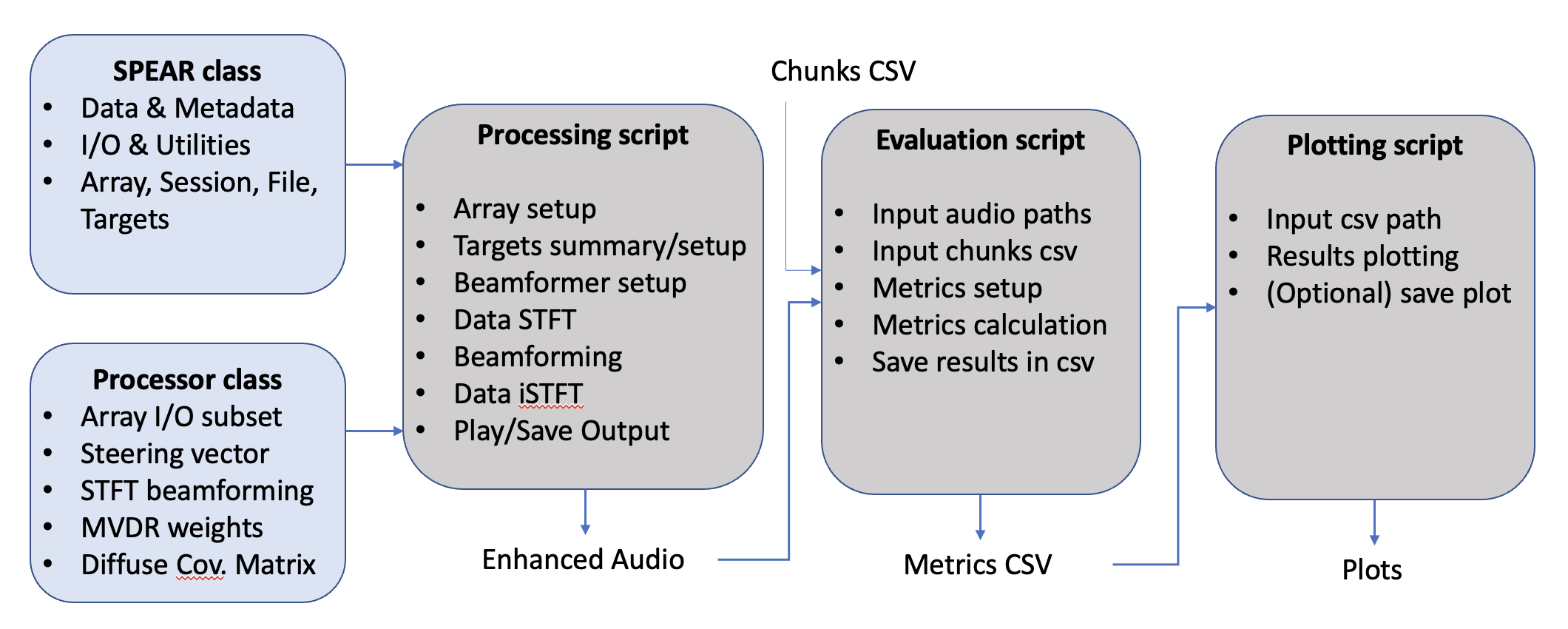

The provided framework consists mainly of three Python scripts (more details can be found in the PowerPoint presentation in `assets`):

- `spear_enhance.py` computes the baseline enhancement (MVDR beamformer) and outputs the processed audio files.
- `spear_evaluate.py` computes the metrics on the processed audio files and outputs a CSV file with all metrics for all chunks.
- `spear_visualise.py` plots the results from the computed metrics CSV file and saves the plots.

The resulting metrics CSV files for the passthrough and baseline enhancements have already been generated and are provided in `metrics`. To compute these files yourself, use the bash script `run_example.sh`; instructions are given below.

We assume that conda is installed. If it is not, follow the instructions here.

```shell
# First, clone the repo
git clone https://github.com/ImperialCollegeLondon/spear-tools
cd spear-tools

# Second, create and activate the environment with conda
conda env create -n <your-env-name> -f env-spear-metrics.yml
conda activate <your-env-name>
cd analysis
```

For Windows users, Microsoft C++ Build Tools are required to build the environment.

To run an example, run `bash run_example.sh` from the `analysis` folder.

The following parameters can be modified in `run_example.sh`:

```shell
# Set up soft links to make paths easier
ln -sf <outputs-folder-full-path> my_results # Output path for the processed audio, metrics CSV files and plots
ln -sf <dataset-folder-full-path> spear_data # Root folder of the SPEAR datasets, containing the folders Main and Extra

# Define variables
SET='Dev' # 'Train', 'Dev' or 'Eval'.
DATASET=2 # 1 to 4.
SESSION='10' # 1 to 9 for Train, 10 to 12 for Dev, 13 to 15 for Eval. '' for all sessions of the current SET.
MINUTE='00' # 00 to 30 (depends on the current session). '' for all minutes of the current session.
PROCESSING='baseline' # Name of the desired processing. 'baseline' by default.
REFERENCE='passthrough' # Name of the desired reference enhancement. 'passthrough' by default.
```
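Because SESSION must fall inside the range of the chosen SET, a mismatched pair (for example `SET='Dev'` with `SESSION='3'`) is easy to make. A minimal sketch, assuming a hypothetical function `check_spear_params` that is not part of `run_example.sh` and only encodes the ranges given in the variable comments above:

```shell
#!/usr/bin/env bash
# Hypothetical sanity check, not part of run_example.sh: verify that SET,
# DATASET and SESSION match the ranges listed in the variable comments.
check_spear_params() {
    local set_name="$1" dataset="$2" session="$3"
    local lo hi
    # Session range allowed for each set.
    case "$set_name" in
        Train) lo=1;  hi=9  ;;
        Dev)   lo=10; hi=12 ;;
        Eval)  lo=13; hi=15 ;;
        *) echo "SET must be 'Train', 'Dev' or 'Eval'" >&2; return 1 ;;
    esac
    case "$dataset" in
        1|2|3|4) ;;
        *) echo "DATASET must be 1 to 4" >&2; return 1 ;;
    esac
    # An empty SESSION selects all sessions of the chosen set.
    if [ -z "$session" ]; then
        return 0
    fi
    case "$session" in
        *[!0-9]*) echo "SESSION must be a number or ''" >&2; return 1 ;;
    esac
    # [ ... -lt ... ] compares in base 10, so a value like '09' is fine.
    if [ "$session" -lt "$lo" ] || [ "$session" -gt "$hi" ]; then
        echo "SESSION for $set_name must be between $lo and $hi" >&2
        return 1
    fi
}
```

Placed after the variable definitions, a line such as `check_spear_params "$SET" "$DATASET" "$SESSION" || exit 1` would stop the run before any processing starts. MINUTE is not checked, since its valid range depends on the session.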

The rest of the script computes the enhancement, the metrics evaluation and the plots for both the reference (passthrough) and the desired processing method (`PROCESSING`), using the following commands:

```shell
python spear_enhance.py <input-root> <audio-dir> --method_name <method-name> --list_cases <list-cases>
python spear_evaluate.py <input-root> <audio-dir> <segments-csv> <metrics-csv> --list_cases <list-cases>
python spear_visualise.py <output-root> <metrics-csv-ref> <metrics-proc> --reference_name <reference-name> --method_name <method-name>
```

| Category | Metric | Metric Abbreviation | Reference | Python Package |
|---|---|---|---|---|
| SNR | Signal to Noise Ratio | SNR | - | PySEPM |
| SNR | Frequency weighted Segmental SNR | fwSegSNR | Hu et al. 2008 [1] | PySEPM |
| SI | Short Time Objective Intelligibility | STOI | Taal et al. 2011 [2] | PYSTOI |
| SI | Extended STOI | ESTOI | Jensen et al. 2016 [3] | PYSTOI |
| SI | Modified Binaural STOI | MBSTOI | Andersen et al. 2018 [4] | CLARITY |
| SI | Speech to Artifacts Ratio | SAR | Vincent et al. 2006 [5] | Speech Metric |
| SI | Image to Spatial Ratio | ISR | Vincent et al. 2006 [5] | Speech Metric |
| SI | Speech to Distortion Ratio | SDR | Vincent et al. 2006 [5] | Speech Metric |
| SI | Scale Invariant SDR | SI-SDR | Le Roux et al. 2019 [6] | Speech Metric |
| SI | Hearing Aid Speech Perception Index | HASPI | Kates et al. 2014 [7] [8] | CLARITY |
| SQ | Perceptual Evaluation of Speech Quality | PESQ | Rix et al. 2001 [9] | Speech Metric |
| SQ | PESQ Narrow Band | PESQ-NB | Rix et al. 2001 [9] | Speech Metric |

Footnotes

1. Y. Hu and P. C. Loizou, “Evaluation of objective quality measures for speech enhancement,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 16, no. 1, pp. 229–238, 2008.
2. C. H. Taal, R. C. Hendriks, R. Heusdens, and J. Jensen, “An algorithm for intelligibility prediction of time–frequency weighted noisy speech,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 19, no. 7, pp. 2125–2136, 2011.
3. J. Jensen and C. H. Taal, “An algorithm for predicting the intelligibility of speech masked by modulated noise maskers,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 24, no. 11, pp. 2009–2022, 2016.
4. A. H. Andersen, J. M. de Haan, Z.-H. Tan, and J. Jensen, “Refinement and validation of the binaural short time objective intelligibility measure for spatially diverse conditions,” Speech Communication, vol. 102, pp. 1–13, 2018.
5. E. Vincent, R. Gribonval, and C. Févotte, “Performance measurement in blind audio source separation,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 14, no. 4, pp. 1462–1469, 2006.
6. J. Barker, S. Graetzer, and T. Cox, “Software to support the 1st Clarity Enhancement Challenge [software and data collection],” https://doi.org/10.5281/zenodo.4593856, 2021.
7. J. M. Kates and K. H. Arehart, “The hearing-aid speech perception index (HASPI),” Speech Communication, vol. 65, pp. 75–93, 2014.
8. J. M. Kates and K. H. Arehart, “The hearing-aid speech perception index (HASPI) version 2,” Speech Communication, vol. 131, pp. 35–46, 2021.
9. A. Rix, J. Beerends, M. Hollier, and A. Hekstra, “Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs,” in Proc. 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), vol. 2, pp. 749–752, 2001.