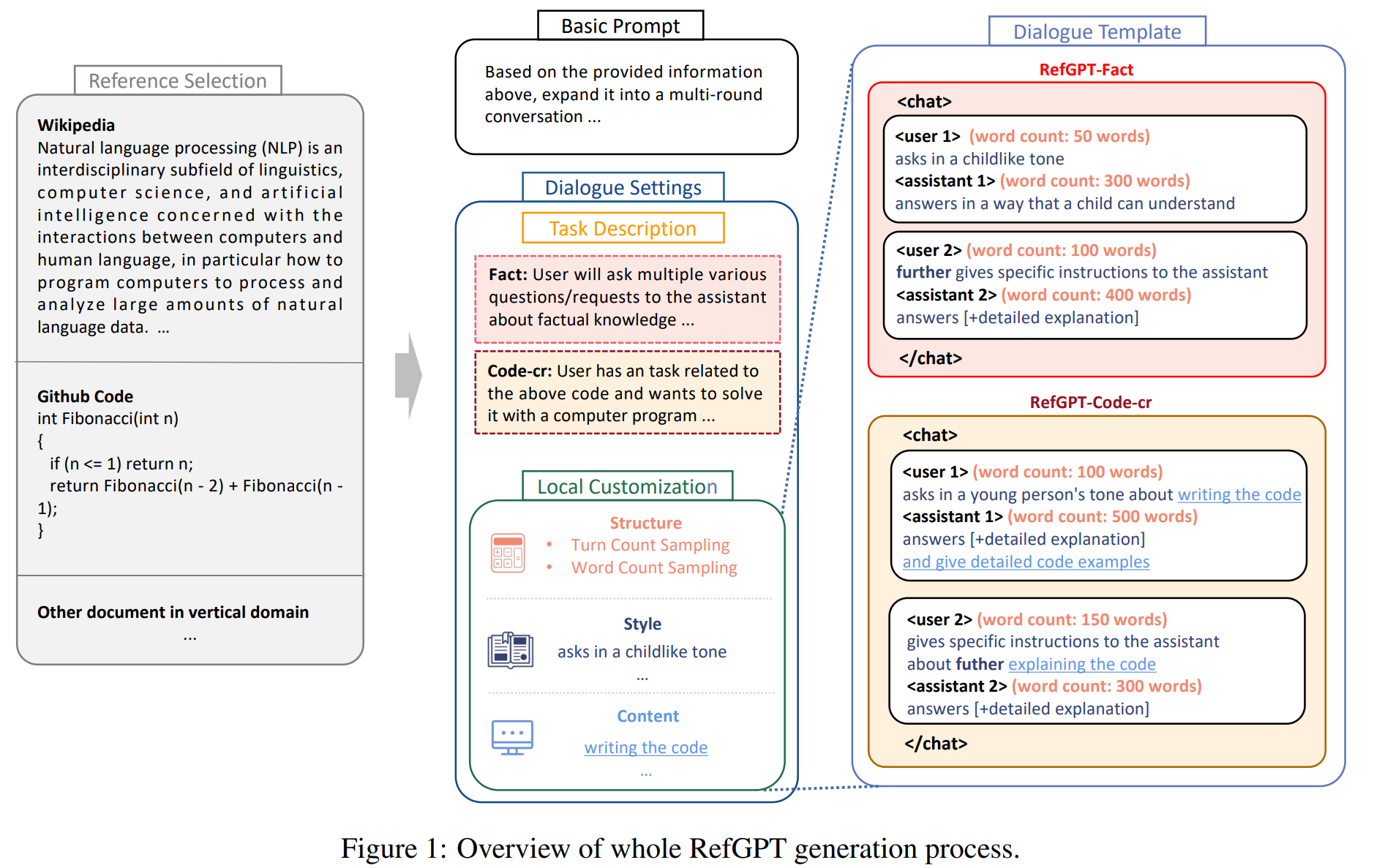

General chat models such as ChatGPT have attained an impressive ability to solve a wide range of NLP tasks, achieved by tuning Large Language Models (LLMs) on high-quality instruction data. However, collecting high-quality human-written data, especially multi-turn dialogues, is expensive and out of reach for most people. Although previous studies have used powerful LLMs to generate dialogues automatically, they all suffer from generating untruthful dialogues because of LLM hallucination. We therefore propose a method called RefGPT to generate large numbers of truthful and customized dialogues without worrying about factual errors caused by model hallucination. RefGPT tackles hallucination in dialogue generation by restricting the LLM to use the given reference instead of reciting its own knowledge. Additionally, RefGPT adds detailed controls on every utterance to enable a high degree of customization, which previous studies have ignored. Based on RefGPT, we also propose two high-quality dialogue datasets generated by GPT-4, namely RefGPT-Fact and RefGPT-Code. RefGPT-Fact is a dataset of 100k multi-turn dialogues based on factual knowledge, and RefGPT-Code is a dataset of 76k multi-turn dialogues covering a wide range of coding scenarios.
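To make the two ideas above concrete (grounding generation in a given reference, and attaching a control to every utterance), here is a minimal sketch of how such a prompt could be assembled. It is not the RefGPT prompt or code: the `UtteranceControl` fields (`role`, `style`, `max_words`), the `build_reference_prompt` helper, and the prompt wording are all hypothetical, chosen only to illustrate the shape of a reference-constrained, per-turn-controlled request.

```python
from dataclasses import dataclass


@dataclass
class UtteranceControl:
    """Hypothetical per-turn control: who speaks, in what style, and a rough length budget."""
    role: str       # "user" or "assistant"
    style: str      # e.g. "asks a follow-up question", "answers in a numbered list"
    max_words: int  # rough upper bound on the length of this utterance


def build_reference_prompt(reference: str, controls: list[UtteranceControl]) -> str:
    """Assemble a prompt asking an LLM to write a dialogue grounded only in `reference`.

    The wording below is illustrative, not the prompt used by RefGPT.
    """
    lines = [
        "Write a multi-turn dialogue between a user and an assistant.",
        "Use ONLY the facts in the reference below; do not add outside knowledge.",
        "",
        "<reference>",
        reference.strip(),
        "</reference>",
        "",
        "Follow this outline, one line per utterance:",
    ]
    # One outline line per utterance, so every turn carries its own control.
    for i, c in enumerate(controls, start=1):
        lines.append(f"{i}. [{c.role}] {c.style} (at most {c.max_words} words)")
    return "\n".join(lines)


if __name__ == "__main__":
    ref = "The Eiffel Tower is located in Paris."
    outline = [
        UtteranceControl("user", "asks where the tower is", 15),
        UtteranceControl("assistant", "answers in one sentence", 30),
    ]
    print(build_reference_prompt(ref, outline))
```

Keeping the controls as a list of small records makes it easy to vary the number of turns, the speaker order, and the style of each turn independently, which is the kind of customization the method description refers to.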

- April 15th, we released the initial version of the RefGPT-Fact-CN dataset.

- May 25th, we released the paper and announced the new RefGPT-Fact dataset and the RefGPT-Code dataset.

- May 26th, we released the RefGPT-Fact dataset and the RefGPT-Code dataset on the Hugging Face Hub. [download]

- Future: We will release our code soon.

RefGPT-Fact [download]

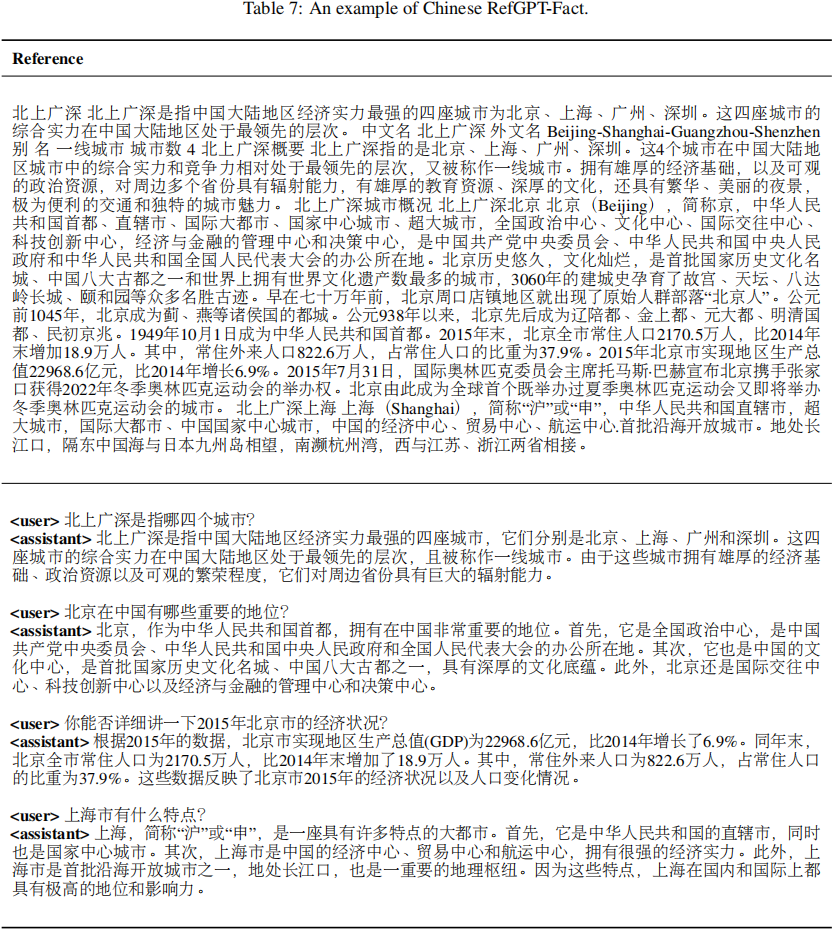

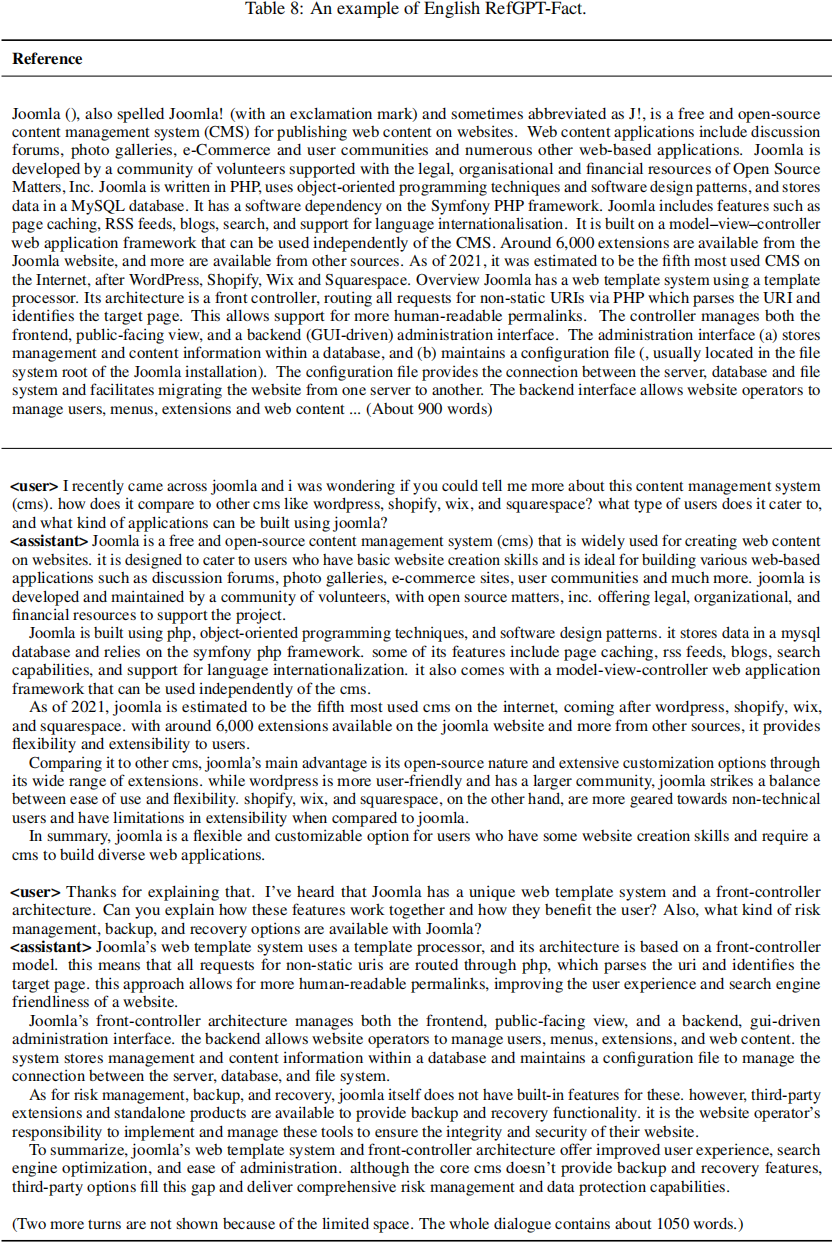

RefGPT-Fact is a dataset containing 100k multi-turn dialogues about factual knowledge: 50k in English and 50k in Chinese. The English version uses English Wikipedia as the reference, and the Chinese version uses Baidu Baike, a widely used Chinese online encyclopedia.

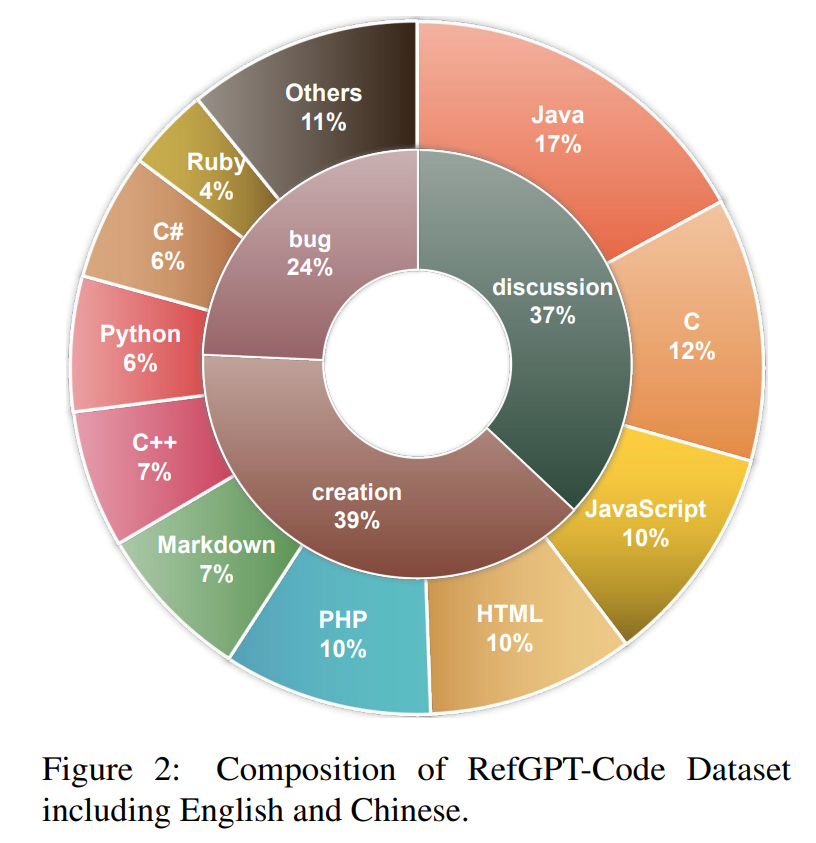

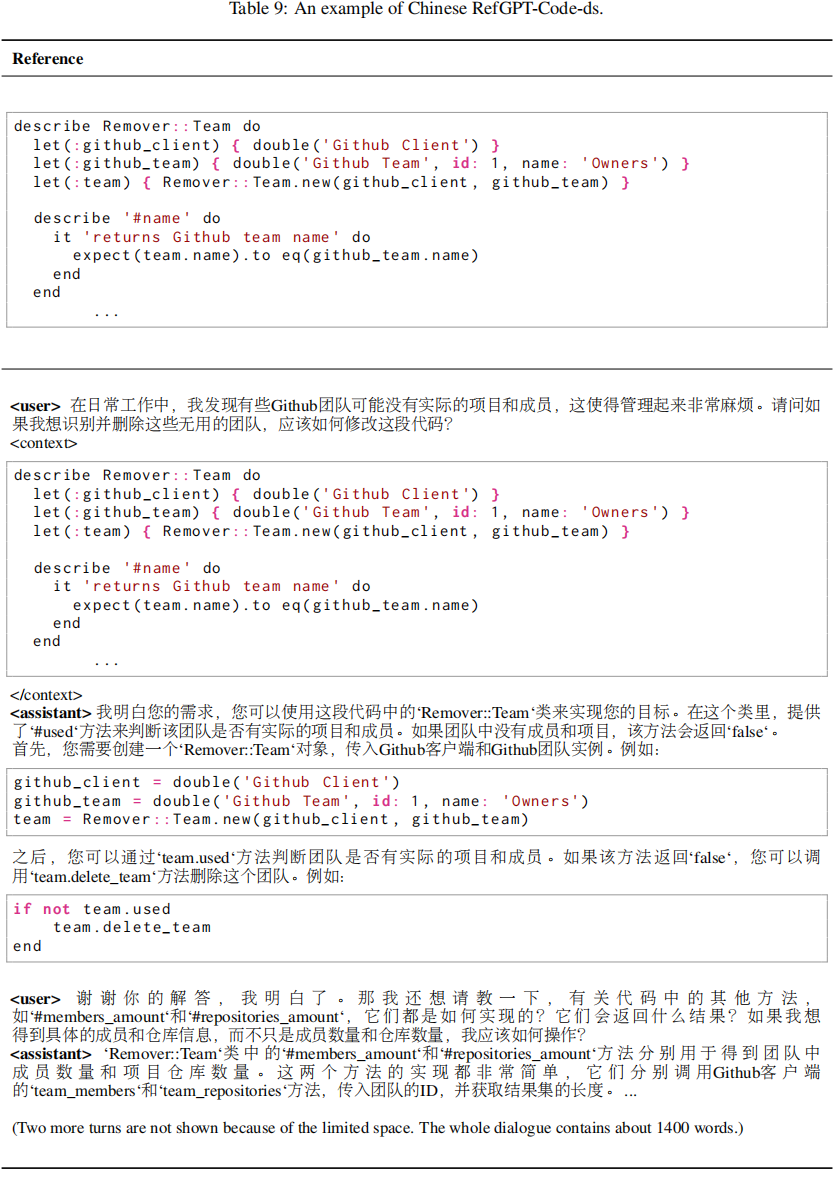

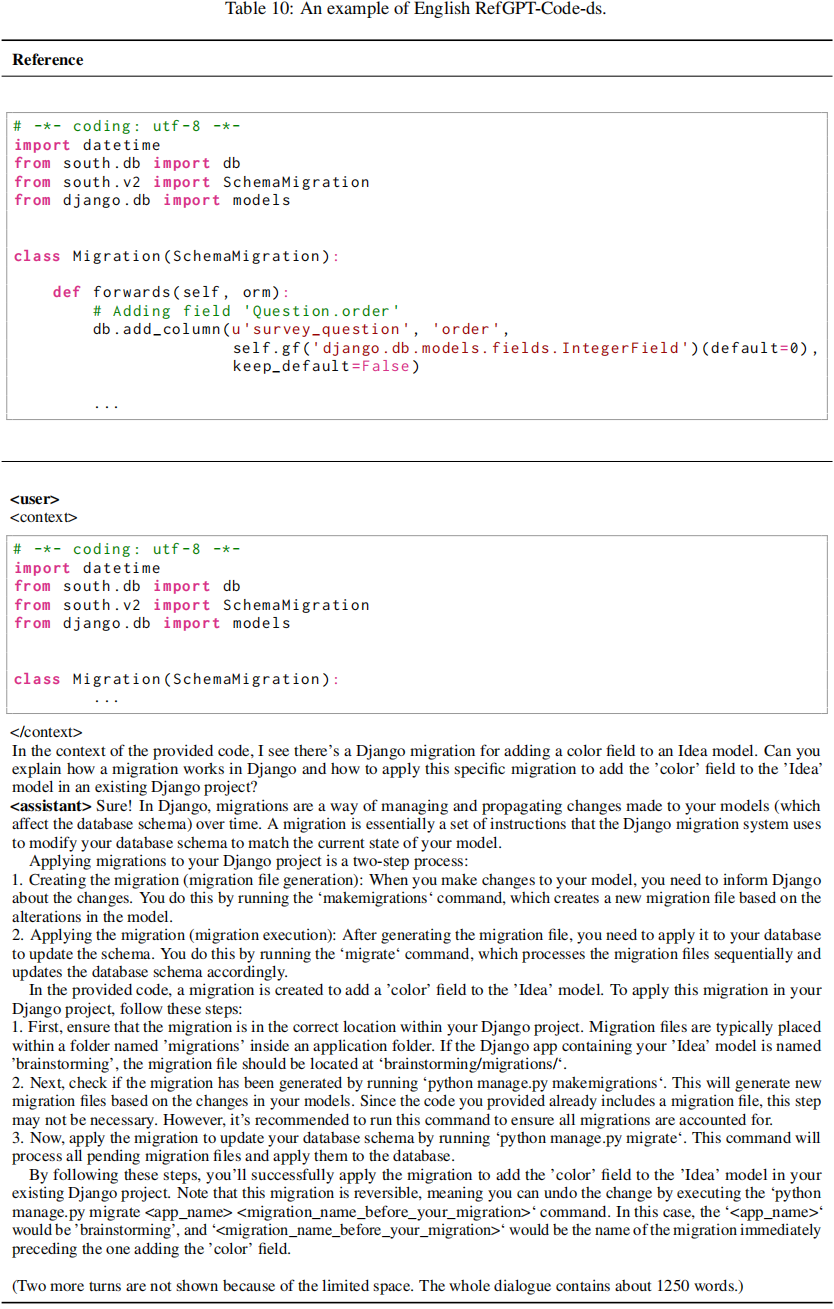

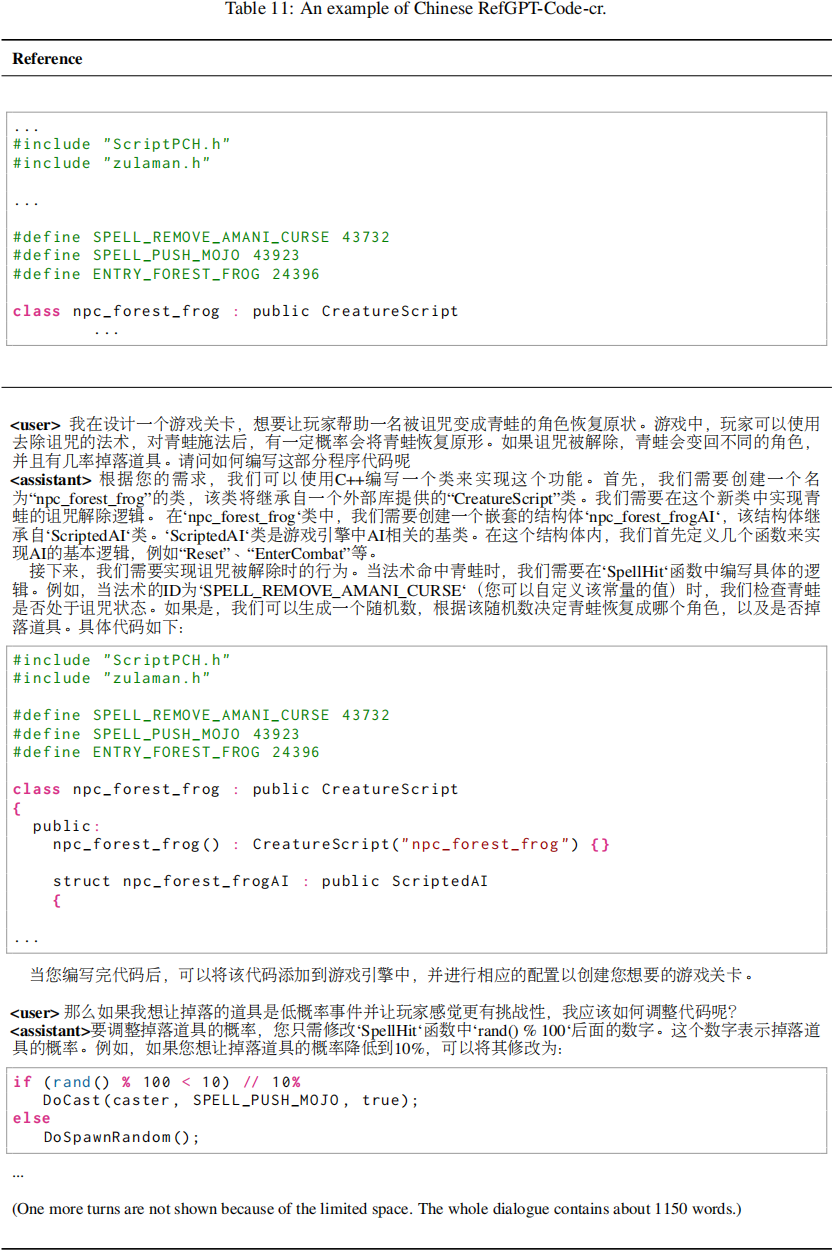

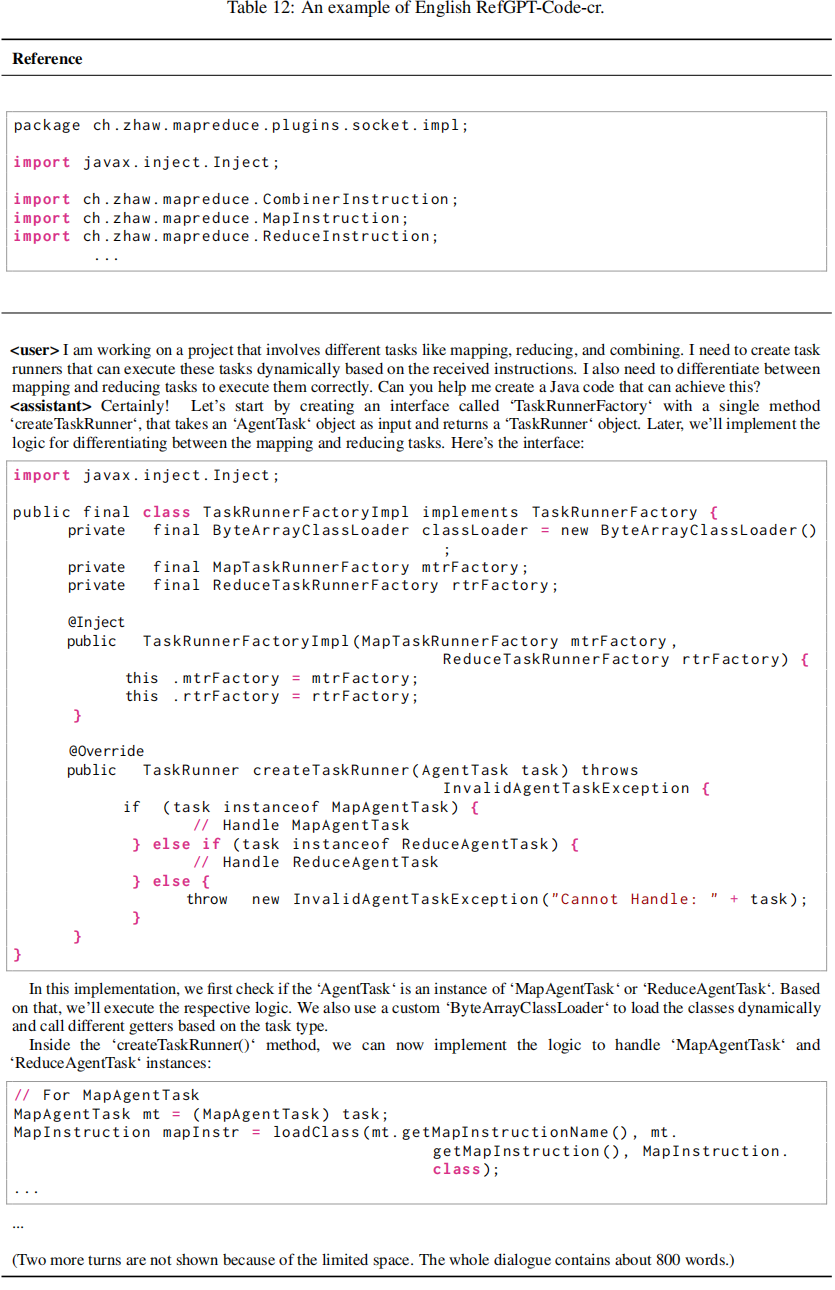

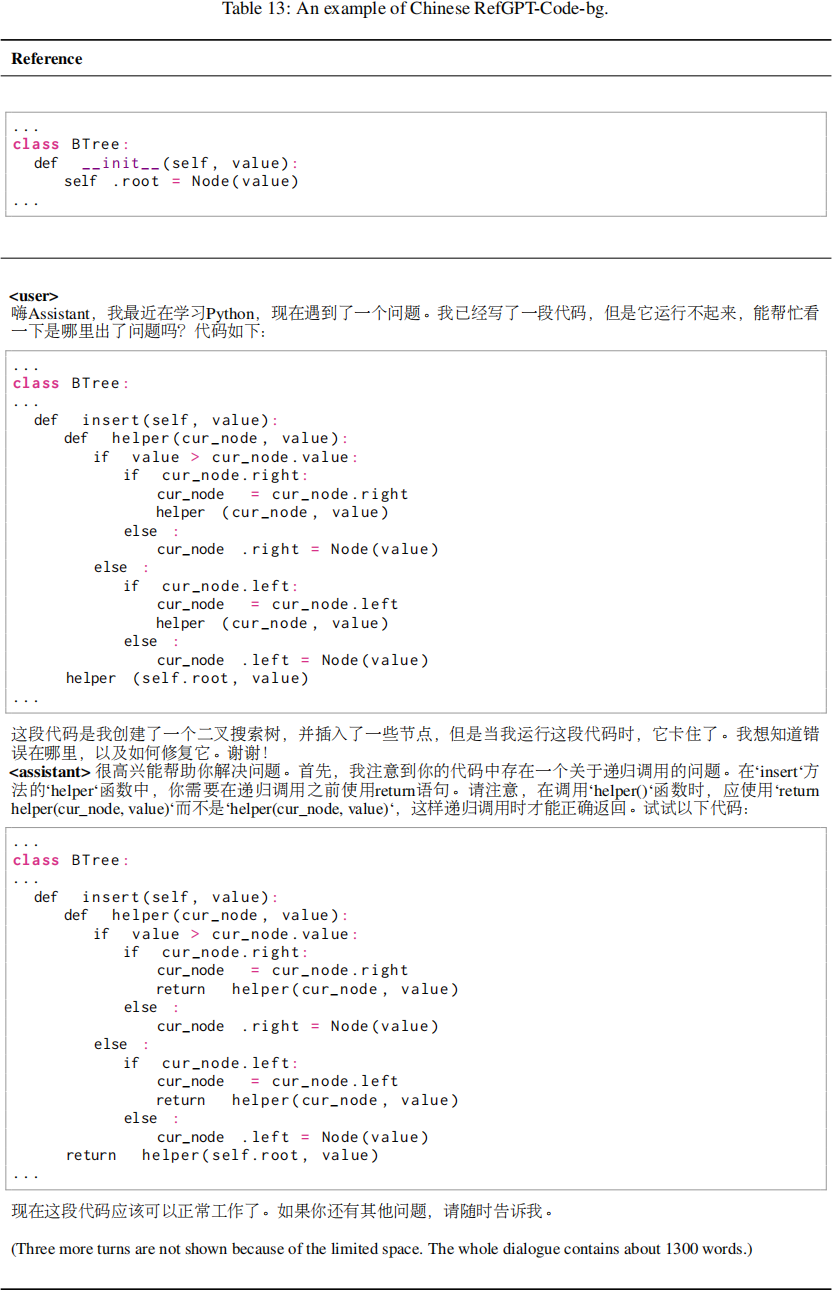

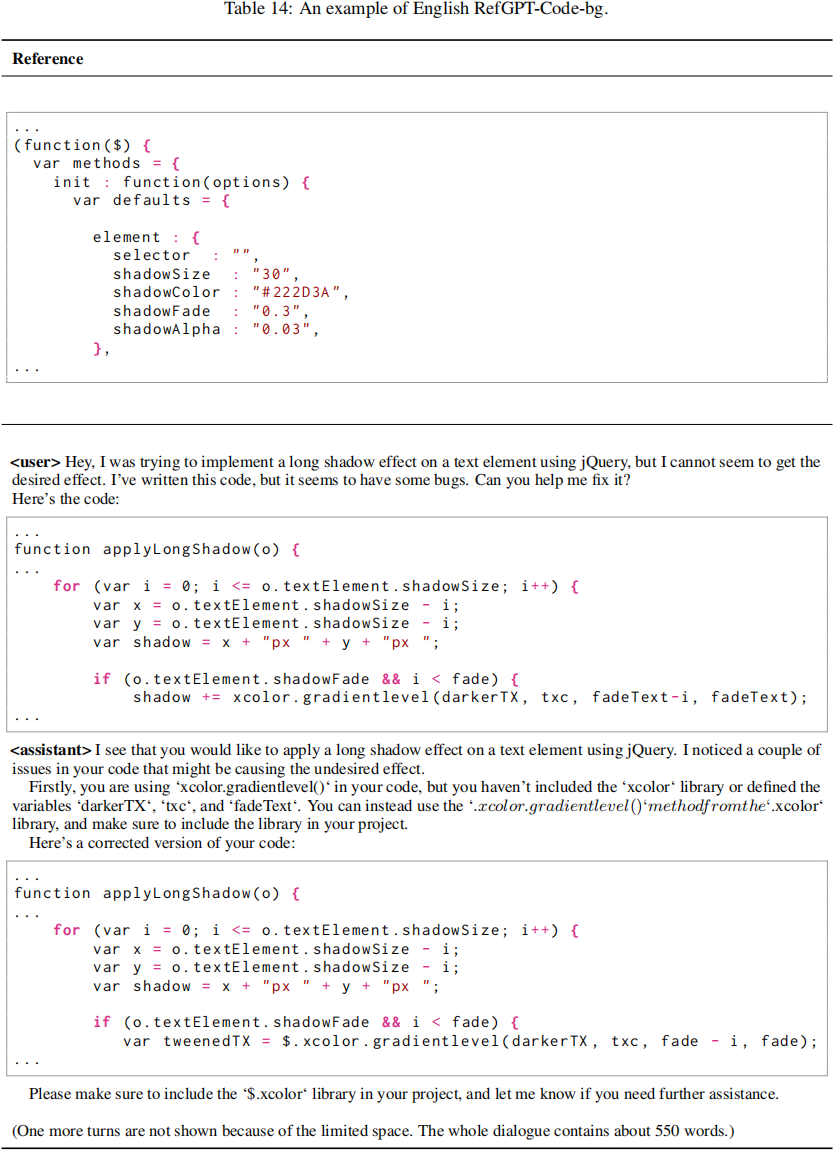

RefGPT-Code is a dataset containing 76k multi-turn dialogues about programming (37k in English and 39k in Chinese), covering most code usage scenarios and multiple programming languages, as shown in Figure 2. Both the English and Chinese versions use the public GitHub dataset on Google BigQuery, with no overlap between the two languages. RefGPT-Code derives several ways of using program code as the reference to enable different scenarios. We consider three perspectives in RefGPT-Code: code discussion, code creation, and bug fixing.
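As an illustration of how one code reference can feed the three perspectives listed above, the sketch below pairs a reference snippet with a scenario-specific instruction. Only the three scenario names come from this README; the `CodeScenario` enum, the `build_code_prompt` helper, and the instruction wording are hypothetical and do not reproduce how RefGPT-Code was actually built.

```python
from enum import Enum


class CodeScenario(Enum):
    """The three perspectives named in the README; everything else here is hypothetical."""
    DISCUSSION = "code discussion"
    CREATION = "code creation"
    BUG_FIXING = "bug fixing"


# Hypothetical instruction text per scenario; not the wording used for RefGPT-Code.
_INSTRUCTIONS = {
    CodeScenario.DISCUSSION: "The user asks what the reference code does; the assistant explains it.",
    CodeScenario.CREATION: "The user describes a goal; the assistant writes code based on the reference.",
    CodeScenario.BUG_FIXING: "The user reports a problem in code like the reference; the assistant diagnoses and fixes it.",
}


def build_code_prompt(code: str, language: str, scenario: CodeScenario) -> str:
    """Wrap a code reference with a scenario-specific instruction (illustrative only)."""
    return "\n".join([
        f"Scenario: {scenario.value}",
        _INSTRUCTIONS[scenario],
        "Ground every code snippet in the dialogue in the reference below.",
        f'<reference language="{language}">',
        code.rstrip(),  # drop trailing newlines so the closing tag follows directly
        "</reference>",
    ])


if __name__ == "__main__":
    snippet = "def add(a, b):\n    return a + b\n"
    for s in CodeScenario:
        print(build_code_prompt(snippet, "python", s))
        print()
```

The same snippet yields three different generation tasks, which is one simple way a single code reference could be reused across scenarios.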

Because RefGPT-Fact and RefGPT-Code are built from references such as Wikipedia and GitHub repositories, it is unavoidable that a reference may itself contain factual errors or typos, or, in the case of GitHub repositories, bugs and malicious code. The datasets may also reflect the biases of the selected references and of the GPT-3.5/GPT-4 models.

Please note that the RefGPT datasets, including RefGPT-Fact and RefGPT-Code, have not undergone manual verification, so their security cannot be strictly guaranteed. Users are responsible for the results generated using this data.

- Dongjie Yang, djyang.tony@sjtu.edu.cn

- Ruifeng Yuan, ruifeng.yuan@connect.polyu.hk

- Yuantao Fan, yuantaofan@bupt.edu.cn

- Yifei Yang, yifeiyang@sjtu.edu.cn

- Zili Wang, ziliwang.do@gmail.com

- Shusen Wang, wssatzju@gmail.com

- Hai Zhao, zhaohai@cs.sjtu.edu.cn

If your work takes inspiration from or makes use of our method or data, we kindly ask that you acknowledge and cite our paper.

@misc{yang2023refgpt,
  title={RefGPT: Reference -> Truthful & Customized Dialogues Generation by GPTs and for GPTs},
  author={Dongjie Yang and Ruifeng Yuan and YuanTao Fan and YiFei Yang and Zili Wang and Shusen Wang and Hai Zhao},
  year={2023},
  eprint={2305.14994},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}