This repo provides everything needed for a self-contained, local PySpark 1-node "cluster" running on your laptop, including a Jupyter notebook environment.

It uses Visual Studio Code and the devcontainer feature to run the Spark/Jupyter server in Docker, connected to a VS Code dev environment frontend.
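Once the tools from the steps below are installed, a quick way to confirm they are reachable from your shell is a small check like the following. This is only a sketch and not part of the repo: the command names `docker`, `git`, and `code` are assumptions (`code` is only on your PATH if VS Code's shell command is installed), and the helper names `find_tools` and `missing_tools` are hypothetical.

```python
import shutil

# Assumed command names for Docker, Git, and the VS Code CLI.
# Adjust these if your installation exposes different commands.
REQUIRED_TOOLS = ("docker", "git", "code")


def find_tools(names=REQUIRED_TOOLS):
    """Map each command name to its resolved path, or None if not on PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names=REQUIRED_TOOLS):
    """Return the command names that could not be found on PATH."""
    return [name for name, path in find_tools(names).items() if path is None]


if __name__ == "__main__":
    for name, path in find_tools().items():
        status = f"found at {path}" if path else "NOT FOUND"
        print(f"{name}: {status}")
```

A tool reported as not found may still be installed; it just is not visible on the PATH of the shell you ran the check from.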

- Install Docker Desktop (you don't have to be a Docker super-expert :-))
- Install Visual Studio Code
- Install the VS Code Remote Development pack
- Install required tools
- Git clone this repo to your laptop
- Open the local repo folder in VS Code

- Open the VS Code command palette and type or select 'Reopen in Container'
- Wait while the devcontainer is built and initialized; this may take several minutes
- Open test.ipynb in VS Code

-

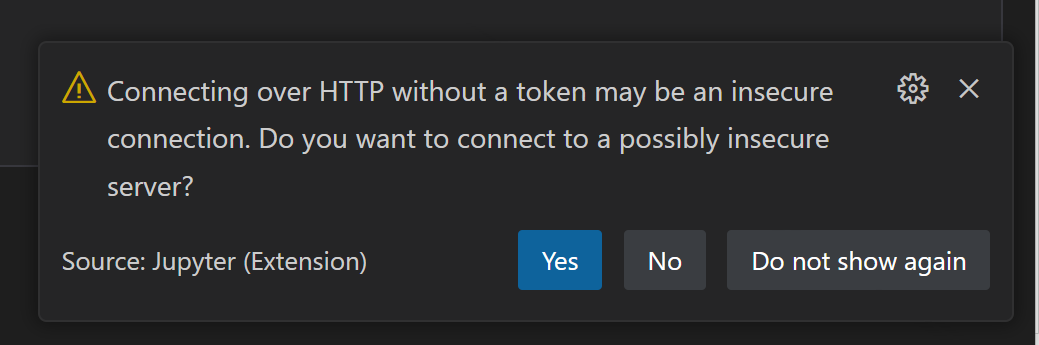

If you get an HTTP warning, click 'Yes'

-

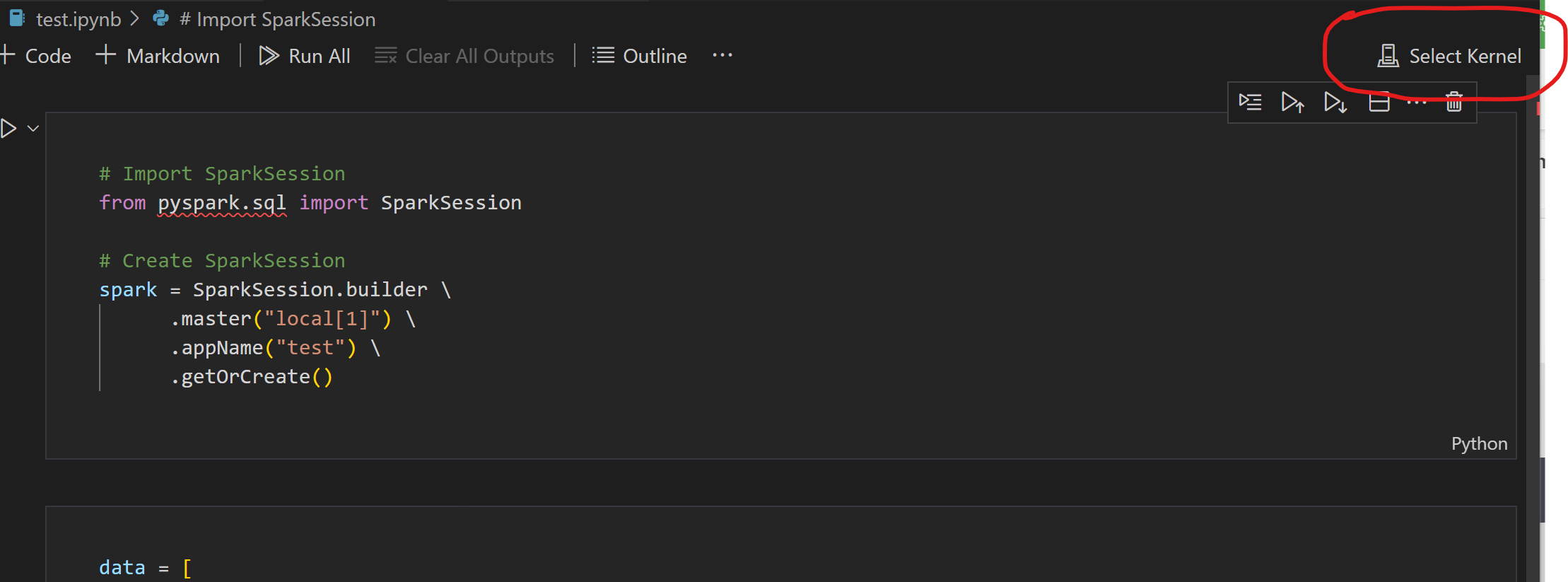

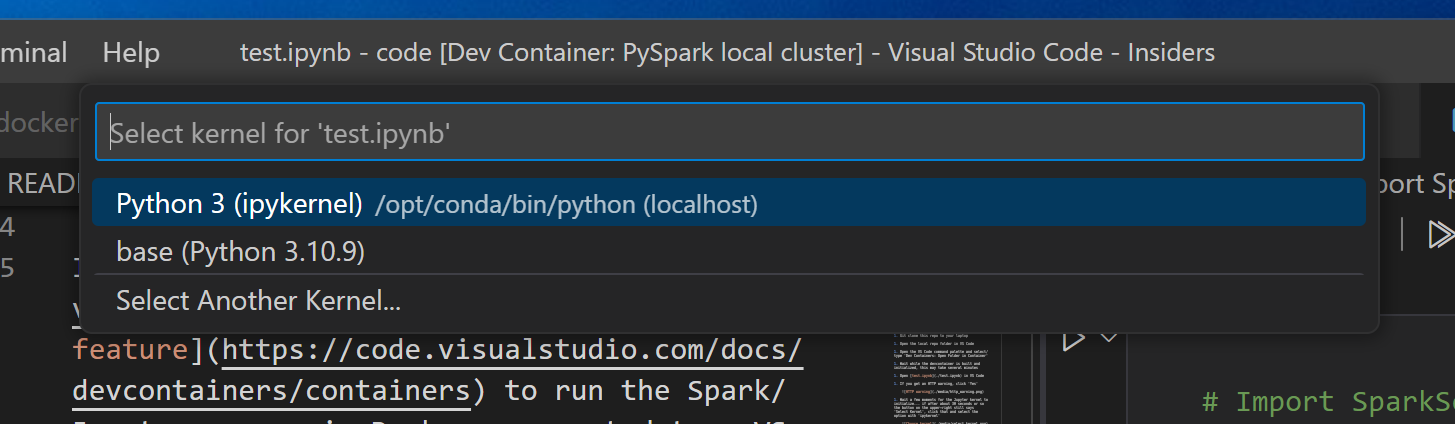

Wait a few moments for the Jupyter kernel to initialize... if after about 30 seconds or so the button on the upper-right still says 'Select Kernel', click that and select the option with 'ipykernel'

-

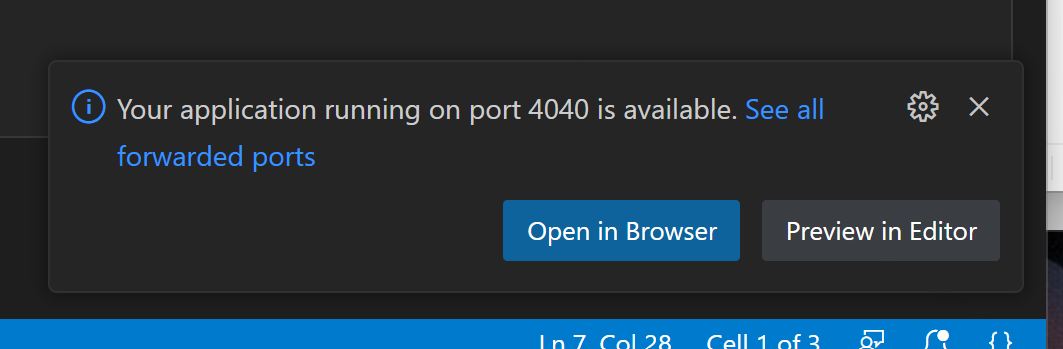

Run the first cell... it will take a few seconds to initialize the kernel and complete. You should see a message to browse to the Spark UI... click that for details of how your Spark session executes the work defined in your notebook on your 1-node Spark "cluster"

-

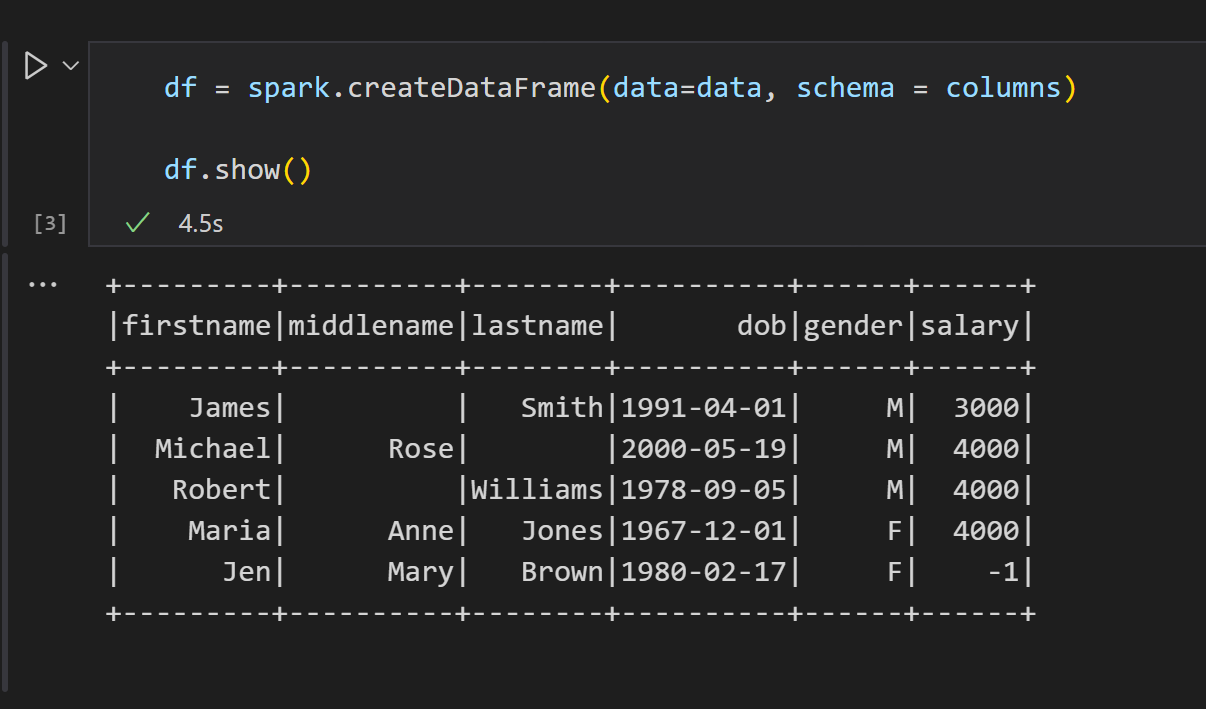

Run the remaining cells in the notebook, in order... see the output of cell 3

- Have fun exploring PySpark!
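If you want a starting point for your own notebook, here is a minimal sketch of starting a local, single-node Spark session and printing the Spark UI address. It is not the code in test.ipynb: the function names `local_master`, `start_local_session`, and `demo`, and the app name `local-sketch`, are hypothetical, and it assumes `pyspark` is installed in the container's Python environment.

```python
def local_master(cores=None):
    """Spark master URL for local mode: all cores, or a fixed number of cores."""
    return "local[*]" if cores is None else f"local[{cores}]"


def start_local_session(app_name="local-sketch", cores=None):
    """Create (or reuse) a SparkSession running in local mode."""
    # Imported here so the helpers above can be loaded without pyspark present.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master(local_master(cores))
        .appName(app_name)
        .getOrCreate()
    )


def demo():
    """Start a session, show where the Spark UI lives, and run a tiny job."""
    spark = start_local_session()
    print("Spark UI:", spark.sparkContext.uiWebUrl)

    # A small job so that something shows up in the Spark UI.
    df = spark.range(10)
    print("Even ids:", df.filter(df.id % 2 == 0).count())

    spark.stop()
```

Calling `demo()` in a notebook cell runs the whole thing. Passing `cores=2` to `start_local_session` limits local mode to two worker threads, which can be handy when comparing task parallelism in the Spark UI.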