Novel application: the study of crashworthiness based on computer vision

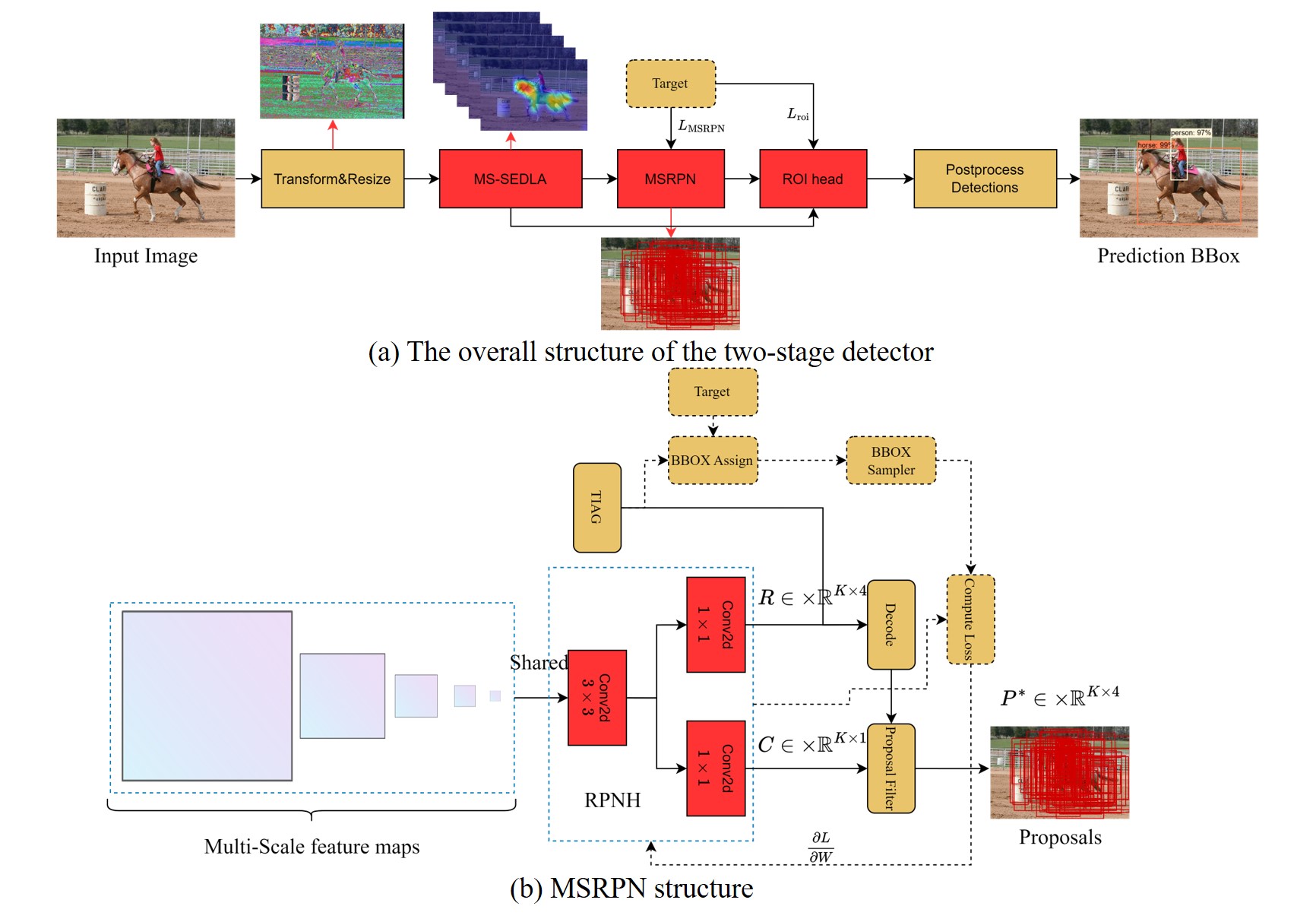

We develop a new robust two-stage detector that uses the Squeeze-and-Excitation Deep Layer Aggregation network (SEDLA) as its backbone and a rethinking mechanism in the scale-fusion neck. The project is organized as follows:

    ├─.idea
    │  └─inspectionProfiles
    ├─backbone
    │  ├─pretrain
    │  └─__pycache__
    ├─data
    ├─demo
    ├─exe
    ├─my_dataset
    │  └─__pycache__
    ├─network_files
    │  └─__pycache__
    ├─save_weights
    └─train_utils
        └─__pycache__

Train directly

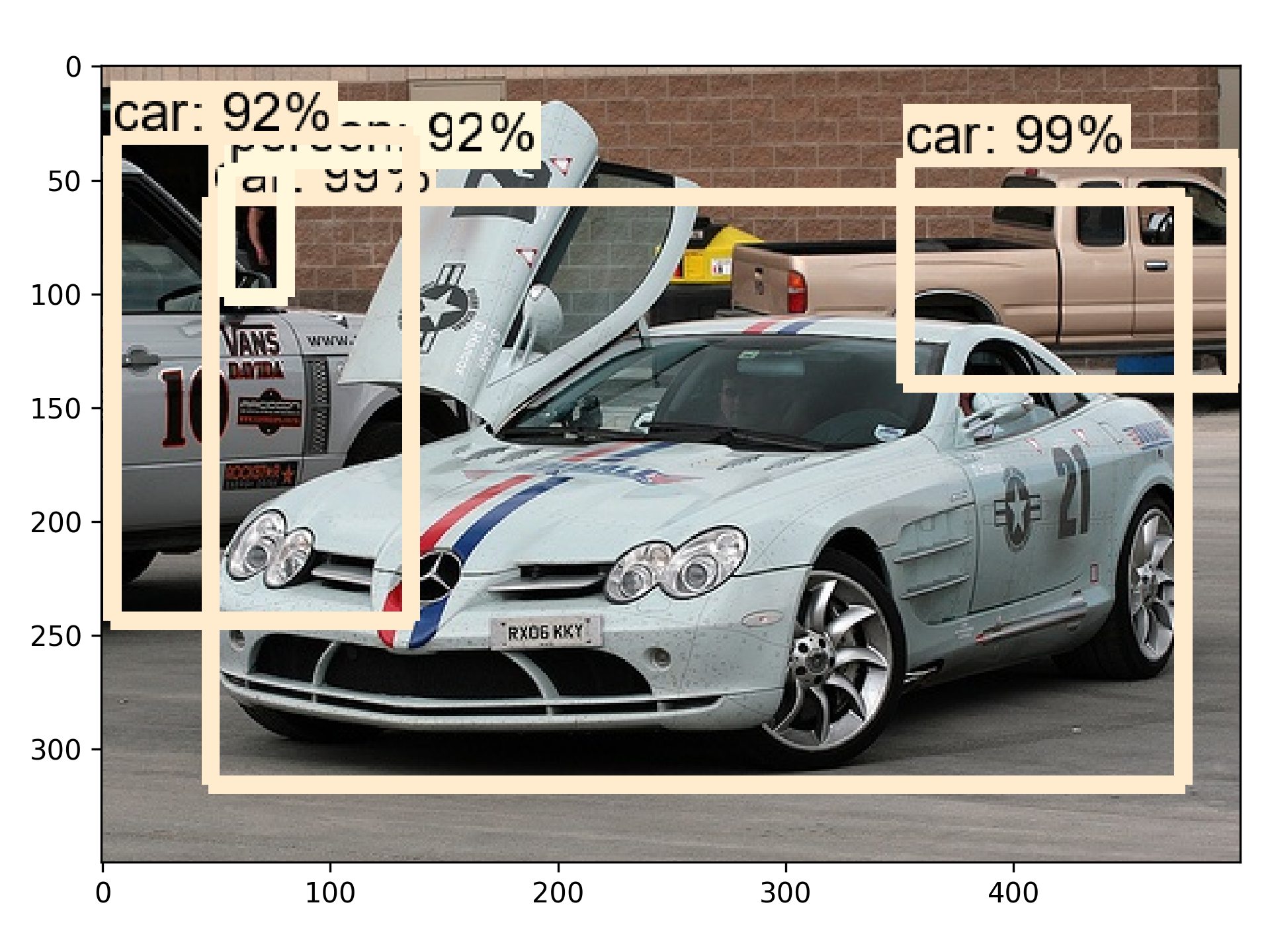

We ran experiments on the small VOC dataset and reached an mAP@0.5 of 81.6, a result in the top 10 of the Tensorboard. To train the model directly, run train_engine. Your data must be placed in the [data](data) directory in VOC format, and the pre-trained weights for the DLA backbone, available from Google Cloud Disk or url, must be placed in the pretrain directory (backbone/pretrain).
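Before starting a run, it can help to confirm that the dataset really follows the VOC layout. The sketch below checks for the sub-directories of a standard Pascal VOC release (Annotations, JPEGImages, ImageSets/Main). The helper name `missing_voc_dirs` and the path `data/VOCdevkit/VOC2007` are assumptions made for illustration; train_engine may expect a different root.

```python
from pathlib import Path

# Sub-directories found in a standard Pascal VOC release (e.g. VOC2007).
VOC_SUBDIRS = ("Annotations", "JPEGImages", "ImageSets/Main")


def missing_voc_dirs(voc_root):
    """Return the expected VOC sub-directories that are absent under voc_root."""
    root = Path(voc_root)
    return [d for d in VOC_SUBDIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    # Assumed location; adjust to wherever your dataset actually lives.
    missing = missing_voc_dirs("data/VOCdevkit/VOC2007")
    print("missing:", missing if missing else "none")
```

An empty list means the three directories exist; it does not validate the annotation files themselves.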

You can switch between two training modes: VOC07trainval-VOC07test (VOC07) and VOC07trainval+VOC12trainval12 (VOC07+12). To switch, modify line 91 and line 125 in train_engine.

Continue training

To continue training, download the trained weight file from Google Cloud Disk, put it into save_weights, and set resume in train_engine to point to the weights file.

Train your own data

First, create your dataset in VOC format. You can also use the COCO format, but then you need to rewrite datasets.py; for reading COCO datasets, refer to pycocotools. Next, create a class file similar to [pascal_voc_classes.json](data/pascal_voc_classes.json); the file is read as a dictionary. Finally, modify the num_classes and class_path parameters to train on your dataset.
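As a rough illustration of the class file step, the sketch below writes a JSON mapping from class name to integer index and reads it back as a dictionary. The 1-based indexing (leaving 0 for background), the helper names, the file name `my_classes.json`, and the class names are all assumptions; compare against data/pascal_voc_classes.json before relying on this layout, and check whether num_classes in this project counts the background class.

```python
import json
from pathlib import Path


def write_class_file(class_names, path):
    """Write a name -> index mapping as JSON (assumed: indices start at 1)."""
    mapping = {name: i for i, name in enumerate(class_names, start=1)}
    Path(path).write_text(json.dumps(mapping, indent=4), encoding="utf-8")
    return mapping


def read_class_file(path):
    """Read the JSON class file back as a dictionary."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    classes = ["dent", "crack"]  # hypothetical class names
    write_class_file(classes, "my_classes.json")
    class_dict = read_class_file("my_classes.json")
    print(class_dict, "foreground classes:", len(class_dict))
```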

Evaluation works like training: modify num_classes and label_json_path, and the evaluation generates a text file, record_mAP.txt.
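For readers unfamiliar with the mAP@0.5 figure reported above: the 0.5 refers to the intersection-over-union (IoU) threshold at which a predicted box of the right class counts as a match for a ground-truth box. The sketch below shows only that matching criterion, not this project's evaluation code; the function names and the (xmin, ymin, xmax, ymax) box convention are assumptions for illustration.

```python
def box_iou(a, b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    # Corners of the intersection rectangle.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height are clamped to zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, threshold=0.5):
    """True when the predicted box overlaps the ground truth enough to count."""
    return box_iou(pred_box, gt_box) >= threshold


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    print(is_match((2, 0, 12, 10), gt))  # overlap 80 / union 120
    print(is_match((5, 0, 15, 10), gt))  # overlap 50 / union 150
```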

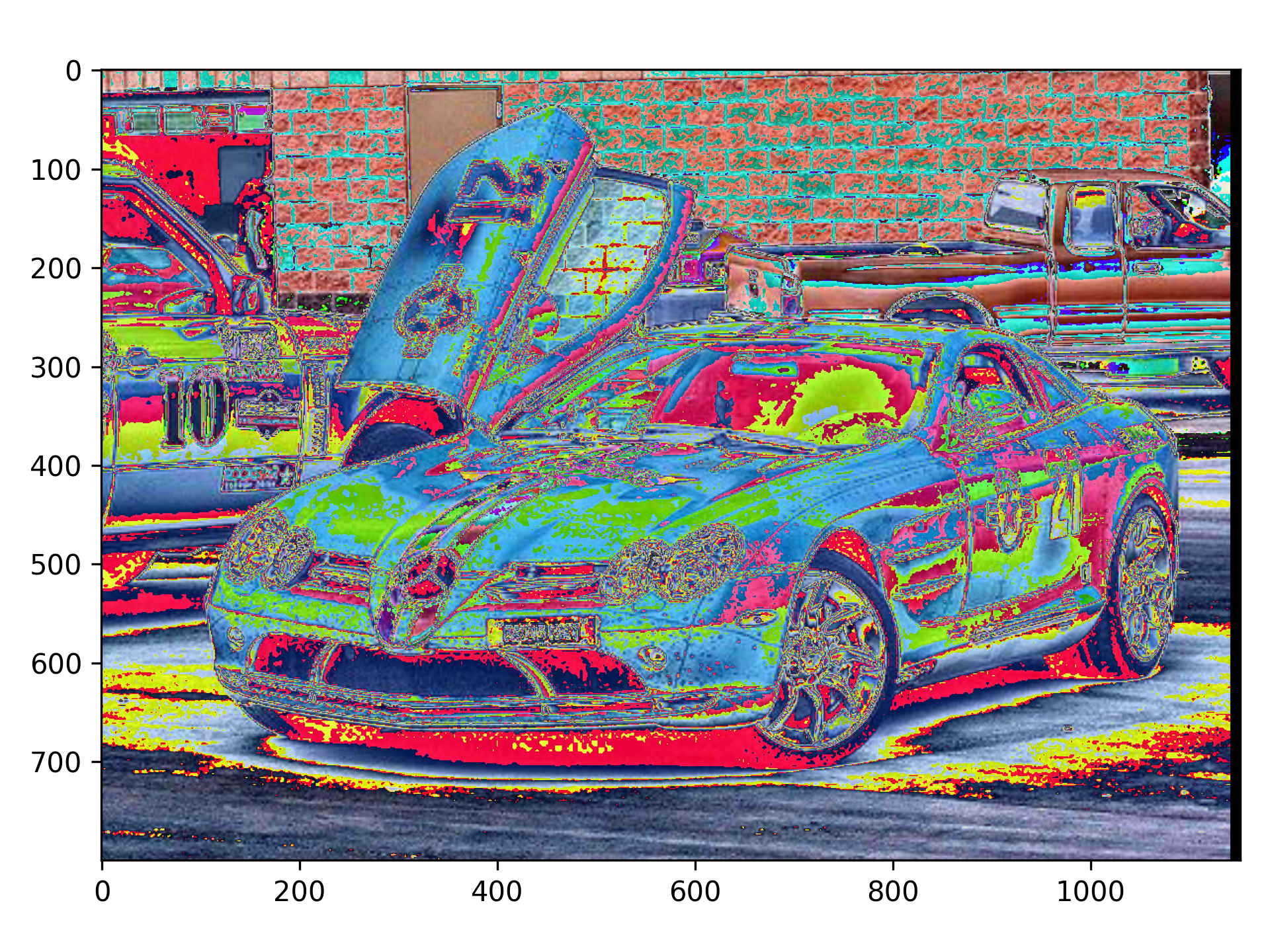

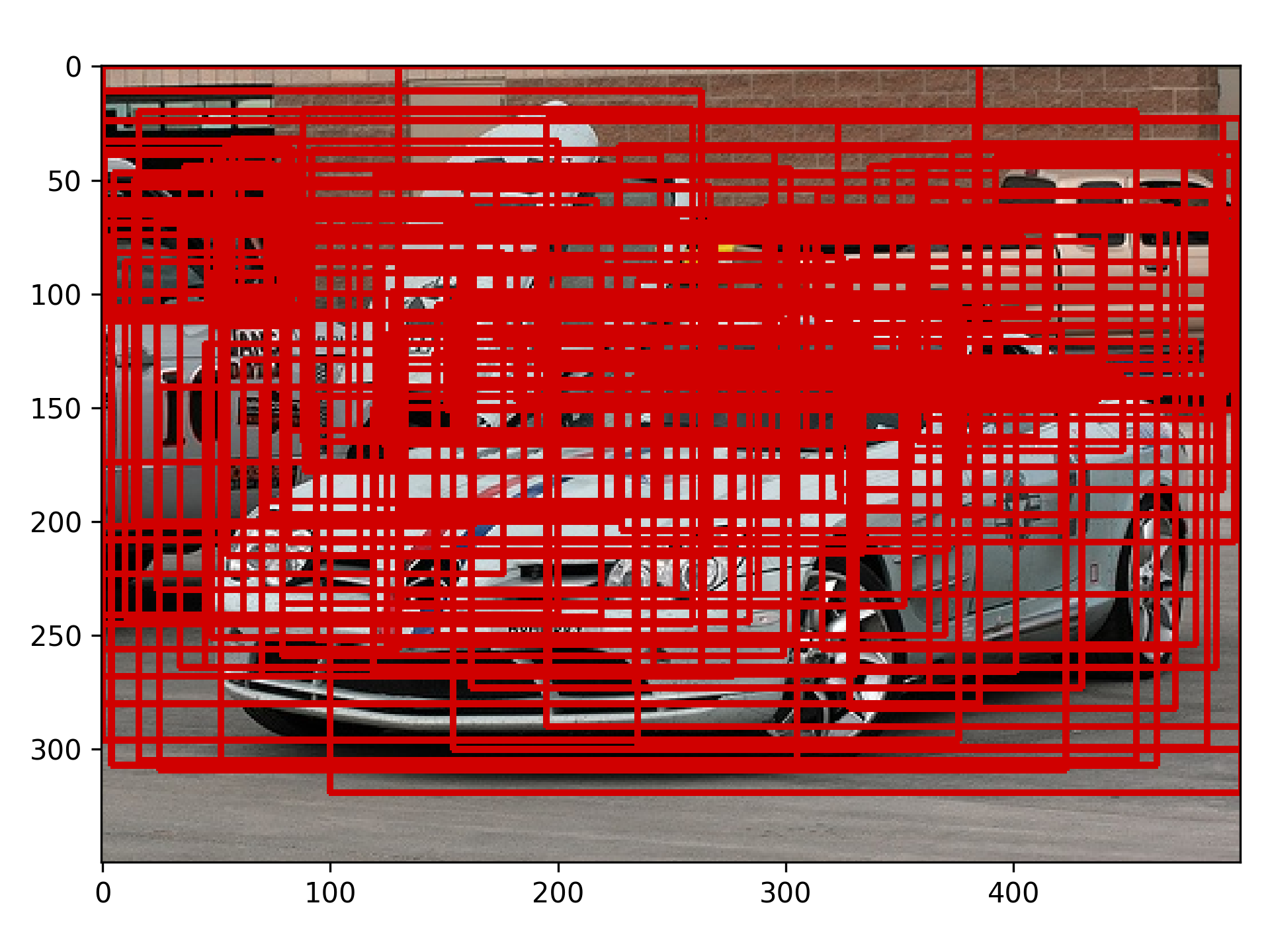

Run predict.py on the images in the demo folder to generate interpretable heat maps, proposal coverage maps, data augmentation images, and detection results. The results are stored in the exe directory.

Without Grad-CAM:

Grad-CAM (EigenCAM) [URL]

DLA BASE [URL]

RFPN [URL]

Others [URL]

MIT © YanjieWen