Study notes on *Mathematics for 3D Game Programming and Computer Graphics* by Eric Lengyel (2011)

| Quantity/Operation | Notation |
|---|---|
| Scalars | Italic letters |
| Angles | Italic Greek letters |
| Vectors | Boldface letters |
| Quaternions | Boldface letters |
| Matrices | Boldface letters |

📌Dot Products

The dot product between two $n$-dimensional vectors $P$ and $Q$ is the scalar $P\cdot Q=\sum_{i=1}^{n}P_iQ_i$.

📌Vector Projections

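The projection of a vector $P$ onto a vector $Q$ is $\operatorname{proj}_Q P=\dfrac{P\cdot Q}{\lVert Q\rVert^2}Q$, and the component of $P$ perpendicular to $Q$ is $\operatorname{perp}_Q P=P-\operatorname{proj}_Q P$.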

📌Cross Products

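The cross product between two 3D vectors $P$ and $Q$ is the 3D vector $P\times Q=\langle P_yQ_z-P_zQ_y,\ P_zQ_x-P_xQ_z,\ P_xQ_y-P_yQ_x\rangle$, which is perpendicular to both $P$ and $Q$.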

📌Gram-Schmidt Orthogonalization

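A basis $\mathcal{B}=\{e_1,e_2,\ldots,e_n\}$ is orthogonal if $e_i\cdot e_j=0$ whenever $i\neq j$. The Gram-Schmidt algorithm turns any linearly independent set into an orthogonal one using $e_i'=e_i-\sum_{k=1}^{i-1}\dfrac{e_i\cdot e_k'}{\lVert e_k'\rVert^2}e_k'$.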

📌 Matrix Products

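If $F$ is an $n\times m$ matrix and $G$ is an $m\times p$ matrix, then the product $FG$ is an $n\times p$ matrix whose $(i,j)$ entry is $(FG)_{ij}=\sum_{k=1}^{m}F_{ik}G_{kj}$.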

📌 Determinants

The determinant of an $n\times n$ matrix $M$ can be computed recursively by cofactor expansion along any column $k$ or any row $k$:

$$
\begin{align}
\det M &= \sum_{i=1}^{n}M_{ik}C_{ik}(M) && \text{(expansion along column } k\text{)}\\
\det M &= \sum_{j=1}^{n}M_{kj}C_{kj}(M) && \text{(expansion along row } k\text{)}
\end{align}
$$

where $C_{ij}(M)\equiv(-1)^{i+j}\det M^{\{i,j\}}$ is the cofactor of $M_{ij}$. Examples for $2\times2$ and $3\times3$ matrices:

$$
\begin{align}
\det\begin{bmatrix}a&b\\c&d\end{bmatrix} &= ad-bc\\
\det\begin{bmatrix}a&b&c\\d&e&f\\g&h&i\end{bmatrix} &= a\det\begin{bmatrix}e&f\\h&i\end{bmatrix}-b\det\begin{bmatrix}d&f\\g&i\end{bmatrix}+c\det\begin{bmatrix}d&e\\g&h\end{bmatrix}
\end{align}
$$

📌 Matrix Inverse

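An $n\times n$ matrix $M$ is invertible if and only if its rows form a linearly independent set, which is equivalent to $\det M\neq0$.

The entries of the inverse $G=F^{-1}$ of an $n\times n$ matrix $F$ can be computed from cofactors as $G_{ij}=\dfrac{C_{ji}(F)}{\det F}$.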

📌 Eigenvalues and Eigenvectors

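The eigenvalues of an $n\times n$ matrix $M$ are the roots of its characteristic polynomial $\det(M-\lambda I)$. An eigenvector $V$ associated with an eigenvalue $\lambda$ is a nonzero solution of the homogeneous linear system $(M-\lambda I)V=0$.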

📌 Diagonalization

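If $V_1,V_2,\ldots,V_n$ are linearly independent eigenvectors of an $n\times n$ matrix $M$ with corresponding eigenvalues $\lambda_1,\lambda_2,\ldots,\lambda_n$, then the matrix $A=\begin{bmatrix}V_1&V_2&\cdots&V_n\end{bmatrix}$ diagonalizes $M$: $A^{-1}MA$ is the diagonal matrix with entries $\lambda_1,\lambda_2,\ldots,\lambda_n$.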

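- An $n$-dimensional vector $V$ is an ordered list of $n$ scalars: $V=\langle V_1,V_2,\ldots,V_n\rangle$.
- Column picture: $V$ is represented by a matrix with one column and $n$ rows:

$$V=\begin{bmatrix}V_1\\V_2\\\vdots\\V_n\end{bmatrix}$$

- Row picture: a row vector is the transpose of the corresponding column vector: $V^T=\begin{bmatrix}V_1&V_2&\cdots&V_n\end{bmatrix}$.
- Scalar $a$ times vector $V$: $aV=\langle aV_1,aV_2,\ldots,aV_n\rangle$.
- Vector $P$ plus vector $Q$ (element-wise operation): $P+Q=\langle P_1+Q_1,P_2+Q_2,\ldots,P_n+Q_n\rangle$.
- The magnitude (norm) $\lVert V\rVert$ of an $n$-dimensional vector $V$ is the scalar $\lVert V\rVert=\sqrt{\sum_{i=1}^{n}V_i^2}$, also called its length. Taking a 3D vector as an example, $\lVert V\rVert=\sqrt{V_x^2+V_y^2+V_z^2}$.
- A vector whose magnitude is 1 is called a unit vector.
- Vector normalization: multiplying $V$ by $\frac{1}{\lVert V\rVert}$ produces a unit vector pointing in the same direction as $V$.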
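A minimal Python sketch of the magnitude and normalization formulas, using plain lists so no libraries are needed (`magnitude` and `normalize` are illustrative names, not from the book):

```python
import math


def magnitude(v):
    """||V|| = sqrt(V_1^2 + V_2^2 + ... + V_n^2)."""
    return math.sqrt(sum(c * c for c in v))


def normalize(v):
    """Return V / ||V||, i.e. V multiplied by 1 / ||V||."""
    length = magnitude(v)
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [c / length for c in v]


V = [3.0, 0.0, 4.0]
print(magnitude(V))             # 5.0
print(normalize(V))             # [0.6, 0.0, 0.8]
print(magnitude(normalize(V)))  # 1.0 up to floating-point round-off
```

The zero vector has no direction, so the sketch raises an error instead of dividing by zero.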

⭐ Big picture of its application: the dot product measures the difference between the directions in which two vectors point.

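The dot product of two vectors is also known as the scalar product or inner product.

Definition: The dot product of two $n$-dimensional vectors $P$ and $Q$ is the scalar quantity given by the formula

$$P\cdot Q=\sum_{i=1}^{n}P_iQ_i=P_1Q_1+P_2Q_2+\cdots+P_nQ_n$$

That is, the vectors are multiplied element-wise and the products are summed.

The dot product in matrix form:

$$P\cdot Q=P^TQ=\begin{bmatrix}P_1&P_2&\cdots&P_n\end{bmatrix}\begin{bmatrix}Q_1\\Q_2\\\vdots\\Q_n\end{bmatrix}$$

Tip: row vector × column vector = scalar.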

:pencil: Theorem: Given two $n$-dimensional vectors $P$ and $Q$, the dot product $P\cdot Q$ satisfies the equation

$$P\cdot Q=\lVert P\rVert\lVert Q\rVert\cos a$$

where $a$ is the angle between $P$ and $Q$.
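As an illustration (a sketch, not the book's code), the definition and the angle theorem translate directly into Python; `dot` and `angle_between` are hypothetical helper names:

```python
import math


def dot(p, q):
    """P . Q = sum of the element-wise products P_i * Q_i."""
    if len(p) != len(q):
        raise ValueError("vectors must have the same dimension")
    return sum(a * b for a, b in zip(p, q))


def angle_between(p, q):
    """Angle a in radians, solved from P . Q = ||P|| ||Q|| cos a."""
    cos_a = dot(p, q) / (math.sqrt(dot(p, p)) * math.sqrt(dot(q, q)))
    # Clamp to [-1, 1] so round-off cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos_a)))


P = [1.0, 0.0, 0.0]
Q = [1.0, 1.0, 0.0]
print(dot(P, Q))                          # 1.0
print(math.degrees(angle_between(P, Q)))  # approximately 45.0
print(dot([1.0, 0.0], [0.0, 1.0]))        # 0.0, so these vectors are orthogonal
```

The clamp matters in practice: round-off can push the computed cosine slightly outside $[-1,1]$, where `math.acos` raises `ValueError`.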

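:pushpin: Fact: in light of the theorem, two nonzero vectors $P$ and $Q$ with $P\cdot Q=0$ are orthogonal (since $\cos a=0$).

:pushpin: Fact: if $P\cdot Q>0$, then $P$ and $Q$ lie on the same side of the plane perpendicular to $Q$ (the angle between them is less than 90°); if $P\cdot Q<0$, they lie on opposite sides (the angle is greater than 90°).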

:pencil: Theorem: Given any scalar $a$ and any three vectors $P$, $Q$, and $R$, the following properties hold.

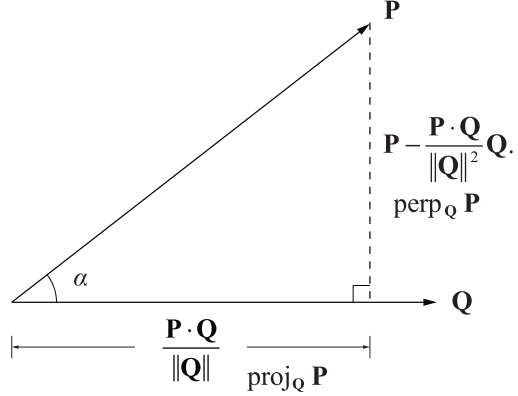

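:pushpin: Projection: the projection of a vector $P$ onto a vector $Q$ is denoted and computed as

$$\operatorname{proj}_Q P=\frac{P\cdot Q}{\lVert Q\rVert^2}Q$$

:pushpin: Perpendicular: the component of $P$ perpendicular to $Q$ is what remains after subtracting the projection:

$$\operatorname{perp}_Q P=P-\operatorname{proj}_Q P=P-\frac{P\cdot Q}{\lVert Q\rVert^2}Q$$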
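A short sketch of $\operatorname{proj}_Q P$ and $\operatorname{perp}_Q P$ with plain Python lists (helper names are illustrative; $Q$ is assumed to be nonzero):

```python
def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def proj(p, q):
    """proj_Q P = (P . Q / ||Q||^2) Q, using ||Q||^2 = Q . Q."""
    scale = dot(p, q) / dot(q, q)
    return [scale * c for c in q]


def perp(p, q):
    """perp_Q P = P - proj_Q P."""
    return [a - b for a, b in zip(p, proj(p, q))]


P = [2.0, 3.0, 0.0]
Q = [1.0, 0.0, 0.0]
print(proj(P, Q))          # [2.0, 0.0, 0.0]
print(perp(P, Q))          # [0.0, 3.0, 0.0]
print(dot(perp(P, Q), Q))  # 0.0
```

Writing $\lVert Q\rVert^2$ as $Q\cdot Q$ avoids a square root entirely.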

⭐ Big picture: the cross product calculates the surface normal at a particular point given two distinct tangent vectors.

The cross product is also known as the vector product because it returns a vector rather than a scalar.

Definition 2.6. The cross product of two 3D vectors $P$ and $Q$, written as $P\times Q$, is a vector quantity given by the formula

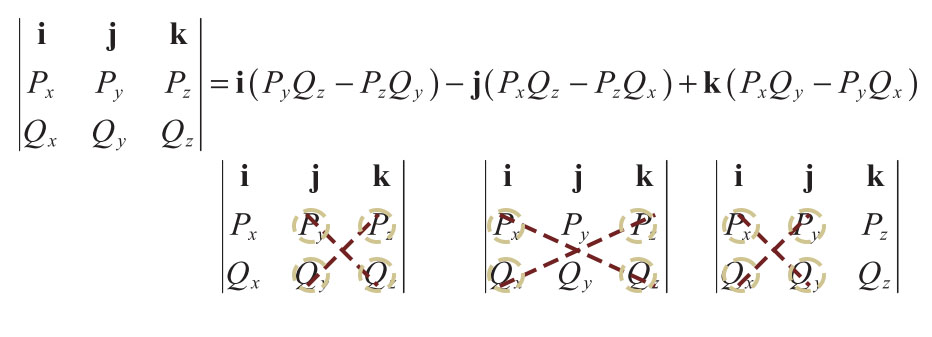

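The cross product in matrix picture (also known as the pseudodeterminant), where $i$, $j$, $k$ are the unit vectors along the $x$, $y$, and $z$ axes:

$$P\times Q=\begin{vmatrix}i&j&k\\P_x&P_y&P_z\\Q_x&Q_y&Q_z\end{vmatrix}$$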
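The component formula can be checked with a few lines of Python (a sketch; `cross` and `dot` are hypothetical helpers operating on 3-element lists). It also shows anticommutativity and the perpendicularity stated in Theorem 2.7:

```python
def cross(p, q):
    """P x Q = <PyQz - PzQy, PzQx - PxQz, PxQy - PyQx> for 3D vectors."""
    px, py, pz = p
    qx, qy, qz = q
    return [py * qz - pz * qy,
            pz * qx - px * qz,
            px * qy - py * qx]


def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


i, j, k = [1, 0, 0], [0, 1, 0], [0, 0, 1]
print(cross(i, j))  # [0, 0, 1]   i x j = k (right-hand rule)
print(cross(j, i))  # [0, 0, -1]  j x i = -k (order matters)

P, Q = [1, 2, 3], [4, 5, 6]
R = cross(P, Q)
print(R)                     # [-3, 6, -3]
print(dot(R, P), dot(R, Q))  # 0 0  (perpendicular to both inputs)
```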

:pencil: Theorem 2.7. Let $P$ and $Q$ be any two 3D vectors. Then:

$$(P\times Q)\cdot P=0\quad\text{and}\quad(P\times Q)\cdot Q=0$$

In other words, $P\times Q$ is perpendicular to both $P$ and $Q$, and the dot product of two perpendicular vectors is zero because they share no common direction.

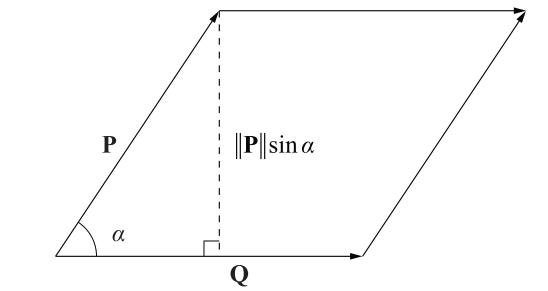

:pencil: Theorem 2.8. Given two 3D vectors $P$ and $Q$, the cross product $P\times Q$ satisfies the equation

$$\lVert P\times Q\rVert=\lVert P\rVert\lVert Q\rVert\sin a$$

where $a$ is the planar angle between the lines connecting the origin to the points represented by $P$ and $Q$.

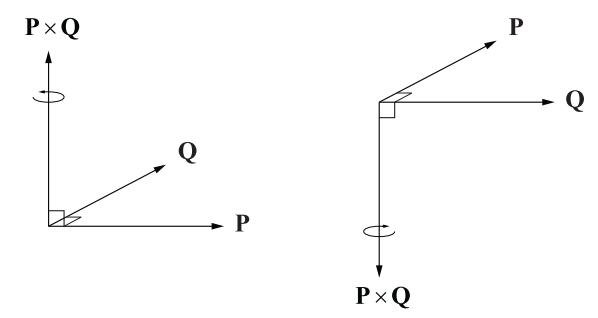

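:pushpin: Right-hand rule: the cross product has an orientation. If the fingers of the right hand curl from $P$ toward $Q$, the thumb points in the direction of $P\times Q$.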

:star: Area from the cross product: $\lVert P\times Q\rVert$ equals the area of the parallelogram formed by $P$ and $Q$.
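A minimal sketch of the area interpretation, assuming 3D vectors stored as lists (`parallelogram_area` is an illustrative name):

```python
import math


def cross(p, q):
    px, py, pz = p
    qx, qy, qz = q
    return [py * qz - pz * qy, pz * qx - px * qz, px * qy - py * qx]


def parallelogram_area(p, q):
    """Area spanned by P and Q: ||P x Q|| = ||P|| ||Q|| sin a."""
    return math.sqrt(sum(c * c for c in cross(p, q)))


print(parallelogram_area([2, 0, 0], [0, 3, 0]))  # 6.0  (a 2-by-3 rectangle)
print(parallelogram_area([2, 0, 0], [1, 3, 0]))  # 6.0  (same base, same height)
print(parallelogram_area([1, 1, 0], [2, 2, 0]))  # 0.0  (parallel vectors)
```

Half of this value is the area of the triangle with edges $P$ and $Q$.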

:pencil: Theorem 2.9. Given any two scalars $a$ and $b$, and any three 3D vectors $P$, $Q$, and $R$, the following properties hold.

:pushpin: Anticommutativity, $Q\times P=-(P\times Q)$, is a characteristic of the cross product which implies that the order of the operands matters.

:star: Definition 2.10. A vector space is a set $V$, whose elements are called vectors, for which addition and scalar multiplication are defined, and the following properties hold.

- $P\in V,\ Q\in V\implies P+Q\in V$.
- $P\in V,\ a\in\mathbb{R}\implies aP\in V$.
- $\exists\ 0\in V$ s.t. $P+0=P$ for every $P\in V$.
- For every $P\in V$, $\exists\ Q\in V$ s.t. $P+Q=0$.
- $(P+Q)+R=P+(Q+R)$.
- $(ab)P=a(bP)$.
- $a(P+Q)=aP+aQ$.
- $(a+b)P=aP+bP$.

:star: Definition 2.11. A set of $n$ vectors $\{e_1,e_2,\ldots,e_n\}$ is linearly independent if there do not exist real numbers $a_1,a_2,\ldots,a_n$, where at least one of the $a_i$ is not zero, such that

$$a_1e_1+a_2e_2+\cdots+a_ne_n=0$$

Otherwise, the set $\{e_1,e_2,\ldots,e_n\}$ is called linearly dependent.

:star: Definition 2.13. A basis $\mathcal{B}=\{e_1,e_2,\ldots,e_n\}$ for a vector space is called orthogonal if for every pair $(i,j)$ with $i\neq j$, we have

$$e_i\cdot e_j=0$$

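:pencil: Theorem 2.14. Given two nonzero vectors $e_1$ and $e_2$, if $e_1\cdot e_2=0$, then $e_1$ and $e_2$ are linearly independent. To see why, suppose $a_1e_1+a_2e_2=0$; taking the dot product with $e_1$ gives $a_1\lVert e_1\rVert^2=0$, so $a_1=0$, and the same argument with $e_2$ gives $a_2=0$.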

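Definition 2.15. A basis $\mathcal{B}=\{e_1,e_2,\ldots,e_n\}$ for a vector space is called orthonormal if for every pair $(i,j)$ we have

$$e_i\cdot e_j=\delta_{ij}$$

where $\delta_{ij}$ is the Kronecker delta: $\delta_{ij}=1$ if $i=j$ and $\delta_{ij}=0$ otherwise.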

:computer: Algorithm 2.16. Gram-Schmidt Orthogonalization. Given a set of $n$ linearly independent vectors $\mathcal{B}=\{e_1,e_2,\ldots,e_n\}$, this algorithm produces a set $\mathcal{B}'=\{e_1',e_2',\ldots,e_n'\}$ such that $e_i'\cdot e_j'=0$ whenever $i\neq j$.

- A. Set $e_1'=e_1$.
- B. Begin with index $i=2$.
- C. Subtract the projection of $e_i$ onto each of the vectors $e_1',e_2',\ldots,e_{i-1}'$ from $e_i$ and store the result in $e_i'$. That is,

$$e_i'=e_i-\sum_{k=1}^{i-1}\frac{e_i\cdot e_k'}{\lVert e_k'\rVert^2}e_k'$$

- D. If $i<n$, increment $i$ and go back to step C.
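Algorithm 2.16 can be sketched in Python as follows (`gram_schmidt` is a hypothetical name). It assumes the input vectors are linearly independent; with dependent input some $e_i'$ becomes (numerically) zero and a later division by $\lVert e_k'\rVert^2$ is meaningless or raises `ZeroDivisionError`:

```python
def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def gram_schmidt(basis):
    """Sketch of Algorithm 2.16 for linearly independent input vectors."""
    result = []                   # e_1', e_2', ... built so far
    for e in basis:
        e_prime = list(e)         # e_i' starts as e_i (for i = 1 this is step A)
        for u in result:          # step C: subtract the projection of e_i onto each e_k'
            scale = dot(e, u) / dot(u, u)
            e_prime = [a - scale * b for a, b in zip(e_prime, u)]
        result.append(e_prime)
    return result


B = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
B_prime = gram_schmidt(B)
print(B_prime[0], B_prime[1])   # [1.0, 1.0, 0.0] [0.5, -0.5, 1.0]
max_off = max(abs(dot(B_prime[i], B_prime[j]))
              for i in range(3) for j in range(i + 1, 3))
print(max_off < 1e-12)          # True: every pair is orthogonal up to round-off
```

Dividing each output vector by its magnitude turns the orthogonal basis into an orthonormal one.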

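⭐ Big picture of Gram-Schmidt orthogonalization: it converts an arbitrary basis into an orthogonal one (and, after normalizing each vector, an orthonormal one), i.e. it builds a new set of mutually perpendicular coordinate axes spanning the same space.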

⭐ Big picture: matrices are used to perform calculations that transform between different Cartesian coordinate spaces.

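Basic representation of an $n\times m$ matrix:

$$M=\begin{bmatrix}M_{11}&M_{12}&\cdots&M_{1m}\\M_{21}&M_{22}&\cdots&M_{2m}\\\vdots&\vdots&\ddots&\vdots\\M_{n1}&M_{n2}&\cdots&M_{nm}\end{bmatrix}$$

where $M_{ij}$ is the entry in row $i$ and column $j$.

Transpose of a matrix (rows and columns swap roles):

$$(M^T)_{ij}=M_{ji}$$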

:star: Matrix multiplication: suppose two matrices $F$ ($n\times m_1$) and $G$ ($m_2\times p$). The prerequisite for a valid matrix multiplication is that $m_1=m_2$ (call it $m$). The shape of the output matrix is $n\times p$, and its $(i,j)$ entry is:

$$(FG)_{ij}=\sum_{k=1}^{m}F_{ik}G_{kj}$$

:bulb: Another picture of this: the $(i,j)$ entry of $FG$ is equal to the dot product of the $i$-th row of $F$ and the $j$-th column of $G$. e.g.

```python
>>> import numpy as np
>>> F = np.array([[2, 3, 1],
...               [3, 0, 1]])
>>> G = np.array([[1, 2],
...               [4, 0],
...               [3, 2]])
>>> F @ G
array([[17,  6],
       [ 6,  8]])
```

The $(2,2)$ entry of the output matrix is the dot product of the 2nd row of $F$, $(3,0,1)$, and the 2nd column of $G$, $(2,0,2)$: $3\cdot2+0\cdot0+1\cdot2=8$.

:pencil: Theorem 3.1. Given any two scalars $a$ and $b$ and any three $n\times m$ matrices $F$, $G$, and $H$, the following properties hold.

:pencil: Theorem 3.2. Given any scalar $a$, an $n\times m$ matrix $F$, an $m\times p$ matrix $G$, and a $p\times q$ matrix $H$, the following properties hold.

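Matrices are very handy for solving systems of linear equations. A system of $n$ equations in $n$ unknowns

$$\begin{aligned}A_{11}x_1+A_{12}x_2+\cdots+A_{1n}x_n&=b_1\\A_{21}x_1+A_{22}x_2+\cdots+A_{2n}x_n&=b_2\\&\vdots\\A_{n1}x_1+A_{n2}x_2+\cdots+A_{nn}x_n&=b_n\end{aligned}$$

can be written in matrix form as

$$\begin{bmatrix}A_{11}&\cdots&A_{1n}\\\vdots&\ddots&\vdots\\A_{n1}&\cdots&A_{nn}\end{bmatrix}\begin{bmatrix}x_1\\\vdots\\x_n\end{bmatrix}=\begin{bmatrix}b_1\\\vdots\\b_n\end{bmatrix}$$

Looking at the big picture, this is $Ax=b$, where:

- $A$ is the coefficient matrix,
- $x$ is the vector of unknowns,
- $b$ is the right-hand side, a constant vector.

:pushpin: Nonhomogeneous: the right-hand side $b$ is nonzero.

:pushpin: Homogeneous: the right-hand side is all zeros ($b=0$).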

:star: Definition 3.3. An elementary row operation is one of the following three operations that can be performed on a matrix:

- (a) Exchange two rows.
- (b) Multiply a row by a nonzero scalar.
- (c) Add a multiple of one row to another row.

:star: Definition 3.4. A matrix is in reduced form if and only if it satisfies the following conditions.

- For every nonzero row, the leftmost nonzero entry, called the leading entry, is 1.

- Every nonzero row precedes every row of zeros. That is, all rows of zeros reside at the bottom of the matrix.

- If a row's leading entry resides in column $j$, then no other row has a nonzero entry in column $j$.
- For every pair of nonzero rows $i_1$ and $i_2$ such that $i_2>i_1$, the columns $j_1$ and $j_2$ containing those rows' leading entries must satisfy $j_2>j_1$.

:bulb: Big picture: Difference between Reduced Row Echelon Form and Row Echelon Form: The main difference is that it is easy to read the null space off the RREF, but it takes more work for the REF.

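Applying an elementary row operation to an augmented matrix does not change the solution set of the linear system it represents.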

:bulb: Reduced form is also called reduced row echelon form (RREF), so it is worth learning it together with row echelon form (REF).

| Row Echelon Form | Reduced Row Echelon Form |
|---|---|
| The first nonzero number from the left (the “leading coefficient”) is always to the right of the first nonzero number in the row above. | The first nonzero number in the first row (the leading entry) is 1. |
| Rows consisting of all zeros are at the bottom of the matrix. | The second row also starts with 1, further to the right than the leading entry in the first row; in every subsequent row, the leading 1 must be further to the right than in the row above. |
| | The leading entry in each row is the only nonzero number in its column. |
| | Any rows consisting of all zeros are placed at the bottom of the matrix. |

:computer: Algorithm 3.6. This algorithm transforms an $n\times(n+1)$ augmented matrix $M$ representing a linear system into its reduced form. At each step, $M$ refers to the current state of the matrix, not the original state.

- A. Set the row $i$ equal to 1.
- B. Set the column $j$ equal to 1. We will loop through columns 1 to $n$.
- C. Find the row $k$ with $k\geq i$ for which $M_{kj}$ has the largest absolute value. If no such row exists for which $M_{kj}\neq0$, then skip to step H.
- D. If $k\neq i$, then exchange rows $k$ and $i$ using elementary row operation (a) under Definition 3.3.
- E. Multiply row $i$ by $1/M_{ij}$. This sets the $(i,j)$ entry of $M$ to 1 using elementary row operation (b).
- F. For each row $r$, where $1\leq r\leq n$ and $r\neq i$, add $-M_{rj}$ times row $i$ to row $r$. This step clears each entry above and below row $i$ in column $j$ to 0 using elementary row operation (c).
- G. Increment $i$.
- H. If $j<n$, increment $j$ and loop to step C.

//TODO C++ program of algorithm 3.6
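As a stand-in for the planned program, here is a Python sketch of Algorithm 3.6 using 0-based indices and plain lists of floats (`reduce_augmented` is a hypothetical name). Exact comparisons with `0.0` are kept for simplicity; robust code would use a small tolerance:

```python
def reduce_augmented(M):
    """Sketch of Algorithm 3.6: reduce an n x (n+1) augmented matrix.

    M is a list of n rows; each row holds n coefficients followed by the
    right-hand side. A reduced copy is returned and M is left unchanged.
    """
    M = [[float(x) for x in row] for row in M]
    n = len(M)
    i = 0                                   # step A (0-based row index)
    for j in range(n):                      # steps B and H: columns 0..n-1
        # Step C: row k >= i whose entry in column j has the largest |value|.
        k = max(range(i, n), key=lambda r: abs(M[r][j]), default=None)
        if k is None or M[k][j] == 0.0:
            continue                        # no nonzero pivot: go to step H
        if k != i:                          # step D: exchange rows k and i
            M[i], M[k] = M[k], M[i]
        pivot = M[i][j]                     # step E: make M[i][j] equal to 1
        M[i] = [x / pivot for x in M[i]]
        for r in range(n):                  # step F: clear the rest of column j
            if r != i:
                factor = M[r][j]
                M[r] = [a - factor * b for a, b in zip(M[r], M[i])]
        i += 1                              # step G
    return M


# 2x + y = 5 and x - y = 1, whose solution is x = 2, y = 1
R = reduce_augmented([[2, 1, 5], [1, -1, 1]])
print([row[-1] for row in R])                    # [2.0, 1.0]
# Dependent system x + 2y = 3, 2x + 4y = 6
print(reduce_augmented([[1, 2, 3], [2, 4, 6]]))  # [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
```

The last call shows a system with infinitely many solutions: its second row reduces to all zeros.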

:star: Invertible: an $n\times n$ matrix $M$ is invertible if there exists a matrix $M^{-1}$, called the inverse of $M$, such that

$$MM^{-1}=M^{-1}M=I$$

:star: Singular: a matrix that is not invertible.

:pencil:Theorem 3.9. A matrix possessing a row or column consisting entirely of zeros is not invertible.

:pencil: Theorem 3.10. A matrix $M$ is invertible if and only if $M^T$ is invertible.

:pencil: Theorem 3.11. If $F$ and $G$ are $n\times n$ invertible matrices, then the product $FG$ is invertible, and $(FG)^{-1}=G^{-1}F^{-1}$.

:pushpin: Gauss-Jordan Elimination: it transforms a matrix into its reduced form, and it can also be used to calculate the inverse of a matrix.

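For an $n\times n$ matrix $M$:

- A. Construct an $n\times2n$ augmented matrix $\tilde{M}$ by concatenating the identity matrix $I_n$ to the right of $M$:

$$\tilde{M}=\left[\begin{array}{cccc|cccc}M_{11}&M_{12}&\cdots&M_{1n}&1&0&\cdots&0\\M_{21}&M_{22}&\cdots&M_{2n}&0&1&\cdots&0\\\vdots&\vdots&\ddots&\vdots&\vdots&\vdots&\ddots&\vdots\\M_{n1}&M_{n2}&\cdots&M_{nn}&0&0&\cdots&1\end{array}\right]$$

- B. Perform elementary row operations on the entire matrix $\tilde{M}$ until the left-hand $n\times n$ block becomes the identity matrix $I_n$.
- C. The right-hand $n\times n$ block is then the inverse $M^{-1}$.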

:computer: Algorithm 3.12. Gauss-Jordan Elimination. This algorithm calculates the inverse of an $n\times n$ matrix $M$.

- A. Construct the augmented matrix $\tilde{M}=\left[\,M\mid I_n\,\right]$. Throughout this algorithm, $\tilde{M}$ refers to the current state of the augmented matrix, not the original state.
- B. Set the column $j$ equal to 1. We will loop through columns 1 to $n$.
- C. Find the row $i$ with $i\geq j$ such that $\tilde{M}_{ij}$ has the largest absolute value. If no such row exists for which $\tilde{M}_{ij}\neq0$, then $M$ is not invertible.
- D. If $i\neq j$, then exchange rows $i$ and $j$ (elementary row operation (a)). This is the pivot operation necessary to remove zeros from the main diagonal and to provide numerical stability.
- E. Multiply row $j$ by $1/\tilde{M}_{jj}$. This sets the $(j,j)$ entry of $\tilde{M}$ to 1 (elementary row operation (b)).
- F. For each row $r$ where $1\leq r\leq n$ and $r\neq j$, add $-\tilde{M}_{rj}$ times row $j$ to row $r$. This step clears each entry above and below row $j$ in column $j$ to 0 (elementary row operation (c)).
- G. If $j<n$, increment $j$ and loop to step C.
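A Python sketch of Algorithm 3.12 (0-based indices; `inverse` is a hypothetical name). The same exact-zero caveat applies: a real implementation would treat tiny pivots as zero:

```python
def inverse(M):
    """Sketch of Algorithm 3.12: invert an n x n matrix by Gauss-Jordan elimination."""
    n = len(M)
    # Step A: augmented matrix [M | I_n] stored as floats.
    A = [[float(x) for x in row] + [1.0 if c == r else 0.0 for c in range(n)]
         for r, row in enumerate(M)]
    for j in range(n):                                     # steps B and G
        i = max(range(j, n), key=lambda r: abs(A[r][j]))   # step C
        if A[i][j] == 0.0:
            raise ValueError("matrix is not invertible")
        if i != j:                                         # step D: pivot
            A[i], A[j] = A[j], A[i]
        pivot = A[j][j]                                    # step E
        A[j] = [x / pivot for x in A[j]]
        for r in range(n):                                 # step F
            if r != j:
                factor = A[r][j]
                A[r] = [a - factor * b for a, b in zip(A[r], A[j])]
    return [row[n:] for row in A]                          # right half is M^-1


Minv = inverse([[4, 7], [2, 6]])
print([[round(x, 12) for x in row] for row in Minv])  # [[0.6, -0.7], [-0.2, 0.4]]
```

Choosing the largest available pivot in step C (partial pivoting) keeps the divisor in step E as large as possible, which limits the growth of round-off error.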

:pencil: Theorem 3.14. Let $M'$ be the $n\times n$ matrix resulting from the performance of an elementary row operation on the $n\times n$ matrix $M$. Then $M'=EM$, where $E$ is the $n\times n$ matrix resulting from the same elementary row operation performed on the identity matrix.
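Theorem 3.14 can be checked numerically for operation (c) with a sketch like this (helper names are illustrative; integer entries keep the comparison exact):

```python
def matmul(F, G):
    """(FG)_ij = sum over k of F_ik * G_kj."""
    return [[sum(F[i][k] * G[k][j] for k in range(len(G)))
             for j in range(len(G[0]))] for i in range(len(F))]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def add_row_multiple(M, src, dst, a):
    """Row operation (c): add a times row src to row dst; returns a new matrix."""
    R = [row[:] for row in M]
    R[dst] = [x + a * y for x, y in zip(R[dst], R[src])]
    return R


M = [[1, 2], [3, 4]]
E = add_row_multiple(identity(2), 0, 1, -3)   # the same operation applied to I
print(E)                                      # [[1, 0], [-3, 1]]
print(add_row_multiple(M, 0, 1, -3))          # [[1, 2], [0, -2]]
print(matmul(E, M))                           # [[1, 2], [0, -2]]
```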

:pencil: Theorem 3.15. An $n\times n$ matrix $M$ is invertible if and only if the rows of $M$ form a linearly independent set of vectors.

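:star: Geometrical big picture: the determinant of a matrix tells you the factor by which the linear transformation scales space; its sign tells you whether the transformation flips orientation.

e.g.

In 2D, the determinant is the factor by which the transformation changes the area of the unit square.

In 3D, the determinant is the factor by which the transformation changes the volume of the unit cube.

$\det M$: the determinant of a square matrix is a scalar quantity derived from the entries of the matrix.

$M^{\{i,j\}}$ denotes the $(n-1)\times(n-1)$ matrix obtained by deleting the $i$-th row and the $j$-th column of $M$. e.g., for a $3\times3$ matrix:

$$M^{\{1,2\}}=\begin{bmatrix}M_{21}&M_{23}\\M_{31}&M_{33}\end{bmatrix}$$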

:star: Definition 3.16. Let $M$ be an $n\times n$ matrix. We define the cofactor $C_{ij}(M)$ of the matrix entry $M_{ij}$ as follows.

$$C_{ij}(M)\equiv(-1)^{i+j}\det M^{\{i,j\}}$$

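:star: Calculation of the determinant: for any fixed row $k$,

$$\det M=\sum_{j=1}^{n}M_{kj}C_{kj}(M)$$

Because each cofactor is itself the determinant of a smaller matrix, the calculation is a recursive process that ends at $1\times1$ matrices, where $\det\begin{bmatrix}a\end{bmatrix}=a$.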
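The recursive definition maps directly onto a short Python sketch (0-based indices; `minor`, `cofactor`, and `det` are hypothetical names). The sign $(-1)^{i+j}$ is unaffected by shifting both indices by one:

```python
def minor(M, i, j):
    """M^{i,j}: M with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(M) if r != i]


def cofactor(M, i, j):
    """C_ij(M) = (-1)^(i+j) det M^{i,j}."""
    return (-1) ** (i + j) * det(minor(M, i, j))


def det(M):
    """Recursive cofactor expansion along the first row (k = 1)."""
    n = len(M)
    if n == 1:
        return M[0][0]
    return sum(M[0][j] * cofactor(M, 0, j) for j in range(n))


print(det([[1, 2], [3, 4]]))                   # -2  (ad - bc = 4 - 6)
print(det([[2, 0, 1], [1, 3, 2], [1, 1, 2]]))  # 6
print(det([[2, 0, 1], [1, 3, 2], [2, 0, 1]]))  # 0  (two identical rows)
```

Cofactor expansion performs on the order of $n!$ multiplications, so it is practical only for small matrices; larger determinants are usually computed by row reduction, tracking how each row operation changes the determinant.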

:pencil: Theorem 3.17. Performing elementary row operations on a matrix has the following effects on the determinant of that matrix.

- (a) Exchanging two rows negates the determinant.
- (b) Multiplying a row by a scalar $a$ multiplies the determinant by $a$.
- (c) Adding a multiple of one row to another row has no effect on the determinant.

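:leftwards_arrow_with_hook: Corollary 3.18. The determinant of a matrix having two identical rows is zero.

This follows from Theorem 3.17(a): exchanging the two identical rows leaves the matrix unchanged but negates the determinant, so $\det M=-\det M$, which forces $\det M=0$. Geometrically, the rows of $M$ are the columns of $M^T$, and $\det M^T=\det M$; two identical columns are linearly dependent, so the transformation squashes the unit square or cube into a lower-dimensional shape with zero area or volume.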

:pencil: Theorem 3.19. An $n\times n$ matrix $M$ is invertible if and only if $\det M\neq0$.

:pencil: Theorem 3.20. For any two $n\times n$ matrices $F$ and $G$, $\det(FG)=\det F\,\det G$.

:pencil: Theorem 3.21. Let $F$ be an $n\times n$ matrix with $\det F\neq0$ and define the entries of an $n\times n$ matrix $G$ using the formula

$$G_{ij}=\frac{C_{ji}(F)}{\det F}$$

where $C_{ji}(F)$ is the cofactor of $(F^T)_{ij}=F_{ji}$. Then $G=F^{-1}$.
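A sketch of Theorem 3.21 in Python, assuming integer entries so that `fractions.Fraction` keeps the result exact (`inverse_by_cofactors` and the helpers are illustrative names):

```python
from fractions import Fraction


def minor(M, i, j):
    """M^{i,j}: M with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(M) if r != i]


def cofactor(M, i, j):
    """C_ij(M) = (-1)^(i+j) det M^{i,j}."""
    return (-1) ** (i + j) * det(minor(M, i, j))


def det(M):
    """Cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum(M[0][j] * cofactor(M, 0, j) for j in range(len(M)))


def inverse_by_cofactors(F):
    """G_ij = C_ji(F) / det F. Note the swapped indices."""
    d = det(F)
    if d == 0:
        raise ValueError("F is singular (det F = 0)")
    n = len(F)
    return [[Fraction(cofactor(F, j, i), d) for j in range(n)] for i in range(n)]


F = [[2, 0, 1], [1, 3, 2], [1, 1, 2]]
G = inverse_by_cofactors(F)
print([[str(x) for x in row] for row in G])
# [['2/3', '1/6', '-1/2'], ['0', '1/2', '-1/2'], ['-1/3', '-1/3', '1']]
```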

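:star: Big picture: when a matrix multiplies one of its eigenvectors, the eigenvector is only scaled by the eigenvalue; its direction is unchanged (or exactly reversed if the eigenvalue is negative).

For an $n\times n$ matrix $M$, a nonzero vector $V$ is an eigenvector of $M$ with eigenvalue $\lambda$ if

$$MV=\lambda V$$

The characteristic polynomial $\det(M-\lambda I)$ is a polynomial of degree $n$ in $\lambda$.

Formula of eigenvalues: the eigenvalues of $M$ are the roots of the characteristic polynomial,

$$\det(M-\lambda I)=0$$

(Tips) Because $MV=\lambda V$, we have $(M-\lambda I)V=0$.

Because $V$ is nonzero, this homogeneous system has a nontrivial solution, so $M-\lambda I$ is not invertible and therefore $\det(M-\lambda I)=0$. Each eigenvector for $\lambda$ is a nonzero solution of $(M-\lambda I)V=0$.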
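For a $2\times2$ matrix $\begin{bmatrix}a&b\\c&d\end{bmatrix}$ the characteristic polynomial is the quadratic $\lambda^2-(a+d)\lambda+(ad-bc)$, so a small Python sketch can find the eigenvalues with the quadratic formula and then solve $(M-\lambda I)V=0$ for an eigenvector. It assumes real eigenvalues; the function names are hypothetical:

```python
import math


def eigenvalues_2x2(M):
    """Roots of det(M - lambda I) = lambda^2 - (a + d) lambda + (ad - bc)."""
    (a, b), (c, d) = M
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


def eigenvector_2x2(M, lam):
    """A nonzero solution V of (M - lambda I) V = 0.

    Solving one nonzero row is enough: since det(M - lambda I) = 0,
    the other row is a multiple of it.
    """
    (a, b), (c, d) = M
    if b != 0:
        return [float(b), lam - a]   # row 1: (a - lam) * b + b * (lam - a) = 0
    if c != 0:
        return [lam - d, float(c)]   # row 2: c * (lam - d) + (d - lam) * c = 0
    return [1.0, 0.0] if lam == a else [0.0, 1.0]   # M is already diagonal


M = [[2, 1], [1, 2]]
for lam in eigenvalues_2x2(M):
    V = eigenvector_2x2(M, lam)
    MV = [M[0][0] * V[0] + M[0][1] * V[1], M[1][0] * V[0] + M[1][1] * V[1]]
    print(lam, V, MV)
# 3.0 [1.0, 1.0] [3.0, 3.0]
# 1.0 [1.0, -1.0] [1.0, -1.0]
```

A rotation such as `[[0, -1], [1, 0]]` has no real eigenvalues (its discriminant is $-4$), so this sketch raises `ValueError` for it.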

:star: Definition 3.24. An $n\times n$ matrix $M$ is symmetric if and only if $M_{ij}=M_{ji}$ for all $i$ and $j$. That is, a matrix whose entries are symmetric about the main diagonal is called symmetric.

:pencil: Theorem 3.25. The eigenvalues of a symmetric matrix $M$ having real entries are real numbers.

:pencil: Theorem 3.26. Any two eigenvectors associated with distinct eigenvalues of a symmetric matrix $M$ are orthogonal.

Definition: if we can find a matrix $A$ such that $A^{-1}MA$ is a diagonal matrix, then we say that $A$ diagonalizes $M$.

:bulb: Big picture: any $n\times n$ matrix for which we can find $n$ linearly independent eigenvectors can be diagonalized.

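:pencil: Theorem 3.27. Let $M$ be an $n\times n$ matrix having eigenvalues $\lambda_1,\lambda_2,\ldots,\lambda_n$, and suppose that there exist corresponding eigenvectors $V_1,V_2,\ldots,V_n$ that form a linearly independent set. Then the matrix $A$ given by

$$A=\begin{bmatrix}V_1&V_2&\cdots&V_n\end{bmatrix}$$

(i.e., the columns of the matrix $A$ are the eigenvectors $V_1,V_2,\ldots,V_n$) diagonalizes $M$, and the main diagonal entries of the product $A^{-1}MA$ are the eigenvalues of $M$:

$$A^{-1}MA=\begin{bmatrix}\lambda_1&0&\cdots&0\\0&\lambda_2&\cdots&0\\\vdots&\vdots&\ddots&\vdots\\0&0&\cdots&\lambda_n\end{bmatrix}$$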

Conversely, if there exists an invertible matrix $A$ such that $A^{-1}MA$ is a diagonal matrix, then the columns of $A$ must be eigenvectors of $M$, and the main diagonal entries of $A^{-1}MA$ are the corresponding eigenvalues of $M$.

e.g.
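A small worked case, written as a Python sketch with exact `Fraction` arithmetic (`matmul` and `inverse_2x2` are illustrative helpers). The symmetric matrix $M=\begin{bmatrix}2&1\\1&2\end{bmatrix}$ has eigenvalues 3 and 1 with eigenvectors $V_1=(1,1)$ and $V_2=(1,-1)$, so $A=\begin{bmatrix}1&1\\1&-1\end{bmatrix}$ should diagonalize it:

```python
from fractions import Fraction


def matmul(F, G):
    """(FG)_ij = sum over k of F_ik * G_kj."""
    return [[sum(F[i][k] * G[k][j] for k in range(len(G)))
             for j in range(len(G[0]))] for i in range(len(F))]


def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] as (1 / (ad - bc)) [[d, -b], [-c, a]]."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


M = [[2, 1], [1, 2]]      # symmetric, eigenvalues 3 and 1
A = [[1, 1], [1, -1]]     # columns: V1 = (1, 1) for 3, V2 = (1, -1) for 1
D = matmul(matmul(inverse_2x2(A), M), A)
print(D == [[3, 0], [0, 1]])   # True
```

Consistent with Theorem 3.26, the two eigenvectors are orthogonal: $V_1\cdot V_2=1-1=0$.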