Video to text caption

Generates text captions for videos.

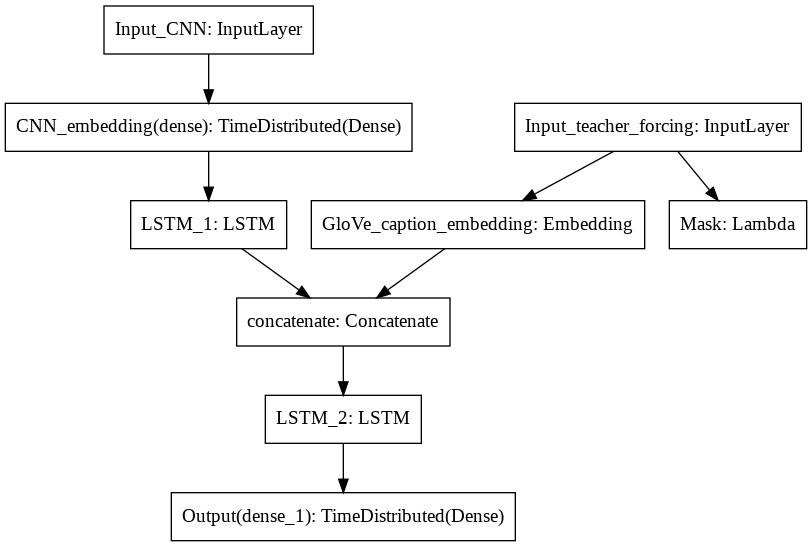

Model architecture:

Two models are stacked on top of each other. The video is sampled at 1 frame per second, and the first model, a pre-trained EfficientNet, extracts features from each sampled frame.
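The sampling step can be sketched as below, assuming the video's frame count and native frame rate are known. `sample_frame_indices` is a hypothetical helper, not code from this project; it keeps the frame nearest the start of each one-second window.

```python
import math
from typing import List


def sample_frame_indices(num_frames: int, native_fps: float,
                         target_fps: float = 1.0) -> List[int]:
    """Indices of the frames to keep when downsampling a video to target_fps."""
    if num_frames <= 0 or native_fps <= 0 or target_fps <= 0:
        return []
    duration = num_frames / native_fps  # clip length in seconds
    # Always keep at least one frame, even for clips shorter than one window.
    n_keep = max(1, math.floor(duration * target_fps))
    # Frame nearest the start of each 1/target_fps-second window,
    # clamped so rounding can never point past the last frame.
    return [min(num_frames - 1, round(i * native_fps / target_fps))
            for i in range(n_keep)]
```

For a 3-second clip at 30 fps (90 frames) this keeps frames 0, 30 and 60. In a full pipeline each kept frame would then be resized and passed through the backbone; with Keras, `tf.keras.applications.EfficientNetB0(include_top=False, pooling="avg")` yields one 1280-dimensional vector per frame. Which EfficientNet variant and pooling this project actually uses is not stated here.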

The second model is a sequence-to-sequence model that takes the per-frame CNN features as input and outputs the caption.

[Figure: second model architecture]
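The README does not spell out the second model's internals. In the S2VT paper it builds on, two stacked LSTMs first read the frame features and then emit words, with padding filling whichever input stream is idle. The sketch below only lays out one training example in that format; `PAD`, `BOS`, `EOS` and `s2vt_schedule` are hypothetical names, and this project's model may arrange its inputs differently.

```python
from typing import List, Optional, Sequence, Tuple

PAD, BOS, EOS = "<pad>", "<bos>", "<eos>"  # hypothetical special tokens

# (frame_input, word_input, target_word) for one time step
Step = Tuple[List[float], str, Optional[str]]


def s2vt_schedule(frame_feats: Sequence[Sequence[float]],
                  caption: Sequence[str]) -> List[Step]:
    """Lay out one (video, caption) pair as S2VT-style time steps.

    Targets are None during encoding, where no loss is computed.
    """
    if not frame_feats:
        raise ValueError("need at least one frame feature vector")
    dim = len(frame_feats[0])
    steps: List[Step] = []
    # Encoding stage: frame features are fed in, the word stream is padded.
    for feat in frame_feats:
        steps.append((list(feat), PAD, None))
    # Decoding stage: frame input is zero-padded and the previous ground-truth
    # word is fed in (teacher forcing); the model must predict the next word.
    inputs = [BOS] + list(caption)
    targets = list(caption) + [EOS]
    for word_in, word_out in zip(inputs, targets):
        steps.append(([0.0] * dim, word_in, word_out))
    return steps
```

Two frames and the caption "a man runs" give six steps: two encoding steps, then `<bos>`→"a", "a"→"man", "man"→"runs", "runs"→`<eos>`.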

Examples: [example GIFs]

Based on the "Sequence to Sequence -- Video to Text" paper (Venugopalan et al.), with the following modifications:

- Added pre-trained GloVe embeddings

- Used a newer pre-trained CNN model (EfficientNet)

- Implemented beam search
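Two of these modifications can be sketched in plain Python. `glove_matrix` builds an embedding matrix for a caption vocabulary from a GloVe text file (one word followed by its vector components per line); giving missing words small random vectors is an assumption. `beam_search` keeps the `beam_width` highest-scoring partial captions at each step instead of only the single best one; `step` is a hypothetical stand-in for the decoder that returns next-word log-probabilities. All names and defaults are illustrative, not this project's code.

```python
import heapq
import random
from typing import Callable, Dict, List, Sequence, TextIO, Tuple


def glove_matrix(glove_file: TextIO, vocab: Sequence[str], dim: int,
                 seed: int = 0) -> List[List[float]]:
    """Row i of the result is the GloVe vector for vocab[i].

    Words absent from the GloVe file get small random vectors (an assumption;
    zero vectors are another common choice).
    """
    wanted = set(vocab)
    found: Dict[str, List[float]] = {}
    for line in glove_file:
        parts = line.rstrip().split(" ")
        # GloVe text format: a word followed by `dim` space-separated floats.
        if parts[0] in wanted and len(parts) == dim + 1:
            found[parts[0]] = [float(x) for x in parts[1:]]
    rng = random.Random(seed)
    return [found[w] if w in found else [rng.uniform(-0.05, 0.05) for _ in range(dim)]
            for w in vocab]


def beam_search(step: Callable[[List[str]], Dict[str, float]], beam_width: int = 3,
                max_len: int = 20, bos: str = "<bos>", eos: str = "<eos>") -> List[str]:
    """Return the best caption found, without the bos/eos tokens.

    `step(prefix)` returns {next_word: log_probability} given the tokens so far.
    """
    beams: List[Tuple[float, List[str]]] = [(0.0, [bos])]  # (summed log-prob, tokens)
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        # Extend every live beam by every possible next word.
        candidates = [(score + logp, tokens + [word])
                      for score, tokens in beams
                      for word, logp in step(tokens).items()]
        if not candidates:
            break
        beams = []
        # Keep the beam_width best extensions; completed captions leave the beam.
        for score, tokens in heapq.nlargest(beam_width, candidates, key=lambda c: c[0]):
            if tokens[-1] == eos:
                finished.append((score, tokens))
            else:
                beams.append((score, tokens))
        if not beams:
            break
    finished.extend(beams)  # unfinished beams still count if max_len is reached
    _, best = max(finished, key=lambda c: c[0])
    return [t for t in best[1:] if t != eos]
```

With `beam_width=1` this reduces to greedy decoding. Scores are raw summed log-probabilities, which favor shorter captions; length normalization is a common refinement not shown here.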

Data:

MSVD (Microsoft Research Video Description Corpus) dataset
