@article{lin2020mcunet,
  title={{MCUNet}: Tiny Deep Learning on {IoT} Devices},
  author={Lin, Ji and Chen, Wei-Ming and Lin, Yujun and Gan, Chuang and Han, Song},
  journal={Advances in Neural Information Processing Systems},
  volume={33},
  year={2020}
}

[News] This repo is still under development. Please check back for updates!

[MIT News] System brings deep learning to “internet of things” devices

- Overview

- Framework Structure

- Model Zoo

- Testing

- Model Profiling

- Code Cleaning Plan

- Requirement

- Acknowledgement

- Related Projects

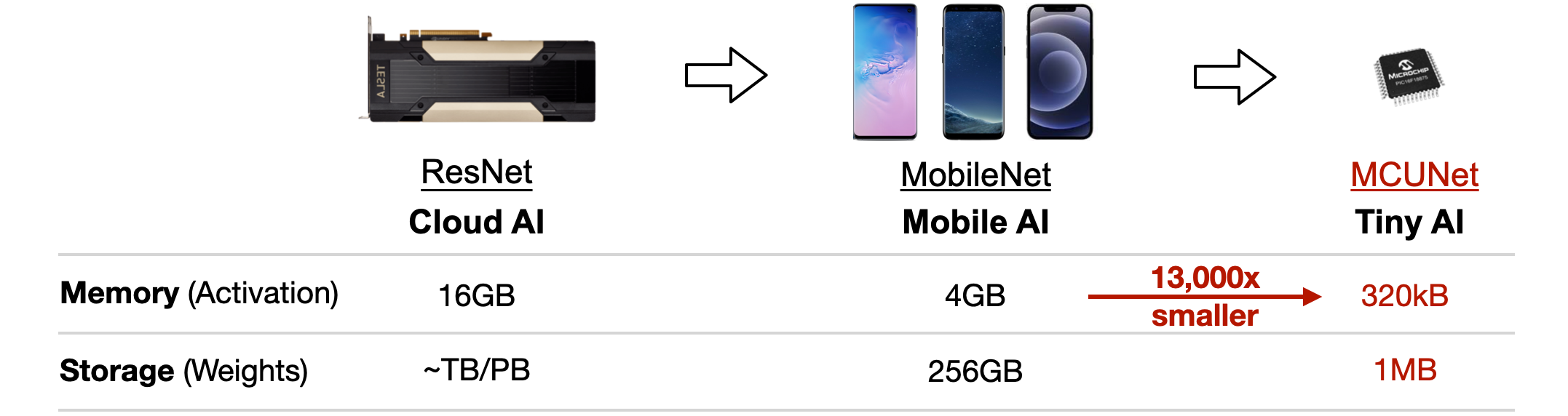

Microcontrollers are low-cost, low-power hardware. They are widely deployed and have a wide range of applications.

However, their tight memory budget (50,000x smaller than that of GPUs) makes deploying deep learning difficult.

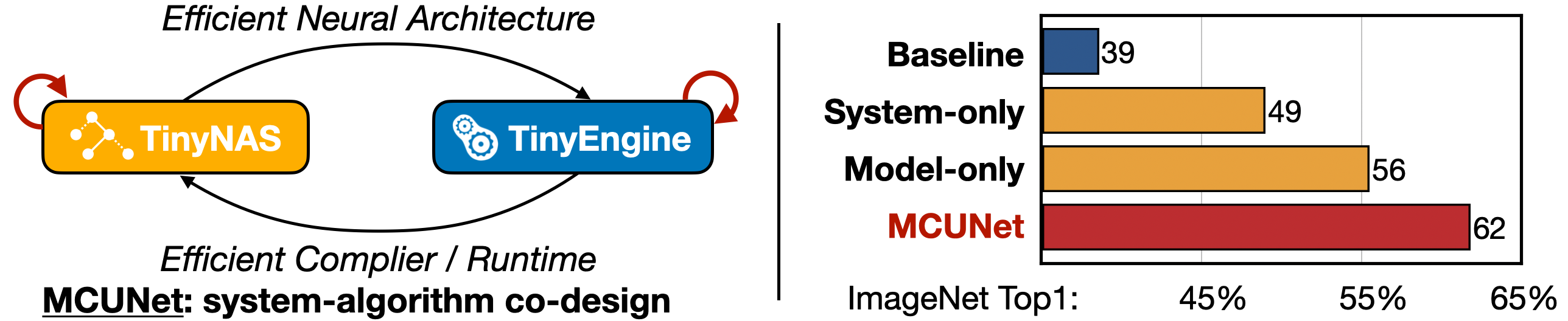

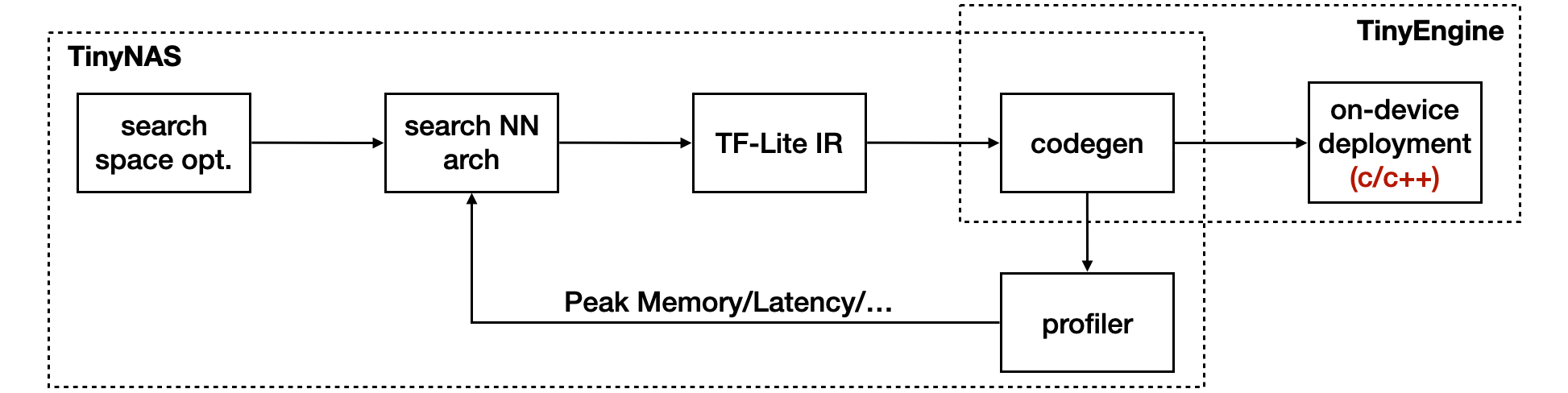

MCUNet is a system-algorithm co-design framework for tiny deep learning on microcontrollers. It consists of TinyNAS and TinyEngine, which are co-designed to fit tight memory budgets.

With system-algorithm co-design, we can significantly improve deep learning performance under the same tiny memory budget.

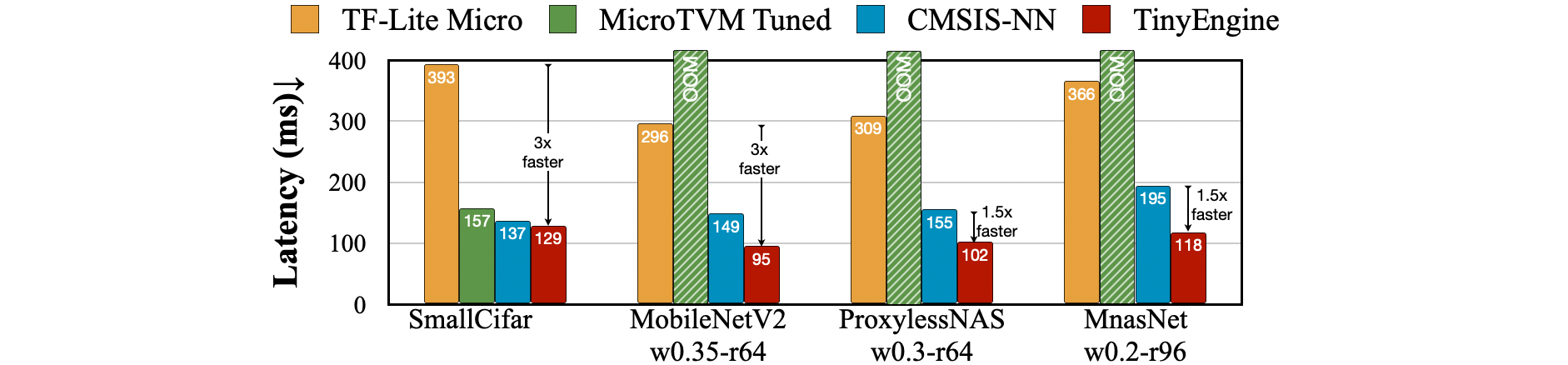

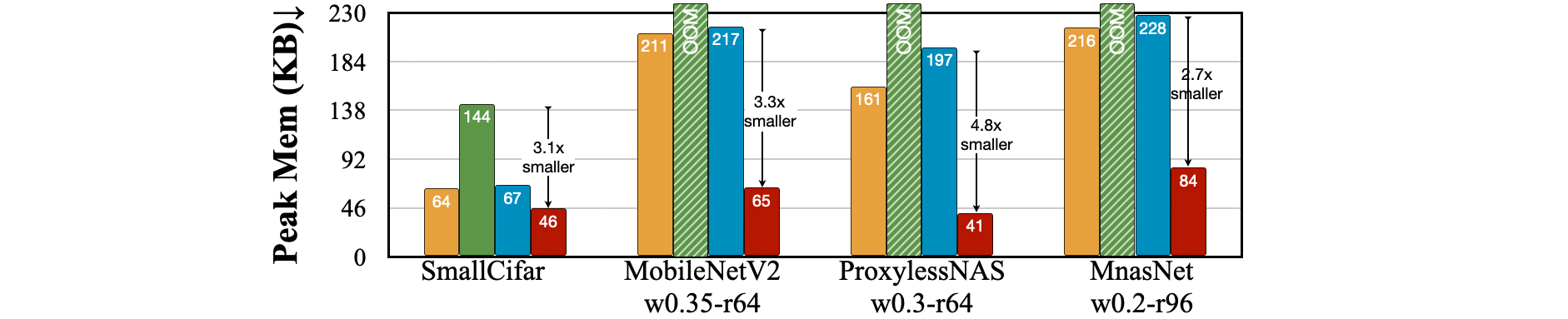

Our TinyEngine inference engine could serve as useful infrastructure for MCU-based AI applications. Compared with existing libraries such as TF-Lite Micro, CMSIS-NN, and MicroTVM, it significantly improves inference speed and reduces memory usage:

- TinyEngine improves the inference speed by 1.5-3x

- TinyEngine reduces the peak memory by 2.7-4.8x

The structure of the MCUNet implementation is as follows. The project is implemented mostly in Python, except for device-side deployment. TinyEngine and TinyNAS share the code-generation module, which is used both for memory profiling during architecture search and as the first step of device deployment.

We provide the searched models for ImageNet, Visual Wake Words (VWW), and Google Speech Commands for comparison. The TF-Lite Micro and TinyEngine statistics are based on the int8-quantized version of each model (unless otherwise stated).

- Inference engines: we compare TF-Lite Micro and TinyEngine for the on-device statistics.
- Model formats: we provide each model both in PyTorch floating-point format (a JSON definition of the architecture plus a checkpoint (ckpt) for the weights) and in TF-Lite int8 quantized format (tflite).
- Statistics: we include
  - model statistics: computation (MACs), number of parameters (Param), and theoretical peak activation size (Act), computed by summing the input and output activations;
  - deployment statistics: peak SRAM usage (SRAM) and Flash usage (Flash).
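Act is defined above as the theoretical peak activation size obtained by summing input and output activations. A minimal sketch of that definition, assuming int8 activations (1 byte per element) and a plain sequential chain of layers; the helper name and the layer shapes are hypothetical, and a real profiler would presumably also need to handle residual branches and scratch buffers.

```python
def peak_activation_bytes(shapes, bytes_per_elem=1):
    """Peak of (input + output) activation size over a sequential chain.

    shapes[i] is the (H, W, C) input of layer i and shapes[i + 1] its output.
    bytes_per_elem=1 assumes int8 activations.
    """
    sizes = [h * w * c * bytes_per_elem for h, w, c in shapes]
    # Each layer needs its input and output buffers alive at the same time.
    return max(sizes[i] + sizes[i + 1] for i in range(len(sizes) - 1))


# Hypothetical activation shapes, not taken from an actual MCUNet model.
shapes = [(144, 144, 3), (72, 72, 16), (72, 72, 8), (36, 36, 24)]
print(peak_activation_bytes(shapes))  # → 145152 (bytes, about 142kB)
```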

We provide download links in the following tables. Alternatively, you can download all the checkpoints and tf-lite files at once by running:

python jobs/download_all_models.py

We first compare MCUNet with the baseline networks, scaled MobileNetV2 (MbV2-s) and scaled ProxylessNAS-Mobile (Proxyless-s), on STM32F746. The baselines are scaled to fit the hardware constraints: we compound-scale their width and resolution and report the best accuracy under the constraints.

The latency and memory usage are measured on the STM32F746 board ("OOM" means the model does not fit into the board's memory). TinyEngine reduces Flash and SRAM usage and can therefore fit a larger model; TinyNAS designs networks with superior accuracy under the same memory budget.
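The compound scaling of the baselines can be pictured as a small grid search: try every (width, resolution) pair, discard those that violate the memory constraints, and keep the most accurate survivor. The sketch below is not the authors' script; it assumes two user-supplied callables standing in for a memory profiler and an evaluation run, and the toy models and grid values are hypothetical. Labels such as `w0.3-r80` in the table appear to record the chosen width multiplier and input resolution.

```python
from itertools import product


def compound_scale(widths, resolutions, fits_budget, accuracy):
    """Return the (width, resolution) pair with the best accuracy among
    those that satisfy the memory constraints, or None if none fit."""
    best, best_acc = None, float("-inf")
    for w, r in product(widths, resolutions):
        if not fits_budget(w, r):
            continue  # skip configurations that exceed the SRAM/Flash limits
        acc = accuracy(w, r)
        if acc > best_acc:
            best, best_acc = (w, r), acc
    return best


def toy_fits(w, r):
    # Hypothetical memory model: cost grows with width * resolution^2.
    return w * r * r <= 7500


def toy_accuracy(w, r):
    # Hypothetical accuracy model: larger width and resolution help.
    return w + r / 100


print(compound_scale([0.25, 0.3, 0.35, 0.5], [80, 112, 144, 176],
                     toy_fits, toy_accuracy))  # → (0.35, 144)
```

In practice the accuracy callable is the expensive part (training or fine-tuning each candidate), which is why checking the memory constraint first pays off.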

| Model | Model Stats. | TF-Lite Stats. | TinyEngine Stats. | Top-1 Acc. | Top-5 Acc. | Link |
|---|---|---|---|---|---|---|
| MbV2-s for TF-Lite (w0.3-r80) | MACs: 7.3M, Param: 0.63M, Act: 94kB | SRAM: 306kB, Flash: 943kB, Latency: 445ms | SRAM: 98kB, Flash: 745kB, Latency: 124ms | FP: 39.3%, int8: 38.6% | FP: 64.8%, int8: 64.1% | json ckpt tflite |
| MbV2-s for TinyEngine (w0.35-r144) | MACs: 23.5M, Param: 0.75M, Act: 303kB | SRAM: 646kB, Flash: 1060kB, Latency: OOM | SRAM: 308kB, Flash: 862kB, Latency: 393ms | FP: 49.7%, int8: 49.0% | FP: 74.6%, int8: 73.8% | json ckpt tflite |
| Proxyless-s for TF-Lite (w0.25-r112) | MACs: 10.7M, Param: 0.57M, Act: 98kB | SRAM: 288kB, Flash: 860kB, Latency: 660ms | SRAM: 114kB, Flash: 701kB, Latency: 169ms | FP: 44.9%, int8: 43.8% | FP: 70.0%, int8: 69.0% | json ckpt tflite |
| Proxyless-s for TinyEngine (w0.3-r176) | MACs: 38.3M, Param: 0.75M, Act: 242kB | SRAM: 526kB, Flash: 1063kB, Latency: OOM | SRAM: 292kB, Flash: 892kB, Latency: 572ms | FP: 57.0%, int8: 56.2% | FP: 80.2%, int8: 79.7% | json ckpt tflite |
| TinyNAS for TinyEngine (MCUNet) | MACs: 81.8M, Param: 0.74M, Act: 333kB | SRAM: 560kB, Flash: 1088kB, Latency: OOM | SRAM: 293kB, Flash: 897kB, Latency: 1075ms | FP: 62.2%, int8: 61.8% | FP: 84.5%, int8: 84.2% | json ckpt tflite |

We provide the MCUNet models under different memory constraints:

- STM32 F412 (Cortex-M4, 256kB SRAM/1MB Flash)

- STM32 F746 (Cortex-M7, 320kB SRAM/1MB Flash)

- STM32 H743 (Cortex-M7, 512kB SRAM/2MB Flash)

The memory usage and latency are measured on the corresponding devices.

Int8 quantization is the most widely used quantization scheme and the default setting in our experiments.
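For reference, TF-Lite's int8 scheme is affine: a quantized value q represents the real value scale · (q − zero_point), with q in [−128, 127]. The sketch below illustrates that mapping with a single per-tensor scale derived from a min/max calibration range; the calibration method and helper names are simplifying assumptions, not necessarily how the released models were quantized.

```python
def quant_params(x_min, x_max, qmin=-128, qmax=127):
    """Per-tensor affine parameters from a calibration range (widened to include 0)."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(xs, scale, zero_point, qmin=-128, qmax=127):
    # q = round(x / scale) + zero_point, clamped to the int8 range.
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs, scale, zero_point):
    # x ≈ scale * (q - zero_point)
    return [scale * (q - zero_point) for q in qs]


scale, zp = quant_params(-1.0, 1.0)
q = quantize([-1.0, 0.0, 0.5, 1.0], scale, zp)
print(q, dequantize(q, scale, zp))
```

Because the zero point is an integer, real 0.0 is represented exactly, which matters for zero padding in convolutions.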

| Constraints | Model Stats. | TF-Lite Stats. | TinyEngine Stats. | Top-1 Acc. | Top-5 Acc. | Link |
|---|---|---|---|---|---|---|
| 256kB, 1MB | MACs: 67.3M, Param: 0.73M, Act: 325kB | SRAM: 546kB, Flash: 1081kB, Latency: OOM | SRAM: 242kB, Flash: 878kB, Latency: 3535ms | FP: 60.9%, int8: 60.3% | FP: 83.3%, int8: 82.6% | json ckpt tflite |
| 320kB, 1MB | MACs: 81.8M, Param: 0.74M, Act: 333kB | SRAM: 560kB, Flash: 1088kB, Latency: OOM | SRAM: 293kB, Flash: 897kB, Latency: 1075ms | FP: 62.2%, int8: 61.8% | FP: 84.5%, int8: 84.2% | json ckpt tflite |
| 512kB, 2MB | MACs: 125.9M, Param: 1.7M, Act: 413kB | SRAM: 863kB, Flash: 2133kB, Latency: OOM | SRAM: 456kB, Flash: 1876kB, Latency: 617ms | FP: 68.4%, int8: 68.0% | FP: 88.4%, int8: 88.1% | json ckpt tflite |
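Whether a model fits a constraint comes down to comparing its measured peak SRAM and Flash with the device budget. A small sketch using the numbers from the table above, assuming 1MB = 1024kB and 2MB = 2048kB (the README does not state the unit convention); the `fits` helper is illustrative only.

```python
# (SRAM budget kB, Flash budget kB) per constraint, assuming 1MB = 1024kB.
BUDGETS = {
    "256kB-1MB": (256, 1024),
    "320kB-1MB": (320, 1024),
    "512kB-2MB": (512, 2048),
}

# Measured (peak SRAM kB, Flash kB) per engine, copied from the table.
MEASURED = {
    "256kB-1MB": {"TF-Lite": (546, 1081), "TinyEngine": (242, 878)},
    "320kB-1MB": {"TF-Lite": (560, 1088), "TinyEngine": (293, 897)},
    "512kB-2MB": {"TF-Lite": (863, 2133), "TinyEngine": (456, 1876)},
}


def fits(usage, budget):
    """True if both peak SRAM and Flash stay within the device budget."""
    sram, flash = usage
    return sram <= budget[0] and flash <= budget[1]


for name, budget in BUDGETS.items():
    for engine, usage in MEASURED[name].items():
        print(name, engine, "fits" if fits(usage, budget) else "does not fit")
```

Under these assumptions every TinyEngine entry fits and every TF-Lite entry does not, matching the OOM entries in the TF-Lite column.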

We can further reduce memory usage with lower precision (int4). Note that int4 quantization causes a large accuracy drop compared to the floating-point models, so we perform quantization-aware training and report only the quantized accuracy.

Note: int4 quantization does NOT bring a further speedup, due to the limitations of the instruction set. It may not be a better trade-off than int8 when inference latency is a concern.

TODO: we are still organizing the code and the right model format for int4 model evaluation.
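Int4 halves weight storage relative to int8 because two 4-bit values share one byte: roughly, ignoring biases and quantization parameters, the 1.4M-parameter models below need about 0.7MB for weights at 4 bits versus about 1.4MB at 8 bits. The flip side, as noted above, is that the nibbles must be unpacked before computation, so there is no speedup. A minimal packing sketch, assuming signed int4 values in [−8, 7] stored low nibble first; this layout is an assumption and not the TinyEngine int4 format, which is still being organized.

```python
def pack_int4(values):
    """Pack signed int4 values (-8..7) two per byte, low nibble first."""
    if any(not -8 <= v <= 7 for v in values):
        raise ValueError("int4 values must lie in [-8, 7]")
    padded = list(values) + [0] * (len(values) % 2)  # pad to an even count
    return bytes((lo & 0xF) | ((hi & 0xF) << 4)
                 for lo, hi in zip(padded[0::2], padded[1::2]))


def unpack_int4(data, count):
    """Inverse of pack_int4: sign-extend each nibble back to an int."""
    out = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out[:count]  # drop the padding nibble, if any


w = [-8, 7, 3, -1, 0]
packed = pack_int4(w)
print(len(packed), unpack_int4(packed, len(w)))  # → 3 [-8, 7, 3, -1, 0]
```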

| Constraints | Model Stats. | TinyEngine Stats. | Top-1 Acc. | Link |
|---|---|---|---|---|
| 256kB, 1MB | MACs: 134.5M, Param: 1.4M, Act: 244kB | SRAM: 233kB, Flash: 1008kB | int4: 62.0% | TODO |
| 320kB, 1MB | MACs: 170.0M, Param: 1.4M, Act: 295kB | SRAM: 282kB, Flash: 1010kB | int4: 63.5% | TODO |
| 512kB, 2MB | MACs: 466.8M, Param: 3.3M, Act: 495kB | SRAM: 498kB, Flash: 1986kB | int4: 70.7% | TODO |

We provide the MCUNet models under different latency constraints. The models are designed to fit STM32F746 with 320kB SRAM and 1MB Flash.
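A frame-rate target translates directly into a per-inference latency budget of 1000 / FPS milliseconds (200ms at 5FPS, 100ms at 10FPS). The check below uses the TinyEngine latencies from the table that follows; it ignores any pre- or post-processing time, which is a simplifying assumption.

```python
def latency_budget_ms(fps):
    """Per-frame latency budget in milliseconds for a target frame rate."""
    return 1000.0 / fps


# TinyEngine latencies (ms) on STM32F746, copied from the table.
measured_ms = {5: 197, 10: 92}

for fps, latency in measured_ms.items():
    budget = latency_budget_ms(fps)
    print(f"{fps}FPS: budget {budget:.0f}ms, measured {latency}ms, "
          f"ok={latency <= budget}")
```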

| Latency Limit | Model Stats. | TF-Lite Stats. | TinyEngine Stats. | Top-1 Acc. | Top-5 Acc. | Link |
|---|---|---|---|---|---|---|
| N/A | MACs: 81.8M, Param: 0.74M, Act: 333kB | SRAM: 560kB, Flash: 1088kB, Latency: OOM | SRAM: 293kB, Flash: 897kB, Latency: 1075ms | FP: 62.2%, int8: 61.8% | FP: 84.5%, int8: 84.2% | json ckpt tflite |
| 5FPS | MACs: 12.8M, Param: 0.6M, Act: 90kB | SRAM: 295kB, Flash: 941kB, Latency: 659ms | SRAM: 107kB, Flash: 770kB, Latency: 197ms | FP: 51.5%, int8: 49.9% | FP: 75.5%, int8: 74.1% | json ckpt tflite |
| 10FPS | MACs: 6.4M, Param: 0.7M, Act: 45kB | SRAM: 237kB, Flash: 1069kB, Latency: OOM | SRAM: 54kB, Flash: 889kB, Latency: 92ms | FP: 41.5%, int8: 40.4% | FP: 66.3%, int8: 65.2% | json ckpt tflite |

The Visual Wake Words (VWW) and Google Speech Commands models will be released soon.

We provide scripts to test the accuracy of both the floating-point PyTorch models and the int8 TF-Lite models.
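The Top-1 and Top-5 columns in the tables count a prediction as correct when the true label is among the k highest-scoring classes. A small pure-Python sketch of that metric for illustration; the evaluation scripts' own implementation may differ.

```python
def topk_accuracy(logits, labels, k=1):
    """Fraction of samples whose label is among the k largest scores."""
    correct = 0
    for scores, label in zip(logits, labels):
        # Indices of the k highest-scoring classes for this sample.
        topk = sorted(range(len(scores)), key=scores.__getitem__,
                      reverse=True)[:k]
        correct += label in topk
    return correct / len(labels)


logits = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 2, 1]
print(topk_accuracy(logits, labels, k=1), topk_accuracy(logits, labels, k=2))
```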

To evaluate the accuracy of PyTorch models, run:

horovodrun -np 8 \
python jobs/run_imagenet.py --evaluate --batch_size 50 \
--train-dir PATH/TO/IMAGENET/train --val-dir PATH/TO/IMAGENET/val \
--net_config assets/configs/mcunet-320kb-1mb_imagenet.json \
--load_from assets/pt_ckpt/mcunet-320kb-1mb_imagenet.pth

To evaluate the accuracy of int8 TF-Lite models, run:

python jobs/eval_tflite.py \
--val-dir PATH/TO/IMAGENET/val \
--tflite_path assets/tflite/mcunet-320kb-1mb_imagenet.tflite

Development and deployment on microcontrollers can be difficult and slow. However, neural architecture search needs to frequently obtain the hardware statistics (e.g., peak SRAM, Flash usage, and latency) of randomly sampled architectures. To speed up this process and promote research on deep learning for microcontrollers, we developed profilers that obtain these statistics without running the model on the hardware.

We provide the script to profile the following statistics:

- Model statistics: #MACs, #Param

- Memory statistics: Peak SRAM, Flash usage (on both TF-Lite Micro and TinyEngine)

- Latency statistics: on the STM32F746 board (TODO: will be updated soon)
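The model statistics follow from layer shapes alone. For a 2D convolution with a K×K kernel, C_in input channels, C_out output channels, and `groups` groups (groups = C_in for a depthwise convolution), each weight is used once per output pixel, so MACs = (number of weights) × H_out × W_out. A sketch of this standard counting rule; whether the profiler counts biases, and how it treats other layer types, are assumptions here, and the block shapes are hypothetical.

```python
def conv2d_stats(c_in, c_out, k, h_out, w_out, groups=1, bias=False):
    """Return (MACs, params) for a 2D convolution layer."""
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out  # every weight is used once per output pixel
    return macs, params


# Hypothetical inverted-residual block at 56x56:
# 1x1 expansion, 3x3 depthwise, 1x1 projection.
layers = [
    conv2d_stats(16, 96, 1, 56, 56),
    conv2d_stats(96, 96, 3, 56, 56, groups=96),
    conv2d_stats(96, 24, 1, 56, 56),
]
total_macs = sum(m for m, _ in layers)
total_params = sum(p for _, p in layers)
print(total_macs, total_params)  # → 14751744 4704
```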

To evaluate the model statistics and deployment statistics from a JSON config, run:

python jobs/profile_model.py assets/configs/mcunet-320kb-1mb_imagenet.json

You can also refer to the APIs to integrate the model profiler into your search pipeline.

Note that the profiled SRAM, Flash, and latency are estimates rather than on-device measurements. However, we find the estimates precise enough for neural architecture search. We will provide a detailed analysis of the estimation precision.

Due to time constraints, we have not yet finished cleaning up all the code (all features were implemented by the submission, but not all of them in a clean way :) ). The repo is still under development; please check back for updates.

Currently, our code cleaning plan is:

- Latency profiler; comparison between estimated and measured memory/latency.

- VWW & Speech Commands models.

- Int4 model format & evaluation.

- TinyNAS search & training.

- TinyEngine on-device deployment.

- Python 3.6+
- PyTorch 1.4.0+
- TensorFlow 1.15
- Horovod

We thank the MIT Satori cluster for providing computation resources. We thank MIT-IBM Watson AI Lab, Qualcomm, NSF CAREER Award #1943349, and NSF RAPID Award #2027266 for supporting this research.

Part of the code is taken from the once-for-all project.

- TinyTL: Reduce Memory, Not Parameters for Efficient On-Device Learning (NeurIPS'20)
- Once for All: Train One Network and Specialize it for Efficient Deployment (ICLR'20)
- ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware (ICLR'19)
- AutoML for Architecting Efficient and Specialized Neural Networks (IEEE Micro)
- AMC: AutoML for Model Compression and Acceleration on Mobile Devices (ECCV'18)
- HAQ: Hardware-Aware Automated Quantization (CVPR'19, oral)