Code for the paper "Multi-scale Attention Network for Single Image Super-Resolution".

    @article{wang2022multi,
      title={Multi-scale Attention Network for Single Image Super-Resolution},
      author={Wang, Yan and Li, Yusen and Wang, Gang and Liu, Xiaoguang},
      journal={arXiv preprint arXiv:2209.14145},
      year={2022}
    }

Network architecture: MAB number (n_resblocks): 5/24/36, channel width (n_feats): 48/60/180 for tiny/light/base MAN.
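The three configurations above can be written down as a small lookup table. This is only a sketch: the names `MAN_VARIANTS` and `man_hparams` are hypothetical and not part of this repository; only the `n_resblocks` and `n_feats` values come from the line above.

```python
# Hypothetical lookup table; only the numbers are taken from this README.
MAN_VARIANTS = {
    "tiny": {"n_resblocks": 5, "n_feats": 48},
    "light": {"n_resblocks": 24, "n_feats": 60},
    "base": {"n_resblocks": 36, "n_feats": 180},
}


def man_hparams(variant: str) -> dict:
    """Return a copy of the MAB count and channel width for a MAN variant."""
    if variant not in MAN_VARIANTS:
        known = ", ".join(sorted(MAN_VARIANTS))
        raise ValueError(f"unknown variant {variant!r}; expected one of: {known}")
    return dict(MAN_VARIANTS[variant])


if __name__ == "__main__":
    for name in MAN_VARIANTS:
        print(name, man_hparams(name))
```

The returned dictionary could be unpacked into whatever model constructor you use, provided its keyword names match.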

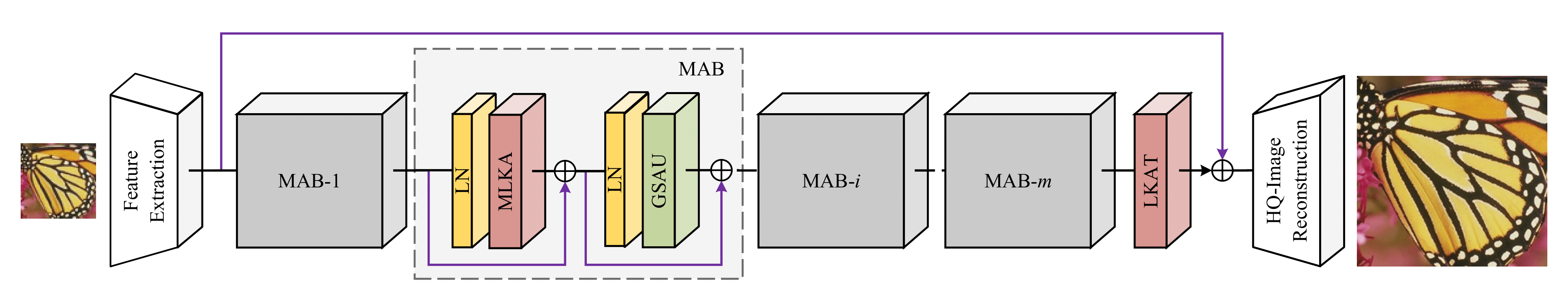

Overview of the proposed MAN, which consists of three components: the shallow feature extraction module (SF), the deep feature extraction module (DF) built from multiple multi-scale attention blocks (MAB), and the high-quality image reconstruction module.

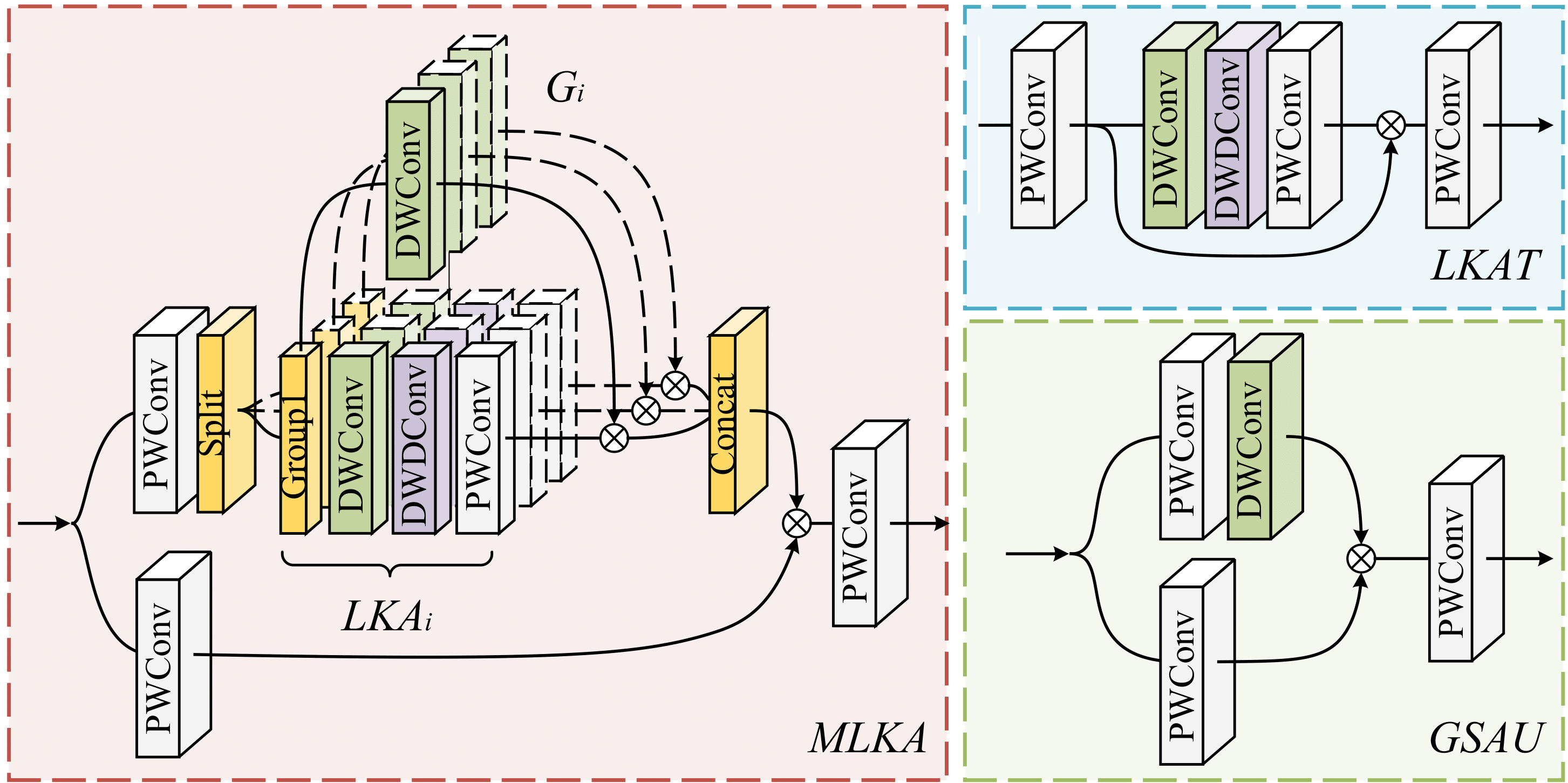

Component details: three multi-scale decomposition modes are utilized in MLKA. The 7×7 depth-wise convolution is used in the GSAU.

Details of Multi-scale Large Kernel Attention (MLKA), Gated Spatial Attention Unit (GSAU), and Large Kernel Attention Tail (LKAT).
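To give a feel for what a large-kernel decomposition buys, the sketch below computes the receptive field of a depth-wise convolution followed by a depth-wise dilated convolution, both with stride 1. The three (kernel, dilated kernel, dilation) triples are an assumption about the MLKA modes and are not stated in this README, so check them against the model code before relying on them. The helper names are hypothetical.

```python
def stacked_receptive_field(dw_kernel: int, dilated_kernel: int, dilation: int) -> int:
    """Receptive field of a k1 x k1 conv followed by a dilated k2 x k2 conv (stride 1).

    The dilated conv spans (k2 - 1) * dilation + 1 input positions, and stacking
    it on a k1 x k1 conv adds k1 - 1 more, giving k1 + (k2 - 1) * dilation.
    """
    return dw_kernel + (dilated_kernel - 1) * dilation


def same_padding(kernel: int, dilation: int = 1) -> int:
    """Padding that keeps the spatial size unchanged for odd kernels at stride 1."""
    return (kernel // 2) * dilation


# ASSUMPTION: (depth-wise kernel, dilated depth-wise kernel, dilation) per mode.
ASSUMED_MODES = [(3, 5, 2), (5, 7, 3), (7, 9, 4)]

if __name__ == "__main__":
    for dw, dwd, d in ASSUMED_MODES:
        rf = stacked_receptive_field(dw, dwd, d)
        print(f"DW {dw}x{dw} + DWD {dwd}x{dwd} (dilation {d}): "
              f"receptive field {rf}x{rf}, dilated padding {same_padding(dwd, d)}")
```

Under these assumed settings, each mode covers a much larger window than either of its two convolutions alone, which is the point of the decomposition.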

Pretrained models are available on Google Drive and Baidu Pan (pwd: mans for all links).

Results of our MAN-tiny/light/base models. The Set5 validation set is used below to show general performance. Visual results on five test sets are provided in the last column.

| Methods | Params | Madds | PSNR/SSIM (x2) | PSNR/SSIM (x3) | PSNR/SSIM (x4) | Results |
|---|---|---|---|---|---|---|
| MAN-tiny | 150K | 8.4G | 37.91/0.9603 | - | 32.07/0.8930 | x2/x4 |
| MAN-light | 840K | 47.1G | 38.18/0.9612 | 34.65/0.9292 | 32.50/0.8988 | x2/x3/x4 |
| MAN+ | 8712K | 495G | 38.44/0.9623 | 34.97/0.9315 | 32.87/0.9030 | x2/x3/x4 |
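For readers unfamiliar with the PSNR values reported above, here is a minimal pure-Python sketch of the metric on flat lists of pixel values. It is not the evaluation code used for these results: super-resolution benchmarks typically crop image borders and evaluate on the luminance channel, and both steps are omitted here. The function name and the default `max_value` of 255 are assumptions.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(psnr([52, 55, 61, 59], [52, 54, 61, 60]))
```

Higher is better; because the scale is logarithmic, differences of a few tenths of a dB between rows of the table correspond to small changes in mean squared error.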

We use the BasicSR framework for both training and testing MAN.