This repo provides the following:

- Original dataset for CKG completion. (CN-100K, CN-82K, ATOMIC)

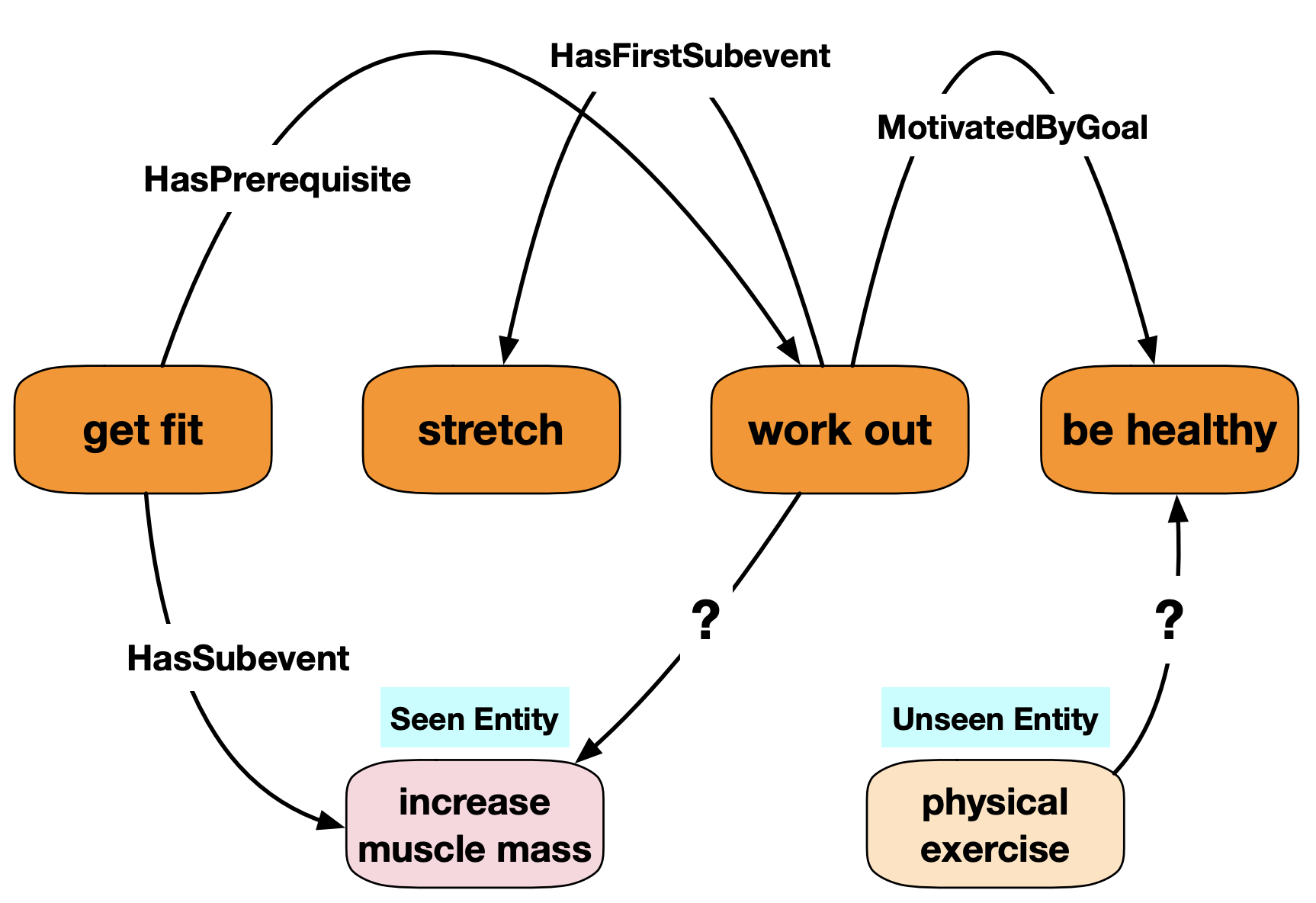

- New dataset splits for inductive CKG completion evaluation. (CN-82K-Ind, ATOMIC-Ind)

- Example code to train and evaluate. (Textual feature + GCN (with iterative graph densifier) + ConvE)

For more details, please refer to our paper, "Inductive Learning on Commonsense Knowledge Graph Completion", published at IJCNN 2021.

All five datasets are provided in this repo, in the folder 'dataset_only'. Each dataset contains three files, corresponding to 'train', 'validation', and 'test'.

For ATOMIC-related datasets, the triples are organized as 'head-relation-tail'.

For CN-related datasets, the triples are organized as 'relation-head-tail'.
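Because the two dataset families use different column orders, it is easy to swap heads and relations when loading both with one script. The following is a minimal sketch of a reader that normalizes both layouts to (head, relation, tail). It assumes tab-separated columns, which is not stated here and should be checked against the files; `parse_triples` and the `order` codes are hypothetical names, not part of this repo.

```python
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]  # always (head, relation, tail) after parsing


def parse_triples(lines: Iterable[str], order: str, sep: str = "\t") -> List[Triple]:
    """Parse raw lines into (head, relation, tail) tuples.

    order: "hrt" for ATOMIC-style files, "rht" for CN-style files.
    sep:   column separator; tab is an assumption, not confirmed by this README.
    """
    if order not in ("hrt", "rht"):
        raise ValueError(f"unknown column order: {order!r}")
    triples = []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            continue  # skip blank lines
        cols = line.split(sep)
        if len(cols) < 3:
            raise ValueError(f"expected at least 3 columns, got: {line!r}")
        if order == "hrt":
            head, rel, tail = cols[0], cols[1], cols[2]
        else:
            rel, head, tail = cols[0], cols[1], cols[2]
        # Any columns beyond the third are ignored.
        triples.append((head.strip(), rel.strip(), tail.strip()))
    return triples
```

For example, `parse_triples(open(path, encoding="utf-8"), "rht")` would read a CN-style file, where `path` points at one of the files in 'dataset_only'.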

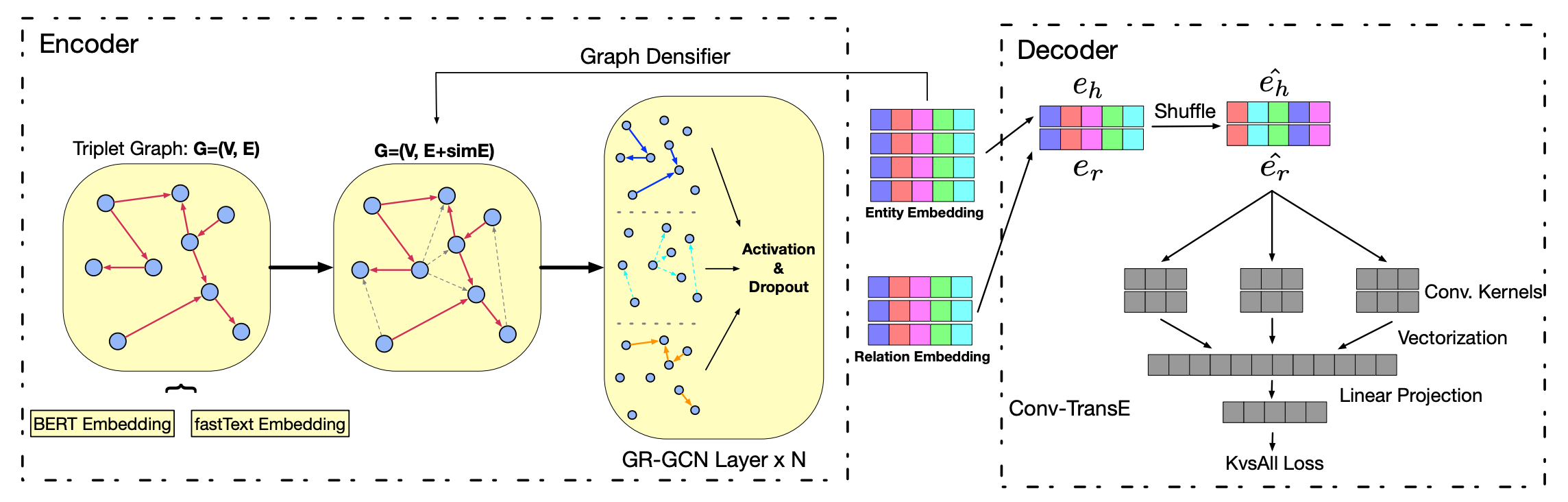

Our model contains several steps:

- Extract features for each node (pre-extracted features are provided via the Google Drive / Baidu Disk links below).

- Model Training and Evaluation

- Feature Extractor.

- Relational Graph Convolutional Network.

- ConvE Decoder for triplet prediction.

- Iterative graph densifier to provide more synthetic links for unseen entities.
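The densifier component above can be pictured as a nearest-neighbor search over node features: each node receives synthetic links to its most similar nodes, so that an unseen entity is not left isolated in the graph. The sketch below is one plausible reading in plain Python, assuming cosine similarity and a fixed neighbor count `k`; neither choice is stated here, the function names are hypothetical, and the code in this repo may differ. In an iterative setup, the same routine would be rerun on updated node embeddings.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def knn_synthetic_edges(features: Dict[str, Sequence[float]], k: int) -> List[Tuple[str, str]]:
    """Return directed (node, neighbor) pairs linking each node to its k most similar nodes."""
    edges = []
    for node, vec in features.items():
        scored = [
            (cosine(vec, other_vec), other)
            for other, other_vec in features.items()
            if other != node
        ]
        # Highest similarity first; ties broken by name for determinism.
        scored.sort(key=lambda s: (-s[0], s[1]))
        edges.extend((node, other) for _, other in scored[:k])
    return edges
```

This brute-force version compares every pair of nodes, so it is only suitable for illustration on small graphs.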

- pytorch=1.4.0

- dgl-cuda10.1

- numpy

- transformers=2.9.1

Our model relies on embedding features that we pre-extract for each node with BERT and fastText. To download the feature files:

Baidu Disk Password: 060u
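After downloading, it can help to confirm that every entity in a dataset has a feature entry before launching a long training run. The sketch below assumes each `.pkl` feature file holds a pickled Python dict keyed by entity string, which the `emb_dict` file names suggest but which is not confirmed here; `load_embedding_dict` and `missing_entities` are hypothetical helpers, not part of this repo.

```python
import pickle
from typing import Dict, Iterable, List, Sequence


def load_embedding_dict(path: str) -> Dict[str, Sequence[float]]:
    """Load a pickled feature file; assumes it holds a dict (unverified)."""
    with open(path, "rb") as f:
        obj = pickle.load(f)
    if not isinstance(obj, dict):
        raise TypeError(f"expected a dict in {path}, got {type(obj).__name__}")
    return obj


def missing_entities(entities: Iterable[str], emb: Dict[str, Sequence[float]]) -> List[str]:
    """Return the sorted, de-duplicated entities that have no entry in the embedding dict."""
    return sorted({e for e in entities if e not in emb})
```

Note that `pickle.load` can execute arbitrary code, so only load feature files obtained from a source you trust.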

bash train.sh conceptnet-82k 15 saved/saved_ckg_model data/saved_entity_embedding/conceptnet/cn_bert_emb_dict.pkl 500 256 100 ConvTransE 10 1234 1e-25 0.20 0.15 0.15 0.0003 1024 Adam 5 300 RWGCN_NET 50000 1324 data/saved_entity_embedding/conceptnet/cn_fasttext_dict.pkl 300 0.2 5 100 50 0.1

bash train.sh conceptnet-100k 15 saved/saved_ckg_model data/saved_entity_embedding/conceptnet/cn_bert_emb_dict.pkl 500 256 100 ConvTransE 10 1234 1e-20 0.25 0.25 0.25 0.0003 1024 Adam 5 300 RWGCN_NET 50000 1324 data/saved_entity_embedding/conceptnet/cn_fasttext_dict.pkl 300 0.2 5 100 50 0.1

bash train.sh atomic 500 saved/saved_ckg_model data/saved_entity_embedding/atomic/at_bert_emb_dict.pkl 500 256 100 ConvTransE 10 1234 1e-20 0.20 0.20 0.20 0.0001 1024 Adam 5 300 RWGCN_NET 50000 1324 data/saved_entity_embedding/atomic/at_fasttext_dict.pkl 300 0.2 3 100 50 0.1

- Note: There is a bug in the entity-to-embedding correspondence. Please refer to: issue1

If you find our model useful in your research, please consider citing our paper, Inductive Learning on Commonsense Knowledge Graph Completion:

@article{wang2020inductive,

title={Inductive Learning on Commonsense Knowledge Graph Completion},

author={Wang, Bin and Wang, Guangtao and Huang, Jing and You, Jiaxuan and Leskovec, Jure and Kuo, C-C Jay},

journal={arXiv preprint arXiv:2009.09263},

year={2020}

}

@inproceedings{wang2021inductive,

title={Inductive Learning on Commonsense Knowledge Graph Completion},

author={Wang, Bin and Wang, Guangtao and Huang, Jing and You, Jiaxuan and Leskovec, Jure and Kuo, C-C Jay},

booktitle={International Joint Conference on Neural Networks (IJCNN)},

year={2021}

}