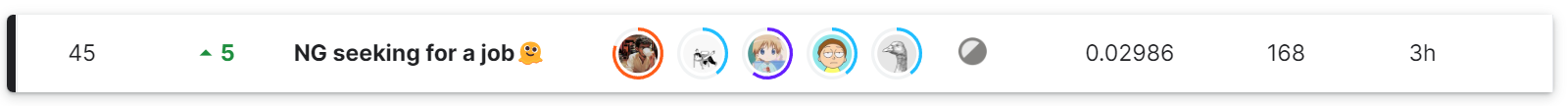

Kaggle H&M Personalized Fashion Recommendations 🥈 Silver Medal Solution 45/3006

This repo contains our final solution. Big shout out to my wonderful teammates! @zhouyuanzhe @tarickMorty @Thomasyyj @ChenmienTan

Our team finished 45th out of 3006 teams with a public leaderboard (LB) score of 0.0292 and a private leaderboard (PB) score of 0.02996.

Our final solution uses 2 recall strategies, and for each strategy we trained 3 different ranking models (LGB ranker, LGB classifier, DNN).
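Assuming "LGB ranker" refers to LightGBM's `LGBMRanker`, the ranker (unlike the classifier) needs to know which candidate rows belong to the same customer. Below is a minimal stdlib sketch of building that group list from a candidate table sorted by customer. The function name and the toy rows are hypothetical, not taken from this repo.

```python
from itertools import groupby


def ranker_group_sizes(user_ids):
    """Return the number of consecutive candidate rows for each user, in order.

    Learning-to-rank objectives (e.g. LightGBM lambdarank) expect all rows of
    one query (here: one customer) to be contiguous, plus their group sizes.
    """
    sizes = []
    seen = set()
    for uid, rows in groupby(user_ids):
        if uid in seen:
            # A user appearing in two separate runs would silently be split
            # into two queries, so fail loudly instead.
            raise ValueError(f"rows for user {uid!r} are not contiguous; sort by user first")
        seen.add(uid)
        sizes.append(sum(1 for _ in rows))
    return sizes


# Hypothetical candidate rows: (customer_id, article_id, label), sorted by customer.
candidates = [
    ("u1", "a10", 1),
    ("u1", "a11", 0),
    ("u1", "a12", 0),
    ("u2", "a10", 0),
    ("u2", "a13", 1),
]
group = ranker_group_sizes([row[0] for row in candidates])
print(group)  # [3, 2]
```

With LightGBM installed, a list like `group` is what `LGBMRanker.fit(X, y, group=group)` expects, in the same row order as `X`; the LGB classifier trains on the same rows without it.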

The candidates produced by the two strategies differ substantially, so ensembling their ranking results improves the score. In our experiments, a single recall strategy reached at most 0.0286 on the LB, while ensembling boosted the score to 0.0292. We also believe that ensembling makes our predictions more robust.
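The README does not say how the ranking results were combined. One common option is weighted rank averaging; the sketch below blends best-first article lists for a single customer and keeps the top 12 (the number of articles the competition asks for). The function name, scoring rule, and weights are assumptions for illustration only.

```python
from collections import defaultdict


def blend_rankings(rankings, weights=None, k=12):
    """Blend several best-first article lists for one customer into a top-k list.

    An article at 0-based position r in a list of length n earns
    weight * (n - r) / n points from that list; articles absent from a list
    earn nothing from it. Points are summed across lists.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    if len(weights) != len(rankings):
        raise ValueError("need exactly one weight per ranking")
    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        n = len(ranking)
        for r, article in enumerate(ranking):
            scores[article] += weight * (n - r) / n
    # Highest blended score first; ties broken by article id so output is deterministic.
    ordered = sorted(scores, key=lambda a: (-scores[a], a))
    return ordered[:k]


# Hypothetical outputs of two rankers trained on different recall strategies.
strategy_1 = ["a", "b", "c"]
strategy_2 = ["d", "a", "e"]
print(blend_rankings([strategy_1, strategy_2]))  # ['a', 'd', 'b', 'c', 'e']
```

Articles proposed by both strategies (here `a`) rise to the top, which is one way two quite different candidate sets can complement each other.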

Due to hardware limits (50 GB of RAM), we generated only about 50 candidates per user on average and used 4 weeks of data to train the models.
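The README does not spell out how the 4 weeks of training data were arranged. One plausible setup is one sample per week: for each week, candidates are recalled from earlier history and labelled by purchases made inside that week. Below is a minimal sketch of computing those weekly label windows; the function name and the example date are assumptions.

```python
from datetime import date, timedelta


def weekly_windows(last_day, n_weeks=4):
    """Return (label_start, label_end) pairs for the last n_weeks 7-day weeks.

    Both ends are inclusive, the most recent window ends on last_day, and the
    windows are returned oldest first. Transactions before label_start would
    feed recall and features; transactions inside the window give the labels.
    """
    windows = []
    for i in range(n_weeks - 1, -1, -1):
        label_end = last_day - timedelta(days=7 * i)
        label_start = label_end - timedelta(days=6)
        windows.append((label_start, label_end))
    return windows


# Example date only; use the last transaction date in your copy of the data.
for start, end in weekly_windows(date(2020, 9, 22)):
    print(start, end)
```

Keeping `n_weeks` small bounds memory, since the candidate table grows roughly with weeks × customers × candidates per customer.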

- Clone this repo.

- Create the data folders in the structure shown below and copy the four .csv files from the original Kaggle competition dataset to `data/raw/`.

- Pre-trained embeddings can be generated by this notebook, or you can download them directly through the links below and put them in `data/external/`.

- Run the Jupyter notebooks in `notebooks/`. Please note that the features used by all models are generated in the Feature Engineering part of `LGB Recall 1.ipynb`, so make sure you run it first.
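The folder setup can be scripted. Below is a minimal sketch using only `pathlib`, assuming the folder names from the project tree below and assuming the four competition files are `articles.csv`, `customers.csv`, `transactions_train.csv` and `sample_submission.csv`. The helper names are hypothetical and not part of this repo.

```python
from pathlib import Path

# Sub-folders of data/ as listed in the project tree of this README.
DATA_SUBDIRS = ("raw", "external", "interim", "processed")

# Assumed names of the four .csv files in the Kaggle competition download.
RAW_FILES = (
    "articles.csv",
    "customers.csv",
    "transactions_train.csv",
    "sample_submission.csv",
)


def make_data_dirs(root="."):
    """Create data/{raw,external,interim,processed} under root and return their paths."""
    paths = []
    for name in DATA_SUBDIRS:
        path = Path(root) / "data" / name
        path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        paths.append(path)
    return paths


def missing_raw_files(root="."):
    """List the expected competition .csv files not yet copied into data/raw/."""
    raw = Path(root) / "data" / "raw"
    return [name for name in RAW_FILES if not (raw / name).is_file()]
```

Run from the repo root, `make_data_dirs()` creates the folders, and `missing_raw_files()` returns an empty list once all four files have been copied.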

Google Drive Links to Pre-trained Embeddings

- dssm_item_embd.npy

- dssm_user_embd.npy

- yt_item_embd.npy

- yt_user_embd.npy

- w2v_item_embd.npy

- w2v_user_embd.npy

- w2v_product_embd.npy

- w2v_skipgram_item_embd.npy

- w2v_skipgram_user_embd.npy

- w2v_skipgram_product_embd.npy

- image_embd.npy
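Before running the notebooks, it can help to confirm that every file from the list above landed in `data/external/`. Below is a small hypothetical check using `pathlib`; the function name is an assumption. Once the files are present, each `.npy` file can be read with `numpy.load`.

```python
from pathlib import Path

# File names copied from the list of pre-trained embeddings above.
EMBEDDING_FILES = (
    "dssm_item_embd.npy",
    "dssm_user_embd.npy",
    "yt_item_embd.npy",
    "yt_user_embd.npy",
    "w2v_item_embd.npy",
    "w2v_user_embd.npy",
    "w2v_product_embd.npy",
    "w2v_skipgram_item_embd.npy",
    "w2v_skipgram_user_embd.npy",
    "w2v_skipgram_product_embd.npy",
    "image_embd.npy",
)


def missing_embeddings(external_dir="data/external"):
    """Return the pre-trained embedding files not yet present in external_dir."""
    folder = Path(external_dir)
    return [name for name in EMBEDDING_FILES if not (folder / name).is_file()]
```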

├── LICENSE
├── README.md
├── data
│   ├── external       <- External data source, e.g. article/customer pre-trained embeddings.
│   ├── interim        <- Intermediate data that has been transformed, e.g. candidates generated from recall strategies.
│   ├── processed      <- Processed data for training, e.g. dataframe that has been merged with generated features.
│   └── raw            <- The original dataset.
│
├── docs               <- Sphinx docstring documentation.
│
├── models             <- Trained and serialized models.
│
├── notebooks          <- Jupyter notebooks.
│
└── src                <- Source code for use in this project.
    ├── __init__.py    <- Makes src a Python module.
    │
    ├── data           <- Scripts to preprocess data.
    │   ├── datahelper.py
    │   └── metrics.py
    │
    ├── features       <- Scripts of feature engineering.
    │   └── base_features.py
    │
    └── retrieval      <- Scripts to generate candidate articles for ranking models.
        ├── collector.py
        └── rules.py
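The tree lists `src/data/metrics.py`, whose contents are not shown here. The competition was scored with MAP@12, so a minimal sketch of that metric may help when validating offline; the function names are hypothetical and this is not necessarily what `metrics.py` implements. Customers with no purchases score 0.0, following the widely used `apk` reference convention.

```python
def average_precision_at_k(actual, predicted, k=12):
    """AP@k for one customer.

    actual: set of articles the customer bought in the label week.
    predicted: best-first list of recommended articles.
    """
    if not actual:
        return 0.0
    score = 0.0
    hits = 0
    seen = set()
    for i, article in enumerate(predicted[:k]):
        # Count each relevant article once, at its first position.
        if article in actual and article not in seen:
            hits += 1
            score += hits / (i + 1.0)  # precision at cutoff i + 1
        seen.add(article)
    return score / min(len(actual), k)


def map_at_k(actuals, predictions, k=12):
    """Mean AP@k over customers; both arguments are aligned per customer."""
    if len(actuals) != len(predictions):
        raise ValueError("actuals and predictions must have the same length")
    if not actuals:
        return 0.0
    total = sum(average_precision_at_k(a, p, k) for a, p in zip(actuals, predictions))
    return total / len(actuals)


# Customer 1 bought a and c; customer 2 bought x, which was not recommended.
print(map_at_k([{"a", "c"}, {"x"}], [["a", "b", "c"], ["y", "z"]]))
```

For the first customer, hits at ranks 1 and 3 give (1/1 + 2/3) / 2 = 5/6, so the printed mean over both customers is about 0.4167.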

Project based on the cookiecutter data science project template. #cookiecutterdatascience