Benchmarking the Hallucination of Chinese Large Language Models via Unconstrained Generation

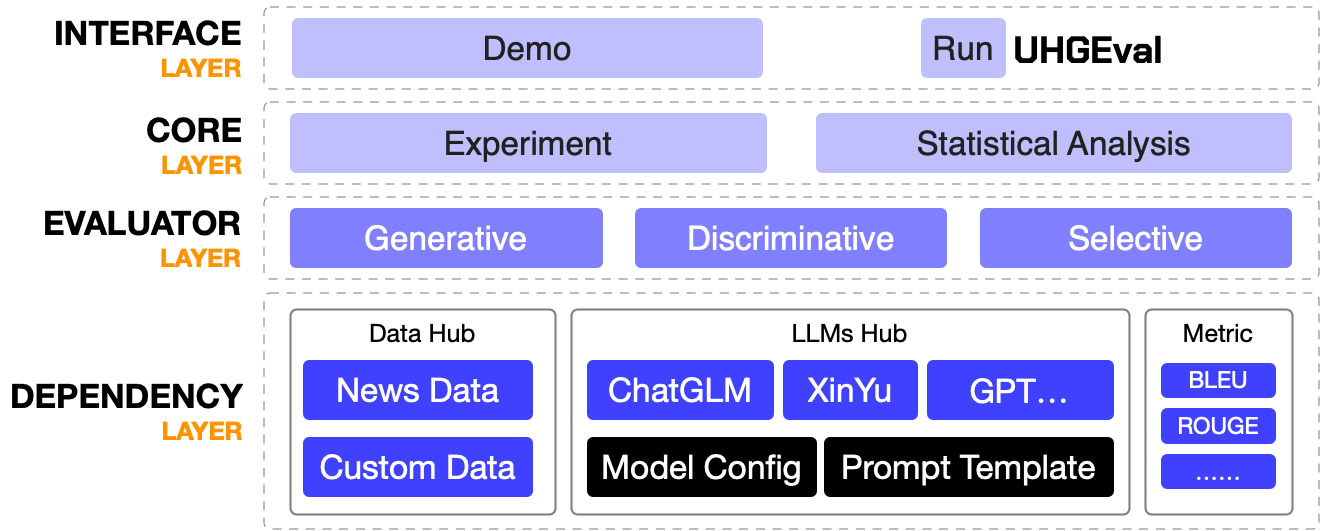

- Safety: Ensuring the security of experimental data is of utmost importance.

- Flexibility: Easily expandable, with all modules replaceable.

Get started quickly with a 20-line demo program.

- UHGEval requires Python>=3.10.0

- `pip install -r requirements.txt`

- Take `uhgeval/configs/example_config.py` as an example and create `uhgeval/configs/real_config.py` to configure the OpenAI GPT section.

- Run `demo.py`
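The setup steps above can be sanity-checked with a small preflight script. This is a minimal sketch, not part of UHGEval itself: the helper names `python_is_supported` and `ensure_real_config` are hypothetical, and it assumes that copying `example_config.py` is an acceptable starting point for `real_config.py`. You still need to edit the OpenAI GPT section by hand.

```python
import shutil
import sys
from pathlib import Path

# Minimum interpreter version, taken from the stated requirement Python>=3.10.0.
MIN_PYTHON = (3, 10)


def python_is_supported(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor) version meets MIN_PYTHON."""
    return tuple(version_info[:2]) >= MIN_PYTHON


def ensure_real_config(config_dir: Path) -> Path:
    """Create real_config.py from example_config.py if it does not exist yet.

    Hypothetical helper: an existing real_config.py is never overwritten,
    so local edits (for example API keys) are preserved.
    """
    example = config_dir / "example_config.py"
    real = config_dir / "real_config.py"
    if not real.exists():
        shutil.copyfile(example, real)
    return real
```

From the repository root, you could call `python_is_supported()` first and then `ensure_real_config(Path("uhgeval/configs"))` before running `demo.py`.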

Use run_uhgeval.py or run_uhgeval_future.py to explore the project in full. The former is provisional code slated for removal; the latter is command-line executable code intended for future use.

The original experimental results are in ./archived_experiments/20231117.

Although we have conducted thorough automatic annotation and manual verification, there may still be errors or imperfections in our XinhuaHallucinations dataset with over 5000 data points. We encourage you to raise issues or submit pull requests to assist us in improving the consistency of the dataset. You may also receive corresponding recognition and rewards for your contributions.

TODOs:

- llm, metric: enable loading from HuggingFace

- config: use config files to make experiments convenient to set up

- TruthfulQA: add new dataset and corresponding evaluators

- another repo: creation pipeline of dataset

- contribution: OpenCompass

@article{UHGEval,
    title={UHGEval: Benchmarking the Hallucination of Chinese Large Language Models via Unconstrained Generation},
    author={Xun Liang and Shichao Song and Simin Niu and Zhiyu Li and Feiyu Xiong and Bo Tang and Zhaohui Wy and Dawei He and Peng Cheng and Zhonghao Wang and Haiying Deng},
    journal={arXiv preprint arXiv:2311.15296},
    year={2023},
}