Simplifying a decimated mesh

Godatplay opened this issue

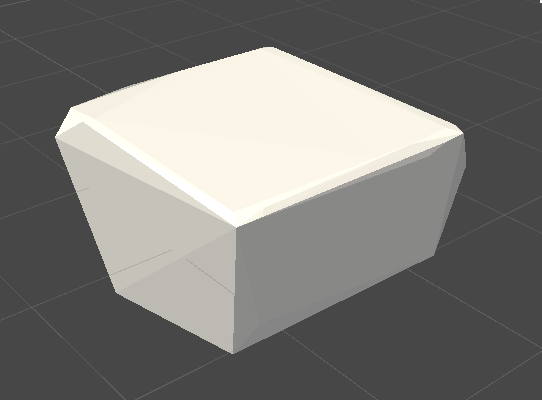

I'm trying to simplify a ~500-triangle mesh whose topology is similar to that of a decimated mesh.

But the mesh seems to lose volume quickly as I approach my goal of 100 triangles.

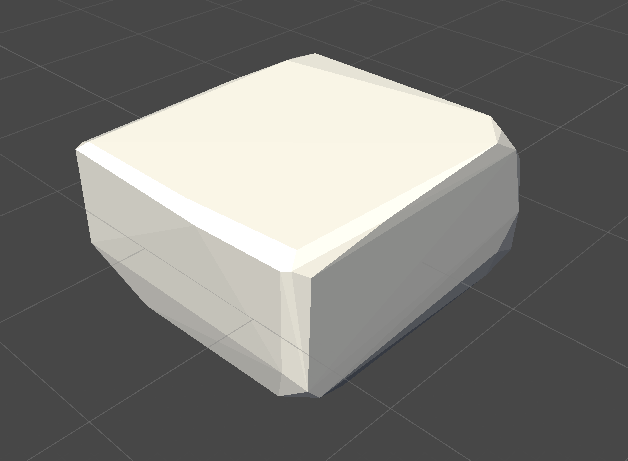

If my mesh is a little more rounded to begin with, though, it maintains its volume much better. Any suggestions?

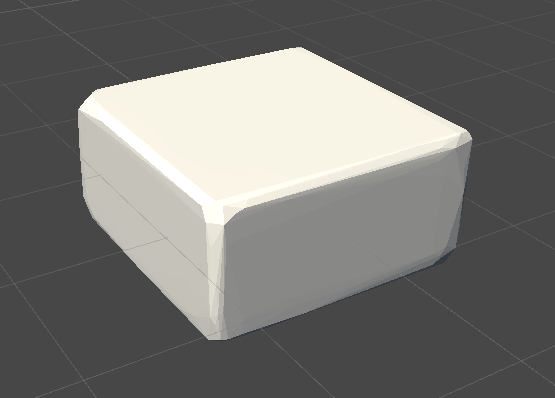

100-triangle result:

Why doesn't the algorithm simplify this to a roughly 2x1x2 box?

I think I've figured it out: I had to reduce the Aggressiveness, not increase it. The tooltips for the LOD Generator Helper seem to be incorrect, which confused me at first. The Max Iteration Count tooltip seems wrong in general; it reads as if it describes Vertex Link Distance, although that tooltip doesn't mention that the value is a squared distance. Maybe the second sentence of the Aggressiveness tooltip actually belongs to Max Iteration Count? Increasing Aggressiveness does not seem to lead to higher quality; instead, it seems to change the heuristic for which edges get collapsed.
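For context on the Aggressiveness observation: in sp4cerat's reference implementation, aggressiveness is used as an exponent in the per-pass error threshold, `1e-9 * (iteration + 3) ** aggressiveness`, and every edge whose quadric error is below the current threshold gets collapsed in that pass. The sketch below assumes the Unity port keeps that formula; the function names are hypothetical. It shows that a higher aggressiveness makes the threshold climb faster, so more expensive collapses become allowed earlier. That changes which edges go first rather than directly raising quality.

```python
# Sketch of the per-pass collapse threshold, ASSUMING the formula from
# sp4cerat's Fast-Quadric-Mesh-Simplification is kept in the Unity port:
#   threshold = 1e-9 * (iteration + 3) ** aggressiveness
# Function names are hypothetical.


def collapse_threshold(iteration: int, aggressiveness: float) -> float:
    """Error threshold below which an edge is collapsed during this pass."""
    return 1e-9 * (iteration + 3) ** aggressiveness


def threshold_schedule(aggressiveness: float, passes: int) -> list:
    """Thresholds for the first `passes` iterations."""
    return [collapse_threshold(i, aggressiveness) for i in range(passes)]


if __name__ == "__main__":
    # A higher exponent makes the threshold grow much faster per pass,
    # so costlier collapses are permitted sooner.
    for aggressiveness in (3.0, 7.0):
        schedule = threshold_schedule(aggressiveness, 5)
        print(aggressiveness, ["%.2e" % t for t in schedule])
```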

I have actually never tested much with primitive shapes, but you are right that the algorithm does a bad job here. I have seen other meshes where the order of edge collapses led to a worse result, which is why I wanted to expose some of the parameters that can make a difference.

Regarding the tooltips, I will take another look.

It's true that a higher aggressiveness doesn't necessarily lead to better quality. It all depends on the mesh being decimated.

I will see if I can set up some more tests with primitive shapes to see if there's anything that I can do about it.

I don't want this to be the wrong algorithm for your use case, but it may be. This algorithm is designed to be faster than usual by taking certain shortcuts, while still aiming for decent-quality output.

It's all based on the work of sp4cerat at https://github.com/sp4cerat/Fast-Quadric-Mesh-Simplification
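One of the shortcuts mentioned above can be illustrated in isolation. The original quadric approach collapses the cheapest edge first (typically via a priority queue), while the fast variant sweeps over the mesh in passes and collapses any edge under a growing threshold, in plain scan order. This toy sketch uses fixed, made-up edge costs (a real simplifier recomputes costs after every collapse), and the function names are hypothetical; it only shows how the two orderings can diverge.

```python
# Toy comparison of two edge-collapse orderings on fixed, made-up costs.
# Real simplifiers recompute costs after every collapse; this sketch does not.
# Function names are hypothetical.


def greedy_order(costs, target):
    """Cheapest-first ordering, as a priority-queue simplifier would pick."""
    return sorted(range(len(costs)), key=lambda i: costs[i])[:target]


def threshold_sweep_order(costs, target, aggressiveness=7.0, max_passes=100):
    """Pass-based ordering: in each pass, collapse every remaining edge whose
    cost is below a growing threshold, in scan order."""
    chosen = []
    collapsed = set()
    for iteration in range(max_passes):
        threshold = 1e-9 * (iteration + 3) ** aggressiveness
        for i, cost in enumerate(costs):
            if len(chosen) == target:
                return chosen
            if i not in collapsed and cost < threshold:
                collapsed.add(i)
                chosen.append(i)
    return chosen


if __name__ == "__main__":
    edge_costs = [5e-6, 1e-7, 3e-6, 2e-8]
    print(greedy_order(edge_costs, 3))           # [3, 1, 2]
    print(threshold_sweep_order(edge_costs, 3))  # [1, 3, 0]
```

Here the sweep collapses edge 0 (cost 5e-6) before the cheaper edge 2 (cost 3e-6), simply because edge 0 comes first in scan order once the threshold has risen past both.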

I might have made some mistakes along the way, though, so give me some time to inspect it.

Thanks for your reply! Let me know if test meshes would help. And yeah I saw that. Nice work on implementing this solution, it's indeed super fast! I appreciate you sharing this with the Unity community ^_^

Any meshes that you can provide would indeed be helpful. My 3D modeling skills are not that great so I heavily rely on public meshes on the internet, haha.

I'm glad that you find it useful :)

@Godatplay I found that the algorithm decimates even a simple plane horribly. That makes sense, considering that the algorithm doesn't really care about the boundaries of the mesh. I did some experiments but couldn't get results that would work in the more general case.
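To see why a flat plane is a worst case: in the quadric approach, each vertex's quadric is the sum of p p^T over the planes of its adjacent faces, and the error of moving the vertex to v is v^T Q v in homogeneous coordinates. On a flat region every adjacent face has the same plane, so sliding a vertex anywhere within that plane costs exactly zero, including sliding a boundary vertex inward. This minimal pure-Python sketch (hypothetical helper names) checks that on a corner of a unit square split into two triangles.

```python
import math

# Minimal quadric-error sketch (after Garland & Heckbert); helper names are
# hypothetical. Points are (x, y, z) tuples; quadrics are 4x4 nested lists.


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def face_quadric(p0, p1, p2):
    """K = p p^T for the triangle's plane, with p = (n, -n.p0) and unit n."""
    n = cross(sub(p1, p0), sub(p2, p0))
    length = math.sqrt(dot(n, n))
    n = (n[0] / length, n[1] / length, n[2] / length)
    p = (n[0], n[1], n[2], -dot(n, p0))
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]


def add_quadrics(q1, q2):
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]


def vertex_error(q, v):
    """Sum of squared distances from v to the planes folded into q."""
    h = (v[0], v[1], v[2], 1.0)
    return sum(h[i] * q[i][j] * h[j] for i in range(4) for j in range(4))


if __name__ == "__main__":
    # Unit square on z = 0, split into two triangles sharing corner (0, 0, 0).
    a, b, c, d = (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)
    corner_q = add_quadrics(face_quadric(a, b, c), face_quadric(a, c, d))
    print(vertex_error(corner_q, (0.5, 0.5, 0.0)))  # moved inward, in-plane
    print(vertex_error(corner_q, (0.0, 0.0, 1.0)))  # moved off the plane
```

Nothing in the quadric distinguishes the square's outline from its interior, so the corner can be pulled inward at zero cost.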

I tried things such as adding weights for edges along discontinuities between faces, which did a better job of keeping the boundaries at higher quality, depending on the configured weight. However, there appears to be some problem in the quadric math. I based these experiments on this paper (https://www.cs.cmu.edu/~./garland/Papers/quadrics.pdf), specifically the "Preserving Boundaries" part of section 6.
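The boundary-preserving idea from section 6 of that paper can be sketched as follows: for each boundary edge, build the plane that contains the edge and is perpendicular to the adjacent face, scale its quadric by a large penalty weight, and add it to the quadrics of both endpoints. This is a minimal Python sketch with hypothetical names and an arbitrary weight of 1000; it is not the code from the experiments branch. Moving a vertex along the boundary stays free, while pulling it inward now has a cost.

```python
import math

# Boundary-constraint quadric, after section 6 of Garland & Heckbert.
# Helper names and the penalty weight are hypothetical choices.


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def boundary_quadric(e0, e1, face_normal, weight):
    """Weighted quadric of the plane that contains edge (e0, e1) and is
    perpendicular to the adjacent face. In a full simplifier this would be
    added to the quadrics of both e0 and e1."""
    n = normalize(cross(sub(e1, e0), face_normal))
    p = (n[0], n[1], n[2], -dot(n, e0))
    return [[weight * p[i] * p[j] for j in range(4)] for i in range(4)]


def vertex_error(q, v):
    h = (v[0], v[1], v[2], 1.0)
    return sum(h[i] * q[i][j] * h[j] for i in range(4) for j in range(4))


if __name__ == "__main__":
    # Boundary edge along the x axis of a face lying in the plane z = 0.
    q = boundary_quadric((0, 0, 0), (1, 0, 0), (0, 0, 1), weight=1000.0)
    print(vertex_error(q, (0.5, 0.0, 0.0)))  # slide along the boundary: free
    print(vertex_error(q, (0.5, 0.5, 0.0)))  # pull inward: penalized
```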

So I think preserving the boundaries is only part of the problem. The problem in the quadric math is simply that the matrices are added together on each edge collapse, and the more often this happens, the more error accumulates in the resulting vertex position after a collapse. This became much more apparent during my experiments.
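Each collapse in the standard quadric approach can be sketched like this: the two endpoint quadrics are summed, and the new vertex goes to the point minimizing v^T Q v, found by solving A v = -b, where A is the upper-left 3x3 block of Q and b its last column. When A is singular (for example, when all accumulated planes are coplanar), the sketch falls back to the cheapest of the two endpoints and their midpoint, which appears to be roughly what sp4cerat's reference code does. Helper names are hypothetical. When the summed planes are nearly, but not exactly, coplanar, as on the broad flat faces of a box, the system is ill-conditioned and small numeric errors can shift the solved position noticeably; that is one plausible contributor to the drift described above, not a confirmed diagnosis.

```python
# Sketch of one collapse step in quadric simplification: sum the endpoint
# quadrics, then place the new vertex at the minimizer of v^T Q v.
# Helper names are hypothetical; Q is a symmetric 4x4 nested list.


def plane_quadric(a, b, c, d):
    """K = p p^T for the plane a*x + b*y + c*z + d = 0 (unit normal assumed)."""
    p = (a, b, c, d)
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]


def add_quadrics(q1, q2):
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]


def vertex_error(q, v):
    h = (v[0], v[1], v[2], 1.0)
    return sum(h[i] * q[i][j] * h[j] for i in range(4) for j in range(4))


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def optimal_position(q, v1, v2, eps=1e-12):
    """Solve A v = -b by Cramer's rule; if A is (near) singular, fall back
    to the cheapest of v1, v2 and their midpoint."""
    a = [row[:3] for row in q[:3]]
    rhs = [-q[i][3] for i in range(3)]
    det = det3(a)
    if abs(det) > eps:
        solution = []
        for k in range(3):
            # Replace column k of A with the right-hand side.
            mk = [[rhs[i] if j == k else a[i][j] for j in range(3)]
                  for i in range(3)]
            solution.append(det3(mk) / det)
        return tuple(solution)
    mid = tuple((v1[i] + v2[i]) / 2 for i in range(3))
    return min((v1, v2, mid), key=lambda v: vertex_error(q, v))


if __name__ == "__main__":
    # Three mutually perpendicular planes meet at (1, 2, 3).
    corner = add_quadrics(add_quadrics(plane_quadric(1, 0, 0, -1),
                                       plane_quadric(0, 1, 0, -2)),
                          plane_quadric(0, 0, 1, -3))
    print(optimal_position(corner, (0, 0, 0), (2, 2, 2)))  # (1.0, 2.0, 3.0)
    # Two copies of the plane z = 0: A is singular, so the fallback is used.
    flat = add_quadrics(plane_quadric(0, 0, 1, 0), plane_quadric(0, 0, 1, 0))
    print(optimal_position(flat, (0, 0, 1), (1, 0, 0)))  # (1, 0, 0)
```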

The other problem, however, is that I'm far from an expert in mesh decimation and this kind of math. I could read up on the subject to get a deeper understanding, but to be honest, it's outside my interests.

What I'm saying is that I'm not sure I can fix this kind of problem. This project is essentially a hobby experiment that ended up on GitHub; at first I didn't even expect anyone to be interested. I have felt pressured to improve it as more people have shown interest, but my goal is to get this project to a point where I no longer have to maintain it. I'm simply not interested in developing it further, because I have far more interesting projects in the pipeline (not yet public).

I'm okay with fixing simple bugs, but I think this might be related to the original algorithm in itself.

I will leave this issue open in case I figure something out, or in case someone else out there has an idea of how to deal with this.

But just so you are aware, this issue might stay open for a long time. So if you really need this, I suggest that you find an alternative, or try to resolve the problem yourself and make a pull request.

I'm sorry that I couldn't be more helpful, but since I am doing this in my spare time, I have to prioritize the little time I have available. I hope you'll understand.

Edit: If you are interested in seeing exactly what I was experimenting with, I pushed it to this branch: `experiments/boundary-weight`