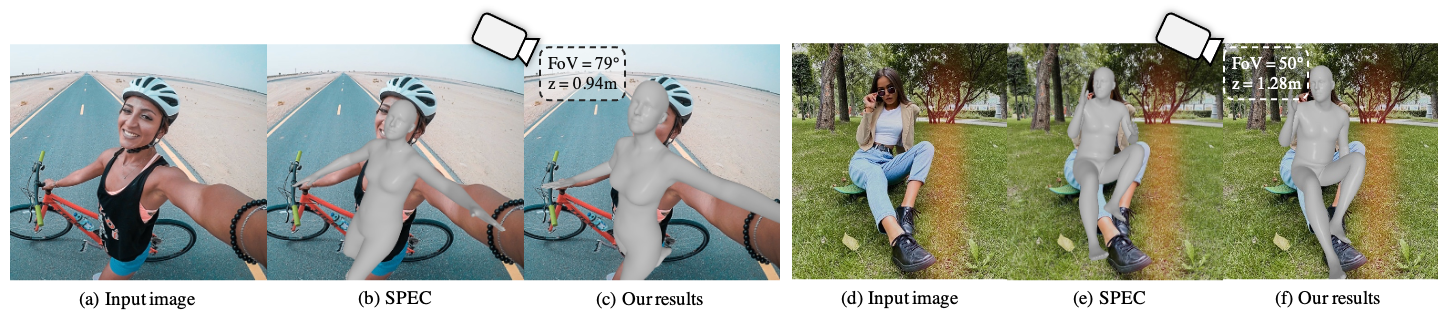

Zolly: Zoom Focal Length Correctly for Perspective-Distorted Human Mesh Reconstruction

The first work that aims to solve the 3D human mesh reconstruction task in perspective-distorted images.

🗓️ News:

🎆 2023.Nov.23, the training code of Zolly is released; pretrained Zolly weights are coming soon.

🎆 2023.Aug.12, Zolly is selected as an ICCV2023 oral; see the project page.

🎆 2023.Aug.7, the dataset link is released. The training code is coming soon.

🎆 2023.Jul.14, Zolly is accepted to ICCV2023; code and data are coming soon.

🎆 2023.Mar.27, the arXiv link is released.

🚀 Run the code

🌏 Environments

- You should install MMHuman3D first. Install its required dependencies, such as ffmpeg, torch, mmcv, and pytorch3d, following its tutorials.

- It is recommended that you install the stable version of MMHuman3D:

wget https://github.com/open-mmlab/mmhuman3d/archive/refs/tags/v0.9.0.tar.gz;

tar -xvf v0.9.0.tar.gz;

cd mmhuman3d-0.9.0;

pip install -e .

If you have difficulty installing pytorch3d, you can install it from a prebuilt package file.

E.g. python3.8 + pytorch-1.13.1 + cuda-11.7 + pytorch3d-0.7.4

wget https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch3d/linux-64/pytorch3d-0.7.4-py38_cu117_pyt1131.tar.bz2;

pip install fvcore;

pip install iopath;

conda install --use-local pytorch3d-0.7.4-py38_cu117_pyt1131.tar.bz2;

- Install this repo:

cd Zolly;

pip install -e .

📁 Required Data and Files

You can download the files from OneDrive.

This link contains:

- Dataset annotations: all include ground-truth focal length, translation, and SMPL parameters.

  - HuMMan (train, test_p1, test_p2, test_p3)

  - SPEC-MTP (test_p1, test_p2, test_p3)

  - PDHuman (train, test_p1, test_p2, test_p3, test_p4, test_p5)

  - 3DPW (train (with optimized neutral betas), test_p1, test_p2, test_p3)

- Dataset images.

  - HuMMan

  - SPEC-MTP

  - PDHuman

  - For other open-source datasets, please download them from their original websites.

- Pretrained backbone

  - hrnetw48_coco_pose.pth

  - resnet50_coco_pose.pth

- Others

  - smpl_uv_decomr.npz

  - mesh_downsampling.npz

  - J_regressor_h36m.npy

- SMPL skinning weights

  - Please obtain them from the official SMPL link.
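This README does not list the array names stored inside the annotation files, so it can help to look before wiring them into a config. The sketch below assumes the annotation files are standard NumPy .npz archives (a zip of .npy members) and reads only each member's header, so nothing is unpickled and no array data is loaded. The function name `summarize_npz` is hypothetical and not part of this repo.

```python
import ast
import struct
import zipfile


def summarize_npz(path):
    """Map each array name in an .npz archive to (shape, dtype descr).

    Only the .npy headers are parsed; array contents are never loaded.
    """
    summary = {}
    with zipfile.ZipFile(path) as archive:
        for member in archive.namelist():
            if not member.endswith(".npy"):
                continue
            with archive.open(member) as fh:
                if fh.read(6) != b"\x93NUMPY":
                    continue  # not a valid .npy member
                major, _minor = fh.read(2)
                # The header length field is 2 bytes in format v1
                # and 4 bytes in v2 and v3 (little-endian in both cases).
                if major == 1:
                    (header_len,) = struct.unpack("<H", fh.read(2))
                else:
                    (header_len,) = struct.unpack("<I", fh.read(4))
                raw = fh.read(header_len).decode("latin1").strip()
                header = ast.literal_eval(raw)
            summary[member[: -len(".npy")]] = (header["shape"], header["descr"])
    return summary
```

For example, `summarize_npz("humman_train.npz")` would return a dictionary of whatever keys that file actually contains; object-typed entries show up with a `|O` dtype descriptor.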

👇 Arrange the files

root
├── body_models
│   └── smpl
│       ├── J_regressor_extra.npy
│       ├── J_regressor_h36m.npy
│       ├── mesh_downsampling.npz
│       ├── SMPL_FEMALE.pkl
│       ├── SMPL_MALE.pkl
│       ├── smpl_mean_params.npz
│       ├── SMPL_NEUTRAL.pkl
│       └── smpl_uv_decomr.npz
├── cache
├── mmhuman_data
│   ├── datasets
│   │   ├── coco
│   │   ├── h36m
│   │   ├── humman
│   │   ├── lspet
│   │   ├── mpii
│   │   ├── mpi_inf_3dhp
│   │   ├── pdhuman
│   │   ├── pw3d
│   │   └── spec_mtp
│   └── preprocessed_datasets
│       ├── humman_test_p1.npz
│       ├── humman_train.npz
│       ├── pdhuman_test_p1.npz
│       ├── pdhuman_train.npz
│       ├── pw3d_train.npz
│       ├── pw3d_train_transl.npz
│       ├── spec_mtp.npz
│       └── spec_mtp_p1.npz
└── pretrain
    ├── hrnetw48_coco_pose.pth
    └── resnet50_coco_pose.pth

Then change the root in zolly/configs/base.py to point to this directory.
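Before training, it can save time to confirm that the layout above is complete. The following is a minimal sketch, not part of this repo: the path lists are copied from the directory tree, and `find_missing` is a hypothetical helper name. Pass it the same directory you set as the root in zolly/configs/base.py.

```python
from pathlib import Path

# Files named in the directory tree, relative to the root directory.
EXPECTED_FILES = [
    "body_models/smpl/J_regressor_extra.npy",
    "body_models/smpl/J_regressor_h36m.npy",
    "body_models/smpl/mesh_downsampling.npz",
    "body_models/smpl/SMPL_FEMALE.pkl",
    "body_models/smpl/SMPL_MALE.pkl",
    "body_models/smpl/smpl_mean_params.npz",
    "body_models/smpl/SMPL_NEUTRAL.pkl",
    "body_models/smpl/smpl_uv_decomr.npz",
    "mmhuman_data/preprocessed_datasets/humman_test_p1.npz",
    "mmhuman_data/preprocessed_datasets/humman_train.npz",
    "mmhuman_data/preprocessed_datasets/pdhuman_test_p1.npz",
    "mmhuman_data/preprocessed_datasets/pdhuman_train.npz",
    "mmhuman_data/preprocessed_datasets/pw3d_train.npz",
    "mmhuman_data/preprocessed_datasets/pw3d_train_transl.npz",
    "mmhuman_data/preprocessed_datasets/spec_mtp.npz",
    "mmhuman_data/preprocessed_datasets/spec_mtp_p1.npz",
    "pretrain/hrnetw48_coco_pose.pth",
    "pretrain/resnet50_coco_pose.pth",
]

# Directories named in the tree (dataset image folders and the cache).
EXPECTED_DIRS = ["cache"] + [
    f"mmhuman_data/datasets/{name}"
    for name in (
        "coco", "h36m", "humman", "lspet", "mpii",
        "mpi_inf_3dhp", "pdhuman", "pw3d", "spec_mtp",
    )
]


def find_missing(root):
    """Return the relative paths from the tree that do not exist under root."""
    root = Path(root)
    missing = [p for p in EXPECTED_FILES if not (root / p).is_file()]
    missing += [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    return missing
```

Calling `find_missing("/path/to/root")` returns an empty list when everything is in place. If you only train on a subset of the datasets, trim the lists accordingly.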

🚅 Train

sh train_bash.sh zolly/configs/zolly_r50.py $num_gpu$ --work-dir=$your_workdir$

E.g., you can use

sh train_bash.sh zolly/configs/zolly_r50.py 8 --work-dir=work_dirs/zolly

🚗 Test

sh test_bash.sh zolly/configs/zolly/zolly_r50.py $num_gpu$ --checkpoint=$your_ckpt$

Demo

- The demo and the pretrained checkpoint will be released in a few days.

💻 Add Your Own Algorithm

- Add your own network in zolly/models/heads, and add it to zolly/models/builder.py.

- Add your own trainer in zolly/models/architectures, and add it to zolly/models/architectures/builder.py.

- Add your own loss function in zolly/models/losses, and add it to zolly/models/losses/builder.py.

- Add your own config file in zolly/configs/; you can start from zolly/configs/zolly_r50.py. Remember to change the root parameter in zolly/configs/base.py to the directory where your files are stored.
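The steps above follow a register-then-build pattern: a class is registered under a name, and a config dictionary selects it by that name. The sketch below illustrates the idea with a toy `Registry` written for this example only. It is an assumption that the builder.py files work along these lines; the README does not spell out their API, so check zolly/models/losses/builder.py for the actual registration call. `WeightedL1Loss` and its `loss_weight` parameter are likewise hypothetical.

```python
class Registry:
    """Toy stand-in for a builder: maps class names to classes."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self, cls):
        # Used as a decorator; stores the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg):
        # Copy so the caller's config dict is not modified.
        cfg = dict(cfg)
        cls = self._modules[cfg.pop("type")]
        return cls(**cfg)


LOSSES = Registry("loss")


@LOSSES.register_module
class WeightedL1Loss:
    """Hypothetical loss: mean absolute error scaled by loss_weight."""

    def __init__(self, loss_weight=1.0):
        self.loss_weight = loss_weight

    def __call__(self, pred, target):
        total = sum(abs(p - t) for p, t in zip(pred, target))
        return self.loss_weight * total / len(pred)


# A config file would carry a dict like this one.
loss_fn = LOSSES.build(dict(type="WeightedL1Loss", loss_weight=0.5))
```

The benefit of this design is that a new head, trainer, or loss only needs to be registered once; after that, switching between implementations is a one-line change in the config file.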

🎓 Citation

If you find this project useful in your research, please consider citing:

@inproceedings{wangzolly,
  title={Zolly: Zoom Focal Length Correctly for Perspective-Distorted Human Mesh Reconstruction Supplementary Material},
  author={Wang, Wenjia and Ge, Yongtao and Mei, Haiyi and Cai, Zhongang and Sun, Qingping and Wang, Yanjun and Shen, Chunhua and Yang, Lei and Komura, Taku},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision (ICCV)},
  year={2023}
}

😁 Acknowledgements

Emojis are collected from gist:7360908.

Some of the code is based on MMHuman3D and DecoMR.

📧 Contact

Feel free to contact me for other questions or cooperation: wwj2022@connect.hku.hk