This is a living survey of Continual Learning of Large Language Models (CL-LLMs), a continually updated and extended version of the manuscript "Continual Learning of Large Language Models: A Comprehensive Survey".

Contributions are welcome: submit a pull request or open an issue!

- [07/2024] the updated version of the paper has been released on arXiv.

- [06/2024] (⭐) added new papers released between 05/2024 and 06/2024.

- [05/2024] (🔥) added new papers released between 02/2024 and 05/2024.

- [04/2024] initial release.

- Relevant Survey Papers

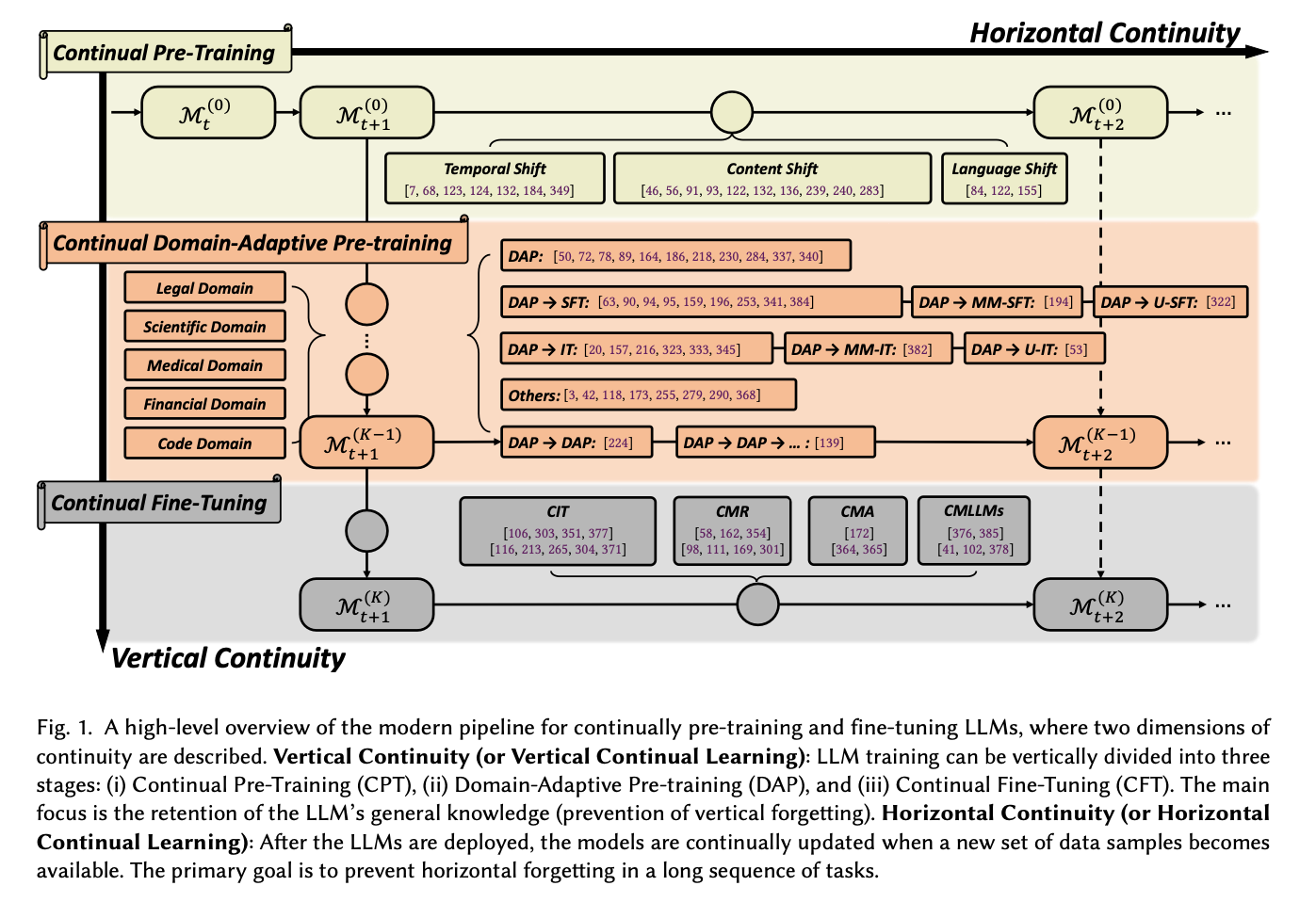

- Continual Pre-Training of LLMs (CPT)

- Domain-Adaptive Pre-Training of LLMs (DAP)

- Continual Fine-Tuning of LLMs (CFT)

- Continual LLMs Miscs

- ⭐ Towards Lifelong Learning of Large Language Models: A Survey [paper][code]

- 🔥 Recent Advances of Foundation Language Models-based Continual Learning: A Survey [paper]

- A Comprehensive Survey of Continual Learning: Theory, Method and Application (TPAMI 2024) [paper]

- Continual Learning for Large Language Models: A Survey [paper]

- Continual Lifelong Learning in Natural Language Processing: A Survey (COLING 2020) [paper]

- Continual Learning of Natural Language Processing Tasks: A Survey [paper]

- A Survey on Knowledge Distillation of Large Language Models [paper]

- ⭐ How Do Large Language Models Acquire Factual Knowledge During Pretraining? [paper]

- ⭐ DHA: Learning Decoupled-Head Attention from Transformer Checkpoints via Adaptive Heads Fusion [paper]

- ⭐ MoRA: High-Rank Updating for Parameter-Efficient Fine-Tuning [paper][code]

- 🔥 Large Language Model Can Continue Evolving From Mistakes [paper]

- 🔥 Rho-1: Not All Tokens Are What You Need [paper][code]

- 🔥 Simple and Scalable Strategies to Continually Pre-train Large Language Models [paper]

- 🔥 Investigating Continual Pretraining in Large Language Models: Insights and Implications [paper]

- 🔥 Take the Bull by the Horns: Hard Sample-Reweighted Continual Training Improves LLM Generalization [paper][code]

- TimeLMs: Diachronic Language Models from Twitter (ACL 2022, Demo Track) [paper][code]

- Continual Pre-Training of Large Language Models: How to (re)warm your model? [paper]

- Continual Learning Under Language Shift [paper]

- Examining Forgetting in Continual Pre-training of Aligned Large Language Models [paper]

- Towards Continual Knowledge Learning of Language Models (ICLR 2022) [paper][code]

- Lifelong Pretraining: Continually Adapting Language Models to Emerging Corpora (NAACL 2022) [paper]

- TemporalWiki: A Lifelong Benchmark for Training and Evaluating Ever-Evolving Language Models (EMNLP 2022) [paper][code]

- Continual Training of Language Models for Few-Shot Learning (EMNLP 2022) [paper][code]

- ERNIE 2.0: A Continual Pre-Training Framework for Language Understanding (AAAI 2020) [paper][code]

- Dynamic Language Models for Continuously Evolving Content (KDD 2021) [paper]

- Continual Pre-Training Mitigates Forgetting in Language and Vision [paper][code]

- DEMix Layers: Disentangling Domains for Modular Language Modeling (NAACL 2022) [paper][code]

- Time-Aware Language Models as Temporal Knowledge Bases (TACL 2022) [paper]

- Recyclable Tuning for Continual Pre-training (ACL 2023 Findings) [paper][code]

- Lifelong Language Pretraining with Distribution-Specialized Experts (ICML 2023) [paper]

- ELLE: Efficient Lifelong Pre-training for Emerging Data (ACL 2022 Findings) [paper][code]

- ⭐ Instruction Pre-Training: Language Models are Supervised Multitask Learners [paper][code][huggingface]

- ⭐ D-CPT Law: Domain-specific Continual Pre-Training Scaling Law for Large Language Models [paper]

- 🔥 BLADE: Enhancing Black-box Large Language Models with Small Domain-Specific Models [paper]

- 🔥 Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains [paper]

- Adapting Large Language Models via Reading Comprehension (ICLR 2024) [paper][code]

- SaulLM-7B: A pioneering Large Language Model for Law [paper][huggingface]

- Lawyer LLaMA Technical Report [paper]

- ⭐ PediatricsGPT: Large Language Models as Chinese Medical Assistants for Pediatric Applications [paper]

- 🔥 Hippocrates: An Open-Source Framework for Advancing Large Language Models in Healthcare [paper][project][huggingface]

- 🔥 Me LLaMA: Foundation Large Language Models for Medical Applications [paper][code]

- BioMedGPT: Open Multimodal Generative Pre-trained Transformer for BioMedicine [paper][code]

- Continuous Training and Fine-tuning for Domain-Specific Language Models in Medical Question Answering [paper]

- PMC-LLaMA: Towards Building Open-source Language Models for Medicine [paper][code]

- AF Adapter: Continual Pretraining for Building Chinese Biomedical Language Model [paper]

- Continual Domain-Tuning for Pretrained Language Models [paper]

- HuatuoGPT-II, One-stage Training for Medical Adaption of LLMs [paper][code]

- 🔥 Construction of Domain-specified Japanese Large Language Model for Finance through Continual Pre-training [paper]

- 🔥 Pretraining and Updating Language- and Domain-specific Large Language Model: A Case Study in Japanese Business Domain [paper][huggingface]

- BBT-Fin: Comprehensive Construction of Chinese Financial Domain Pre-trained Language Model, Corpus and Benchmark [paper][code]

- CFGPT: Chinese Financial Assistant with Large Language Model [paper][code]

- Efficient Continual Pre-training for Building Domain Specific Large Language Models [paper]

- WeaverBird: Empowering Financial Decision-Making with Large Language Model, Knowledge Base, and Search Engine [paper][code][huggingface][demo]

- XuanYuan 2.0: A Large Chinese Financial Chat Model with Hundreds of Billions Parameters [paper][huggingface]

- ⭐ PRESTO: Progressive Pretraining Enhances Synthetic Chemistry Outcomes [paper][code]

- 🔥 ClimateGPT: Towards AI Synthesizing Interdisciplinary Research on Climate Change [paper][huggingface]

- AstroLLaMA: Towards Specialized Foundation Models in Astronomy [paper]

- OceanGPT: A Large Language Model for Ocean Science Tasks [paper][code]

- K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization [paper][code][huggingface]

- MarineGPT: Unlocking Secrets of "Ocean" to the Public [paper][code]

- GeoGalactica: A Scientific Large Language Model in Geoscience [paper][code][huggingface]

- Llemma: An Open Language Model For Mathematics [paper][code][huggingface]

- PLLaMa: An Open-source Large Language Model for Plant Science [paper][code][huggingface]

- CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis [paper][code][huggingface]

- Code Needs Comments: Enhancing Code LLMs with Comment Augmentation [code]

- StarCoder: may the source be with you! [paper][code]

- DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence [paper][code][huggingface]

- IRCoder: Intermediate Representations Make Language Models Robust Multilingual Code Generators [paper][code]

- Code Llama: Open Foundation Models for Code [paper][code]

- ⭐ InstructionCP: A fast approach to transfer Large Language Models into target language [paper]

- 🔥 Continual Pre-Training for Cross-Lingual LLM Adaptation: Enhancing Japanese Language Capabilities [paper]

- 🔥 Sailor: Open Language Models for South-East Asia [paper][code]

- 🔥 Aurora-M: The First Open Source Multilingual Language Model Red-teamed according to the U.S. Executive Order [paper][huggingface]

- LLaMA Pro: Progressive LLaMA with Block Expansion [paper][code][huggingface]

- ECONET: Effective Continual Pretraining of Language Models for Event Temporal Reasoning [paper][code]

- Pre-training Text-to-Text Transformers for Concept-centric Common Sense [paper][code][project]

- Don't Stop Pretraining: Adapt Language Models to Domains and Tasks (ACL 2020) [paper][code]

- EcomGPT-CT: Continual Pre-training of E-commerce Large Language Models with Semi-structured Data [paper]

- Achieving Forgetting Prevention and Knowledge Transfer in Continual Learning (NeurIPS 2021) [paper][code]

- Can BERT Refrain from Forgetting on Sequential Tasks? A Probing Study (ICLR 2023) [paper][code]

- CIRCLE: Continual Repair across Programming Languages (ISSTA 2022) [paper]

- ConPET: Continual Parameter-Efficient Tuning for Large Language Models [paper][code]

- Enhancing Continual Learning with Global Prototypes: Counteracting Negative Representation Drift [paper]

- Investigating Forgetting in Pre-Trained Representations Through Continual Learning [paper]

- Learn or Recall? Revisiting Incremental Learning with Pre-trained Language Models [paper][code]

- LFPT5: A Unified Framework for Lifelong Few-shot Language Learning Based on Prompt Tuning of T5 (ICLR 2022) [paper][code]

- On the Usage of Continual Learning for Out-of-Distribution Generalization in Pre-trained Language Models of Code [paper]

- Overcoming Catastrophic Forgetting in Massively Multilingual Continual Learning (ACL 2023 Findings) [paper]

- Parameterizing Context: Unleashing the Power of Parameter-Efficient Fine-Tuning and In-Context Tuning for Continual Table Semantic Parsing (NeurIPS 2023) [paper][code]

- Fine-tuned Language Models are Continual Learners [paper][code]

- TRACE: A Comprehensive Benchmark for Continual Learning in Large Language Models [paper][code]

- Large-scale Lifelong Learning of In-context Instructions and How to Tackle It [paper]

- CITB: A Benchmark for Continual Instruction Tuning [paper][code]

- Mitigating Catastrophic Forgetting in Large Language Models with Self-Synthesized Rehearsal [paper]

- Don't Half-listen: Capturing Key-part Information in Continual Instruction Tuning [paper]

- ConTinTin: Continual Learning from Task Instructions [paper]

- Orthogonal Subspace Learning for Language Model Continual Learning [paper][code]

- SAPT: A Shared Attention Framework for Parameter-Efficient Continual Learning of Large Language Models [paper]

- InsCL: A Data-efficient Continual Learning Paradigm for Fine-tuning Large Language Models with Instructions [paper]

- 🔥 WISE: Rethinking the Knowledge Memory for Lifelong Model Editing of Large Language Models [paper][code]

- Aging with GRACE: Lifelong Model Editing with Discrete Key-Value Adaptors [paper][code]

- On Continual Model Refinement in Out-of-Distribution Data Streams [paper][code][project]

- MELO: Enhancing Model Editing with Neuron-Indexed Dynamic LoRA [paper][code]

- Larimar: Large Language Models with Episodic Memory Control [paper]

- WilKE: Wise-Layer Knowledge Editor for Lifelong Knowledge Editing [paper]

- ⭐ Online Merging Optimizers for Boosting Rewards and Mitigating Tax in Alignment [paper][code]

- Alpaca: A Strong, Replicable Instruction-Following Model [project] [code]

- Self-training Improves Pre-training for Few-shot Learning in Task-oriented Dialog Systems [paper] [code]

- Training language models to follow instructions with human feedback (NeurIPS 2022) [paper]

- Direct preference optimization: Your language model is secretly a reward model (NeurIPS 2023) [paper]

- COPF: Continual Learning Human Preference through Optimal Policy Fitting [paper]

- CPPO: Continual Learning for Reinforcement Learning with Human Feedback (ICLR 2024) [paper]

- A Moral Imperative: The Need for Continual Superalignment of Large Language Models [paper]

- Mitigating the Alignment Tax of RLHF [paper]

- ⭐ CLIP model is an Efficient Online Lifelong Learner [paper]

- 🔥 CLAP4CLIP: Continual Learning with Probabilistic Finetuning for Vision-Language Models [paper][code]

- 🔥 Boosting Continual Learning of Vision-Language Models via Mixture-of-Experts Adapters (CVPR 2024) [paper][code]

- 🔥 CoLeCLIP: Open-Domain Continual Learning via Joint Task Prompt and Vocabulary Learning [paper]

- 🔥 Select and Distill: Selective Dual-Teacher Knowledge Transfer for Continual Learning on Vision-Language Models [paper]

- Investigating the Catastrophic Forgetting in Multimodal Large Language Models (PMLR 2024) [paper]

- MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models [paper] [code]

- Visual Instruction Tuning (NeurIPS 2023, Oral) [paper] [code]

- Continual Instruction Tuning for Large Multimodal Models [paper]

- CoIN: A Benchmark of Continual Instruction tuNing for Multimodel Large Language Model [paper] [code]

- Model Tailor: Mitigating Catastrophic Forgetting in Multi-modal Large Language Models [paper]

- Reconstruct before Query: Continual Missing Modality Learning with Decomposed Prompt Collaboration [paper] [code]

- 🔥 Data Mixing Laws: Optimizing Data Mixtures by Predicting Language Modeling Performance [paper][code]

- 🔥 AdapterSwap: Continuous Training of LLMs with Data Removal and Access-Control Guarantees [paper]

- 🔥 COPAL: Continual Pruning in Large Language Generative Models [paper]

- 🔥 HippoRAG: Neurobiologically Inspired Long-Term Memory for Large Language Models [paper][code]

- Reawakening knowledge: Anticipatory recovery from catastrophic interference via structured training [paper][code]

If you find our survey or this collection of papers useful, please consider citing our work:

@misc{shi2024continuallearninglargelanguage,
  title={Continual Learning of Large Language Models: A Comprehensive Survey},
  author={Haizhou Shi and Zihao Xu and Hengyi Wang and Weiyi Qin and Wenyuan Wang and Yibin Wang and Zifeng Wang and Sayna Ebrahimi and Hao Wang},
  year={2024},
  eprint={2404.16789},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2404.16789},
}