This project provides a helper for evaluating your Qanary-based Question Answering (QA) system. In general, it provides a framework for controlling the quality of the QA system's components. The script can be fully customized to check the data flow requirements of your QA system.

Typical QA systems depend on several components. We need to control the quality of all components so that we know:

- if the expected functionality is actually achieved (not just claimed),
- if changes to the QA system improve its quality (rather than decrease it), and
- what needs to be improved next.

Hence, we need automatic end-to-end tests while also evaluating each process step. This is called end-to-end micro-benchmarking. Fortunately, Qanary already provides the interfaces to conveniently control the quality of a QA system.

The quality is evaluated using SPARQL queries to the Qanary triplestore as it contains all relevant information of the QA process for a given question. Hence, we will define questions that will be executed on your QA system and check thereafter if the expected information is contained in the Qanary triplestore.

The quality benchmarking tool is started while providing a directory that contains all configuration files (executed using a Python 3 interpreter):

    python evaluate-qanary-system.py --directory=<DIRECTORYNAME>

Example:

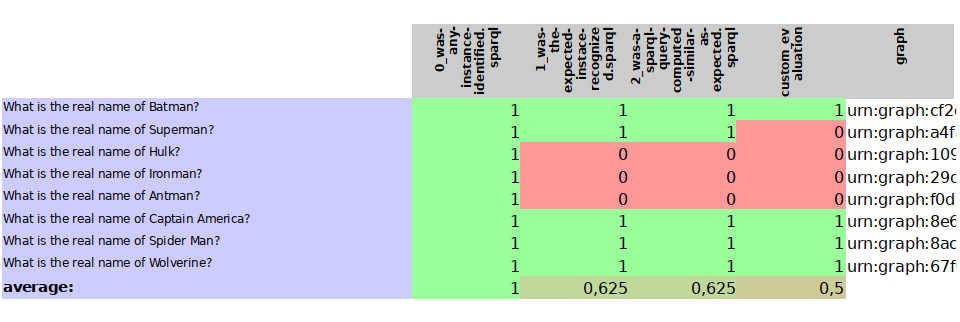

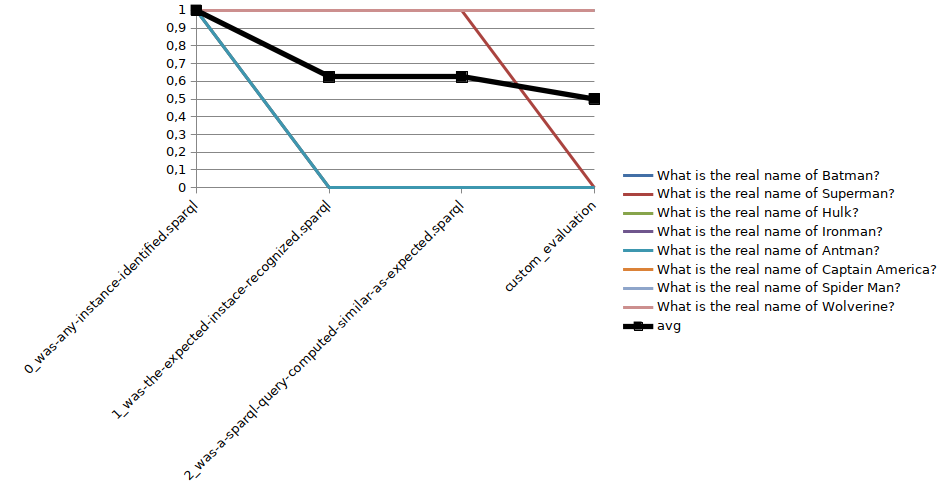

    python evaluate-qanary-system.py --directory=superhero-real-names

The script will create a log file, a JSON file containing all test results, and an XLSX file containing a tabular representation of the test results, including a predefined chart that visualizes the measured quality of your QA system.

    git clone git@github.com:WSE-research/Qanary-quality-assurance.git

or

    git clone https://github.com/WSE-research/Qanary-quality-assurance.git

The test script uses Python 3. Install the Python dependencies using pip:

    pip install -r requirements.txt

The configuration of the tests is defined in the file qanary-test-definition.json.

The structure of the file is as follows:

    {
      "qanary": {
        "system_url": "...",
        "componentlist": [ ... ]
      },
      "validation-sparql-templates": [ ... ],
      "custom-validation": "...",
      "tests": [ ... ]
    }

The qanary section configures the connection data to the Qanary system (see [example](superhero-real-names/qanary-test-definition.json)).
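Before running a benchmark, it can help to confirm that a definition file contains the keys shown in the structure above. The following is a minimal sketch, not part of the project's script: the helper names `find_missing_keys` and `load_definition` are hypothetical, and the key names are taken from the structure above.

```python
import json

# Top-level keys taken from the structure shown above.
REQUIRED_KEYS = ("qanary", "validation-sparql-templates", "custom-validation", "tests")
# Keys expected inside the "qanary" section.
REQUIRED_QANARY_KEYS = ("system_url", "componentlist")


def find_missing_keys(definition):
    """Return the missing keys, e.g. ['tests', 'qanary.system_url']."""
    missing = [key for key in REQUIRED_KEYS if key not in definition]
    qanary = definition.get("qanary")
    if isinstance(qanary, dict):
        missing += [f"qanary.{key}" for key in REQUIRED_QANARY_KEYS if key not in qanary]
    return missing


def load_definition(path):
    """Load a test definition file and fail early if keys are missing (hypothetical helper)."""
    with open(path, encoding="utf-8") as handle:
        definition = json.load(handle)
    missing = find_missing_keys(definition)
    if missing:
        raise ValueError(f"{path}: missing keys: {', '.join(missing)}")
    return definition
```

Failing early on a missing key is a design choice of this sketch; the actual script may handle incomplete files differently.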

The validation-sparql-templates section is a list of filenames, each referring to a file that contains one SPARQL ASK query template.

An ASK query always responds with True or False.

Hence, this is perfectly suited for validating if the Qanary triplestore contains the expected information.

All ASK query templates in the list will be processed for each (later defined) question.

One important issue is the use of a placeholder which is typically required to customize the query for each question.

Any string can be a placeholder, therefore, choose a unique string to prevent problems during the replacing of the placeholders.

A default replacement key is defined: <GRAPHID>.

It is used to inject the name of the current graph in your query template.
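The placeholder mechanism can be pictured as plain string replacement. The sketch below is an illustration under assumptions, not the script's actual code: the function name `fill_template`, the sample template, the placeholder `EXPECTED_NAME`, and the graph name are all hypothetical, and it assumes the graph name is passed including its angle brackets.

```python
def fill_template(template, graphid, replacements):
    """Return an executable query by substituting all placeholders."""
    # The default key <GRAPHID> is replaced by the current graph name.
    query = template.replace("<GRAPHID>", graphid)
    # Question-specific placeholders are replaced one after another.
    for placeholder, value in replacements.items():
        query = query.replace(placeholder, value)
    return query


# Hypothetical template and values, for illustration only.
template = 'ASK { GRAPH <GRAPHID> { ?s ?p "EXPECTED_NAME" } }'
query = fill_template(template, "<urn:graph:example>", {"EXPECTED_NAME": "Bruce Wayne"})
print(query)  # ASK { GRAPH <urn:graph:example> { ?s ?p "Bruce Wayne" } }
```

Because the replacement is purely textual, a placeholder that also occurs elsewhere in the query would be replaced there too, which is why a unique placeholder string matters.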

See the section Step 3. Create ASK query template files for ASK queries.

Typically at the last step of the quality control process, one would like to check if it is possible to actually retrieve the answer from the target data (i.e., the data that should contain the answer to the given question).

For example, you might use your computed answer SPARQL query to retrieve data from the DBpedia knowledge graph.

This step cannot be generalized.

Therefore, the custom-validation property refers to a custom test file.

The file contains the test function named validate that is defined as follows:

    def validate(test, logger, conf_qanary, connection, graphid):

Hence, one can work with the test object (see above), a connection to the Qanary triplestore (Stardog connection), and the graphid, which refers to the graph containing the current process data of the question defined in the test (see below).

The logger writes to the standard log file (see above).

The dictionary conf_qanary contains the data of the section qanary (see above).

You can implement anything you want in this method.

However, the method needs to return True or False to fit into the defined process.

The method validate needs to be available.

If you do not need this custom implementation, then define the method as follows:

    def validate(test, logger, conf_qanary, connection, graphid):
        return False

An example is available here.
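For orientation, a slightly fuller validate function might look like the sketch below. It uses the required signature, logs its decision, and returns True or False. The check itself is only placeholder logic (a non-empty question and non-empty string replacements); a real implementation would typically query the target data instead. It assumes that `test` is a dict shaped like the test objects of the tests section, and it leaves `conf_qanary` and `connection` unused.

```python
import logging


def validate(test, logger, conf_qanary, connection, graphid):
    """Hypothetical custom check; replace the body with a real query against the target data."""
    question = test.get("question", "")
    replacements = test.get("replacements", {})

    if not question.strip():
        logger.warning("graph %s: test has no question text", graphid)
        return False

    # Collect placeholders whose replacement value is not a non-empty string.
    bad_keys = [key for key, value in replacements.items()
                if not isinstance(value, str) or not value]
    if bad_keys:
        logger.warning("graph %s: unusable replacement values for %s", graphid, bad_keys)
        return False

    logger.info("graph %s: custom validation passed for %r", graphid, question)
    return True
```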

This section contains an array of test objects. Each object has the following structure:

    {
      "question": "TEXT",
      "replacements": {
        "KEY0": "VALUE0",
        "KEY1": "VALUE1"
      }
    }

The property question contains the textual question.

The property replacements is an object defining search (placeholder) and replace (new value) structures.

They are applied to all ASK SPARQL queries individually depending on the currently processed question.

Hence, each ASK query template is transformed into an executable ASK query.

For examples see here.

For each test template defined in the section validation-sparql-templates, a file needs to be created. The file needs to contain an ASK SPARQL query (i.e., each query needs to return True or False).

For details on ASK queries see https://www.futurelearn.com/info/courses/linked-data/0/steps/16094 or https://codyburleson.com/blog/sparql-examples-ask. For examples of a real test configuration see here, here and here.

After the execution of the test script, a new directory output is created (if it does not already exist).

It will contain the output files: a log file, a JSON file with all test results, and an XLSX file with a tabular representation of the results.

Every file name contains the timestamp (the datetime when the test was started). If the test is executed several times, the files are therefore not overwritten.
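The idea of timestamped file names can be sketched as follows. The naming pattern, the timestamp format, and the helper name `output_path` are assumptions made for illustration; the script's real file names may look different.

```python
from datetime import datetime
from pathlib import Path


def output_path(output_dir, start_time, extension):
    """Build a file path whose name is derived from the test start time (assumed pattern)."""
    stamp = start_time.strftime("%Y-%m-%d_%H-%M-%S")
    return Path(output_dir) / f"{stamp}.{extension}"


# A fixed start time keeps the example reproducible; a real run would use datetime.now().
start = datetime(2024, 1, 31, 14, 5, 9)
for ext in ("log", "json", "xlsx"):
    print(output_path("output", start, ext).as_posix())
```

Since every run starts at a different time, each run produces a new set of files and earlier results stay available for comparison.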

See the stored exemplary tests for the output structure.

See the folder superhero-real-names for a complete example.

The script is currently designed for textual questions only.

The script evaluates one scenario only (e.g., one type of question). Typically, a project will have many scenarios. In this case, define several directories, each containing the definitions for one additional scenario.
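Running several scenarios then amounts to calling the script once per directory. The wrapper below is a minimal sketch: the function names are hypothetical, only the `--directory` option documented above is used, and whether the script's exit code reflects test failures is not something this sketch assumes.

```python
import subprocess
import sys


def build_command(directory, script="evaluate-qanary-system.py"):
    """Return the command line for one scenario directory."""
    return [sys.executable, script, f"--directory={directory}"]


def run_scenarios(directories):
    """Run the script once per directory and return {directory: exit code}."""
    return {
        directory: subprocess.run(build_command(directory)).returncode
        for directory in directories
    }
```

For example, `run_scenarios(["superhero-real-names", "another-scenario"])` would start two runs in sequence, where `another-scenario` stands for a hypothetical second directory.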

Feel free to fork and modify the script to meet your requirements.