WHC2021SIC Project Template

Project Template for the IEEE World Haptics Conference 2021 Student Innovation Challenge

https://2021.worldhaptics.org/sic/

Authors

Tactile Mirror

Team

Diar Abdlkarim

Diar is a PhD student at the University of Birmingham working on upper limb rehabilitation following nerve injury using virtual reality training.

Find more information on his website.

Davide Deflorio

Davide is a PhD student at the University of Birmingham working on sensory mechanisms of tactile perception with psychophysics and computational modeling.

Advisor

Massimiliano Di Luca

Max is a Senior Lecturer at the University of Birmingham in the Centre for Computational Neuroscience and Cognitive Robotics. He performs both fundamental and applied research to investigate how humans process multisensory stimuli, with an emphasis on understanding the temporal, dynamic, and interactive nature of perception. He uses psychophysical experiments and neuroimaging methods to capture how the brain employs multiple sources of sensory information and combines them with assumptions, predictions, and information obtained through active exploration.

Find more information on their website.

Chairs

Christian Frisson

Christian Frisson is an associate researcher at the Input Devices and Music Interaction Laboratory (IDMIL) (2021), previously postdoctoral researcher at McGill University with the IDMIL (2019-2020), at the University of Calgary with the Interactions Lab (2017-2018) and at Inria in France with the Mjolnir team (2016-2017). He obtained his PhD at the University of Mons, numediart Institute, in Belgium (2015); his MSc in “Art, Science, Technology” from Institut National Polytechnique de Grenoble with the Association for the Creation and Research on Expression Tools (ACROE), in France (2006); his Masters in Electrical (Metrology) and Mechanical (Acoustics) Engineering from ENSIM in Le Mans, France (2005). Christian Frisson is a researcher in Human-Computer Interaction, with expertise in Information Visualization, Multimedia Information Retrieval, and Tangible/Haptic Interaction. Christian creates and evaluates user interfaces for manipulating multimedia data. Christian favors obtaining replicable, reusable and sustainable results through open-source software, open hardware and open datasets. With his co-authors, Christian obtained the IEEE VIS 2019 Infovis Best Paper award and was selected among 4 finalists for IEEE Haptics Symposium 2020 Most Promising WIP.

Find more information on his website.

Jun Nishida

Jun Nishida is currently a Postdoctoral Fellow at the University of Chicago and a Research Fellow at the Japan Society for the Promotion of Science (JSPS PDRA). Previous positions: JSPS Research Fellow (DC1), Project Researcher at the Japanese Ministry of Internal Affairs and Communications SCOPE Innovation Program, and PhD Fellow at Microsoft Research Asia. Jun graduated from the Empowerment Informatics Program at the University of Tsukuba, Japan.

I’m a postdoctoral fellow at the University of Chicago. I received my PhD in Human Informatics at the University of Tsukuba, Japan in 2019. I am interested in designing experiences in which all people can maximize and share their physical and cognitive capabilities to support each other. I explore the possibility of this interaction in the fields of rehabilitation, education, and design. To this end, I design wearable cybernic interfaces which share one’s embodied and social perspectives among people by means of electrical muscle stimulation, exoskeletons, and virtual/augmented reality systems. I have received more than 40 awards, including the Microsoft Research Asia Fellowship Award, national grants, and three University Presidential Awards. I serve as a reviewer for ACM SIGCHI, SIGGRAPH, UIST, TEI, IEEE VR, and HRI.

Find more information on their website.

Heather Culbertson

Heather Culbertson is a Gabilan Assistant Professor of Computer Science at the University of Southern California. Her research focuses on the design and control of haptic devices and rendering systems, human-robot interaction, and virtual reality. In particular, she is interested in creating haptic interactions that are natural and realistically mimic the touch sensations experienced during interactions with the physical world. Previously, she was a research scientist in the Department of Mechanical Engineering at Stanford University where she worked in the Collaborative Haptics and Robotics in Medicine (CHARM) Lab. She received her PhD in the Department of Mechanical Engineering and Applied Mechanics (MEAM) at the University of Pennsylvania in 2015 working in the Haptics Group, part of the General Robotics, Automation, Sensing and Perception (GRASP) Laboratory. She completed a Masters in MEAM at the University of Pennsylvania in 2013, and earned a BS degree in mechanical engineering at the University of Nevada, Reno in 2010. She is currently serving as the Vice-Chair for Information Dissemination for the IEEE Technical Committee on Haptics. Her awards include a citation for meritorious service as a reviewer for the IEEE Transactions on Haptics, Best Paper at UIST 2017, and the Best Hands-On Demonstration Award at IEEE World Haptics 2013.

Find more information on her website.

Contents

Generated with npm run toc, see INSTALL.md.

Once this documentation becomes very comprehensive, the main file can be split into multiple files that it then references.

Abstract

Introduction

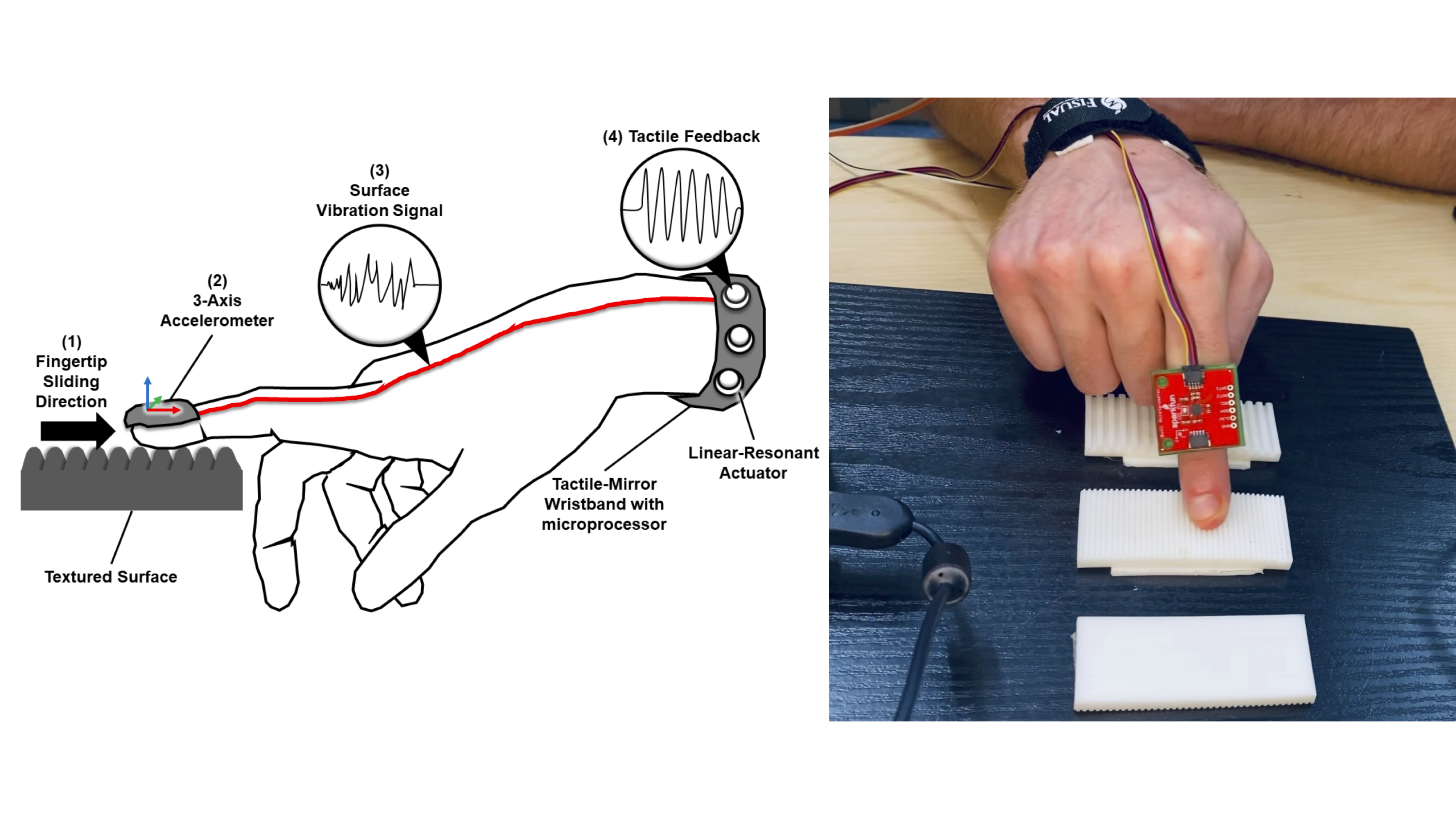

Nerve injuries (e.g. carpal tunnel syndrome and traumatic median nerve section) affect the innervation of the fingertip, with a detrimental effect on tactile perception. Affected patients, however, can still perform roughness discrimination tasks by sensing the vibrations that (despite being attenuated and distorted) propagate from the finger to the wrist. In this research, we built a wearable device that relays the vibration at the fingertip to the wrist, with the goal of improving perceptual skills related to vibrotactile roughness discrimination in affected patients. We mounted an accelerometer on the dorsal side of the distal interphalangeal joint of the index finger to capture vibrations generated by sliding the fingertip on a surface. We mapped vibrations experienced by the fingertip to the wrist via an optimised function to generate frequencies in the range of 100 Hz to 800 Hz, which best activate the rapidly adapting mechanoreceptors in the wrist. The signal is sent to two actuators positioned on the wrist. The actuators activate in a temporal order according to the direction of finger movement on the surface. We tested our device with three participants to fine-tune the mapping parameters for three different surface gratings with smooth, fine and coarse spatial periods.
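The mapping and actuator ordering described above can be illustrated with a minimal sketch. The optimised function used in the project is not documented here, so the code below swaps in a plain linear rescale with clamping as a stand-in. The function names, the input range, and the rule that a rightward movement fires the left actuator first are all assumptions made for illustration; only the 100 Hz to 800 Hz output range comes from the text.

```python
# Output range stated in the introduction.
F_MIN_HZ = 100.0
F_MAX_HZ = 800.0


def map_to_wrist_frequency(value, in_min, in_max):
    """Linearly rescale `value` from [in_min, in_max] to [F_MIN_HZ, F_MAX_HZ].

    Hypothetical stand-in for the project's optimised mapping function.
    Inputs outside the range are clamped to its ends.
    """
    if in_max <= in_min:
        raise ValueError("in_max must be greater than in_min")
    level = (value - in_min) / (in_max - in_min)
    level = min(max(level, 0.0), 1.0)  # clamp to [0, 1]
    return F_MIN_HZ + level * (F_MAX_HZ - F_MIN_HZ)


def actuator_order(direction):
    """Return the firing order of the two wrist actuators.

    `direction` is a signed number: positive means the finger moves to the
    right, negative to the left (an assumed convention). The first actuator
    in the returned tuple is driven first.
    """
    if direction >= 0:
        return ("left", "right")
    return ("right", "left")
```

For example, with an assumed input range of 0 to 2, `map_to_wrist_frequency(1.0, 0.0, 2.0)` lands in the middle of the output band, and `actuator_order(-1.0)` drives the right actuator before the left one.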

Documentation

Hardware

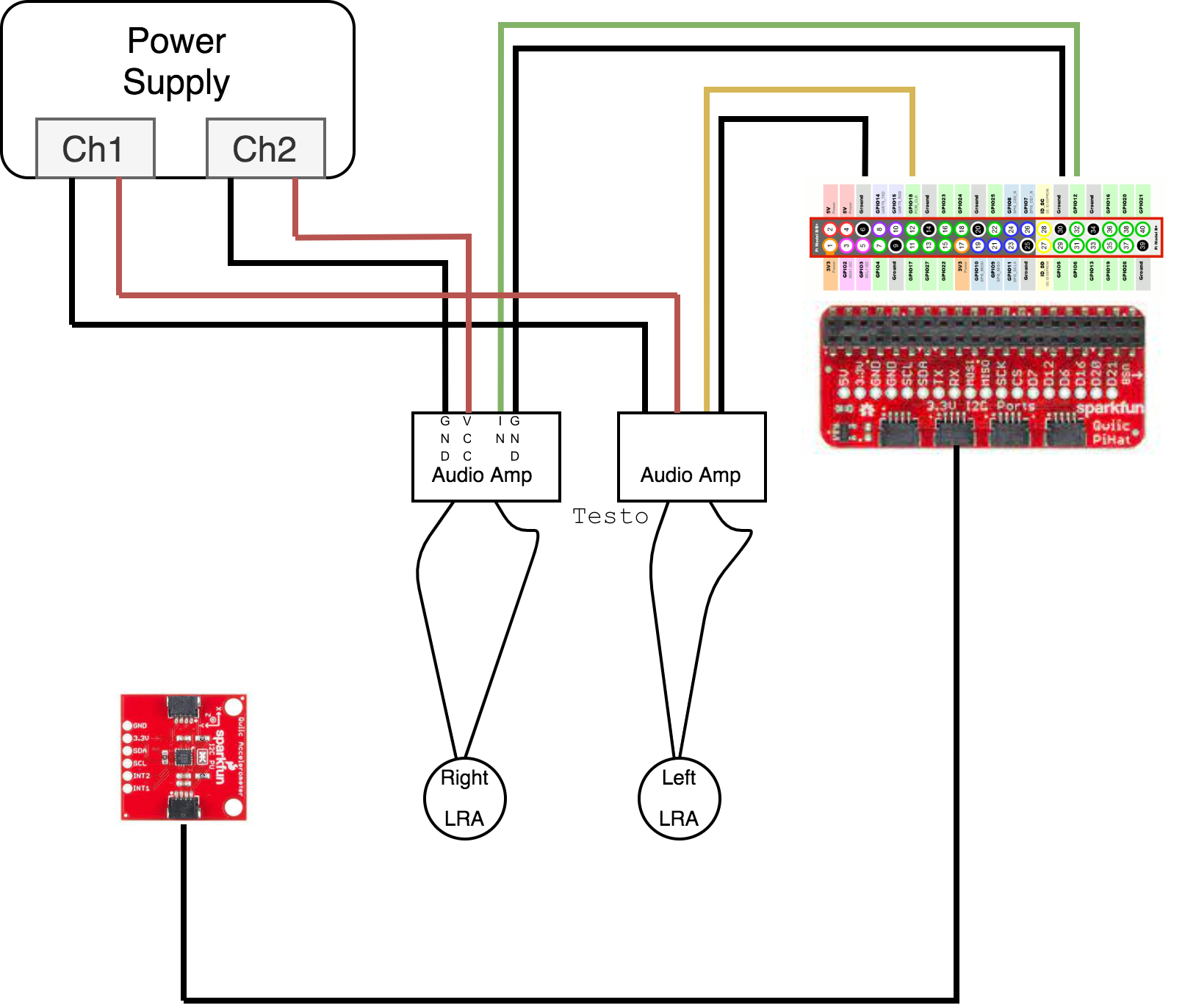

- Raspberry Pi 4 x 1

- Qwiic Hat for Raspberry Pi x 1

- MMA 8452Q Accelerometer x 1

- LRAs x 2

- TDA2030A Audio Amplifier module x 2 (other 18 W audio amplifier modules will work as well)

- 2-channel power supply (20 W per channel)

Sensors wiring

- Connect the piHat to Raspberry Pi

- Connect the accelerometer to the piHat with Qwiic cable

- Connect the audio amplifiers to the power supply (5 or 12 V)

- Connect the input of each audio amplifier to a GPIO pin and a GND pin on the Raspberry Pi: the amplifier for the left LRA is connected to pin 12, the amplifier for the right LRA to pin 32

- Connect the LRAs to the amplifier outputs

Made with drawio-desktop (online version: diagrams.net).
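Once the accelerometer is connected over Qwiic (I2C), reading it might look like the sketch below. This is not taken from the project's scripts. It assumes the sensor answers at I2C address 0x1D (the other possible address is 0x1C), that it runs in its default ±2 g range, and that `bus` is any object offering the `read_byte_data`, `write_byte_data` and `read_i2c_block_data` methods that smbus2's `SMBus` provides. The helper names are hypothetical.

```python
MMA8452Q_ADDRESS = 0x1D  # assumption: sensor address with SA0 high
REG_OUT_X_MSB = 0x01     # first of six output bytes (X, Y, Z as MSB/LSB pairs)
REG_CTRL_REG1 = 0x2A     # bit 0 switches between standby and active mode
FULL_SCALE_G = 2.0       # assumption: default +/-2 g range


def counts_to_g(msb, lsb, full_scale_g=FULL_SCALE_G):
    """Convert one 12-bit, left-justified, two's-complement sample to g."""
    raw = ((msb << 8) | lsb) >> 4  # drop the 4 unused low bits
    if raw >= 2048:                # negative values wrap above 2047
        raw -= 4096
    return raw * full_scale_g / 2048.0


def set_active(bus, address=MMA8452Q_ADDRESS):
    """Put the sensor into active mode so that it starts sampling."""
    ctrl = bus.read_byte_data(address, REG_CTRL_REG1)
    bus.write_byte_data(address, REG_CTRL_REG1, ctrl | 0x01)


def read_acceleration(bus, address=MMA8452Q_ADDRESS):
    """Read X, Y and Z in one block transfer and return them in g."""
    data = bus.read_i2c_block_data(address, REG_OUT_X_MSB, 6)
    return tuple(counts_to_g(data[i], data[i + 1]) for i in (0, 2, 4))
```

On the Raspberry Pi, `bus` would typically be created with `smbus2.SMBus(1)`, followed by one call to `set_active(bus)` and then repeated calls to `read_acceleration(bus)`.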

Software

Libraries to install:

smbus2 numpy (socket and struct are also used, but they are part of the Python standard library and need no installation)

Running instructions:

(The software is written in Python 3)

- Open file ‘mainRead.py’ in Thonny IDE (or any other IDE)

- Run ‘mainRead.py’

- Open terminal

- Go to the folder of ‘mainWrite.py’

- Type ‘python3 mainWrite.py’ and press enter to run ‘mainWrite.py’
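This README does not describe how ‘mainRead.py’ and ‘mainWrite.py’ exchange data. Since `socket` and `struct` appear among the libraries, one plausible arrangement is that samples are packed into bytes and passed between the two processes over a local socket. The sketch below illustrates that idea only; the packet layout, the use of UDP, the host, the port, and all function names are assumptions rather than the project's actual protocol.

```python
import socket
import struct

SAMPLE_FORMAT = "<3f"           # hypothetical layout: x, y, z as little-endian float32
HOST, PORT = "127.0.0.1", 5005  # hypothetical local endpoint


def pack_sample(x, y, z):
    """Serialise one acceleration sample into 12 bytes."""
    return struct.pack(SAMPLE_FORMAT, x, y, z)


def unpack_sample(payload):
    """Recover the (x, y, z) tuple from a packed sample."""
    return struct.unpack(SAMPLE_FORMAT, payload)


def make_receiver(address=(HOST, PORT)):
    """Create a UDP socket bound to `address` (the receiving side)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(address)
    return sock


def send_sample(sock, sample, address=(HOST, PORT)):
    """Send one (x, y, z) sample as a single datagram."""
    sock.sendto(pack_sample(*sample), address)


def receive_sample(sock):
    """Block until one datagram arrives and decode it."""
    payload, _sender = sock.recvfrom(struct.calcsize(SAMPLE_FORMAT))
    return unpack_sample(payload)
```

In such a setup the receiving process would call `make_receiver()` before the sending process starts calling `send_sample()`, which matches the order of the steps above, where one script is started before the other.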

Acknowledgements

SIC chairs would like to thank Evan Pezent, Zane A. Zook and Marcia O'Malley from MAHI Lab at Rice University for having distributed to them 2 Syntacts kits for the IROS 2020 Intro to Haptics for XR Tutorial. SIC co-chair Christian Frisson would like to thank Edu Meneses and Johnty Wang from IDMIL at McGill University for their recommendations on Raspberry Pi hats for audio and sensors.

License

This documentation is released under the terms of the Creative Commons Attribution Share Alike 4.0 International license (see LICENSE.txt).