A strong baseline for body part-based person re-identification

🔥 Our new work on Keypoint Promptable ReID was accepted at ECCV24 🔥

Body Part-Based Representation Learning for Occluded Person Re-Identification, WACV23

Vladimir Somers, Christophe De Vleeschouwer, Alexandre Alahi

- [2024.08.23] 🚀🔥 Our new work on Keypoint Promptable ReID was accepted to ECCV24, full codebase available here.

- [2023.09.20] New paper and big update coming soon 🚀 ...

- [2023.07.26] The Python script from @samihormi to generate human parsing labels based on PifPaf and MaskRCNN has been released; see the "Generate human parsing labels" section below. This script differs from the one used by the authors (especially when an image contains multiple pedestrians), so the resulting human parsing labels will not be exactly the same.

- [2023.06.28] Please find a non-official script to generate human parsing labels from PifPaf and MaskRCNN in this Pull Request. The PR will be merged soon.

- [2022.12.02] We release the first version of our codebase. Please update frequently as we will add more documentation during the next few weeks.

We plan to extend BPBReID in the near future. Star the repository to stay updated on upcoming changes:

- part-based video/tracklet reid

- part-based reid for multi-object tracking

- ...

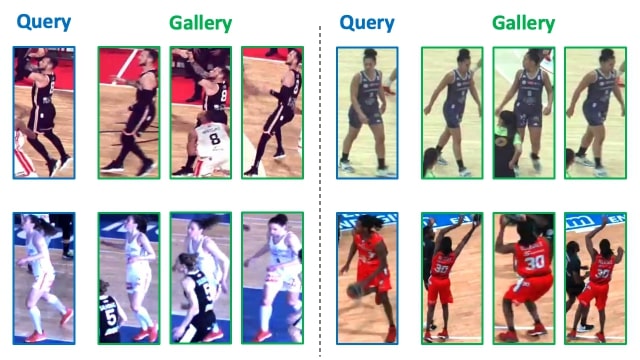

Welcome to the official repository for our WACV23 paper "Body Part-Based Representation Learning for Occluded Person Re-Identification". In this work, we propose BPBReID, a part-based method for person re-identification that uses body part feature representations to compute the similarity between two samples. As illustrated in the figure below, part-based ReID methods output multiple features per input sample, i.e. one for each part, whereas standard global methods output only a single feature. Compared to global methods, part-based ones come with some advantages:

- They achieve explicit appearance feature alignment for better ReID accuracy.

- They are robust to occlusions, since only mutually visible parts are used when comparing two samples.

Our model BPBreID uses pseudo human parsing labels at training time to learn an attention mechanism. This attention mechanism has K branches that pool the global spatial feature map into K body part-based embeddings. Visibility scores are computed for each part based on the attention map activations. At test time, no human parsing labels are required. The final similarity score between two samples is computed as the average distance over all mutually visible part-based embeddings. Please refer to our paper for more information.
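To make the test-time comparison described above more concrete, here is a minimal sketch of averaging distances over mutually visible parts. It is not the code used in this repository: the function name, the choice of Euclidean distance, the use of boolean visibility flags, and the `None` fallback when no part is shared are all assumptions made for illustration.

```python
import math


def part_based_distance(query_parts, gallery_parts, query_vis, gallery_vis):
    """Average Euclidean distance over parts visible in both samples.

    query_parts / gallery_parts: lists of K embeddings (lists of floats).
    query_vis / gallery_vis: lists of K booleans (assumed part visibility).
    Returns None when the two samples share no visible part (an assumption).
    """
    distances = []
    for q, g, vq, vg in zip(query_parts, gallery_parts, query_vis, gallery_vis):
        if vq and vg:  # keep only mutually visible parts
            distances.append(math.dist(q, g))
    if not distances:
        return None
    return sum(distances) / len(distances)


# Toy example with K = 3 parts and 2-dimensional embeddings.
query = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
gallery = [[3.0, 4.0], [1.0, 1.0], [0.0, 0.0]]
query_vis = [True, True, False]  # third part occluded in the query
gallery_vis = [True, True, True]

print(part_based_distance(query, gallery, query_vis, gallery_vis))  # prints 2.5
```

The occluded third part is ignored, so the large distance between its embeddings does not affect the result.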

In this repository, we propose a framework and a strong baseline to support further research on part-based ReID methods. Our code is based on the popular Torchreid framework for person re-identification. In this codebase, we provide several adaptations to the original framework to support part-based ReID methods:

- The ImagePartBasedEngine to train/test part-based models and compute the query-gallery distance matrix using multiple features per test sample, with support for visibility scores.

- The fully configurable GiLt loss to selectively apply id/triplet loss on holistic (global) and part-based features.

- The BodyPartAttentionLoss to train the attention mechanism.

- The BPBreID part-based model to compute part-based features with support for body-part learnable attention, fixed attention heatmaps from an external model, PCB-like horizontal stripes, etc.

- The Albumentations library for data augmentation, with support for external heatmap/mask transforms.

- Support for Weights & Biases and other logging tools in the Logger class.

- An EngineState class to keep track of training epoch, etc.

- A new ranking visualization tool to display part heatmaps, local distance for each part and other metrics.

- For more information about all available configuration and parameters, please have a look at the default config file.

You can also have a look at the original Torchreid README for additional information, such as documentation, how-to instructions, etc. Be aware that some of the original Torchreid functionality and models might be broken (for example, we don't support video re-id yet).

Make sure conda is installed.

# clone this repository

git clone https://github.com/VlSomers/bpbreid

# create conda environment

cd bpbreid/ # enter project folder

conda create --name bpbreid python=3.10

conda activate bpbreid

# install dependencies

# make sure `which python` and `which pip` point to the correct path

pip install -r requirements.txt

# install torch and torchvision (select the proper cuda version to suit your machine)

conda install pytorch torchvision cudatoolkit=9.0 -c pytorch

# install torchreid (don't need to re-build it if you modify the source code)

python setup.py develop

You can download the human parsing labels on GDrive.

These labels were generated using the PifPaf pose estimation model and then filtered using segmentation masks from Mask-RCNN.

We provide the labels for five datasets: Market-1501, DukeMTMC-reID, Occluded-Duke, Occluded-ReID and P-DukeMTMC.

After downloading, unzip the file and put the masks folder under the corresponding dataset directory.

For instance, Market-1501 should look like this:

Market-1501-v15.09.15

├── bounding_box_test

├── bounding_box_train

├── masks

│ └── pifpaf_maskrcnn_filtering

│ ├── bounding_box_test

│ ├── bounding_box_train

│ └── query

└── query

Also make sure to set the data.root config option to your dataset root directory path, i.e., all your dataset folders (Market-1501-v15.09.15, DukeMTMC-reID, Occluded_Duke, P-DukeMTMC-reID, Occluded_REID) should be under this path.
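Before launching a run, it can help to check that the masks folder sits where the layout above expects it. The helper below is only a sketch: `missing_mask_dirs` is a hypothetical name, not part of this codebase, and the list of sub-folders is taken from the Market-1501 example, so other datasets may need different names.

```python
import tempfile
from pathlib import Path

# Sub-folders shown in the Market-1501 layout above (assumed for other datasets).
SPLITS = ("bounding_box_test", "bounding_box_train", "query")


def missing_mask_dirs(dataset_dir, masks_name="pifpaf_maskrcnn_filtering"):
    """Return the expected mask folders that do not exist under dataset_dir."""
    masks_root = Path(dataset_dir) / "masks" / masks_name
    return [str(masks_root / split) for split in SPLITS
            if not (masks_root / split).is_dir()]


# Demo on a temporary directory where only the query masks are present.
with tempfile.TemporaryDirectory() as root:
    dataset = Path(root) / "Market-1501-v15.09.15"
    (dataset / "masks" / "pifpaf_maskrcnn_filtering" / "query").mkdir(parents=True)
    missing_names = [Path(p).name for p in missing_mask_dirs(dataset)]

print(missing_names)  # prints ['bounding_box_test', 'bounding_box_train']
```

On a real setup, call `missing_mask_dirs` with the dataset folder located under your data.root path; an empty list means every expected folder was found.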

You can create human parsing labels for your own dataset using the following command:

conda activate bpbreid

python scripts/get_labels --source [Dataset Path]

The labels will be saved under the source directory in the masks folder as per the code convention.

We also provide some state-of-the-art pre-trained models based on the HRNet-W32 backbone.

You can put the downloaded weights under a 'pretrained_models/' directory or specify the path to the pre-trained weights using the model.load_weights parameter in the yaml config.

The configuration used to obtain the pre-trained weights is also saved within the .pth file: make sure to set model.load_config to True so that the parameters under the model.bpbreid part of the configuration tree will be loaded from this file.

You can test the above downloaded models using the following command:

conda activate bpbreid

python scripts/main.py --config-file configs/bpbreid/bpbreid_<target_dataset>_test.yaml

For instance, for the Market-1501 dataset:

conda activate bpbreid

python scripts/main.py --config-file configs/bpbreid/bpbreid_market1501_test.yaml

Configuration files for other datasets are available under configs/bpbreid/.

Make sure the model.load_weights in these yaml config files points to the pre-trained weights you just downloaded.

Training configs for five datasets (Market-1501, DukeMTMC-reID, Occluded-Duke, Occluded-ReID and P-DukeMTMC) are provided in the configs/bpbreid/ folder.

A training procedure can be launched with:

conda activate bpbreid

python ./scripts/main.py --config-file configs/bpbreid/bpbreid_<target_dataset>_train.yaml

For instance, for the Occluded-Duke dataset:

conda activate bpbreid

python scripts/main.py --config-file configs/bpbreid/bpbreid_occ_duke_train.yaml

Make sure to download and install the human parsing labels for your training dataset before running this command.
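The test and training commands in this README follow the same naming pattern, so they can be assembled programmatically, for example when scripting runs over several datasets. The sketch below assumes that pattern holds for every config; `build_command` is a hypothetical helper, and only the `market1501` and `occ_duke` dataset keys appear in this README.

```python
import shlex


def build_command(dataset, mode):
    """Build the main.py command line for a dataset key and a mode.

    dataset: config key such as 'market1501' or 'occ_duke'.
    mode: 'train' or 'test'.
    """
    if mode not in ("train", "test"):
        raise ValueError(f"mode must be 'train' or 'test', got {mode!r}")
    config = f"configs/bpbreid/bpbreid_{dataset}_{mode}.yaml"
    return ["python", "scripts/main.py", "--config-file", config]


command = build_command("occ_duke", "train")
# prints: python scripts/main.py --config-file configs/bpbreid/bpbreid_occ_duke_train.yaml
print(shlex.join(command))
```

The returned list can be passed to `subprocess.run(command, check=True)` from the project folder, with the bpbreid conda environment activated.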

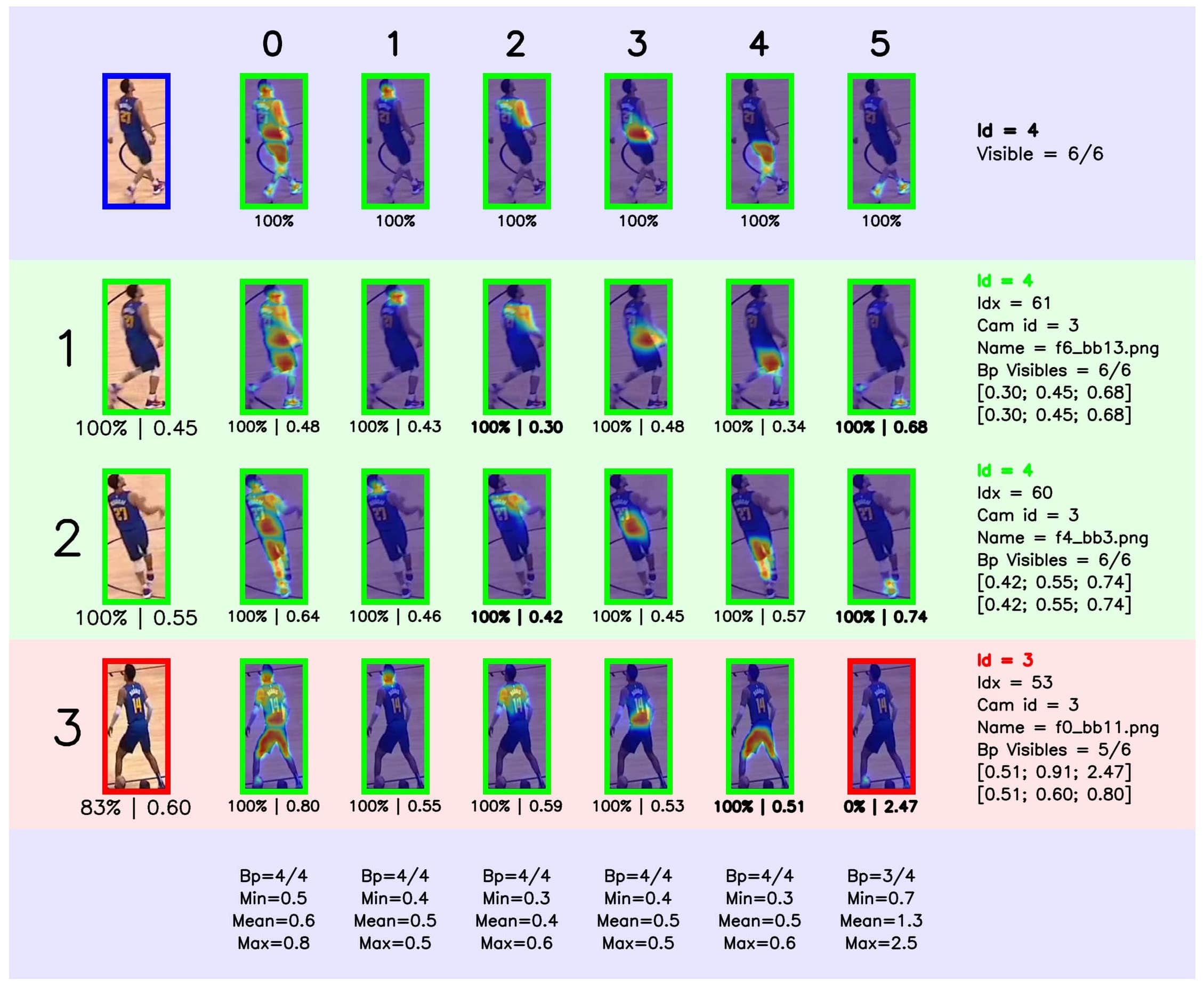

The ranking visualization tool can be activated by setting the test.visrank config to True.

As illustrated below, this tool displays the Top-K ranked samples as rows (K can be set via test.visrank_topk). The first row, with a blue background, is the query, and the following green/red rows indicate correct/incorrect matches.

The attention maps for each test embedding (foreground, parts, etc.) are displayed in the row.

An attention map has a green border when the corresponding part is visible and a red border when it is not.

The first number under each attention map indicates the visibility score and the second number indicates the distance of the embedding to the corresponding query embedding.

The distance under each image in the leftmost column is the global distance of that sample to the query, which is usually computed as the average of all other distances weighted by the visibility score.
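One way to write this weighted average, assuming K embeddings with per-embedding distances d_k to the query and weights w_k derived from the visibility scores (the symbols and the exact form of the weights are illustrative and not taken from the code):

```latex
d_{\text{global}} = \frac{\sum_{k=1}^{K} w_k \, d_k}{\sum_{k=1}^{K} w_k}
```

With binary weights, this reduces to a plain average over the embeddings considered visible.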

If you need more information about the visualization tool, feel free to open an issue.

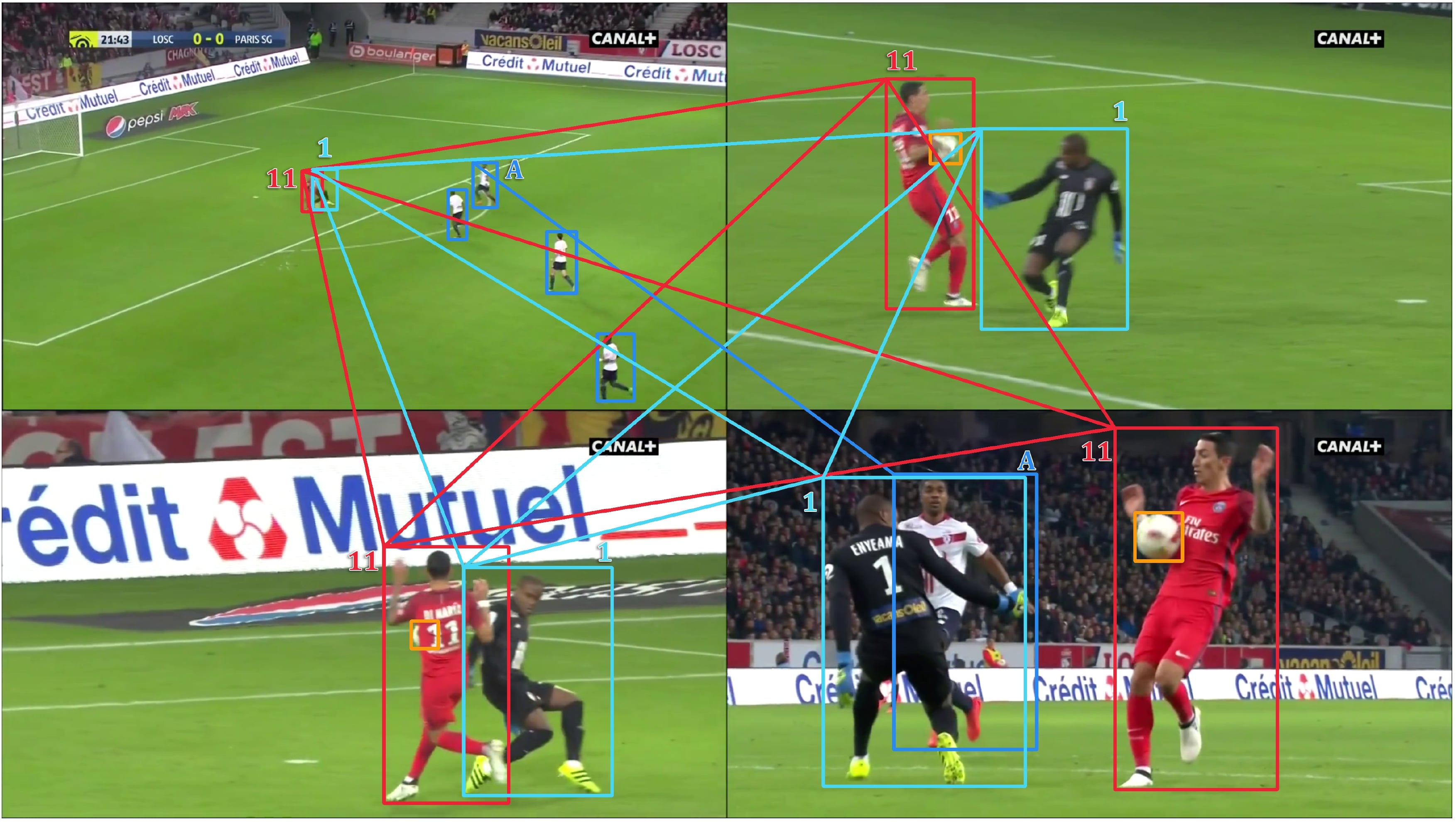

If you are looking for datasets to evaluate your re-identification models, please have a look at our other works on player re-identification for team sport events:

- The SoccerNet Player Re-Identification dataset

- The DeepSportRadar Player Re-Identification dataset

If you have any questions or suggestions, or find any bug or issue with the code, please raise a GitHub issue in this repository. I'll be glad to help you as much as I can! I'll try to update the documentation regularly based on your questions.

If you use this repository for your research or wish to refer to our method BPBReID, please use the following BibTeX entry:

@article{bpbreid,

archivePrefix = {arXiv},

arxivId = {2211.03679},

author = {Somers, Vladimir and {De Vleeschouwer}, Christophe and Alahi, Alexandre},

doi = {10.48550/arxiv.2211.03679},

eprint = {2211.03679},

isbn = {2211.03679v1},

journal = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV23)},

month = {nov},

title = {{Body Part-Based Representation Learning for Occluded Person Re-Identification}},

url = {https://arxiv.org/abs/2211.03679v1 http://arxiv.org/abs/2211.03679},

year = {2023}

}

This codebase is a fork of Torchreid.