Donghyun Kim, Kuniaki Saito, Tae-Hyun Oh, Bryan A. Plummer, Stan Sclaroff, and Kate Saenko

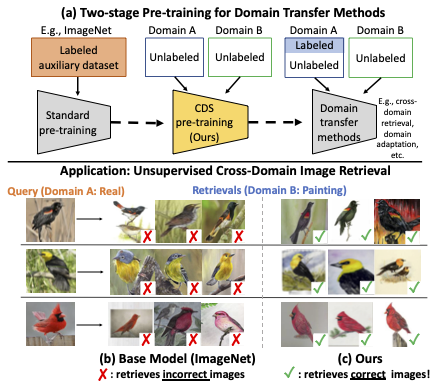

We present Cross-Domain Self-Supervised Pre-Training (CDS), a two-stage pre-training approach that improves the generalization ability of standard single-domain pre-training. This is a PyTorch implementation of CDS, based on Instance Discrimination, CDAN, and MME. The repository currently supports the few-shot domain adaptation (DA) experiments on Office-Home. We will keep updating it to support other experiments.

CDS_pretraining: Our implementation of CDS.

data: Datasets (e.g. Office-Home) used in the paper.

CDAN: Implementation borrowed from CDAN

Python 3.6.9, PyTorch 1.6.0, torchvision 0.7.0.
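To confirm that an environment matches the versions listed above, a small check like the following may help. This is only a sketch and is not part of the repository: the helper names `base_version`, `find_mismatches`, and `installed_versions` are hypothetical, and the check assumes that an exact match on the release number (ignoring local build suffixes such as `+cu101`) is what you want.

```python
import importlib

# Versions taken from the requirements line in this README.
EXPECTED = {"torch": "1.6.0", "torchvision": "0.7.0"}


def base_version(version):
    """Drop a local build suffix such as '+cu101' from a version string."""
    return version.split("+")[0]


def find_mismatches(installed, expected=EXPECTED):
    """Return {package: (installed, expected)} for each package that differs or is missing."""
    mismatches = {}
    for name, want in expected.items():
        have = installed.get(name)
        if have is None or base_version(have) != want:
            mismatches[name] = (have, want)
    return mismatches


def installed_versions(names):
    """Import each package that is available and read its __version__ attribute."""
    found = {}
    for name in names:
        try:
            found[name] = importlib.import_module(name).__version__
        except ImportError:
            pass  # A missing package is reported by find_mismatches.
    return found
```

Calling `find_mismatches(installed_versions(EXPECTED))` returns an empty dictionary when both packages match. Other versions may also work; the list above only records what the code was run with.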

CDS pretraining on Real and Clipart domains in Office-Home.

cd CDS_pretraining

python CDS_pretraining.py --dataset office_home --source Real --target Clipart

cd CDAN

python CDAN_fewshot_DA.py --method CDAN+E --dataset office_home --source Real --target Clipart
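The two commands above can also be chained from a single script. The sketch below is not part of the repository: `build_commands` and `run_pipeline` are hypothetical helpers, and the only flags used are the ones shown in the commands above. It uses `sys.executable` in place of `python` so that both stages run under the current interpreter. How the pretrained weights are handed from the first stage to the second is not described here, so the sketch simply runs the two scripts in order from their own directories.

```python
import os
import subprocess
import sys


def build_commands(source, target, dataset="office_home", method="CDAN+E"):
    """Return (working_dir, argv) pairs for the two stages shown in this README."""
    pretrain = [sys.executable, "CDS_pretraining.py",
                "--dataset", dataset, "--source", source, "--target", target]
    adapt = [sys.executable, "CDAN_fewshot_DA.py", "--method", method,
             "--dataset", dataset, "--source", source, "--target", target]
    return [("CDS_pretraining", pretrain), ("CDAN", adapt)]


def run_pipeline(source, target, repo_root="."):
    """Run both stages in order; check=True stops at the first non-zero exit status."""
    for subdir, argv in build_commands(source, target):
        subprocess.run(argv, cwd=os.path.join(repo_root, subdir), check=True)
```

For the example in this README, `run_pipeline("Real", "Clipart")` called from the repository root issues the same two commands as the manual steps.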

This repository is contributed by Donghyun Kim. If you use this code or its derivatives, please consider citing:

@inproceedings{kim2021cds,
  title={CDS: Cross-Domain Self-Supervised Pre-Training},
  author={Kim, Donghyun and Saito, Kuniaki and Oh, Tae-Hyun and Plummer, Bryan A and Sclaroff, Stan and Saenko, Kate},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={9123--9132},
  year={2021}
}