It is Okay to Not Be Okay: Overcoming Emotional Bias in Affective Image Captioning by Contrastive Data Collection

This repo hosts the codebase for our paper, which was accepted at CVPR 2022. It covers the following:

- How to download/preprocess the dataset?

- How to train and test Neural Speakers?

- Semantic Space Theory analysis

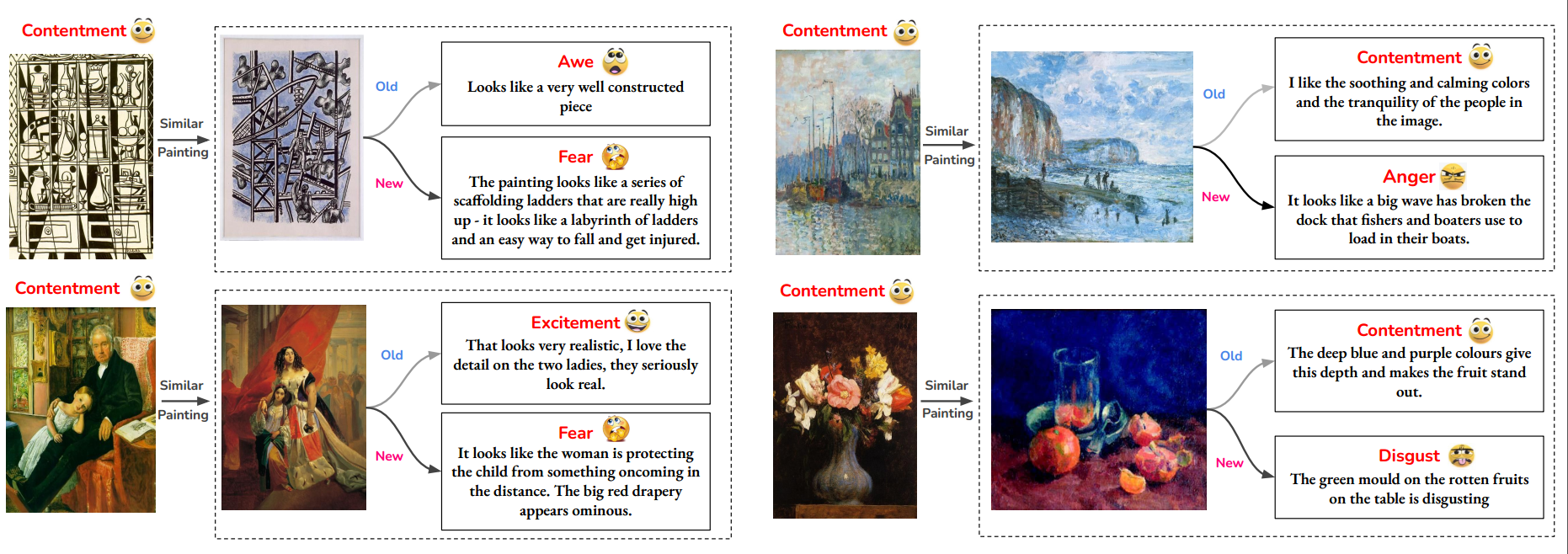

The figure above shows a sample of contrasting emotions for visually similar paintings.

Our contrastive dataset complements ArtEmis. To compile the full version, you need to download ArtEmis, our Contrastive.csv file, and the WikiArt paintings.

Next, unzip ArtEmis into dataset/official_data/ and place the Contrastive.csv file in the same directory. Unzip the WikiArt paintings into any directory, and substitute that directory for <PATH TO WIKIART IMAGES> wherever it appears in the commands below.

To merge them and create the train/test splits, follow the preprocessing steps in the SAT section.

Our implementation is heavily based on the SAT model from the original ArtEmis work [1]. We made this repo self-contained by including the instructions and files needed to reproduce the results of our paper. For more detailed instructions and analysis notebooks, please refer to the original repo.

Please install conda if you have not already. Then,

cd neural_speaker/sat/

conda create -n artemis-sat python=3.6.9 cudatoolkit=10.0

conda activate artemis-sat

pip install -e .

Now, from the repository root, resume combining and preprocessing the dataset:

conda activate artemis-sat

python dataset/combine.py --root dataset/official_data/

python neural_speaker/sat/artemis/scripts/preprocess_artemis_data.py -save-out-dir dataset/full_combined/train/ -raw-artemis-data-csv dataset/official_data/combined_artemis.csv --preprocess-for-deep-nets True

python dataset/create_parts.py

Congratulations, you now have the preprocessed data ready!

The naming of each directory reflects the type of instances in each dataset:

Train sets:

- combined is essentially ArtEmis 2.0: it contains all the contrastive data plus a sample of similar size from ArtEmis 1.0
- full_combined contains all the contrastive data and all of ArtEmis 1.0
- new contains only the contrastive data
- old_full is ArtEmis 1.0
- old_large is a sample of the same size as combined, drawn entirely from ArtEmis 1.0
- old_small is a sample of the same size as new, drawn entirely from ArtEmis 1.0
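To make the size relationships between these train sets concrete, here is a minimal sketch of how such parts could be derived from two lists of annotations. It assumes rows are sampled individually with a fixed seed and uses exact sizes; `build_parts` is a hypothetical helper, not the contents of dataset/create_parts.py, which may sample differently (for example, per painting).

```python
import random


def build_parts(old_rows, new_rows, seed=0):
    """Hypothetical sketch of the train-set definitions.

    old_rows: annotations from ArtEmis 1.0
    new_rows: annotations from the contrastive data
    """
    rng = random.Random(seed)  # fixed seed so the sampled parts are reproducible
    # combined: all contrastive data plus an equally sized sample of ArtEmis 1.0
    combined = new_rows + rng.sample(old_rows, len(new_rows))
    return {
        "combined": combined,
        "full_combined": old_rows + new_rows,
        "new": list(new_rows),
        "old_full": list(old_rows),
        # size-matched controls drawn only from ArtEmis 1.0
        "old_large": rng.sample(old_rows, len(combined)),
        "old_small": rng.sample(old_rows, len(new_rows)),
    }


if __name__ == "__main__":
    old = [("old", i) for i in range(100)]
    new = [("new", i) for i in range(20)]
    for name, rows in build_parts(old, new).items():
        print(name, len(rows))
```

The size-matched old_large and old_small sets let a model trained on ArtEmis 1.0 alone be compared with combined and new at equal data volume.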

Test sets:

- test_40 is the subset of ArtEmis 1.0 whose paintings have 40+ annotations; it contains ~700 paintings
- test_all is an ArtEmis 2.0 test set with samples from both ArtEmis 1.0 and the contrastive data
- test_new contains samples only from the contrastive data
- test_old contains samples only from ArtEmis 1.0
- test_ref is the same test set used in the original ArtEmis paper

In all test sets except test_40, each painting has 5 captions. We made sure that none of these paintings appear in any training set.

We created all of these test sets to guarantee a fair comparison and to prevent data leakage.
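If you build your own splits, a quick sanity check along these lines can confirm the two properties stated above (no shared paintings, 5 captions per test painting). This is a minimal, standard-library sketch; it assumes each painting is identified by the `art_style` and `painting` columns of the CSV files, and `read_rows`, `painting_keys`, and `check_test_split` are hypothetical helpers rather than part of this repo.

```python
import csv
import io
from collections import Counter


def read_rows(path):
    """Load a CSV file as a list of dicts (one per annotation)."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def painting_keys(rows):
    # Assumption: a painting is identified by its art_style and painting columns.
    return [(r["art_style"], r["painting"]) for r in rows]


def check_test_split(train_rows, test_rows, captions_per_painting=5):
    """Return (paintings shared with the train set, paintings without the expected caption count)."""
    train_keys = set(painting_keys(train_rows))
    test_counts = Counter(painting_keys(test_rows))
    leaked = sorted(k for k in test_counts if k in train_keys)
    wrong_count = sorted(k for k, n in test_counts.items() if n != captions_per_painting)
    return leaked, wrong_count


if __name__ == "__main__":
    # Toy data: painting "b" leaks into the test set, painting "c" has only 3 captions.
    train = list(csv.DictReader(io.StringIO("art_style,painting\nImpressionism,a\nBaroque,b\n")))
    test = list(csv.DictReader(io.StringIO("art_style,painting\n" + "Baroque,b\n" * 5 + "Cubism,c\n" * 3)))
    print(check_test_split(train, test))  # ([('Baroque', 'b')], [('Cubism', 'c')])
```

On real files you would call, for example, `check_test_split(read_rows("dataset/combined/train/artemis_preprocessed.csv"), read_rows("dataset/test_all/test_all.csv"))`. If the training CSV mixes several splits, filter it to the rows you actually train on first, and ignore the caption-count result for test_40.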

Next, let's train some Neural Speakers. We start with Show, Attend, and Tell (SAT), since we have already set up its environment, and then train the Meshed-Memory Transformer (M2).

The pipeline for the SAT neural speaker is as follows (each phase has its own scripts):

- Train a SAT model on a preprocessed dataset, and save the trained model

- Caption a test set using the trained SAT model, and save the generated captions to a file

- Evaluate the generated captions with a master script, and analyze them in depth with the notebooks
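The three phases hand files to each other: the training log directory provides the config and checkpoint used for sampling, and the sampled .pkl file is the input to scoring. As a minimal sketch, the hypothetical helper `sat_pipeline` below assembles the same commands used in this section for one run. It only builds argument lists; `/data/wikiart` is a placeholder for your <PATH TO WIKIART IMAGES>.

```python
import shlex


def sat_pipeline(run_name, train_dir, img_dir, test_csv, emo_grounding=True):
    """Hypothetical helper: build the train, sample, and score commands for one SAT run."""
    log_dir = f"sat_logs/{run_name}"
    captions = f"sat_sampled/{run_name}.pkl"
    train = ["python", "neural_speaker/sat/artemis/scripts/train_speaker.py",
             "-log-dir", log_dir, "-data-dir", train_dir, "-img-dir", img_dir]
    if emo_grounding:
        train += ["--use-emo-grounding", "True"]
    # Sampling reuses the config and best checkpoint written to the log directory.
    sample = ["python", "neural_speaker/sat/artemis/scripts/sample_speaker.py",
              "-speaker-saved-args", f"{log_dir}/config.json.txt",
              "-speaker-checkpoint", f"{log_dir}/checkpoints/best_model.pt",
              "-img-dir", img_dir, "-out-file", captions,
              "--custom-data-csv", test_csv]
    # Scoring reads the sampled captions and the vocabulary of the training set.
    score = ["python", "neural_speaker/sat/get_scores.py",
             "-captions_file", captions,
             "-vocab_path", f"{train_dir.rstrip('/')}/vocabulary.pkl",
             "-test_set", test_csv]
    return [train, sample, score]


if __name__ == "__main__":
    # "/data/wikiart" is a placeholder for <PATH TO WIKIART IMAGES>.
    for cmd in sat_pipeline("sat_combined", "dataset/combined/train/",
                            "/data/wikiart", "dataset/test_40/test_40.csv"):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Each list could be passed to `subprocess.run(cmd, check=True)` from the repository root inside the artemis-sat environment, after creating the sat_logs/<run> and sat_sampled/ directories with mkdir -p.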

To train a SAT model,

conda activate artemis-sat

mkdir -p <LOGS PATH>

python neural_speaker/sat/artemis/scripts/train_speaker.py \
-log-dir <LOGS PATH> \
-data-dir <TRAIN DATASET PATH> \
-img-dir <PATH TO WIKIART IMAGES> \
[--use-emo-grounding True]

For example, to train on the combined dataset with emotional grounding (if you followed the exact setup above):

conda activate artemis-sat

mkdir -p sat_logs/sat_combined

python neural_speaker/sat/artemis/scripts/train_speaker.py \
-log-dir sat_logs/sat_combined \
-data-dir dataset/combined/train/ \
-img-dir <PATH TO WIKIART IMAGES> \
--use-emo-grounding True

The trained SAT model will be saved under the log-dir.

NOTE: you can try the other training sets found under dataset/.

Now, let's use our trained model to generate captions. For example, let's use the model trained above to generate captions for the test_40 test set:

conda activate artemis-sat

mkdir -p sat_sampled

python neural_speaker/sat/artemis/scripts/sample_speaker.py \
-speaker-saved-args sat_logs/sat_combined/config.json.txt \
-speaker-checkpoint sat_logs/sat_combined/checkpoints/best_model.pt \
-img-dir <PATH TO WIKIART IMAGES> \
-out-file sat_sampled/sat_combined.pkl \
--custom-data-csv dataset/test_40/test_40.csv

The generated captions will be saved to sat_sampled/sat_combined.pkl. Next, we evaluate them against the ground-truth captions.

To produce the metrics reported in the paper for the generated captions,

conda activate artemis-sat

python neural_speaker/sat/get_scores.py \
-captions_file sat_sampled/sat_combined.pkl \
-vocab_path dataset/combined/train/vocabulary.pkl \
-test_set dataset/test_40/test_40.csv \
[--filter (fine | coarse)]

The optional --filter argument breaks the scores down per emotion, as reported in the paper. It accepts two values: fine breaks the scores down per each of the 9 emotions, while coarse breaks them down per 3 classes (positive, negative, and something-else).
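To illustrate the difference between the two granularities, the sketch below groups captions either by their ArtEmis emotion label (fine) or by its coarse class (coarse) and averages a per-caption score in each group. The mapping follows the ArtEmis grouping of 4 positive emotions, 4 negative emotions, and something else; the `(emotion, score)` input format and the `scores_per_emotion` helper are assumptions for illustration. Note that get_scores.py may instead recompute corpus-level metrics (such as BLEU) on each subset, which is not the same as averaging per-caption scores.

```python
from collections import defaultdict
from statistics import mean

# The nine ArtEmis emotion categories and their coarse class.
COARSE = {
    "amusement": "positive", "awe": "positive",
    "contentment": "positive", "excitement": "positive",
    "anger": "negative", "disgust": "negative",
    "fear": "negative", "sadness": "negative",
    "something else": "something-else",
}


def scores_per_emotion(records, granularity="fine"):
    """Average a per-caption score within each emotion group.

    records: iterable of (emotion, score) pairs (hypothetical input format).
    granularity: "fine" groups by the 9 emotions, anything else by the 3 coarse classes.
    """
    groups = defaultdict(list)
    for emotion, score in records:
        key = emotion if granularity == "fine" else COARSE[emotion]
        groups[key].append(score)
    return {key: mean(vals) for key, vals in groups.items()}


if __name__ == "__main__":
    recs = [("awe", 0.5), ("amusement", 0.25), ("fear", 0.125), ("sadness", 0.375)]
    print(scores_per_emotion(recs, "coarse"))  # {'positive': 0.375, 'negative': 0.25}
```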

NOTE: get_scores.py supports more metrics than those reported in the paper; feel free to try them out.

Finally, you can use neural_speaker/sat/visualizations.ipynb to get the plots used in the paper. You can also use it to visualize the captions and some attention maps over the input images. We also decided to leave the original analysis notebooks from ArtEmis 1.0 under neural_speaker/sat/artemis/notebooks/ because they are very rich and can help gain useful insights.

Again, our implementation is heavily based on the M2 model from the original ArtEmis work; for more details, please visit their repo. We included mostly the instructions and files relevant to reproducing the results in our paper.

To reproduce our results, you unfortunately have to install a separate conda env for each neural speaker. We faced many compatibility issues when we tried to merge the two envs into one, so we kept them separate.

cd neural_speaker/m2/

conda env create -f environment.yml

conda activate artemis-m2

You also have to download the spaCy data used to calculate the metrics:

python -m spacy download en

DISCLAIMER: THIS PART IS COPIED FROM THE ORIGINAL REPO

Please prepare the annotation and detection-feature files for the ArtEmis dataset before running the code:

- Download Detection-Features and unzip it to some folder.

- Download the pickle file, which contains [<image_name>, <image_id>], and put it in the same folder where you extracted the detection features.

We suggest placing the above files under neural_speaker/m2/extracted_features/

To train an M2 model,

conda activate artemis-m2

python neural_speaker/m2/train.py \
--exp_name combined \
--batch_size 128 \
--features_path neural_speaker/m2/extracted_features/ \
--workers 32 \
--annotation_file dataset/combined/train/artemis_preprocessed.csv \
[--use_emotion_labels True]

The trained model will be saved under the directory saved_m2_models/.

Evaluating the M2 model is more straightforward, since you only need to run one script:

conda activate artemis-m2

python neural_speaker/m2/test.py \
--exp_name combined \
--batch_size 128 \
--features_path neural_speaker/m2/extracted_features/ \
--workers 32 \
--annotation_folder dataset/test_40/test_40.csv \
[--use_emotion_labels True]

This will automatically load the model saved under saved_m2_models/ for the given --exp_name, use it to generate captions for the dataset passed via --annotation_folder, and finally evaluate the generated captions.

The M2 directory also contains a script for a nearest-neighbor (NN) baseline. Since this baseline needs no training, a single script generates the captions and evaluates them.
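Conceptually, a nearest-neighbor captioning baseline retrieves the training image most similar to each test image and reuses one of its captions. The following is a minimal, dependency-free sketch of that idea using cosine similarity over feature vectors. It is not the code in nn_baseline.py, which (judging by the vgg_nearest_neighbors.pkl file name) appears to rely on precomputed VGG neighbors; `cosine` and `nn_captions` are hypothetical helpers, and taking the neighbor's first caption is an arbitrary choice made here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nn_captions(train_feats, train_captions, test_feats):
    """For each test image, copy a caption of its most similar training image.

    train_feats / test_feats: dict image_id -> feature vector
    train_captions: dict image_id -> list of captions
    """
    results = {}
    for test_id, feat in test_feats.items():
        best_id = max(train_feats, key=lambda tid: cosine(feat, train_feats[tid]))
        results[test_id] = train_captions[best_id][0]  # first caption of the nearest neighbor
    return results


if __name__ == "__main__":
    train_feats = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
    caps = {"a": ["a red sky"], "b": ["a calm sea"]}
    print(nn_captions(train_feats, caps, {"t1": [0.9, 0.1], "t2": [0.1, 2.0]}))
```

Because no parameters are learned, the only design decisions are the feature extractor, the similarity measure, and which of the neighbor's captions to return.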

conda activate artemis-m2

python neural_speaker/m2/nn_baseline.py \
-splits_file dataset/combined/train/artemis_preprocessed.csv \
-test_splits_file dataset/test_all/test_all.csv \
--idx_file neural_speaker/m2/extracted_features/wikiart_split.pkl \
--nn_file neural_speaker/m2/extracted_features/vgg_nearest_neighbors.pkl

In this final section, we fine-tune a RoBERTa language model to classify the extended set of emotions provided by the GoEmotions dataset [3].

We start by fine-tuning the model, provided by Hugging Face [2], on the GoEmotions dataset. Then, we use the trained model to predict the labels for the captions in the different versions of ArtEmis. Finally, we plot the histogram of the predicted emotions and produce a heatmap of the pairwise correlations between the predicted emotions.
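The histogram and correlation analyses can be sketched in plain Python once each caption has a score per GoEmotions label. This minimal sketch assumes each row is a dict mapping label names (such as `joy` or `grief`) to predicted scores, and counts a label as predicted when its score reaches 0.5; both the row format and the threshold are assumptions, and the provided notebook may compute these quantities differently. The matrix returned by `correlation_matrix` is what a heatmap would display.

```python
import math
from collections import Counter


def label_histogram(score_rows, labels, threshold=0.5):
    """Count how often each label is predicted (score >= threshold) across captions."""
    counts = Counter()
    for row in score_rows:
        counts.update(lab for lab in labels if row[lab] >= threshold)
    return {lab: counts[lab] for lab in labels}


def pearson(xs, ys):
    """Pearson correlation of two equally long lists (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def correlation_matrix(score_rows, labels):
    """Pairwise Pearson correlations between the per-caption scores of each label."""
    cols = {lab: [row[lab] for row in score_rows] for lab in labels}
    return [[pearson(cols[a], cols[b]) for b in labels] for a in labels]


if __name__ == "__main__":
    rows = [{"joy": 0.9, "grief": 0.1}, {"joy": 0.2, "grief": 0.8}, {"joy": 0.6, "grief": 0.4}]
    print(label_histogram(rows, ["joy", "grief"]))  # {'joy': 2, 'grief': 1}
    print(correlation_matrix(rows, ["joy", "grief"]))
```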

Unfortunately, there is one more conda env you need to install 😅

cd extended_emotions

conda env create -f environment.yml

conda activate artemis-sst

We provide two scripts:

- extended_emotions/train_goemotions.py to train a RoBERTa model on GoEmotions
- extended_emotions/train_artemis.py to train a RoBERTa model on ArtEmis

To train the GoEmotions model,

conda activate artemis-sst

python extended_emotions/train_goemotions.py \
-o goemotions_classifier \
-m roberta \
-s base

The model will be saved under the go_models/ directory.

To predict the extended emotions on ArtEmis dataset,

conda activate artemis-sst

python extended_emotions/artemis_analysis/predict_emotions.py \
-model_dir <PATH TO TRAINED MODEL> \
--model roberta \
--model_size base \
--dataset dataset/combined/train/artemis_preprocessed.csv \
--dataset_name combined

This will save a version of the dataset augmented with a score for each emotion in the extended set provided by GoEmotions.

We provide the code to produce the plots in our paper in the notebook extended_emotions/artemis_analysis/extended_emotions.ipynb

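[1] ArtEmis: Affective Language for Visual Art (Achlioptas et al., CVPR 2021)

[2] Hugging Face Transformers

[3] GoEmotions: A Dataset of Fine-Grained Emotions (Demszky et al., ACL 2020)

[4] Meshed-Memory Transformer for Image Captioning (Cornia et al., CVPR 2020)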