This is an implementation of DreamBooth based on Stable Diffusion.

- This repository has been migrated into diffusers! Less than 14 GB of memory is required. See more at https://github.com/huggingface/diffusers/tree/main/examples/dreambooth

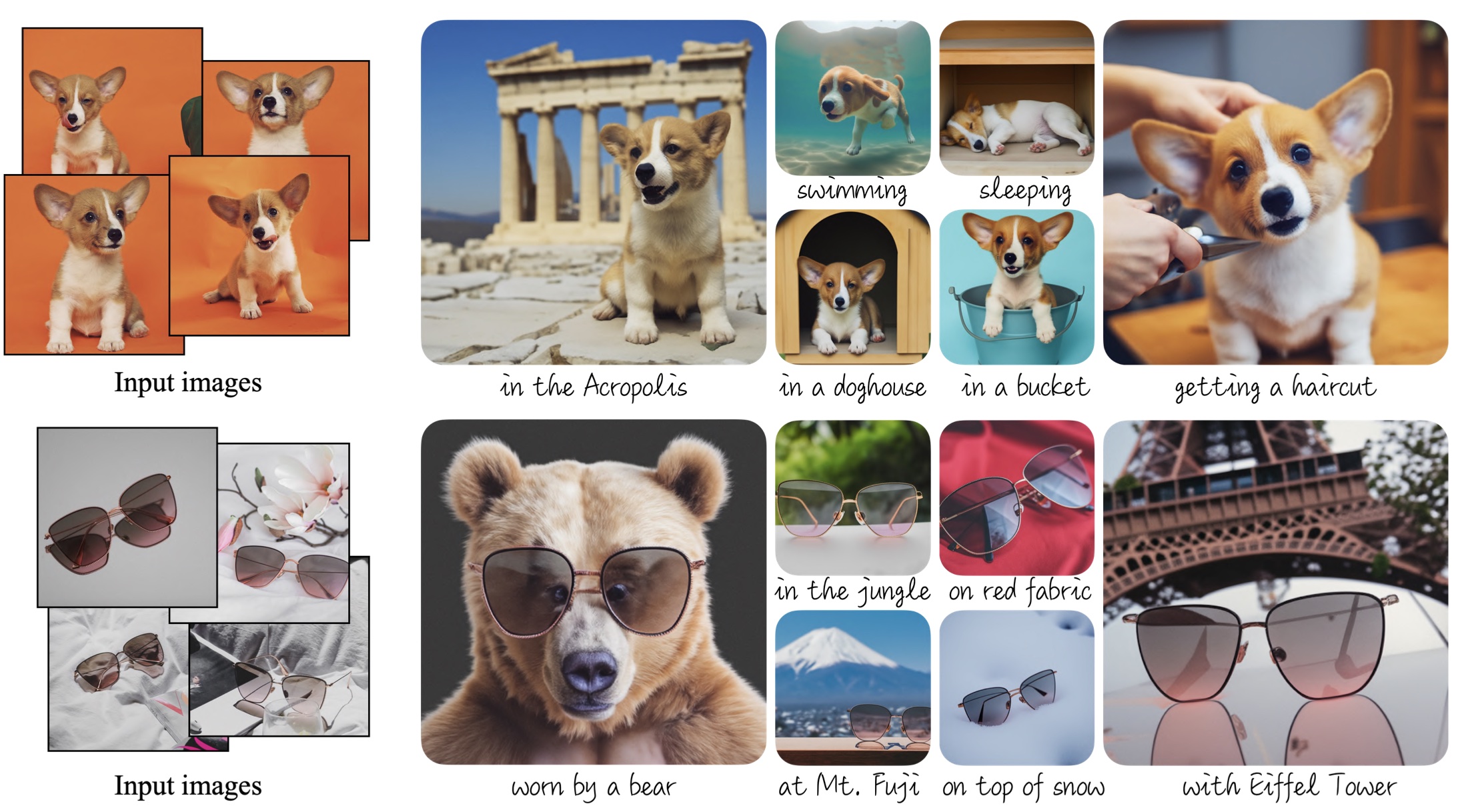

DreamBooth results from the original paper:

- A GPU with at least 30 GB of memory.
- Training takes about 10 minutes on an A100 80 GB GPU with `batch_size` set to 4.
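
To check the memory requirement above before starting a run, a small script along these lines may help. It is only a sketch: the helper names are hypothetical and not part of this repository, it assumes PyTorch is installed, and it treats "30 GB" as 30 GiB, which may be slightly stricter than intended.

```python
# Sketch: compare the first CUDA device's memory against the ~30 GB guideline.
# Helper names are illustrative and not part of this repository.
GIB = 1024 ** 3


def meets_memory_requirement(total_bytes, required_gib=30.0):
    """Return True if total_bytes is at least required_gib GiB."""
    return total_bytes >= required_gib * GIB


def first_gpu_memory_bytes():
    """Return the total memory of CUDA device 0 in bytes, or None if unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory


if __name__ == "__main__":
    total = first_gpu_memory_bytes()
    if total is None:
        print("No CUDA device detected (or PyTorch is not installed).")
    elif meets_memory_requirement(total):
        print(f"GPU 0 has {total / GIB:.1f} GiB: meets the guideline.")
    else:
        print(f"GPU 0 has {total / GIB:.1f} GiB: below the guideline.")
```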

Create a conda environment with pytorch>=1.11.

conda env create -f environment.yaml

conda activate stable-diffusion

python sample.py # Generate class samples.

python train.py # Finetune the Stable Diffusion model.

The generation results are in logs/dog_finetune.

- Collect 3~5 images of an object and save them into the `data/mydata/instance` folder.
- Sample images of the same class as the specified object using `sample.py`.
  - Change the corresponding variables in `sample.py`. The `prompt` should be like "a {class}", and `save_dir` should be changed to `data/mydata/class`.
  - Run the sample script.

python sample.py

- Change the `TrainConfig` in `train.py`.
- Start training.

python train.py
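
Before training on your own data, it may be worth confirming that the folder layout from the steps above is in place. The following is a minimal sketch, not part of this repository: `check_dataset` and `list_images` are hypothetical helpers, and the set of accepted image extensions is an assumption.

```python
# Sketch: sanity-check the data/mydata layout described in the steps above.
# The accepted image extensions are an assumption, not taken from train.py.
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def list_images(folder):
    """Return the image files directly inside folder (empty list if it is missing)."""
    folder = Path(folder)
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def check_dataset(root="data/mydata"):
    """Return a list of human-readable problems; an empty list means the layout looks fine."""
    root = Path(root)
    problems = []
    instance_images = list_images(root / "instance")
    class_images = list_images(root / "class")
    if not 3 <= len(instance_images) <= 5:
        problems.append(
            f"expected 3 to 5 instance images, found {len(instance_images)}"
        )
    if not class_images:
        problems.append("no class images found; run sample.py first")
    return problems


if __name__ == "__main__":
    for problem in check_dataset():
        print("WARNING:", problem)
```

An empty result from `check_dataset()` suggests the instance and class folders are ready for `train.py`.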

python inference.py --prompt "photo of a [V] dog in a dog house" --checkpoint_dir logs/dogs_finetune

Generated images are in `outputs` by default.
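
The two prompt shapes used in this README, "a {class}" for class samples and "photo of a [V] {class} ..." for inference, can be generated consistently with a small helper. This is only an illustrative sketch: the function names are hypothetical, and whether `train.py` uses exactly the same instance-prompt template is an assumption.

```python
# Sketch: build class and instance prompts in the shapes shown in this README.
# Function names are illustrative; the training prompt template is an assumption.
IDENTIFIER = "[V]"


def class_prompt(class_name):
    """Prompt for sampling generic class images, e.g. 'a dog'."""
    return f"a {class_name}"


def instance_prompt(class_name, context="", identifier=IDENTIFIER):
    """Prompt referring to the finetuned subject, optionally followed by a scene."""
    prompt = f"photo of a {identifier} {class_name}"
    if context:
        prompt = f"{prompt} {context}"
    return prompt


if __name__ == "__main__":
    print(class_prompt("dog"))
    print(instance_prompt("dog", "in a dog house"))
```

The second printed string can be passed to `inference.py` through `--prompt`.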

- Stable Diffusion by CompVis https://github.com/CompVis/stable-diffusion

- DreamBooth https://dreambooth.github.io/

- Diffusers https://github.com/huggingface/diffusers