
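This project implements distributed-training versions of Informer and Autoformer in PyTorch on the High-Flyer Fire-Flyer (萤火) supercomputing cluster. Both are among the representative Transformer-based models of recent years for long-sequence time-series forecasting.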

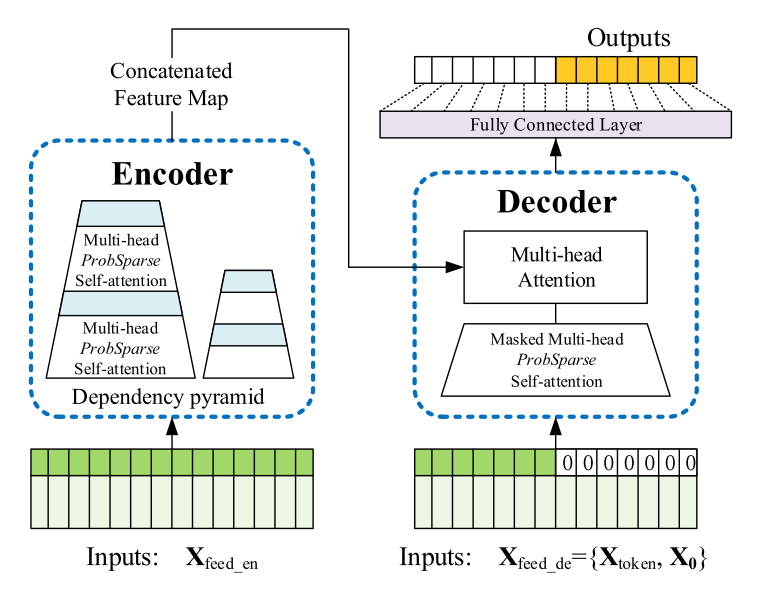

- Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting (AAAI 2021)

- Autoformer: Decomposition Transformers with Auto-Correlation for Long-Term Series Forecasting (NeurIPS 2021)

- hfai (to be released soon)

- torch >=1.8

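The raw data comes from the Autoformer open-source repository and has been integrated into the hfai.datasets dataset repository. It includes: ETTh1, ETTh2, ETTm1, ETTm2, exchange_rate, electricity, national_illness, traffic. For usage, see the hfai developer documentation.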

- Train Informer

Submit the job to the Fire-Flyer cluster:

hfai python train.py --ds ETTh1 --model informer -- -n 1 -p 30

Run locally:

python train.py --ds ETTh1 --model informer

- Train Autoformer

Submit the job to the Fire-Flyer cluster:

hfai python train.py --ds ETTh1 --model autoformer -- -n 1 -p 30

Run locally:

python train.py --ds ETTh1 --model autoformer
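The commands above select a dataset with `--ds` and a model with `--model`. As a rough illustration only, the sketch below shows how a script could parse and validate those two flags with `argparse`. It is not the project's actual `train.py`: the `build_parser` helper, the defaults, and the help strings are assumptions, and only the flag names, dataset names, and model names come from this README. The options after the bare `--` in the cluster command are not handled here.

```python
import argparse

# Dataset and model names as listed in this README.
DATASETS = [
    "ETTh1", "ETTh2", "ETTm1", "ETTm2",
    "exchange_rate", "electricity", "national_illness", "traffic",
]
MODELS = ["informer", "autoformer"]


def build_parser():
    """Hypothetical helper: build a parser for the --ds and --model flags."""
    parser = argparse.ArgumentParser(
        description="Illustrative argument parsing for a training script"
    )
    # The defaults below are assumptions made for this sketch.
    parser.add_argument("--ds", choices=DATASETS, default="ETTh1",
                        help="name of the dataset to train on")
    parser.add_argument("--model", choices=MODELS, default="informer",
                        help="which model to train")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(f"dataset={args.ds} model={args.model}")
```

Restricting both flags with `choices` means a misspelled dataset or model name is rejected at startup rather than after a job has been scheduled.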

@inproceedings{haoyietal-informer-2021,
  author    = {Haoyi Zhou and
               Shanghang Zhang and
               Jieqi Peng and
               Shuai Zhang and
               Jianxin Li and
               Hui Xiong and
               Wancai Zhang},
  title     = {Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting},
  booktitle = {The Thirty-Fifth {AAAI} Conference on Artificial Intelligence, {AAAI} 2021, Virtual Conference},
  volume    = {35},
  number    = {12},
  pages     = {11106--11115},
  publisher = {{AAAI} Press},
  year      = {2021},
}

@inproceedings{wu2021autoformer,
  title     = {Autoformer: Decomposition Transformers with {Auto-Correlation} for Long-Term Series Forecasting},
  author    = {Haixu Wu and Jiehui Xu and Jianmin Wang and Mingsheng Long},
  booktitle = {Advances in Neural Information Processing Systems},
  year      = {2021}
}