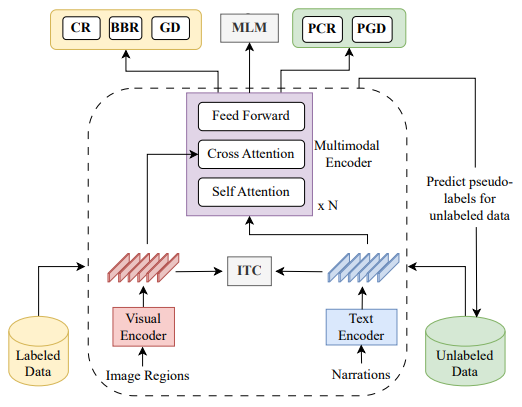

In this paper, we study multimodal coreference resolution, specifically the setting where a longer descriptive text, i.e., a narration, is paired with an image. This poses significant challenges due to the need for fine-grained image-text alignment, the inherent ambiguity of narrative language, and the unavailability of large annotated training sets. To tackle these challenges, we present a data-efficient semi-supervised approach that uses image-narration pairs for coreference resolution and narrative grounding in a multimodal context.

Semi-supervised multimodal coreference resolution in image narrations,

Arushi Goel, Basura Fernando, Frank Keller, Hakan Bilen,

EMNLP 2023 (arXiv)

Who are you referring to? Coreference resolution in image narrations,

Arushi Goel, Basura Fernando, Frank Keller, Hakan Bilen,

ICCV 2023 (CVF)

This code requires the following:

- Python 3.8 or greater (the pinned numpy==1.23.3 below requires Python 3.8+)

- PyTorch 1.8 or greater
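The following is a minimal sketch for checking the interpreter and PyTorch versions before installing the rest of the dependencies. It uses `importlib.metadata` (available from Python 3.8) and assumes a Python minimum of 3.8, since the pinned numpy==1.23.3 requires it. The helper names (`parse_version`, `meets`, `check_environment`) are hypothetical and not part of this repository.

```python
"""Hypothetical environment check for the requirements listed above."""
import sys
from importlib import metadata  # Python 3.8+

MIN_PYTHON = (3, 8)  # assumption: numpy==1.23.3 needs Python 3.8+
MIN_TORCH = (1, 8)


def parse_version(v: str) -> tuple:
    """Turn e.g. '1.8.0+cu102' into (1, 8, 0), dropping local/pre-release tags."""
    parts = []
    for piece in v.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets(version: str, minimum: tuple) -> bool:
    """True if `version` is at least `minimum` (tuple comparison)."""
    return parse_version(version) >= minimum


def check_environment() -> list:
    """Return a list of human-readable problems (empty if all is well)."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append(f"Python {sys.version.split()[0]} is older than {MIN_PYTHON}")
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        problems.append("PyTorch is not installed")
    else:
        if not meets(torch_version, MIN_TORCH):
            problems.append(f"PyTorch {torch_version} is older than 1.8")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "Environment looks OK.")
```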

conda create -n mcr python=3.8

conda activate mcr

conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=10.2 -c pytorch

pip install transformers==4.11.3

pip install spacy==3.4.1

pip install numpy==1.23.3

pip install spacy-transformers

python -m spacy download en_core_web_sm

pip install h5py

pip install scipy

pip install sense2vec

pip install scorch

Create a folder named datasets/.
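As a sketch, the folders that the downloads below are placed in can be created from the repository root like this (equivalent to `mkdir -p datasets/faster_rcnn_image_features` in a shell). The helpers `expected_dirs` and `make_dirs` are hypothetical illustrations, not repository code.

```python
from pathlib import Path


def expected_dirs(root: Path = Path(".")) -> list:
    """Folders the dataset files go into, relative to the repo root."""
    datasets = root / "datasets"
    return [datasets, datasets / "faster_rcnn_image_features"]


def make_dirs(root: Path = Path(".")) -> list:
    """Create the folders if they are missing; safe to re-run."""
    dirs = expected_dirs(root)
    for d in dirs:
        d.mkdir(parents=True, exist_ok=True)
    return dirs
```

Calling `make_dirs()` once before downloading is enough; `exist_ok=True` makes repeated calls harmless.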

Download the CIN annotations from here into datasets/; this creates the folder datasets/cin_annotations.

Download the Localized Narratives caption vocabulary file flk30k_LN.json to datasets/.

Download the Localized Narratives captions file flk30k_LN_label.h5 (HDF5) to datasets/.

Download train_features_compress.hdf5 (6 GB), val_features_compress.hdf5, and test_features_compress.hdf5 to datasets/faster_rcnn_image_features.

Download train_detection_dict.json, val_detection_dict.json, and test_detection_dict.json to datasets/faster_rcnn_image_features.

Download train_imgid2idx.pkl, val_imgid2idx.pkl, and test_imgid2idx.pkl to datasets/faster_rcnn_image_features.

(Optional) Download the processed mouse traces flk30k_LN_trace_box for the Flickr30k Localized Narratives captions from here.
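Before training, a quick sketch like the one below can confirm that every required download landed in the expected place. It assumes the val and test feature files follow the same `<split>_features_compress.hdf5` naming as the train file, and it does not check the optional mouse traces. `expected_files` and `missing_files` are hypothetical helpers.

```python
from pathlib import Path


def expected_files(root: Path = Path("datasets")) -> list:
    """Paths the README asks you to download (optional traces excluded)."""
    feats = root / "faster_rcnn_image_features"
    files = [
        root / "cin_annotations",       # folder with the CIN annotations
        root / "flk30k_LN.json",        # caption vocabulary
        root / "flk30k_LN_label.h5",    # encoded captions
    ]
    for split in ("train", "val", "test"):
        files += [
            feats / f"{split}_features_compress.hdf5",
            feats / f"{split}_detection_dict.json",
            feats / f"{split}_imgid2idx.pkl",
        ]
    return files


def missing_files(root: Path = Path("datasets")) -> list:
    """Subset of expected_files() that does not exist on disk."""
    return [p for p in expected_files(root) if not p.exists()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing:", *missing, sep="\n  ")
    else:
        print("All required dataset files found.")
```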

Create the folder saved/final_model to store the model checkpoints, then run the training script below to train the final model.

CUDA_VISIBLE_DEVICES=1,2,3,4 python -m torch.distributed.launch --master_port 10006 --nproc_per_node=4 --use_env main.py --use-ema --use-ssl --model_config configs/mcr_config.json --batch 6 --ssl_loss con --label-prop --bbox-reg --grounding --save_name final_model/
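If you prefer launching from Python, the sketch below rebuilds the training command above as an argument list with the same flags. It derives `--nproc_per_node` from the GPU list so the two cannot drift apart. It assumes you run it from the repository root and that checkpoints end up under saved/final_model (inferred from the checkpoint path used by the test script). `build_train_cmd` and `run_training` are hypothetical helpers.

```python
import os
import subprocess
import sys
from pathlib import Path


def build_train_cmd(gpus=("1", "2", "3", "4"), master_port=10006, batch=6):
    """Return (argv, env) reproducing the training command above."""
    argv = [
        sys.executable, "-m", "torch.distributed.launch",
        "--master_port", str(master_port),
        f"--nproc_per_node={len(gpus)}",  # one process per visible GPU
        "--use_env", "main.py",
        "--use-ema", "--use-ssl",
        "--model_config", "configs/mcr_config.json",
        "--batch", str(batch),
        "--ssl_loss", "con",
        "--label-prop", "--bbox-reg", "--grounding",
        "--save_name", "final_model/",
    ]
    env = {**os.environ, "CUDA_VISIBLE_DEVICES": ",".join(gpus)}
    return argv, env


def run_training(**kwargs):
    """Create the checkpoint folder, then launch training (blocks until done)."""
    Path("saved/final_model").mkdir(parents=True, exist_ok=True)
    argv, env = build_train_cmd(**kwargs)
    subprocess.run(argv, env=env, check=True)
```

For example, `run_training(gpus=("0", "1"))` would train on two GPUs; `check=True` raises `CalledProcessError` if the launcher exits with an error.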

The test script below saves the predicted coreference chains in coref/modelrefs/test. Create this directory before running the script.

CUDA_VISIBLE_DEVICES=0 python -m torch.distributed.launch --master_port 10003 --nproc_per_node=1 --use_env test_coref.py --bbox-reg --use-phrase-mask --model_config configs/mcr_config.json --save_name saved/final_model/models_17.pt
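A sketch of the same test run from Python: it checks that the checkpoint exists, creates coref/modelrefs/test, and then launches the script with the flags shown above. The `script` and `gpu` parameters let the same helper rebuild the grounding evaluation command below (`script="test_grounding.py"`, `gpu="5"`). `build_test_cmd` and `run_test` are hypothetical helpers; run them from the repository root.

```python
import os
import subprocess
import sys
from pathlib import Path

PRED_DIR = Path("coref/modelrefs/test")  # where test_coref.py writes predicted chains


def build_test_cmd(checkpoint, script="test_coref.py", gpu="0", master_port=10003):
    """Return (argv, env) for a single-GPU evaluation run."""
    argv = [
        sys.executable, "-m", "torch.distributed.launch",
        "--master_port", str(master_port),
        "--nproc_per_node=1", "--use_env", script,
        "--bbox-reg", "--use-phrase-mask",
        "--model_config", "configs/mcr_config.json",
        "--save_name", str(checkpoint),
    ]
    env = {**os.environ, "CUDA_VISIBLE_DEVICES": gpu}
    return argv, env


def run_test(checkpoint="saved/final_model/models_17.pt"):
    """Fail early on a missing checkpoint, prepare the output folder, then run."""
    if not Path(checkpoint).is_file():
        raise FileNotFoundError(f"checkpoint not found: {checkpoint}")
    PRED_DIR.mkdir(parents=True, exist_ok=True)
    argv, env = build_test_cmd(checkpoint)
    subprocess.run(argv, env=env, check=True)
```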

Run the script below to evaluate narrative grounding.

CUDA_VISIBLE_DEVICES=5 python -m torch.distributed.launch --master_port 10003 --nproc_per_node=1 --use_env test_grounding.py --bbox-reg --use-phrase-mask --model_config configs/mcr_config.json --save_name saved/final_model/models_17.pt
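The exact metric computed by test_grounding.py is not documented here. For intuition only, the sketch below shows a common phrase-grounding convention: a phrase counts as correctly grounded when its predicted box has IoU of at least 0.5 with a ground-truth box. Matching against any of several ground-truth boxes, the `(x1, y1, x2, y2)` box format, and the helper names `iou` and `grounding_accuracy` are all assumptions, not the repository's implementation.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: Sequence[Box], gts: Sequence[Sequence[Box]],
                       thresh: float = 0.5) -> float:
    """Fraction of phrases whose predicted box hits any GT box at IoU >= thresh."""
    if len(preds) != len(gts):
        raise ValueError("need one list of ground-truth boxes per prediction")
    if not preds:
        return 0.0
    hits = sum(any(iou(p, g) >= thresh for g in gt) for p, gt in zip(preds, gts))
    return hits / len(preds)
```

For instance, two boxes `(0, 0, 2, 2)` and `(1, 1, 3, 3)` overlap in a 1x1 square, so their IoU is 1/7 and the prediction would not count as a hit.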

To calculate the CR metrics, download the ground truth coreference chains from here and unzip gold.zip into a folder named coref/. Then run scorch on the gold and predicted chains:

scorch coref/gold_count/test/ coref/modelrefs/test/
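scorch reports standard CR metrics such as MUC, B³, CEAF, and BLANC. To illustrate what one of them measures, here is a sketch of B³ in its usual cluster-level form: precision sums |K ∩ R|² / |R| over all key/response cluster pairs and divides by the number of response mentions, and recall swaps the roles. This is an illustration only; scorch's handling of edge cases may differ, and `b_cubed` is a hypothetical helper.

```python
from typing import Hashable, List, Set, Tuple


def b_cubed(key: List[Set[Hashable]],
            response: List[Set[Hashable]]) -> Tuple[float, float, float]:
    """B-cubed precision, recall and F1 over clusters of mention ids."""

    def score(gold: List[Set[Hashable]], pred: List[Set[Hashable]]) -> float:
        # Sum |G & P|^2 / |P| over all pairs, normalized by the number of
        # predicted mentions; gives precision when pred is the response.
        total = sum(len(p) for p in pred)
        if total == 0:
            return 0.0
        num = sum(len(g & p) ** 2 / len(p) for p in pred if p for g in gold)
        return num / total

    precision = score(key, response)
    recall = score(response, key)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, with key chains `[{"a", "b", "c"}, {"d"}]` and predicted chains `[{"a", "b"}, {"c", "d"}]`, this gives precision 0.75, recall 2/3, and F1 12/17 (about 0.706).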

Please contact the first author for any queries or concerns at goel.arushi@gmail.com.