Revisiting Self-Supervised Monocular Depth Estimation

Introduction

We take a closer look at potential synergies between various depth and motion learning methods and CNN architectures.

- For this, we revisit a notable subset of previously proposed learning approaches and categorize them into four classes: depth representation, illumination variation, occlusion and dynamic objects.

- Next, we design a comprehensive empirical study to unveil the potential synergies and architectural benefits. To cope with the large search space, we take an incremental approach in designing our experiments.

As a result of our study, we uncover a number of vital insights. We summarize the most important as follows:

- Choosing the right depth representation substantially improves the performance.

- Not all learning approaches are universal; each is effective only in its own context.

- The combination of auto-masking and a motion map handles dynamic objects robustly.

- CNN architectures influence the performance significantly.

- There is a trade-off between depth consistency and performance enhancement.

In the course of this study, we also obtain new state-of-the-art performance.

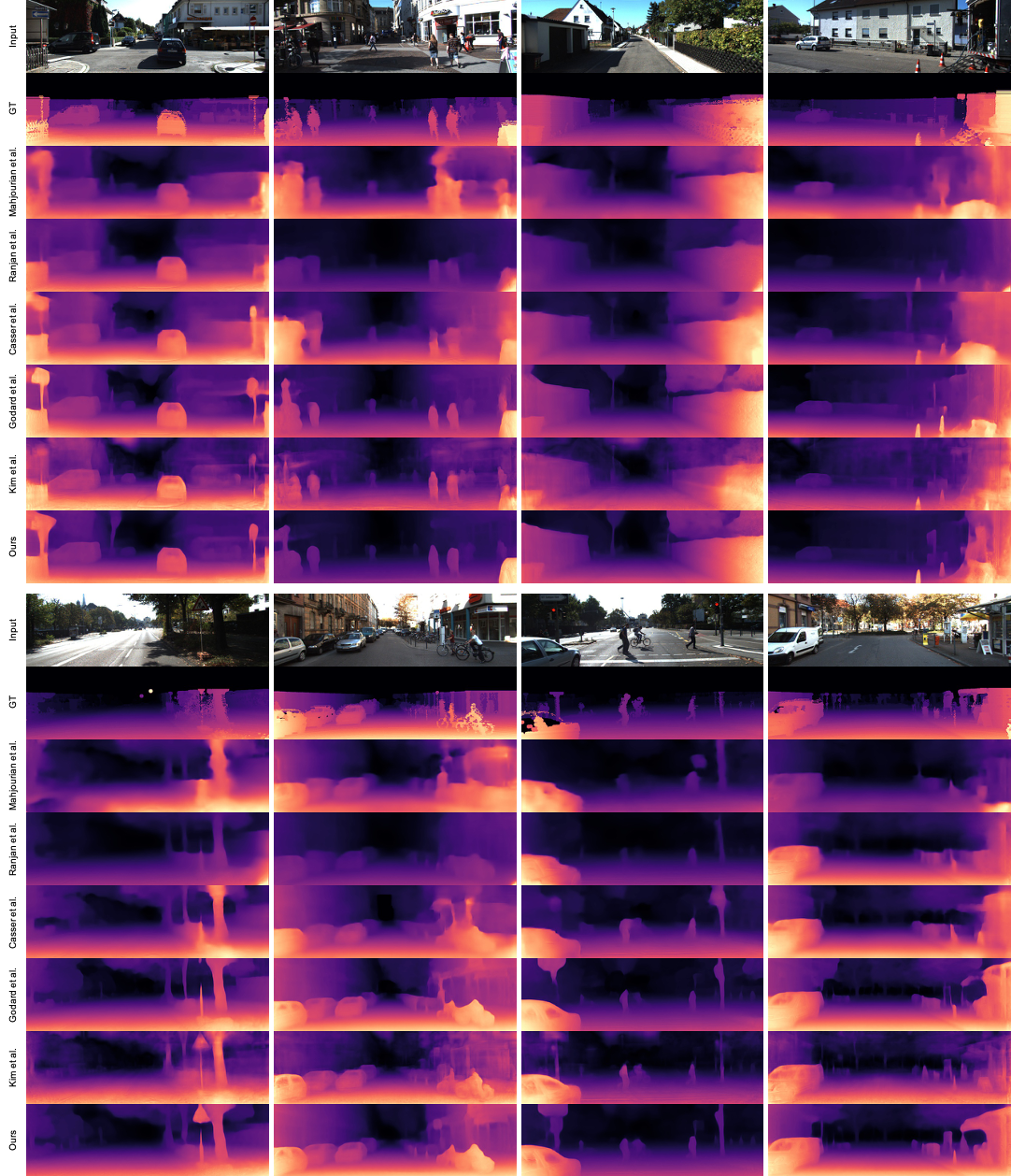

The qualitative and quantitative results of the proposed method are presented below, in comparison with conventional methods.

You can download all the precomputed depth maps from here

| Method | Architecture | ARD | SRD | RMSE | RMSElog | delta < 1.25 | delta < 1.25^2 | delta < 1.25^3 |
|---|---|---|---|---|---|---|---|---|
| Mahjourian et al. | DispNet | 0.163 | 1.240 | 6.220 | 0.250 | 0.762 | 0.916 | 0.968 |
| Ranjan et al. | DispNet | 0.148 | 1.149 | 5.464 | 0.226 | 0.815 | 0.935 | 0.973 |
| Casser et al. | ResNet-18 | 0.141 | 1.026 | 5.291 | 0.215 | 0.816 | 0.945 | 0.979 |
| Godard et al. | ResNet-18 | 0.115 | 0.903 | 4.863 | 0.193 | 0.877 | 0.959 | 0.981 |
| Kim et al. | ResNet-50 | 0.123 | 0.797 | 4.727 | 0.193 | 0.854 | 0.960 | 0.984 |
| Johnston et al. | ResNet-18 | 0.111 | 0.941 | 4.817 | 0.189 | 0.885 | 0.961 | 0.981 |
| Johnston et al. | ResNet-101 | 0.106 | 0.861 | 4.699 | 0.185 | 0.889 | 0.962 | 0.982 |
| Ours | ResNet-18 | 0.114 | 0.825 | 4.706 | 0.191 | 0.877 | 0.960 | 0.982 |
| Ours | DeResNet-50 | 0.108 | 0.737 | 4.562 | 0.187 | 0.883 | 0.961 | 0.982 |
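For readers unfamiliar with the column names, the sketch below shows how such metrics are commonly computed in the monocular depth literature. It assumes that ARD and SRD denote the absolute and squared relative difference and that the delta columns are threshold accuracies. The function name `compute_depth_errors` and the dictionary keys are illustrative; this is not necessarily the code used by `evaluate_depth.py`.

```python
import numpy as np


def compute_depth_errors(gt, pred):
    """Return common depth metrics for matching arrays of positive depths.

    Assumed definitions (standard in the literature, not taken from this repo):
    lower is better for ard/srd/rmse/rmse_log, higher is better for d1/d2/d3.
    """
    gt = np.asarray(gt, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)

    # Threshold accuracy: fraction of pixels with max(gt/pred, pred/gt) < 1.25^k
    ratio = np.maximum(gt / pred, pred / gt)
    d1 = float((ratio < 1.25).mean())
    d2 = float((ratio < 1.25 ** 2).mean())
    d3 = float((ratio < 1.25 ** 3).mean())

    diff = gt - pred
    ard = float(np.mean(np.abs(diff) / gt))      # absolute relative difference
    srd = float(np.mean(diff ** 2 / gt))         # squared relative difference
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    rmse_log = float(np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2)))

    return {"ard": ard, "srd": srd, "rmse": rmse, "rmse_log": rmse_log,
            "d1": d1, "d2": d2, "d3": d3}


if __name__ == "__main__":
    # Toy example: two pixels, one predicted at half its true depth.
    print(compute_depth_errors([2.0, 4.0], [1.0, 4.0]))
```

In practice, the metrics are evaluated only on pixels with valid ground truth, so the inputs would be the masked, flattened depth values rather than full images.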

Revisiting Self-Supervised Monocular Depth Estimation

Ue-Hwan Kim, Jong-Hwan Kim

arXiv preprint

If you find this project helpful, please consider citing this project in your publications. The following is the BibTeX of our work.

@article{kim2020a,
  title={Revisiting Self-Supervised Monocular Depth Estimation},
  author={Kim, Ue-Hwan and Kim, Jong-Hwan},
  journal={arXiv preprint arXiv:2103.12496},
  year={2021}
}

Notification

This repo is under construction... The full code will be available soon!

Installation

We implemented and tested our framework on Ubuntu 18.04/16.04 with Python >= 3.6. We highly recommend setting up the development environment with Anaconda.

The environment can then be set up as follows:

conda create --name rmd python=3.7

conda activate rmd

conda install -c pytorch pytorch=1.4 torchvision cudatoolkit=10.1

conda install -c conda-forge tensorboard opencv

conda install -c anaconda scikit-image

pip install efficientnet_pytorch

git clone https://github.com/Uehwan/rmd.git

cd ./rmd/DCNv2

python setup.py build develop

Training

Pre-Trained Models

Coming soon.

Evaluation

Pre-Computed Results

Coming soon.

Depth Evaluation

First of all, you need to export the ground-truth depth maps as follows:

python export_gt_depth.py --data_path kitti_data --split eigen

Then, you can measure the depth estimation performance as follows (you need to specify the model architecture and other training options):

python evaluate_depth.py --load_weights_folder ./logs/model_name/models/weights_19/ --depth_repr softplus --data_path kitti_data --motion_map --num_layers 180

Pose Evaluation

For pose evaluation, you first need to prepare the KITTI odometry dataset (color, 65GB). The dataset includes the ground truth poses as zip files.

You can either convert the png images to jpg images or pass the file extension as a parameter to the dataset class.
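If you choose the conversion route, a short script along the following lines could do it. This is only a sketch: the helper names `jpg_path_for` and `convert_png_to_jpg`, the JPEG quality of 92, and the `kitti_odom` root folder are assumptions for illustration, not part of this repository. It uses OpenCV, which the installation steps above already include.

```python
from pathlib import Path


def jpg_path_for(png_path):
    """Return the .jpg path that sits next to the given .png file."""
    return Path(png_path).with_suffix(".jpg")


def convert_png_to_jpg(root, quality=92, remove_png=False):
    """Convert every .png under `root` to .jpg and return the number converted.

    `quality` and `remove_png` are illustrative defaults, not repo settings.
    """
    # Imported lazily so the path helper above works without OpenCV installed.
    import cv2

    converted = 0
    for png in sorted(Path(root).rglob("*.png")):
        image = cv2.imread(str(png))
        if image is None:  # unreadable or corrupt file: skip it
            continue
        ok = cv2.imwrite(str(jpg_path_for(png)), image,
                         [cv2.IMWRITE_JPEG_QUALITY, quality])
        if ok:
            converted += 1
            if remove_png:
                png.unlink()
    return converted


if __name__ == "__main__":
    # Hypothetical dataset root; adjust to where the odometry data lives.
    print(convert_png_to_jpg("kitti_odom"))
```

JPEG compression is lossy, so keeping the original png files (the default here) makes it possible to redo the conversion with a different quality setting.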

For evaluation, run the following:

python evaluate_pose.py --eval_split odom_9 --load_weights_folder ./logs/model_name/models/weights_19 --data_path kitti_odom/

python evaluate_pose.py --eval_split odom_10 --load_weights_folder ./logs/model_name/models/weights_19 --data_path kitti_odom/

Acknowledgments

We base our project on the following repositories

This work was supported by the Institute for Information & communications Technology Promotion (IITP) grant funded by the Korea government (MSIT) (No.2020-0-00440, Development of Artificial Intelligence Technology that Continuously Improves Itself as the Situation Changes in the Real World).