Official implementation of the SIGGRAPH Asia 2024 paper "Portrait Video Editing Empowered by Multimodal Generative Priors". This repository contains the code, data, and released pretrained models.


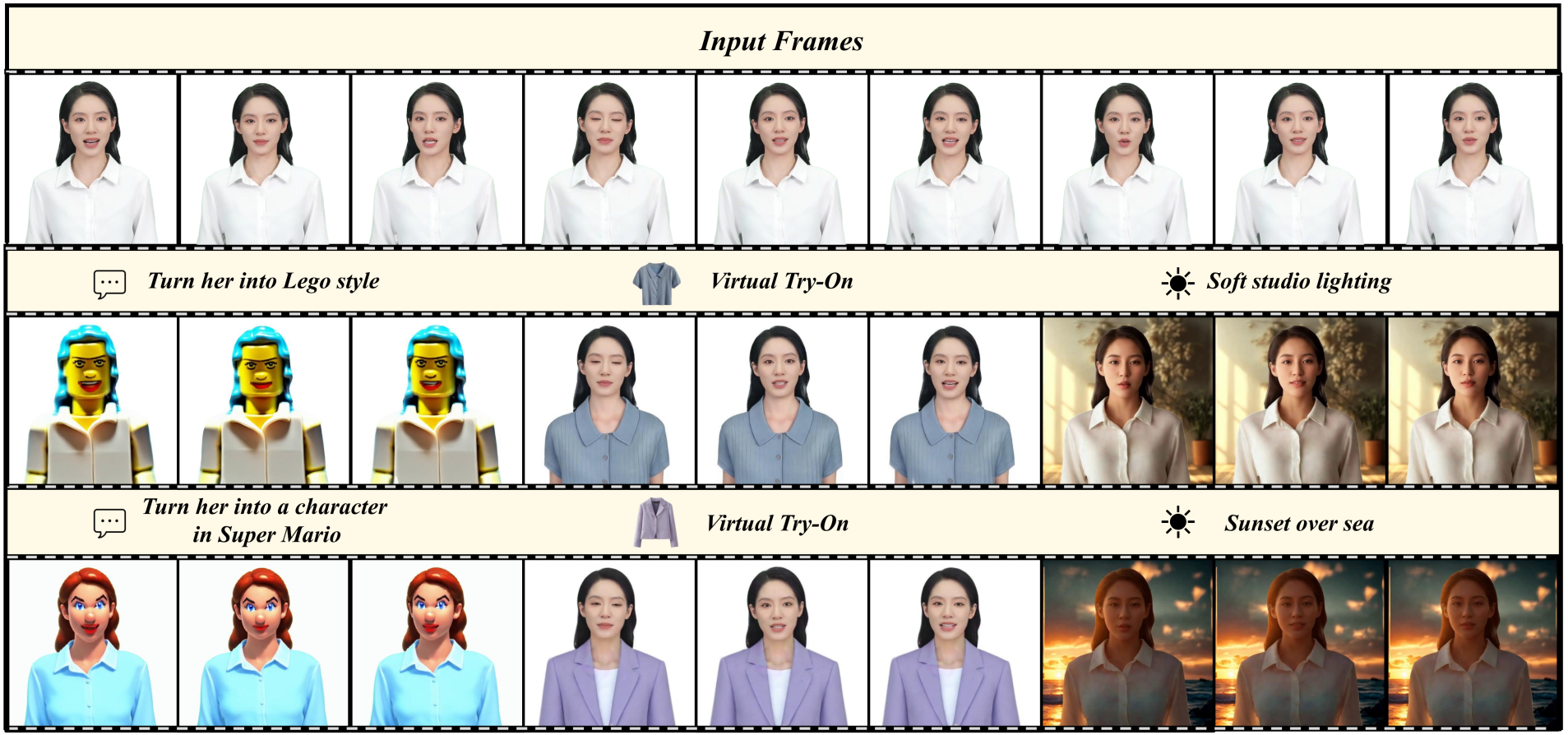

PortraitGen is a powerful portrait video editing method that achieves consistent and expressive stylization with multimodal prompts. Given a monocular RGB video, our model can perform high-quality text-driven editing, image-driven editing, and relighting.


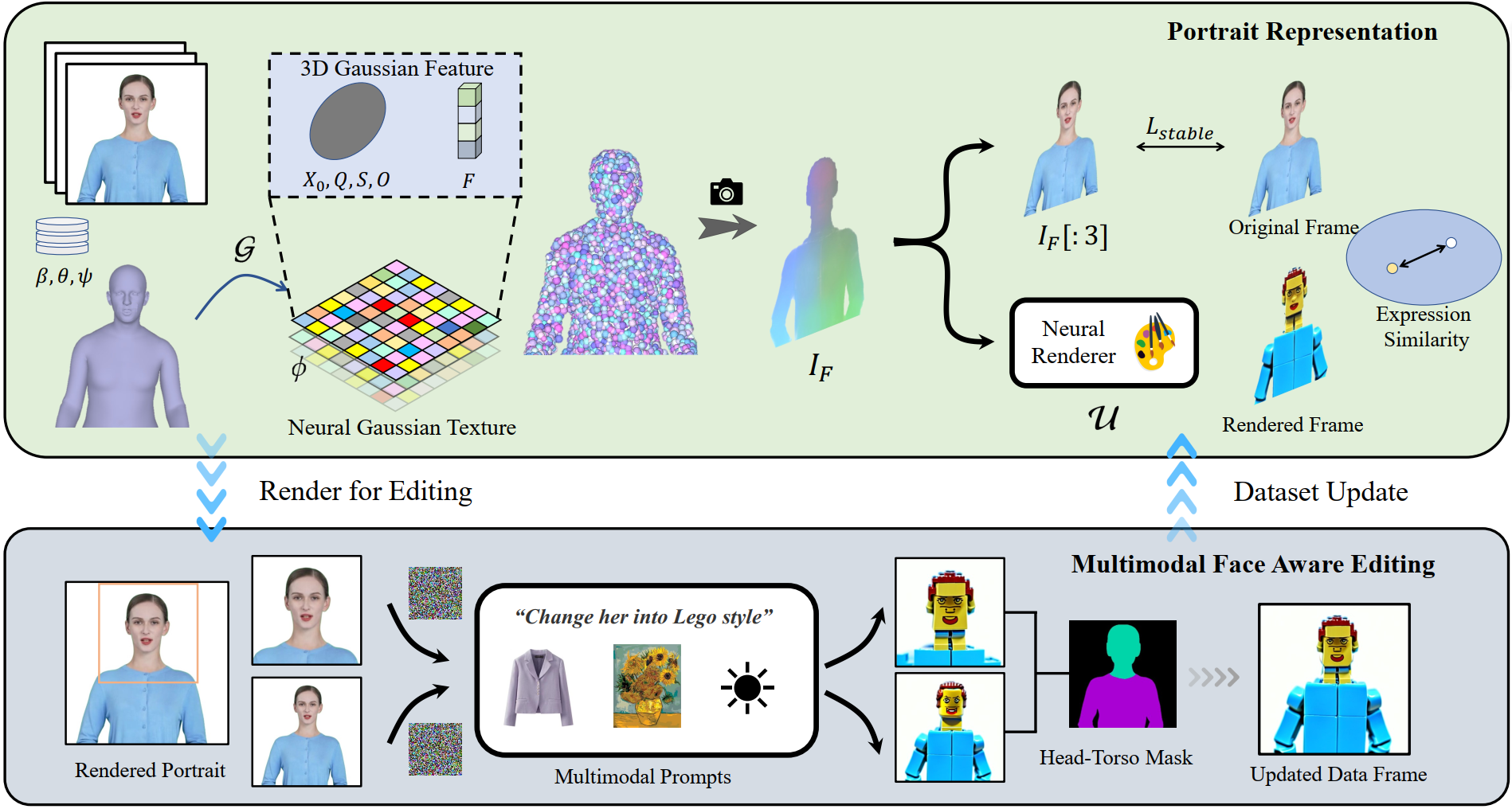

We first track the SMPL-X coefficients of the given monocular video and then use a Neural Gaussian Texture mechanism to obtain a 3D Gaussian feature field. These neural Gaussians are then splatted to render portrait images. For portrait editing, we apply an iterative dataset update strategy and propose a Multimodal Face Aware Editing module that enhances expression quality and preserves personalized facial structures.
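For readers new to splatting: in 3D Gaussian splatting, each pixel is formed by blending the depth-sorted Gaussians that cover it from front to back. Each Gaussian is weighted by its opacity times the transmittance left over by the Gaussians in front of it. With neural Gaussians, the blended quantity is a feature vector instead of an RGB color, and the resulting feature map is then turned into the final image (that decoding step is not shown). The plain-Python sketch below covers only the per-pixel blending step, under these assumptions. `composite_features` is a hypothetical helper, not code from diff_gaussian_rasterization_fea, and the early-termination threshold is an illustrative choice.

```python
from typing import List, Sequence, Tuple


def composite_features(
    splats: Sequence[Tuple[float, Sequence[float]]],
    min_transmittance: float = 1e-4,  # illustrative early-stop threshold
) -> List[float]:
    """Blend per-Gaussian feature vectors at one pixel, front to back.

    `splats` holds (alpha, feature) pairs sorted from nearest to farthest,
    where alpha is the Gaussian's opacity evaluated at this pixel.
    Computes F = sum_i f_i * alpha_i * prod_{j<i} (1 - alpha_j).
    """
    if not splats:
        raise ValueError("at least one splat is required")
    dim = len(splats[0][1])
    out = [0.0] * dim
    transmittance = 1.0  # share of the pixel not yet covered by nearer splats
    for alpha, feature in splats:
        if len(feature) != dim:
            raise ValueError("all feature vectors must have the same length")
        weight = alpha * transmittance
        for k in range(dim):
            out[k] += weight * feature[k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # remaining splats are effectively hidden
    return out
```

For example, two half-transparent splats with one-hot features `[1.0, 0.0]` and `[0.0, 1.0]` give `[0.5, 0.25]`. The farther splat only receives the 50% transmittance left by the nearer one.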

Create the environment:

conda create -n portraitgen python=3.9

conda activate portraitgen

pip install -r requirements.txt

Install PyTorch and torchvision according to your OS and compute platform.

Install nvdiffrast:

git clone https://github.com/NVlabs/nvdiffrast.git

cd nvdiffrast

pip install .

Install EMOCA according to its README.

Install simple-knn.

Install the neural feature splatting module:

cd diff_gaussian_rasterization_fea

pip install -e .
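Once the installation steps above are done, a quick sanity check can confirm that the compiled extensions are importable before you run any script. The sketch below uses only the standard library (`importlib.util.find_spec`), so it does not load CUDA. The module names are assumptions. `simple_knn` and `nvdiffrast` are the import names those projects normally use, while `diff_gaussian_rasterization_fea` is guessed from the folder name. EMOCA is left out because its import name is not given here.

```python
import importlib.util
from typing import Dict, Iterable

# Assumed top-level import names; adjust if a package installs under another name.
DEFAULT_MODULES = (
    "torch",
    "torchvision",
    "nvdiffrast",
    "simple_knn",
    "diff_gaussian_rasterization_fea",  # guessed from the folder name
)


def check_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it.

    find_spec on a top-level name only searches for the module and does not
    execute it, so heavy CUDA extensions are not loaded by this check.
    """
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{'ok     ' if ok else 'MISSING'}  {name}")
```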

Download the SMPL-X 2020 models into code/dmm_models/smplx/SMPLX2020. The required files are:

MANO_SMPLX_vertex_ids.pkl

pts51_bary_coords.txt

pts51_faces_idx.txt

SMPLX_FEMALE.npz

SMPL-X__FLAME_vertex_ids.npy

SMPLX_MALE.npz

SMPLX_NEUTRAL_bc.npz

SMPLX_NEUTRAL.npz

SMPLX_NEUTRAL.pkl

SMPLX_to_J14.pkl

wflw_to_pts51.txt
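A single missing model file often surfaces only later as a load error, so it can help to verify the folder up front. This minimal sketch checks the file list above against code/dmm_models/smplx/SMPLX2020. It assumes it is run from the repository root. The helper name `missing_smplx_files` is hypothetical, and the check only tests that each file exists, not that it is the correct version.

```python
from pathlib import Path
from typing import List

# Relative to the repository root (assumed to be the working directory).
SMPLX_DIR = Path("code/dmm_models/smplx/SMPLX2020")

REQUIRED_FILES = [
    "MANO_SMPLX_vertex_ids.pkl",
    "pts51_bary_coords.txt",
    "pts51_faces_idx.txt",
    "SMPLX_FEMALE.npz",
    "SMPL-X__FLAME_vertex_ids.npy",
    "SMPLX_MALE.npz",
    "SMPLX_NEUTRAL_bc.npz",
    "SMPLX_NEUTRAL.npz",
    "SMPLX_NEUTRAL.pkl",
    "SMPLX_to_J14.pkl",
    "wflw_to_pts51.txt",
]


def missing_smplx_files(model_dir: Path = SMPLX_DIR) -> List[str]:
    """Return the required file names that are not present in model_dir."""
    return [name for name in REQUIRED_FILES if not (model_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_smplx_files()
    if missing:
        print(f"Missing from {SMPLX_DIR}:")
        for name in missing:
            print(f"  {name}")
    else:
        print("All required SMPL-X files are present.")
```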

Download our preprocessed dataset and unzip it to ./testdataset, or organize your own data using the same folder structure.

Then run run_recon.sh to reconstruct a 3D Gaussian portrait:

bash run_recon.sh GPUID "IDNAME"
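To reconstruct several subjects in sequence, you can wrap the command in a small driver. This sketch makes two assumptions about the data layout rather than relying on documented behavior. First, each subfolder of ./testdataset is one subject whose folder name is the IDNAME expected by run_recon.sh. Second, GPUID is a CUDA device index. By default it does a dry run that only prints the commands.

```python
import shlex
import subprocess
from pathlib import Path
from typing import List


def recon_commands(gpu_id: int, dataset_dir: Path = Path("testdataset")) -> List[List[str]]:
    """Build one run_recon.sh command per subject folder in dataset_dir.

    Hypothetical layout: every subfolder name is assumed to be a valid IDNAME.
    """
    id_names = sorted(p.name for p in dataset_dir.iterdir() if p.is_dir())
    return [["bash", "run_recon.sh", str(gpu_id), name] for name in id_names]


def run_all(gpu_id: int, dataset_dir: Path = Path("testdataset"), dry_run: bool = True) -> None:
    for cmd in recon_commands(gpu_id, dataset_dir):
        print(shlex.join(cmd))  # shell-quoted, so names with spaces stay intact
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    root = Path("testdataset")
    if root.is_dir():
        run_all(gpu_id=0, dataset_dir=root)
    else:
        print(f"{root} not found; download and unzip the dataset first")
```

Set `dry_run=False` to launch the runs one after another. Because of `check=True`, the loop stops at the first reconstruction that exits with an error.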

Alternatively, you can use our pretrained portrait models to run editing directly. After downloading the dataset and models, run:

python move_ckpt.py

rm -rf ./pretrained

We use InstructPix2Pix as the 2D editor.

Then run run_edit_ip2p.sh to edit with an instruction-style prompt:

bash run_edit_ip2p.sh GPUID "IDNAME" "prompt"
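For context on the 2D editor: InstructPix2Pix conditions its denoiser on both the input frame and the text instruction. At sampling time, it combines three noise predictions using separate image and text guidance scales, as described in the InstructPix2Pix paper. The plain-Python sketch below shows that combination on flattened noise vectors. It restates the published formula and is not code from this repository. The default scale values are illustrative only, and how run_edit_ip2p.sh sets the two scales is not stated here.

```python
from typing import List, Sequence


def ip2p_guided_noise(
    eps_uncond: Sequence[float],  # e(z_t, no image, no text)
    eps_image: Sequence[float],   # e(z_t, image, no text)
    eps_full: Sequence[float],    # e(z_t, image, text)
    image_scale: float = 1.5,     # s_I: how closely to follow the input frame
    text_scale: float = 7.5,      # s_T: how strongly to follow the instruction
) -> List[float]:
    """Combine three noise predictions with dual classifier-free guidance:

    e = e_uncond + s_I * (e_image - e_uncond) + s_T * (e_full - e_image)
    """
    if not (len(eps_uncond) == len(eps_image) == len(eps_full)):
        raise ValueError("noise predictions must have the same length")
    return [
        u + image_scale * (i - u) + text_scale * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]
```

With both scales set to 1, the terms telescope and the result equals the fully conditioned prediction. Raising `text_scale` pushes the edit further toward the instruction, and raising `image_scale` keeps it closer to the original frame.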

We use Neural Style Transfer for image-driven style transfer. First, clone the repository into ./code/neuralstyle:

git clone https://github.com/tjwhitaker/a-neural-algorithm-of-artistic-style.git ./code/neuralstyle

Then run:

bash run_edit_style.sh GPUID "IDNAME" /your/reference/image/path

We have prepared some reference style images in ./ref_image.
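The style loss in A Neural Algorithm of Artistic Style compares Gram matrices, which hold the channel-to-channel correlations of CNN feature maps, between the generated image and the reference image. The sketch below computes that loss in plain Python on features that have already been extracted. Feature extraction with a pretrained VGG network is not shown. The normalization used here (divide by the number of spatial positions, then average the squared differences) is one common convention and may differ from the cloned repository.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def gram_matrix(features: Sequence[Sequence[float]]) -> Matrix:
    """features[c] is channel c's activation map flattened to length H*W.

    Returns G[i][j] = (1 / (H*W)) * sum_k F[i][k] * F[j][k].
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features] for fi in features]


def style_loss(generated: Sequence[Sequence[float]], style: Sequence[Sequence[float]]) -> float:
    """Mean squared difference between the Gram matrices of one layer."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    if len(s) != c:
        raise ValueError("both feature sets need the same number of channels")
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)
```

Because the Gram matrix sums over spatial positions, it discards where a feature occurs and keeps only which features co-occur. This is why it captures texture and color statistics rather than layout.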

We use AnyDoor to change the subject's clothes.

First, clone AnyDoor:

git clone https://github.com/ali-vilab/AnyDoor.git ./code/anydoor

Then install the packages listed in its requirements.txt and download its released models.

Finally, run:

bash run_edit_anydoor.sh GPUID "IDNAME" /your/reference/image/path

We have prepared some reference clothing images in ./ref_image.

We use IC-Light for relighting. First, clone IC-Light:

git clone https://github.com/lllyasviel/IC-Light ./code/ReLight

Then run:

bash run_edit_relight.sh GPUID "IDNAME" BG prompt

BG is the direction of the light, and prompt is a text description of the lighting.

You can also use relight.py to generate background images from the prompts.

If you find our paper useful for your work, please cite:

@inproceedings{Gao2024PortraitGen,

title = {Portrait Video Editing Empowered by Multimodal Generative Priors},

author = {Xuan Gao and Haiyao Xiao and Chenglai Zhong and Shimin Hu and Yudong Guo and Juyong Zhang},

booktitle = {ACM SIGGRAPH Asia Conference Proceedings},

year = {2024},

}