What If We Recaption Billions of Web Images with LLaMA-3?

Xianhang Li*, Haoqin Tu*, Mude Hui*, Zeyu Wang*, Bingchen Zhao*, Junfei Xiao, Sucheng Ren, Jieru Mei, Qing Liu, Huangjie Zheng, Yuyin Zhou, Cihang Xie

UC Santa Cruz, University of Edinburgh, JHU, Adobe, UT Austin

- [🔥June. 12, 2024.] 💥 We are excited to announce the release of Recap-DataComp-1B. Stay tuned for the upcoming release of our models!!!

Star 🌟 us if you find it helpful!!

TL;DR: Recap-DataComp-1B is a large-scale image-text dataset that has been recaptioned using an advanced LLaVA-1.5-LLaMA3-8B model to enhance the alignment and detail of textual descriptions.


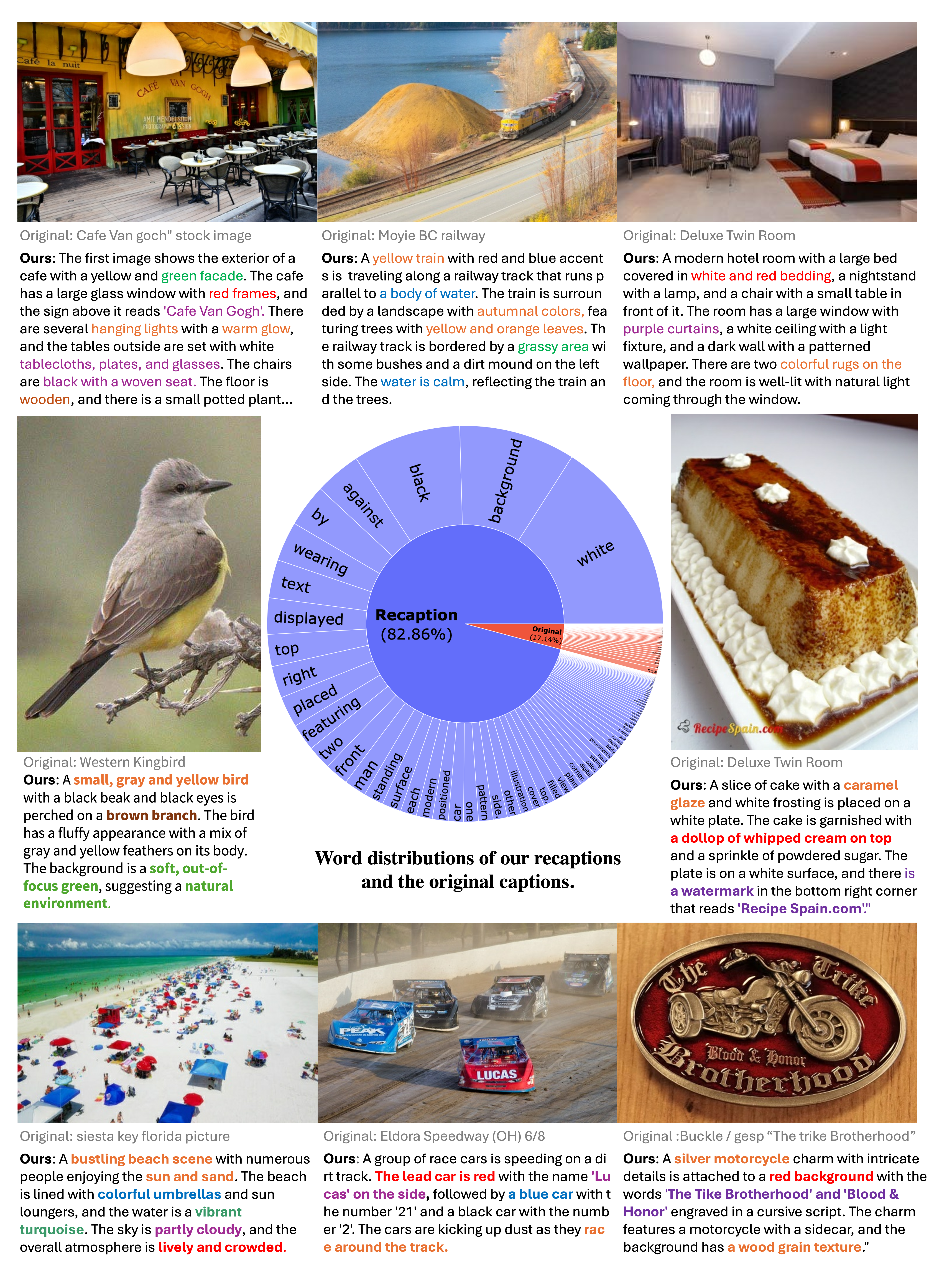

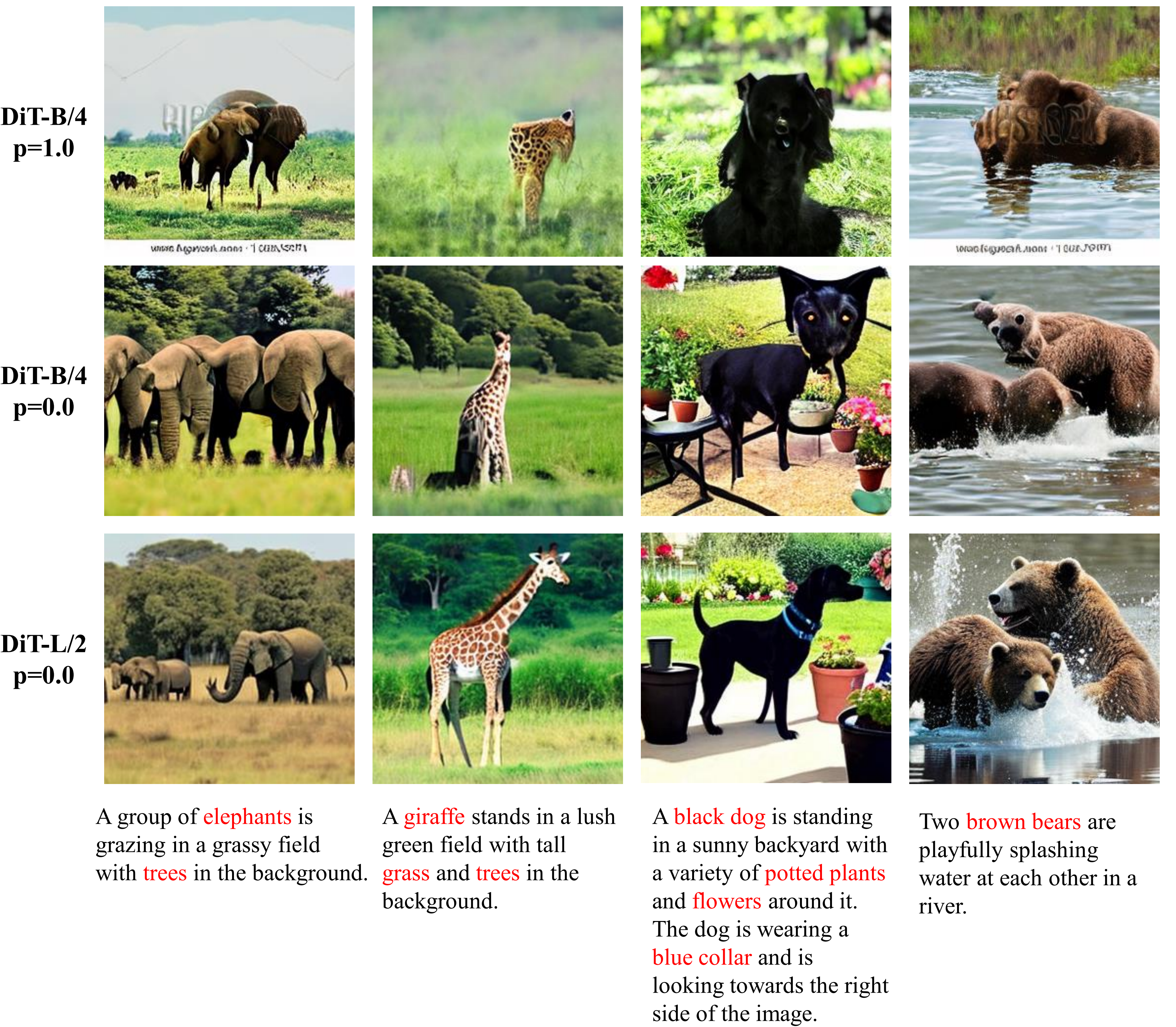

Web-crawled image-text pairs are inherently noisy. Prior studies demonstrate that semantically aligning and enriching textual descriptions of these pairs can significantly enhance model training across various vision-language tasks, particularly text-to-image generation. However, large-scale investigations in this area remain predominantly closed-source. Our paper aims to bridge this community effort, leveraging the powerful and open-sourced LLaMA-3, a GPT-4 level LLM. Our recaptioning pipeline is simple: first, we fine-tune a LLaMA-3-8B powered LLaVA-1.5 and then employ it to recaption ∼1.3 billion images from the DataComp-1B dataset. Our empirical results confirm that this enhanced dataset, Recap-DataComp-1B, offers substantial benefits in training advanced vision-language models. For discriminative models like CLIP, we observe enhanced zero-shot performance in cross-modal retrieval tasks. For generative models like text-to-image Diffusion Transformers, the generated images exhibit a significant improvement in alignment with users’ text instructions, especially in following complex queries.

We are pleased to announce the release of our recaptioned datasets, including Recap-DataComp-1B and Recap-COCO-30K, as well as our caption model, LLaVA-1.5-LLaMA3-8B. Stay tuned for the upcoming release of our CLIP and T2I models!

| Dataset | #Samples | URL |
|---|---|---|
| Recap-DataComp-1B | 1.24B | https://huggingface.co/datasets/UCSC-VLAA/Recap-DataComp-1B |
| Recap-COCO-30K | 30.5K | https://huggingface.co/datasets/UCSC-VLAA/Recap-COCO-30K |

| Model | Type | URL |
|---|---|---|
| LLaVA-1.5-LLaMA3-8B | Our caption model | https://huggingface.co/tennant/llava-llama-3-8b-hqedit |
| Recap-CLIP | CLIP | https://huggingface.co/UCSC-VLAA/ViT-L-16-HTxt-Recap-CLIP |
| Recap-DiT | text2image | incoming |

- Download all the shards, which contain the URLs and captions, from Hugging Face.

- Use the img2dataset tool to download the images and captions.

- Example script:

      img2dataset --url_list Recap-DataComp-1B/train_data --input_format "parquet" \
          --url_col "url" --caption_col "re_caption" --output_format webdataset \
          --output_folder recap_datacomp_1b_data --processes_count 16 --thread_count 128 \
          --save_additional_columns '["org_caption"]' --enable_wandb True

- [ ] Model Release
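The download steps listed above (fetch the parquet shards, then run img2dataset) can also be driven from Python. The following is a minimal sketch, not an official script from this repository: it assumes the `huggingface_hub` and `img2dataset` packages are installed, the helper names `build_img2dataset_kwargs` and `fetch_and_download` are hypothetical, and the repository id and `train_data` folder are taken from the table and example script above. The keyword arguments mirror the CLI flags of the example script.

```python
from pathlib import Path


def build_img2dataset_kwargs(shard_dir, output_dir, processes=16, threads=128,
                             enable_wandb=True):
    """Mirror the CLI flags of the example script as keyword arguments.

    Hypothetical helper: the column names ("url", "re_caption",
    "org_caption") come from the example script in this README.
    """
    return {
        "url_list": str(Path(shard_dir)),
        "input_format": "parquet",
        "url_col": "url",
        "caption_col": "re_caption",          # recaptioned text
        "output_format": "webdataset",
        "output_folder": str(Path(output_dir)),
        "processes_count": processes,
        "thread_count": threads,
        "save_additional_columns": ["org_caption"],  # keep the original caption
        "enable_wandb": enable_wandb,
    }


def fetch_and_download(local_root="Recap-DataComp-1B",
                       output_dir="recap_datacomp_1b_data"):
    """Step 1: fetch the shards; step 2: download images with img2dataset."""
    # Imported lazily so the helper above can be used without these packages.
    from huggingface_hub import snapshot_download
    from img2dataset import download

    # Assumption: a full snapshot of the dataset repository is wanted.
    snapshot_download(
        repo_id="UCSC-VLAA/Recap-DataComp-1B",
        repo_type="dataset",
        local_dir=local_root,
    )
    # Assumption: the parquet shards live under <local_root>/train_data,
    # as in the --url_list argument of the example script.
    download(**build_img2dataset_kwargs(Path(local_root) / "train_data", output_dir))
```

Calling `fetch_and_download()` would start the full download, which is very large; passing smaller `processes` and `threads` values to `build_img2dataset_kwargs`, or pointing `shard_dir` at a folder holding only a few shards, is one way to try the pipeline on a subset first.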

@article{li2024recaption,
  title={What If We Recaption Billions of Web Images with LLaMA-3?},
  author={Xianhang Li and Haoqin Tu and Mude Hui and Zeyu Wang and Bingchen Zhao and Junfei Xiao and Sucheng Ren and Jieru Mei and Qing Liu and Huangjie Zheng and Yuyin Zhou and Cihang Xie},
  journal={arXiv preprint arXiv:2406.08478},
  year={2024}
}

This work is partially supported by a gift from Adobe, the TPU Research Cloud (TRC) program, the Google Cloud Research Credits program, the AWS Cloud Credit for Research program, the Edinburgh International Data Facility (EIDF), and the Data-Driven Innovation Programme at the University of Edinburgh.