This repository contains the official implementation of "MixCon3D" in our paper.

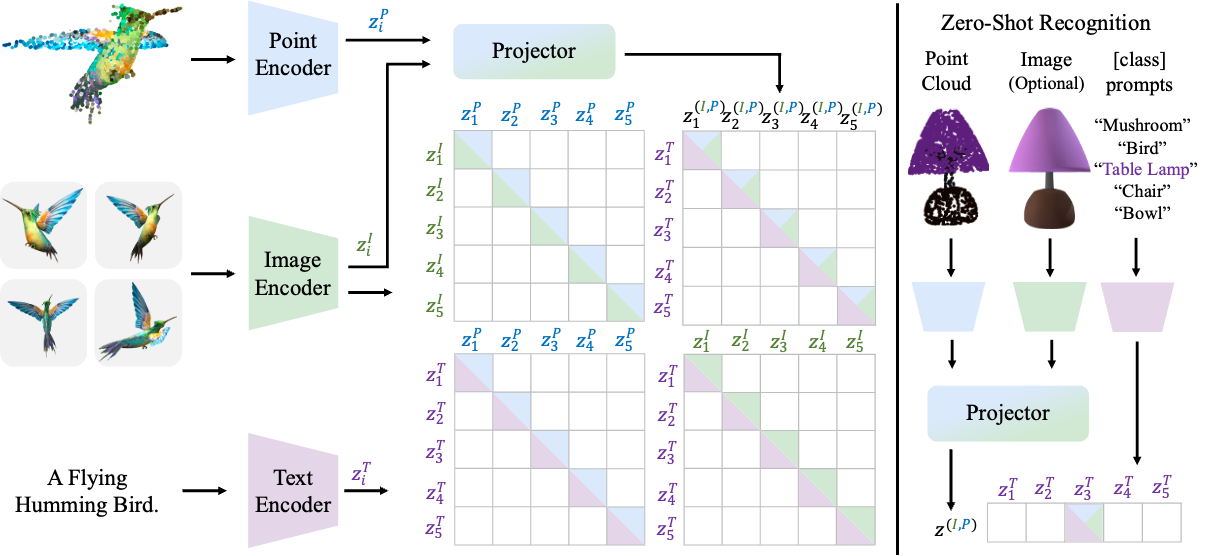

Overview of MixCon3D. We integrate image and point cloud information to form a holistic 3D instance-level representation for cross-modal alignment.

[2023.11.2] We release the training code of MixCon3D.

Please refer to this instruction for step-by-step installation guidance. It covers both the required packages and some helpful debugging tips.

First, modify the path in download_data.py. Then, execute the following command to download the data from Hugging Face:

    python3 download_data.py

The datasets used for experiments are the same as OpenShape. Please refer to OpenShape for more details of the data.

To train the PointBERT model with MixCon3D, change [data_path] to your local data path, then run the following command:

- On an 8-GPU server, training with batch size 2048:

      torchrun --nproc_per_node=8 src/main.py --ngpu 8 dataset.folder=data_path dataset.train_batch_size=256 model.name=PointBERT model.scaling=3 model.use_dense=True --trial_name MixCon3D --config src/configs/train.yaml

- If GPU memory is insufficient, lower train_batch_size and set the number of accumulation iterations with accum_freq as follows (equivalent batch size = train_batch_size * accum_freq):

      torchrun --nproc_per_node=8 src/main.py --ngpu 8 dataset.folder=data_path dataset.train_batch_size=128 dataset.accum_freq=2 model.name=PointBERT model.scaling=3 model.use_dense=True --trial_name MixCon3D --config src/configs/train.yaml

To train on different datasets, use the following commands:

(Ensemble_no_LVIS)

    torchrun --nproc_per_node=8 src/main.py --ngpu 8 dataset.folder=data_path dataset.train_split=meta_data/split/train_no_lvis.json dataset.train_batch_size=128 dataset.accum_freq=2 model.name=PointBERT model.scaling=3 model.use_dense=True --trial_name MixCon3D --config src/configs/train.yaml

(ShapeNet_only)

    torchrun --nproc_per_node=8 src/main.py --ngpu 8 dataset.folder=data_path dataset.train_split=meta_data/split/ablation/train_shapenet_only.json dataset.train_batch_size=128 dataset.accum_freq=1 model.name=PointBERT model.scaling=3 model.use_dense=True --trial_name MixCon3D --config src/configs/train.yaml

We use wandb for logging.
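
When choosing `dataset.train_batch_size` and `dataset.accum_freq` for your hardware, it may help to check the resulting batch size first. The sketch below is not part of this codebase, and both helper names are hypothetical. It assumes that `dataset.train_batch_size` is the per-GPU batch size, which is consistent with the first command (8 GPUs x 256 = 2048) but is not confirmed by the configuration itself.

```python
# Minimal sketch of the batch-size arithmetic used in the training commands.
# Assumption: dataset.train_batch_size is a per-GPU value, so the total is
# multiplied by the number of GPUs. The helper names are hypothetical.


def equivalent_batch_size(train_batch_size: int, accum_freq: int = 1, num_gpus: int = 1) -> int:
    """Number of samples that contribute to one optimizer step."""
    if min(train_batch_size, accum_freq, num_gpus) < 1:
        raise ValueError("all arguments must be positive integers")
    return train_batch_size * accum_freq * num_gpus


def accum_freq_for(target_per_gpu: int, train_batch_size: int) -> int:
    """Accumulation steps needed to reach a target per-GPU batch size."""
    if train_batch_size < 1 or target_per_gpu % train_batch_size != 0:
        raise ValueError("target_per_gpu must be a positive multiple of train_batch_size")
    return target_per_gpu // train_batch_size


if __name__ == "__main__":
    # First command: train_batch_size=256 on 8 GPUs, no accumulation.
    print(equivalent_batch_size(256, accum_freq=1, num_gpus=8))
    # Low-memory command: train_batch_size=128 with accum_freq=2 on 8 GPUs.
    print(equivalent_batch_size(128, accum_freq=2, num_gpus=8))
    # If only 64 samples fit per GPU, accumulation steps to match 256 per GPU.
    print(accum_freq_for(256, 64))
```

Under the same assumption, the ShapeNet_only command (`train_batch_size=128`, `accum_freq=1`, 8 GPUs) corresponds to a batch size of 1024.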

This codebase is based on OpenShape, timm, and PointBERT. Thanks to the authors for their awesome contributions! This work is partially supported by the TPU Research Cloud (TRC) program and the Google Cloud Research Credits program.

    @inproceedings{gao2023mixcon3d,
      title = {Sculpting Holistic 3D Representation in Contrastive Language-Image-3D Pre-training},
      author = {Yipeng Gao and Zeyu Wang and Wei-Shi Zheng and Cihang Xie and Yuyin Zhou},
      booktitle = {CVPR},
      year = {2024},
    }

Questions and discussions are welcome via gaoyp23@mail2.sysu.edu.cn or by opening an issue here.