
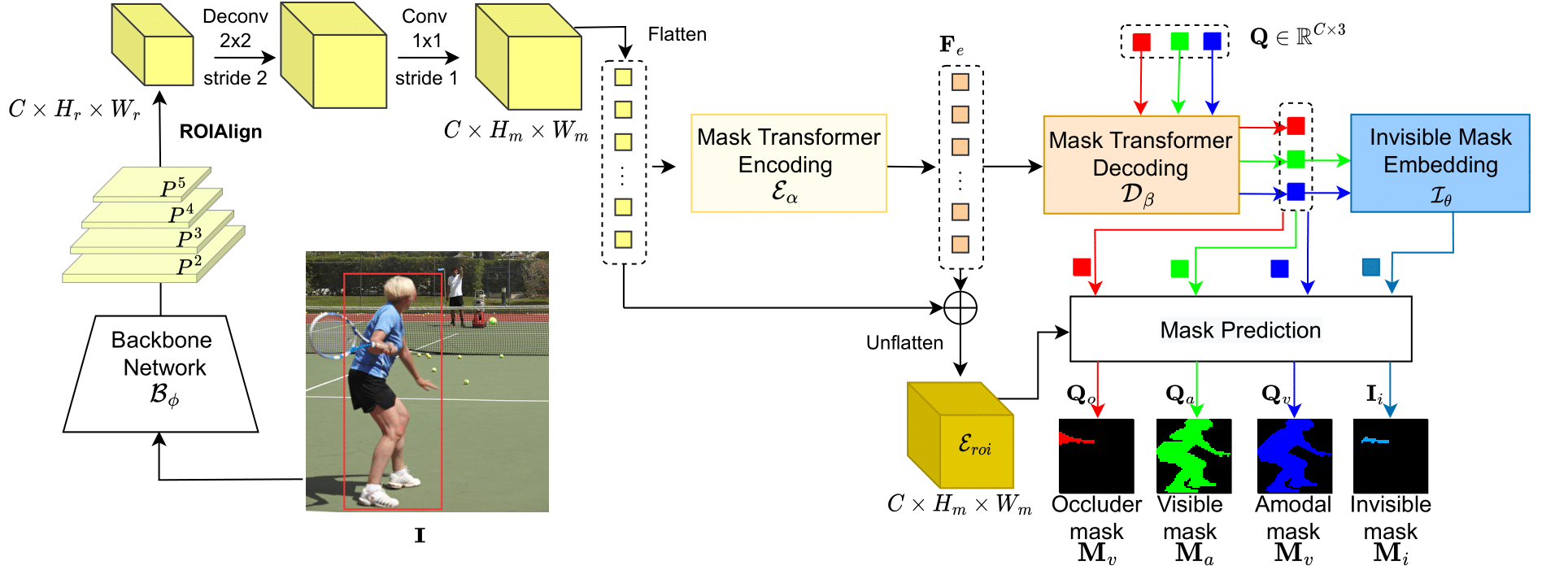

The figure above illustrates our AISFormer architecture. The main implementation of this network can be found here.

- Create a parent project folder

mkdir ~/AmodalSeg

export PROJECT_DIR=~/AmodalSeg

- Conda, PyTorch, and other dependencies

conda create -n aisformer python=3.8 -y

source activate aisformer

conda install pytorch==1.10.0 torchvision==0.11.0 cudatoolkit=11.3 -c pytorch

pip install ninja yacs cython matplotlib tqdm

pip install opencv-python==4.4.0.40

pip install scikit-image

pip install timm==0.4.12

pip install setuptools==59.5.0

pip install torch-dct

- Install cocoapi

cd $PROJECT_DIR/

git clone https://github.com/cocodataset/cocoapi.git

cd cocoapi/PythonAPI

python setup.py build_ext install

- Install AISFormer

cd $PROJECT_DIR/

git clone https://github.com/UARK-AICV/AISFormer

cd AISFormer/

python3 setup.py build develop

- Expected Directory Structure

$PROJECT_DIR/

|-- AISFormer/

|-- cocoapi/
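
Once the steps above finish, it can help to confirm that the pinned versions actually ended up in the environment. The following is a minimal sketch and not part of the AISFormer repository: it compares the pins from the install commands against what `importlib.metadata` reports. The helper name `check_pins` is hypothetical, and the sketch assumes the conda `pytorch` package is visible under the distribution name `torch`.

```python
from importlib import metadata

# Pins copied from the install commands above.
PINNED = {
    "torch": "1.10.0",
    "torchvision": "0.11.0",
    "opencv-python": "4.4.0.40",
    "timm": "0.4.12",
    "setuptools": "59.5.0",
}


def check_pins(pins=PINNED, lookup=metadata.version):
    """Return {name: (wanted, found, ok)}; found is None if not installed."""
    report = {}
    for name, wanted in pins.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            found = None
        # Drop a local build suffix such as "+cu113" before comparing.
        ok = found is not None and found.split("+")[0] == wanted
        report[name] = (wanted, found, ok)
    return report


if __name__ == "__main__":
    for name, (wanted, found, ok) in check_pins().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: wanted {wanted}, found {found} [{status}]")
```

Run it inside the activated `aisformer` environment; any `MISMATCH` line points at a package worth reinstalling with the pinned version.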

Download the images from the KITTI dataset.

The amodal annotations can be found in the KINS dataset.

The D2S Amodal dataset can be found at mvtec-d2sa.

The COCOA dataset annotations are available here (referenced from github.com/YihongSun/Bayesian-Amodal). The COCOA images are the train2014 and val2014 splits of the COCO dataset.

AISFormer supports datasets in COCO format. The layout can look as follows (it need not match exactly, since it depends on the data registration code):

$PROJECT_DIR/

|-- AISFormer/

|-- cocoapi/

|-- data/

|---- datasets/

|------- KINS/

|---------- train_imgs/

|---------- test_imgs/

|---------- annotations/

|------------- train.json

|------------- test.json

|------- D2SA/

|...
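
Because the annotations are expected in COCO format, a quick structural check before registration can catch a wrong or truncated file early. The sketch below is an illustration only: it assumes the standard COCO instance layout (top-level `images`, `annotations`, `categories`), and `summarize_coco` is a hypothetical helper, not part of AISFormer. Amodal datasets may carry additional per-annotation fields, which this check does not inspect.

```python
import json
from collections import Counter

REQUIRED_KEYS = ("images", "annotations", "categories")


def summarize_coco(path):
    """Load a COCO-style JSON file and return basic counts.

    Raises ValueError if a required top-level key is missing or an
    annotation points at an unknown image id.
    """
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)

    missing = [k for k in REQUIRED_KEYS if k not in data]
    if missing:
        raise ValueError(f"missing top-level keys: {missing}")

    image_ids = {img["id"] for img in data["images"]}
    orphans = [a for a in data["annotations"] if a["image_id"] not in image_ids]
    if orphans:
        raise ValueError(f"{len(orphans)} annotations reference unknown images")

    per_category = Counter(a["category_id"] for a in data["annotations"])
    return {
        "images": len(image_ids),
        "annotations": len(data["annotations"]),
        "categories": len(data["categories"]),
        "per_category": dict(per_category),
    }
```

For example, `summarize_coco("/path/to/KINS/annotations/train.json")` returns a small dictionary of counts that can be compared against the dataset's published statistics.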

Then see here for more details on data registration.
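
For orientation, stock detectron2 registers a COCO-format dataset with `register_coco_instances`. The sketch below assumes the same call applies here, which may not hold: the detectron2 copy bundled with AISFormer can ship its own registration code, so the linked details take precedence. The name `kins_dataset_train` comes from the preprocessing command in this README; `DATA_ROOT`, `kins_registration_args`, `register_kins`, and the naming of any other split are hypothetical.

```python
import os

# Hypothetical location; adjust to wherever the datasets were placed.
DATA_ROOT = os.path.expanduser("~/AmodalSeg/data/datasets")


def kins_registration_args(split, data_root=DATA_ROOT):
    """Build (name, metadata, json_file, image_root) for one KINS split.

    Only "kins_dataset_train" appears in this README; the same naming
    pattern for other splits is an assumption.
    """
    kins = os.path.join(data_root, "KINS")
    return (
        f"kins_dataset_{split}",
        {},  # extra metadata; left empty in this sketch
        os.path.join(kins, "annotations", f"{split}.json"),
        os.path.join(kins, f"{split}_imgs"),
    )


def register_kins(split, data_root=DATA_ROOT):
    # Imported lazily so the path helper works without detectron2 installed.
    from detectron2.data.datasets import register_coco_instances

    register_coco_instances(*kins_registration_args(split, data_root))
```

Calling `register_kins("train")` once, before building the data loader, makes the dataset available under its registered name.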

After registering, run the preprocessing script to generate the occluder mask annotations, for example:

python -m detectron2.data.datasets.process_data_amodal \

/path/to/KINS/train.json \

/path/to/KINS/train_imgs \

kins_dataset_train
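
When the same preprocessing has to be repeated for several annotation files, the command above can be wrapped in a small helper. This is a sketch and not part of the repository: `build_preprocess_cmd` and `run_preprocess` are hypothetical names, and the argument order simply mirrors the example command.

```python
import subprocess
import sys

# Module path taken from the example command above.
PREPROCESS_MODULE = "detectron2.data.datasets.process_data_amodal"


def build_preprocess_cmd(json_file, image_dir, dataset_name):
    """Return the argv list for the amodal preprocessing module."""
    return [
        sys.executable, "-m", PREPROCESS_MODULE,
        json_file, image_dir, dataset_name,
    ]


def run_preprocess(json_file, image_dir, dataset_name):
    """Run the preprocessing with the current Python interpreter."""
    cmd = build_preprocess_cmd(json_file, image_dir, dataset_name)
    # check=True raises CalledProcessError if the script exits non-zero.
    subprocess.run(cmd, check=True)
```

Using `sys.executable` keeps the call inside the active conda environment instead of whichever `python` happens to be first on `PATH`.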

The expected layout, including the newly generated annotation file, is as follows:

$PROJECT_DIR/

|-- AISFormer/

|-- cocoapi/

|-- data/

|---- datasets/

|------- KINS/

|---------- train_imgs/

|---------- test_imgs/

|---------- annotations/

|------------- train.json

|------------- train_amodal.json

|------------- test.json

|------- D2SA/

|...
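
A short script can confirm that the KINS part of this tree is in place before training starts. The sketch below is only a convenience check under the layout shown above; `missing_kins_entries` is a hypothetical helper and the list of expected entries covers KINS only.

```python
from pathlib import Path

# Relative paths taken from the KINS part of the tree above.
EXPECTED = (
    "train_imgs",
    "test_imgs",
    "annotations/train.json",
    "annotations/train_amodal.json",
    "annotations/test.json",
)


def missing_kins_entries(kins_root):
    """Return the expected entries that do not exist under kins_root."""
    root = Path(kins_root).expanduser()
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_kins_entries("~/AmodalSeg/data/datasets/KINS")
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("KINS layout looks complete.")
```

If `annotations/train_amodal.json` is the only missing entry, the preprocessing step has most likely not been run yet.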

Configuration files for training AISFormer on each dataset are available here.

To train, test, and run the demo, see the example scripts in scripts/:

- AISFormer R50 on KINS

- AISFormer R50 on D2SA (TBA)

- AISFormer R50 on COCOA-cls (TBA)

This code uses BCNet for dataset mapping with occluders, VRSP-Net for amodal evaluation, and detectron2 for the overall pipeline with the Faster R-CNN meta-architecture.

@article{tran2022aisformer,

title={AISFormer: Amodal Instance Segmentation with Transformer},

author={Tran, Minh and Vo, Khoa and Yamazaki, Kashu and Fernandes, Arthur and Kidd, Michael and Le, Ngan},

journal={arXiv preprint arXiv:2210.06323},

year={2022}

}