A fast & densely stored hashmap and hashset based on robin-hood backward shift deletion for C++17 and later.

The classes ankerl::unordered_dense::map and ankerl::unordered_dense::set are (almost) drop-in replacements of std::unordered_map and std::unordered_set. While they don't have as strong iterator / reference stability guaranties, they are typically much faster.

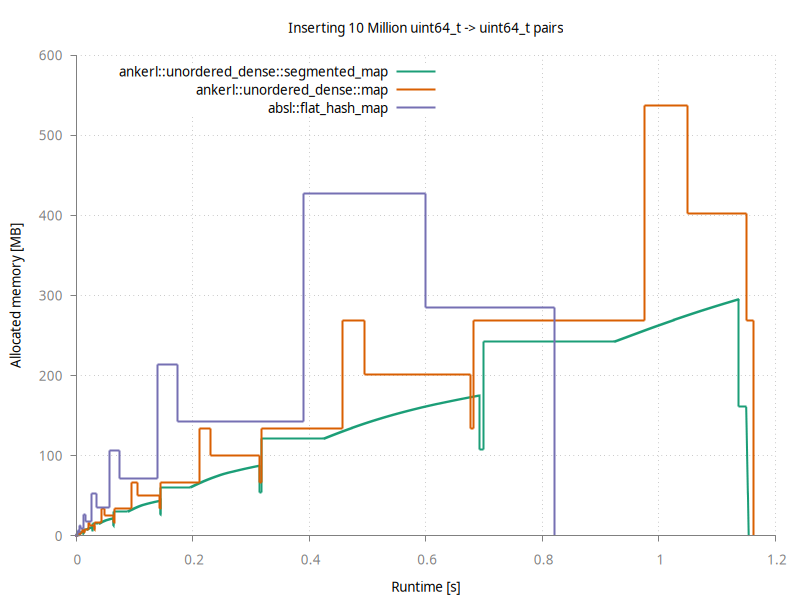

Additionally, there are ankerl::unordered_dense::segmented_map and ankerl::unordered_dense::segmented_set with lower peak memory usage.

- 1. Overview

- 2. Installation

- 3. Extensions

- 4.

segmented_mapandsegmented_set - 5. Design

The chosen design has a few advantages over std::unordered_map:

- Perfect iteration speed - Data is stored in a

std::vector, all data is contiguous! - Very fast insertion & lookup speed, in the same ballpark as

absl::flat_hash_map - Low memory usage

- Full support for

std::allocators, and polymorphic allocators. There areankerl::unordered_dense::pmrtypedefs available - Customizeable storage type: with a template parameter you can e.g. switch from

std::vectortoboost::interprocess::vectoror any other compatible random-access container. - Better debugging: the underlying data can be easily seen in any debugger that can show an

std::vector.

There's no free lunch, so there are a few disadvantages:

- Deletion speed is relatively slow. This needs two lookups: one for the element to delete, and one for the element that is moved onto the newly empty spot.

- no

const Keyinstd::pair<Key, Value> - Iterators are not stable on insert/erase

The default installation location is /usr/local.

Clone the repository and run these commands in the cloned folder:

mkdir build && cd build

cmake ..

cmake --build . --target installConsider setting an install prefix if you do not want to install unordered_dense system wide, like so:

mkdir build && cd build

cmake -DCMAKE_INSTALL_PREFIX:PATH=${HOME}/unordered_dense_install ..

cmake --build . --target installTo make use of the installed library, add this to your project:

find_package(unordered_dense CONFIG REQUIRED)

target_link_libraries(your_project_name unordered_dense::unordered_dense)ankerl::unordered_dense::hash is a fast and high quality hash, based on wyhash. The ankerl::unordered_dense map/set differentiates between hashes of high quality (good avalanching effect) and bad quality. Hashes with good quality contain a special marker:

using is_avalanching = void;This is the cases for the specializations bool, char, signed char, unsigned char, char8_t, char16_t, char32_t, wchar_t, short, unsigned short, int, unsigned int, long, long long, unsigned long, unsigned long long, T*, std::unique_ptr<T>, std::shared_ptr<T>, enum, std::basic_string<C>, and std::basic_string_view<C>.

Hashes that do not contain such a marker are assumed to be of bad quality and receive an additional mixing step inside the map/set implementation.

Consider a simple custom key type:

struct id {

uint64_t value{};

auto operator==(id const& other) const -> bool {

return value == other.value;

}

};The simplest implementation of a hash is this:

struct custom_hash_simple {

auto operator()(id const& x) const noexcept -> uint64_t {

return x.value;

}

};This can be used e.g. with

auto ids = ankerl::unordered_dense::set<id, custom_hash_simple>();Since custom_hash_simple doesn't have a using is_avalanching = void; marker it is considered to be of bad quality and additional mixing of x.value is automatically provided inside the set.

Back to the id example, we can easily implement a higher quality hash:

struct custom_hash_avalanching {

using is_avalanching = void;

auto operator()(id const& x) const noexcept -> uint64_t {

return ankerl::unordered_dense::detail::wyhash::hash(x.value);

}

};We know wyhash::hash is of high quality, so we can add using is_avalanching = void; which makes the map/set directly use the returned value.

Instead of creating a new class you can also specialize ankerl::unordered_dense::hash:

template <>

struct ankerl::unordered_dense::hash<id> {

using is_avalanching = void;

[[nodiscard]] auto operator()(id const& x) const noexcept -> uint64_t {

return detail::wyhash::hash(x.value);

}

};This map/set supports heterogeneous overloads as described in P2363 Extending associative containers with the remaining heterogeneous overloads which is targeted for C++26. This has overloads for find, count, contains, equal_range (see P0919R3), erase (see P2077R2), and try_emplace, insert_or_assign, operator[], at, and insert & emplace for sets (see P2363R3).

For heterogeneous overloads to take affect, both hasher and key_equal need to have the attribute is_transparent set.

Here is an example implementation that's usable with any string types that is convertible to std::string_view (e.g. char const* and std::string):

struct string_hash {

using is_transparent = void; // enable heterogeneous overloads

using is_avalanching = void; // mark class as high quality avalanching hash

[[nodiscard]] auto operator()(std::string_view str) const noexcept -> uint64_t {

return ankerl::unordered_dense::hash<std::string_view>{}(str);

}

};To make use of this hash you'll need to specify it as a type, and also a key_equal with is_transparent like std::equal_to<>:

auto map = ankerl::unordered_dense::map<std::string, size_t, string_hash, std::equal_to<>>();For more information see the examples in test/unit/transparent.cpp.

When an implementation for std::hash of a custom type is available, this is automatically used and assumed to be of bad quality (thus std::hash is used, but an additional mixing step is performed).

When the type has a unique object representation (no padding, trivially copyable), one can just hash the object's memory. Consider a simple class

struct point {

int x{};

int y{};

auto operator==(point const& other) const -> bool {

return x == other.x && y == other.y;

}

};A fast and high quality hash can be easily provided like so:

struct custom_hash_unique_object_representation {

using is_avalanching = void;

[[nodiscard]] auto operator()(point const& f) const noexcept -> uint64_t {

static_assert(std::has_unique_object_representations_v<point>);

return ankerl::unordered_dense::detail::wyhash::hash(&f, sizeof(f));

}

};In addition to the standard std::unordered_map API (see https://en.cppreference.com/w/cpp/container/unordered_map) we have additional API leveraging the fact that we're using a random access container internally:

Extracts the internally used container. *this is emptied.

Exposes the underlying values container.

Discards the internally held container and replaces it with the one passed. Non-unique elements are removed, and the container will be partly reordered when non-unique elements are found.

unordered_dense accepts a custom allocator, but you can also specify a custom container for that template argument. That way it is possible to replace the internally used std::vector with e.g. std::deque or any other container like boost::interprocess::vector. This supports fancy pointers (e.g. offset_ptr), so the container can be used with e.g. shared memory provided by boost::interprocess.

The map/set supports two different bucket types. The default should be good for pretty much everyone.

- Up to 2^32 = 4.29 billion elements.

- 8 bytes overhead per bucket.

- up to 2^63 = 9223372036854775808 elements.

- 12 bytes overhead per bucket.

ankerl::unordered_dense provides a custom container implementation that has lower memory requirements than the default std::vector. Memory is not contiguous, but it can allocate segments without having to reallocate and move all the elements. In summary, this leads to

- Much smoother memory usage, memory usage increases continuously.

- No high peak memory usage.

- Faster insertion because elements never need to be moved to new allocated blocks

- Slightly slower indexing compared to

std::vectorbecause an additional indirection is needed.

Here is a comparison against absl::flat_hash_map and the ankerl::unordered_dense::map when inserting 10 million entries

Abseil is fastest for this simple inserting test, taking a bit over 0.8 seconds. It's peak memory usage is about 430 MB. Note how the memory usage goes down after the last peak; when it goes down to ~290MB it has finished rehashing and could free the previously used memory block.

ankerl::unordered_dense::segmented_map doesn't have these peaks, and instead has a smooth increase of memory usage. Note there are still sudden drops & increases in memory because the indexing data structure needs still needs to increase by a fixed factor. But due to holding the data in a separate container we are able to first free the old data structure, and then allocate a new, bigger indexing structure; thus we do not have peaks.

The map/set has two data structures:

std::vector<value_type>which holds all data. map/set iterators are juststd::vector<value_type>::iterator!- An indexing structure (bucket array), which is a flat array with 8-byte buckets.

Whenever an element is added it is emplace_back to the vector. The key is hashed, and an entry (bucket) is added at the

corresponding location in the bucket array. The bucket has this structure:

struct Bucket {

uint32_t dist_and_fingerprint;

uint32_t value_idx;

};Each bucket stores 3 things:

- The distance of that value from the original hashed location (3 most significant bytes in

dist_and_fingerprint) - A fingerprint; 1 byte of the hash (lowest significant byte in

dist_and_fingerprint) - An index where in the vector the actual data is stored.

This structure is especially designed for the collision resolution strategy robin-hood hashing with backward shift deletion.

The key is hashed and the bucket array is searched if it has an entry at that location with that fingerprint. When found, the key in the data vector is compared, and when equal the value is returned.

Since all data is stored in a vector, removals are a bit more complicated:

- First, lookup the element to delete in the index array.

- When found, replace that element in the vector with the last element in the vector.

- Update two locations in the bucket array: First remove the bucket for the removed element

- Then, update the

value_idxof the moved element. This requires another lookup.