- 1. Table of Contents

- 2. Objective

- 3. Model

- 4. Dataset

- 5. Training and Validation

- 6. Evaluation

- 7. Inference

- 8. Conclusion

The objective of this project is to build a model that can generate captions for images.

The directory structure of this project is shown below:

root/

├── coco/

│ ├── annotations/

│ │ ├── captions_train2014.json

│ │ └── captions_train2014.json

│ ├── karpathy/

│ │ └── dataset_coco.json

│ ├── train2014/

│ └── val2014/

│

└── image_captioning/ # this repository

├── images/

├── pretrained/

├── results/

├── caption.py

├── datasets.py

├── evaluation.py

├── models.py

├── README.md

├── train.py

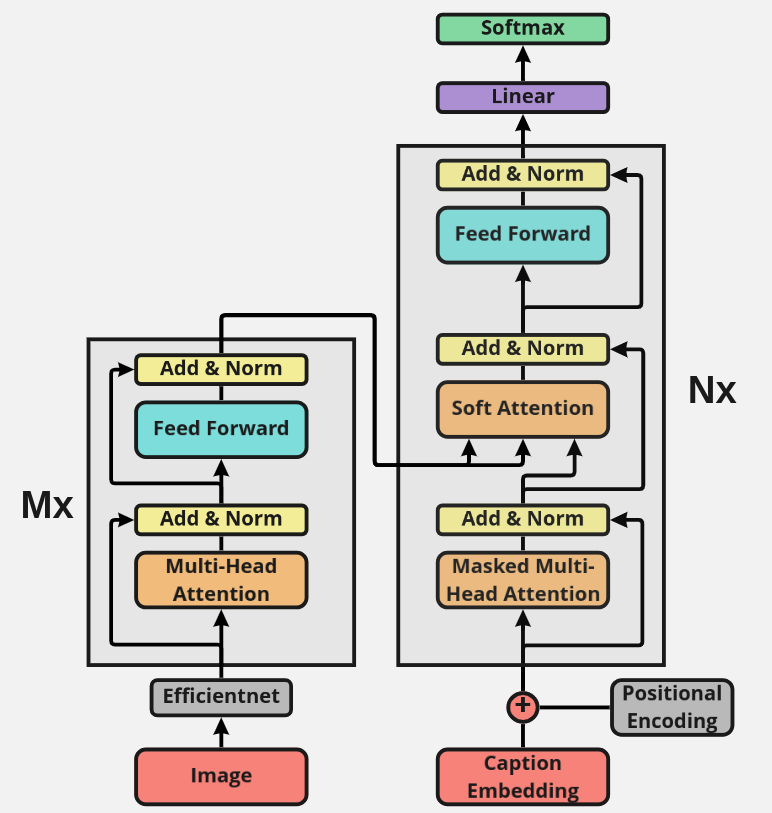

└── utils.pyI use Encoder as Efficientnet to extract features from image and Decoder as Transformer to generate caption. But I also change the attention mechanism at step attention encoder output. Instead of using the Multi-Head Attention mechanism, I use the Attention mechanism each step to attend image features.

Model architecture: image captioning with an EfficientNet encoder and a Transformer decoder.

I'm using the MSCOCO '14 dataset. You need to download the Training (13GB), Validation (6GB), and Test (6GB) splits from MSCOCO and place them in the ../coco directory.

I'm also using Andrej Karpathy's split of the MSCOCO '14 dataset. It contains caption annotations for the MSCOCO, Flickr30k, and Flickr8k datasets. You can download it from here. You'd need to unzip it and place it in the ../coco/karpathy directory.

In Andrej's split, the images are divided into train, val, and test sets. The number of images and captions in each set is shown in the table below:

| Count | train | val | test |
|---|---|---|---|
| Images | 113287 | 5000 | 5000 |
| Captions | 566747 | 25010 | 25010 |
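As a cross-check of the table above, the sketch below counts images and captions per split from `dataset_coco.json`. It assumes the file's usual layout (a top-level `images` list whose entries carry a `split` field and a `sentences` list) and that Karpathy's `restval` images are folded into `train`, which is how the 113,287-image training set is normally formed (82,783 `train` + 30,504 `restval`). `split_counts` is a hypothetical helper, not part of this repository.

```python
from collections import Counter
from typing import Tuple


def split_counts(karpathy: dict) -> Tuple[Counter, Counter]:
    """Count images and captions per split in a Karpathy-style annotation dict."""
    images, captions = Counter(), Counter()
    for entry in karpathy["images"]:
        # Assumption: 'restval' images are merged into the training split
        split = "train" if entry["split"] == "restval" else entry["split"]
        images[split] += 1
        captions[split] += len(entry["sentences"])
    return images, captions
```

Usage: load the file with `json.load` and pass the resulting dict, e.g. `split_counts(json.load(open("../coco/karpathy/dataset_coco.json")))`.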

I preprocessed the images with the following steps:

- Resize the images so that the shorter side is 256 pixels, then center-crop them to 224x224 pixels (as in the transform below).

- Convert the images to RGB.

- Normalize each RGB channel with the mean and standard deviation of the ImageNet images.

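```python
import torchvision.transforms as transforms

# Expects an RGB PIL image, e.g. PIL.Image.open(path).convert("RGB"),
# and returns a normalized tensor of shape (3, 224, 224).
transform = transforms.Compose([
    transforms.Resize(256),      # shorter side -> 256 px
    transforms.CenterCrop(224),  # central 224x224 crop
    transforms.ToTensor(),       # PIL image -> float tensor in [0, 1]
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),  # ImageNet stats
])
```

Captions serve as both the input and the target of the decoder, since each word is used to predict the next one.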

I use `BertTokenizer` (`bert-base-uncased`) from Hugging Face `transformers` to tokenize the captions:

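```python
from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
max_seq_len = 128  # same value as --max_seq_len
caption = "A man riding a wave on top of a surfboard."  # example caption
# Pad/truncate to max_seq_len; [0] drops the batch dimension -> shape (max_seq_len,)
token = tokenizer(caption, max_length=max_seq_len, padding="max_length", truncation=True, return_tensors="pt")["input_ids"][0]
```

For more details, see the `datasets.py` file.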
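Because each caption is both the decoder input and the target, the token ids are typically shifted by one position (teacher forcing): the decoder reads tokens `0..n-2` and is trained to predict tokens `1..n-1`. The sketch below assumes batched `input_ids` from the tokenizer above, a `[PAD]` id of 0 (as in `bert-base-uncased`), and that padding positions are excluded from the `CrossEntropyLoss` via `ignore_index`. `shift_for_teacher_forcing` and `caption_loss` are hypothetical names; `datasets.py` and `train.py` may handle this differently.

```python
import torch
import torch.nn as nn

PAD_ID = 0  # [PAD] id in bert-base-uncased


def shift_for_teacher_forcing(input_ids: torch.Tensor) -> tuple[torch.Tensor, torch.Tensor]:
    """Split token ids of shape (batch, seq_len) into decoder inputs and targets."""
    # Input: every token except the last; target: every token except the first
    return input_ids[:, :-1], input_ids[:, 1:]


# Assumption: padding positions do not contribute to the loss
criterion = nn.CrossEntropyLoss(ignore_index=PAD_ID)


def caption_loss(logits: torch.Tensor, targets: torch.Tensor) -> torch.Tensor:
    """logits: (batch, seq_len - 1, vocab_size); targets: (batch, seq_len - 1)."""
    # Flatten batch and time so each position is one classification example
    return criterion(logits.reshape(-1, logits.size(-1)), targets.reshape(-1))
```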

The model and training hyperparameters are:

- embedding_dim: 512

- vocab_size: 30522

- max_seq_len: 128

- encoder_layers: 6

- decoder_layers: 12

- num_heads: 8

- dropout: 0.1

- n_epochs: 25

- batch_size: 24

- learning_rate: 1e-4

- optimizer: Adam

- adam parameters: betas=(0.9, 0.999), eps=1e-9

- loss: CrossEntropyLoss

- metric: bleu-4

- early_stopping: 5
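The model description says the decoder attends to the image features with a per-step attention instead of multi-head cross-attention, but it does not specify the form. The sketch below assumes Bahdanau-style additive attention (as in Show, Attend and Tell): every decoder position scores every spatial feature vector from EfficientNet, and the softmax-weighted sum of those vectors becomes that position's image context. The decoder width would be `embedding_dim` (512) from the list above; `StepAttention` and `attention_dim` are hypothetical, and the actual module lives in `models.py`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class StepAttention(nn.Module):
    """Additive attention from each decoder position to the image features.

    Assumption: Show-Attend-Tell style attention; models.py may differ.
    """

    def __init__(self, encoder_dim: int, decoder_dim: int, attention_dim: int):
        super().__init__()
        self.enc_proj = nn.Linear(encoder_dim, attention_dim)
        self.dec_proj = nn.Linear(decoder_dim, attention_dim)
        self.score = nn.Linear(attention_dim, 1)

    def forward(self, features: torch.Tensor, hidden: torch.Tensor):
        # features: (B, P, encoder_dim), P = number of spatial positions
        # hidden:   (B, T, decoder_dim), one vector per decoding step
        enc = self.enc_proj(features).unsqueeze(1)              # (B, 1, P, A)
        dec = self.dec_proj(hidden).unsqueeze(2)                # (B, T, 1, A)
        scores = self.score(torch.tanh(enc + dec)).squeeze(-1)  # (B, T, P)
        alpha = F.softmax(scores, dim=-1)    # per-step weights over positions
        context = torch.bmm(alpha, features)  # (B, T, encoder_dim)
        return context, alpha
```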

After each epoch, I evaluate the model on the validation set. For each image, I generate a caption and compute its BLEU-4 score against the list of reference captions with NLTK's `sentence_bleu`. Over all images, I compute the corpus-level BLEU-4 score with NLTK's `corpus_bleu`.
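The per-epoch validation described above can be sketched with NLTK as follows; BLEU-4 uses uniform weights of 0.25 over 1- to 4-grams. Two assumptions are made here: `sentence_bleu` uses NLTK's `method1` smoothing (short captions often have no matching 4-gram, which otherwise yields a score of 0), and `early_stopping: 5` is read as "stop after 5 consecutive epochs without an improvement in validation BLEU-4". `bleu4_scores` and `EarlyStopping` are hypothetical names; see `train.py` for the actual logic.

```python
from nltk.translate.bleu_score import SmoothingFunction, corpus_bleu, sentence_bleu

BLEU4_WEIGHTS = (0.25, 0.25, 0.25, 0.25)
smooth = SmoothingFunction().method1  # assumption: smoothing for per-image scores


def bleu4_scores(references, hypotheses):
    """references: per image, a list of tokenized reference captions.
    hypotheses: per image, one tokenized generated caption.
    Returns (per-image sentence BLEU-4 list, corpus BLEU-4)."""
    per_image = [
        sentence_bleu(refs, hyp, weights=BLEU4_WEIGHTS, smoothing_function=smooth)
        for refs, hyp in zip(references, hypotheses)
    ]
    corpus = corpus_bleu(references, hypotheses, weights=BLEU4_WEIGHTS)
    return per_image, corpus


class EarlyStopping:
    """Stop when validation BLEU-4 has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5):
        self.patience = patience
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, score: float) -> bool:
        """Record one epoch's score; return True if training should stop."""
        if score > self.best:
            self.best = score
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```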

See the `train.py` file for details. Run `train.py` to train the model:

```bash
python train.py \
    --embedding_dim 512 \
    --tokenizer bert-base-uncased \
    --max_seq_len 128 \
    --encoder_layers 6 \
    --decoder_layers 12 \
    --num_heads 8 \
    --dropout 0.1 \
    --model_path ./pretrained/model_image_captioning_eff_transfomer.pt \
    --device cuda:0 \
    --batch_size 24 \
    --n_epochs 25 \
    --learning_rate 1e-4 \
    --early_stopping 5 \
    --image_dir ../coco/ \
    --karpathy_json_path ../coco/karpathy/dataset_coco.json \
    --val_annotation_path ../coco/annotations/captions_val2014.json \
    --log_path ./images/log_training.json \
    --log_visualize_dir ./images/
```

See the `evaluation.py` file for details. Run `evaluation.py` to evaluate the model:

```bash
python evaluation.py \
    --embedding_dim 512 \
    --tokenizer bert-base-uncased \
    --max_seq_len 128 \
    --encoder_layers 6 \
    --decoder_layers 12 \
    --num_heads 8 \
    --dropout 0.1 \
    --model_path ./pretrained/model_image_captioning_eff_transfomer.pt \
    --device cuda:0 \
    --image_dir ../coco/ \
    --karpathy_json_path ../coco/karpathy/dataset_coco.json \
    --val_annotation_path ../coco/annotations/captions_val2014.json \
    --output_dir ./results/
```

To compute the metrics, I use the `pycocoevalcap` package (installed with `pip install pycocoevalcap`), which requires Java 1.8.0 to be installed:

```bash
sudo apt-get update
sudo apt-get install openjdk-8-jdk
java -version
pip install pycocoevalcap
```

I generate captions with beam search using a beam size of 3 and evaluate them with the BLEU-1, BLEU-2, BLEU-3, BLEU-4, METEOR, ROUGE-L, CIDEr, and SPICE scores. The results on the test set (5,000 images) are shown below.

| BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | ROUGE-L | CIDEr | SPICE |
|---|---|---|---|---|---|---|---|
| 0.675 | 0.504 | 0.372 | 0.273 | 0.259 | 0.521 | 0.933 | 0.190 |
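Beam search with a beam size of 3, as used for the results above, keeps the 3 highest-scoring partial captions at each step, scored by summed log-probability. The sketch below is a generic, framework-free version rather than the repository's `generate_caption`: `step_fn` is a hypothetical stand-in for one decoder forward pass that returns log-probabilities for candidate next tokens, and `toy_step` is a made-up decoder for illustration. It applies no length normalization.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def beam_search(
    step_fn: Callable[[Sequence[int]], Dict[int, float]],
    start_id: int,
    end_id: int,
    beam_size: int = 3,
    max_len: int = 128,
) -> List[int]:
    """Return the token sequence with the highest summed log-probability."""
    beams: List[Tuple[float, List[int]]] = [(0.0, [start_id])]
    finished: List[Tuple[float, List[int]]] = []
    for _ in range(max_len - 1):
        # Expand every live beam by every candidate next token
        candidates = [
            (score + logp, seq + [token])
            for score, seq in beams
            for token, logp in step_fn(seq).items()
        ]
        # Keep the beam_size best; captions that emit end_id stop growing
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:beam_size]:
            if seq[-1] == end_id:
                finished.append((score, seq))
            else:
                beams.append((score, seq))
        if not beams:
            break
    # If max_len was reached, unfinished beams are also eligible
    finished.extend(beams)
    return max(finished, key=lambda c: c[0])[1]


# Hypothetical toy decoder: token 0 = start, 1 = end, 2 and 3 = words
def toy_step(seq: Sequence[int]) -> Dict[int, float]:
    table = {
        (0,): {2: math.log(0.6), 3: math.log(0.4)},
        (0, 2): {1: math.log(0.3), 2: math.log(0.45), 3: math.log(0.25)},
        (0, 3): {1: math.log(0.9), 2: math.log(0.1)},
    }
    return table.get(tuple(seq), {1: 0.0})  # any other prefix: end with prob 1
```

With the toy decoder, `beam_search(toy_step, 0, 1, beam_size=3)` returns `[0, 3, 1]` (probability 0.4 × 0.9 = 0.36), whereas greedy decoding (`beam_size=1`) commits to the locally better first token and returns `[0, 2, 2, 1]` (0.6 × 0.45 = 0.27).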

See the `caption.py` file. Run `caption.py` to generate captions for the test images. If you don't have the resources for training, you can download the pretrained model from here.

```bash
python caption.py \
    --embedding_dim 512 \
    --tokenizer bert-base-uncased \
    --max_seq_len 128 \
    --encoder_layers 6 \
    --decoder_layers 12 \
    --num_heads 8 \
    --dropout 0.1 \
    --model_path ./pretrained/model_image_captioning_eff_transfomer.pt \
    --device cuda:0 \
    --beam_size 3
```

The caption can also be generated from Python with `generate_caption`:

```python
from evaluation import generate_caption

# model, image_path, transform, tokenizer, args and device are assumed
# to be set up as in caption.py
cap = generate_caption(
    model=model,
    image_path=image_path,
    transform=transform,
    tokenizer=tokenizer,
    max_seq_len=args.max_seq_len,
    beam_size=args.beam_size,
    device=device,
)
print("--- Caption: {}".format(cap))
```

Some examples of captions generated from COCO images are shown below.

|

|

|

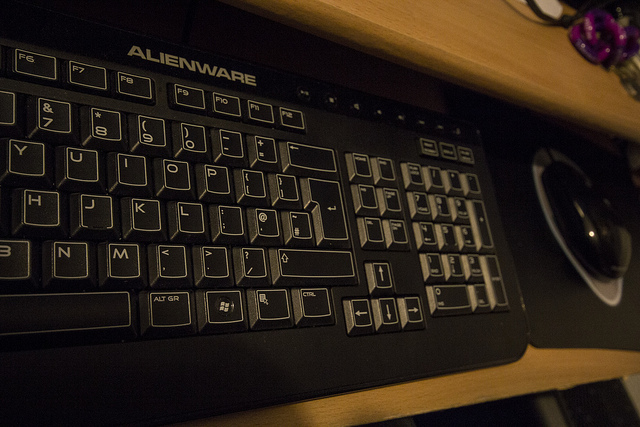

| A bride and groom cutting a wedding cake. | A man riding a wave on top of a surfboard. | A close up view of a keyboard and a mouse. |

|

|

|

| A red fire hydrant sitting on the side of a street. | A woman holding a hot dog in front of her. | A red stop sign sitting on top of a metal pole. |

|

|

|

| A little girl sitting in front of a laptop computer on a desk. | A group of people playing a game of frisbee in a park. | A group of people standing on top of a beach with surfboards. |

Some examples of captions generated for images that are not in the COCO dataset are shown below.

| | | |
|---|---|---|
| A soccer player kicking a soccer ball on a green field. | A baby sleeping in a bed with a blanket on its head. | A brown and white dog standing on top of a grass-covered field. |