Rocky Raccoon is meant as a framework that makes it easy to develop variational quantum circuits that make use of the quantum log-likelihood. The current name is a working title.

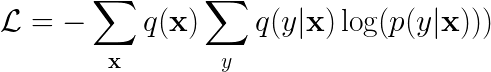

The core idea of this project can be summarized as follows: instead of learning a classical model probability density, we learn a parametrized density matrix, given by

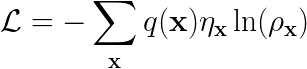

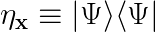

Here, the data density matrix is constructed from the conditional empirical probability of observing a certain label for some sample from the data.
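The conditional empirical probability mentioned above can be estimated by counting how often each label occurs for each distinct sample. The sketch below does that and then builds a rank-one matrix from the square roots of those frequencies. The pure-state construction and the helper names `empirical_conditional` and `pure_state_density` are assumptions made for this illustration; the whitepaper remains the reference for the actual definition.

```python
from collections import Counter, defaultdict
from math import sqrt


def empirical_conditional(samples, labels, nclasses):
    """Return {sample: [q(y|sample) for y in range(nclasses)]} from raw counts."""
    counts = defaultdict(Counter)
    for x, y in zip(samples, labels):
        counts[x][y] += 1
    probs = {}
    for x, counter in counts.items():
        total = sum(counter.values())
        probs[x] = [counter[y] / total for y in range(nclasses)]
    return probs


def pure_state_density(q):
    """Assumed construction: |psi><psi| with amplitudes sqrt(q(y|x))."""
    amps = [sqrt(p) for p in q]
    return [[a * b for b in amps] for a in amps]


# Toy data: samples must be hashable (use tuples for real feature vectors).
# Sample "a" is seen three times with label 0 and once with label 1.
samples = ["a", "a", "a", "a", "b", "b"]
labels = [0, 0, 0, 1, 1, 1]
q = empirical_conditional(samples, labels, nclasses=2)
eta_a = pure_state_density(q["a"])
```

The diagonal of `eta_a` reproduces the empirical frequencies, so its trace is one, as required for a density matrix.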

On the other hand, the model density matrix is represented by a variational quantum circuit. To construct this variational quantum circuit, we use PennyLane. To estimate the density matrix of this circuit, we employ quantum state tomography.
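To make the idea of state tomography concrete, here is a minimal single-qubit sketch: a one-qubit density matrix is fully determined by the expectation values of the three Pauli operators. The function name `reconstruct_qubit_density` is hypothetical and is not part of Rocky Raccoon, which measures the output-qubit subsystem and may therefore need more than one qubit.

```python
def reconstruct_qubit_density(exp_x, exp_y, exp_z):
    """Rebuild a one-qubit density matrix from Pauli expectation values.

    Uses rho = (I + <X> X + <Y> Y + <Z> Z) / 2.
    """
    return [
        [(1 + exp_z) / 2, (exp_x - 1j * exp_y) / 2],
        [(exp_x + 1j * exp_y) / 2, (1 - exp_z) / 2],
    ]


# |0> has <Z> = 1 and <X> = <Y> = 0.
rho_zero = reconstruct_qubit_density(0.0, 0.0, 1.0)

# |+> has <X> = 1 and <Y> = <Z> = 0.
rho_plus = reconstruct_qubit_density(1.0, 0.0, 0.0)
```

For n qubits the same decomposition runs over all 4^n Pauli strings, which is why the number of output qubits is kept as small as the number of classes allows.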

The research behind this project can be found in the whitepaper (work in progress). The article about the quantum log-likelihood can be found on arXiv and is published in Physical Review A.

This project is far from finished, but the most important code is there: the RockyModel and RaccoonWrapper classes are the core of this project and seem to work fine for now.

The code documentation can be found here. The docs are generated automatically with pdoc3. Code is formatted according to PEP 8 using Black.

The following works with Conda 4.6.12. Please use the provided environment.yml. If you have a CUDA-enabled GPU, consider installing tensorflow-gpu==1.14.0 instead by changing the environment.yml file accordingly.

- Install Conda: Quickstart

- Clone the git:

      git clone https://gitlab.com/rooler/pennylane-qllh.git

- Create a Virtual Environment:

      conda env create -f environment.yml

  It is good to know that you can use this to update the env:

      conda env update -f environment.yml

  And removing it can be done with:

      conda remove --name pennylane-qllh --all

- Activate the environment and install the rockyraccoon package:

      conda activate pennylane-qllh
      python setup.py install clean
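After the installation steps, a quick check from inside the activated environment can confirm that the packages are visible to Python. This is only a sketch: it assumes the import names are `rockyraccoon`, `pennylane` and `tensorflow`, and the helper `missing_packages` is made up for this example.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the subset of top-level package names that cannot be found."""
    return [name for name in names if find_spec(name) is None]


# Import names are assumed from the installation steps.
missing = missing_packages(["rockyraccoon", "pennylane", "tensorflow"])
if missing:
    print("Not importable:", ", ".join(missing))
else:
    print("All packages found.")
```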

Rocky Raccoon consists of two parts: a RockyModel class that serves as a template for the hybrid quantum model we wish to train, and a RaccoonWrapper class that minimizes the quantum log-likelihood. Both classes can be found in the model.core module.

Writing your own RockyModel means defining a class that inherits from RockyModel and overriding the methods defined there. If this is done correctly, RaccoonWrapper will do the heavy lifting for us.

Below we discuss the requirements of a RockyModel by looking at the individual methods. In the section after that, we look at some example models that make use of this template. The whitepaper is a good reference to help understand the design choices made here.

    class RockyModel(tf.keras.Model):
        """
        QML model template.
        """

1.) RockyModel inherits from tf.keras.Model, which already gives us a clear template for what TensorFlow requires.

    def __init__(self, nclasses: int, device="default.qubit"):
        """
        Initialize the keras model interface.

        Args:
            nclasses: The number of classes in the data, used to determine the required output qubits.
            device: name of Pennylane Device backend.
        """
        super(RockyModel, self).__init__()
        self.req_qub_out = int(np.ceil(np.log2(nclasses)))
        self.req_qub_in = None
        self.device = device
        self.data_dev = None
        self.model_dev = None
        self.nclasses = nclasses
        self.init = False
        self.circuit = None
        self.trainable_vars = []

2.) In order to determine the subsystem that we will measure to construct the density matrix, we need to determine beforehand how many classes we want to learn, nclasses. As for the device parameter, at the moment Rocky Raccoon only supports the default.qubit device. In principle we rely only on the TFEQnode PennyLane interface, but some preliminary tests with the Qiskit backend led to buggy behaviour.

The variable self.req_qub_out is determined by the number of classes in your implementation, since we need to construct an appropriately sized density matrix from the circuit. On the other hand, self.req_qub_in can be whatever we want, as long as it is greater than or equal to self.req_qub_out. The variables self.model_dev and self.data_dev contain the PennyLane device objects used for executing the quantum and data circuits, which, as mentioned before, only support default.qubit for now. self.circuit has to be assigned a TFEQnode PennyLane quantum circuit. In order for RaccoonWrapper to properly update the gradients, we initialize a list of trainable variables in self.trainable_vars.
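The relation between the number of classes and the number of output qubits can be checked with a few lines of standard-library Python. The formula is the one used for self.req_qub_out; the helper names `required_output_qubits` and `density_matrix_dim` are introduced here for illustration only.

```python
from math import ceil, log2


def required_output_qubits(nclasses):
    """Number of qubits needed to index nclasses labels."""
    return int(ceil(log2(nclasses)))


def density_matrix_dim(nqubits):
    """Side length of the density matrix on nqubits qubits."""
    return 2 ** nqubits


for n in (2, 3, 4, 5, 10):
    qubits = required_output_qubits(n)
    dim = density_matrix_dim(qubits)
    print(f"{n} classes -> {qubits} output qubit(s), {dim}x{dim} density matrix")
```

When the number of classes is not a power of two, the density matrix has more rows than there are labels, so some basis states do not correspond to any class.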

    def __str__(self):
        return 'Gradient Ob-la-descent'

3.) Give your model a name.

    def initialize(self, nfeatures: int):
        """
        Model initialization.

        Args:
            nfeatures: The number of features in X
        """
        self.init = True
        raise NotImplementedError

4.) initialize is called in RaccoonWrapper before training begins. In this method we need to make sure that our TensorFlow variables are initialized and appended to self.trainable_vars, that self.circuit is assigned a proper TFEQnode, and that self.init is set to True so that training can begin. Since some quantum circuits might want to amplitude encode features from the data into the wavefunction, we need to be aware of nfeatures.
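To see why the number of features matters for amplitude encoding, note that a feature vector has to be padded to a power-of-two length and normalized before it can serve as a state vector. The sketch below shows this preprocessing with the standard library only; `amplitude_encode` is a hypothetical helper and not a Rocky Raccoon or PennyLane function.

```python
from math import ceil, log2, sqrt


def amplitude_encode(features):
    """Pad features to the next power of two and normalize to unit length.

    Returns the number of qubits needed and the list of amplitudes.
    """
    if not features:
        raise ValueError("need at least one feature")
    nqubits = max(1, int(ceil(log2(len(features)))))
    padded = list(features) + [0.0] * (2 ** nqubits - len(features))
    norm = sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot amplitude encode the zero vector")
    return nqubits, [v / norm for v in padded]


# Three features need two qubits; the fourth amplitude is padding.
nqubits, amplitudes = amplitude_encode([1.0, 1.0, 1.0])
```

A model that encodes its inputs this way would need at least this many input qubits, which then has to be reconciled with the requirement on self.req_qub_out.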

    def call(self, inputs: tf.Tensor, observable: tf.Tensor):
        """
        Given some observable, we calculate the output of the model.

        Args:
            inputs: N x d tf.Tensor of N samples and d features.
            observable: tf.Tensor with Hermitian matrix containing an observable

        Returns: N expectation values of the observable
        """
        raise NotImplementedError

5.) Finally, we reach the most important method: call. This method is called in the RaccoonWrapper loss function to perform tomography of the model density matrix. Given a tensor of inputs and an observable, it should return the expectation value of the given observable for each sample. To do so, it is recommended to use tf.map_fn, which maps a function over the batch dimension.

Note: Unfortunately, tf.map_fn cannot run in parallel here, since the PennyLane TFEQnode casts the tensors to arrays in order to work with all device backends. This means that tf.map_fn is simply a fancy wrapper around a for-loop.
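The contract of call, one expectation value per sample, can be illustrated without TensorFlow or PennyLane. In the simplified sketch below each input is already a state vector, whereas a real model would first turn features into a state through its circuit; the explicit loop mirrors the sequential behaviour described in the note. The names `expectation` and `call_sketch` are hypothetical.

```python
def expectation(state, observable):
    """Return <state|observable|state> for a state vector and Hermitian matrix."""
    total = 0j
    for i, a in enumerate(state):
        for j, b in enumerate(state):
            total += complex(a).conjugate() * observable[i][j] * b
    # The expectation value of a Hermitian observable is real.
    return total.real


def call_sketch(inputs, observable):
    """One expectation value per sample, computed sample by sample."""
    return [expectation(state, observable) for state in inputs]


PAULI_Z = [[1, 0], [0, -1]]
PAULI_X = [[0, 1], [1, 0]]
batch = [[1, 0], [0, 1], [2 ** -0.5, 2 ** -0.5]]  # |0>, |1>, |+>
z_values = call_sketch(batch, PAULI_Z)
x_values = call_sketch(batch, PAULI_X)
```

Calling such a function once per observable in a tomographically complete set gives the expectation values from which the density matrix of each sample can be assembled.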

One example discussed in the whitepaper is a quantum circuit whose parameters are controlled by a dense neural network. Schematically this looks as follows:

Data is encoded in the parameters of the circuit (theta) by a dense neural network. Using a PennyLane StronglyEntanglingLayer, we entangle the zero state based on these parameters. By performing quantum state tomography, we obtain the data and model density matrices. Then, we calculate the quantum log-likelihood and the corresponding gradients.
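As a small numerical illustration of the last step, the sketch below evaluates a negative quantum log-likelihood of the form -Tr(eta ln rho) for a single sample, restricted to real symmetric 2x2 matrices so that the standard library suffices. The sign convention, the single-sample form, and the names `eta`, `rho` and `neg_quantum_log_likelihood` are assumptions for this example; the whitepaper and the article define the quantity actually used.

```python
from math import atan2, cos, log, sin, sqrt


def neg_quantum_log_likelihood(eta, rho):
    """Return -Tr(eta ln rho) for real symmetric 2x2 matrices.

    rho must be positive definite so that ln rho exists.
    """
    a, b, d = rho[0][0], rho[0][1], rho[1][1]
    mean = (a + d) / 2
    radius = sqrt(((a - d) / 2) ** 2 + b ** 2)
    eigenvalues = (mean + radius, mean - radius)
    if eigenvalues[1] <= 0:
        raise ValueError("rho must be positive definite")
    # Rotation angle that diagonalizes rho; the first eigenvector belongs
    # to the larger eigenvalue.
    theta = 0.5 * atan2(2 * b, a - d)
    eigenvectors = ((cos(theta), sin(theta)), (-sin(theta), cos(theta)))
    total = 0.0
    for lam, (v0, v1) in zip(eigenvalues, eigenvectors):
        # <v|eta|v> is the weight eta puts on this eigenvector of rho.
        weight = v0 * (eta[0][0] * v0 + eta[0][1] * v1) + v1 * (
            eta[1][0] * v0 + eta[1][1] * v1
        )
        total += weight * log(lam)
    return -total


# Diagonal matrices: the value reduces to the classical cross-entropy.
eta_diag = [[0.8, 0.0], [0.0, 0.2]]
rho_diag = [[0.6, 0.0], [0.0, 0.4]]
loss_diag = neg_quantum_log_likelihood(eta_diag, rho_diag)

# Data state |+><+| against a model that is mostly, but not fully, |+><+|.
eta_plus = [[0.5, 0.5], [0.5, 0.5]]
rho_model = [[0.5, 0.4], [0.4, 0.5]]
loss_plus = neg_quantum_log_likelihood(eta_plus, rho_model)
```

In the second case the off-diagonal elements matter: the model puts weight 0.9 on the data state, so the loss is -ln 0.9, something a purely classical comparison of the diagonals (both 0.5) would not detect.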

To do: improve concurrency.