- 👋 Hi, I’m @TnSor06

- 👀 I’m interested in AI🧠 and Robotics🤖

- 🌱 I’m currently learning Deep Reinforcement Learning

- 💞️ I’m looking to collaborate on more Reinforcement Learning Projects

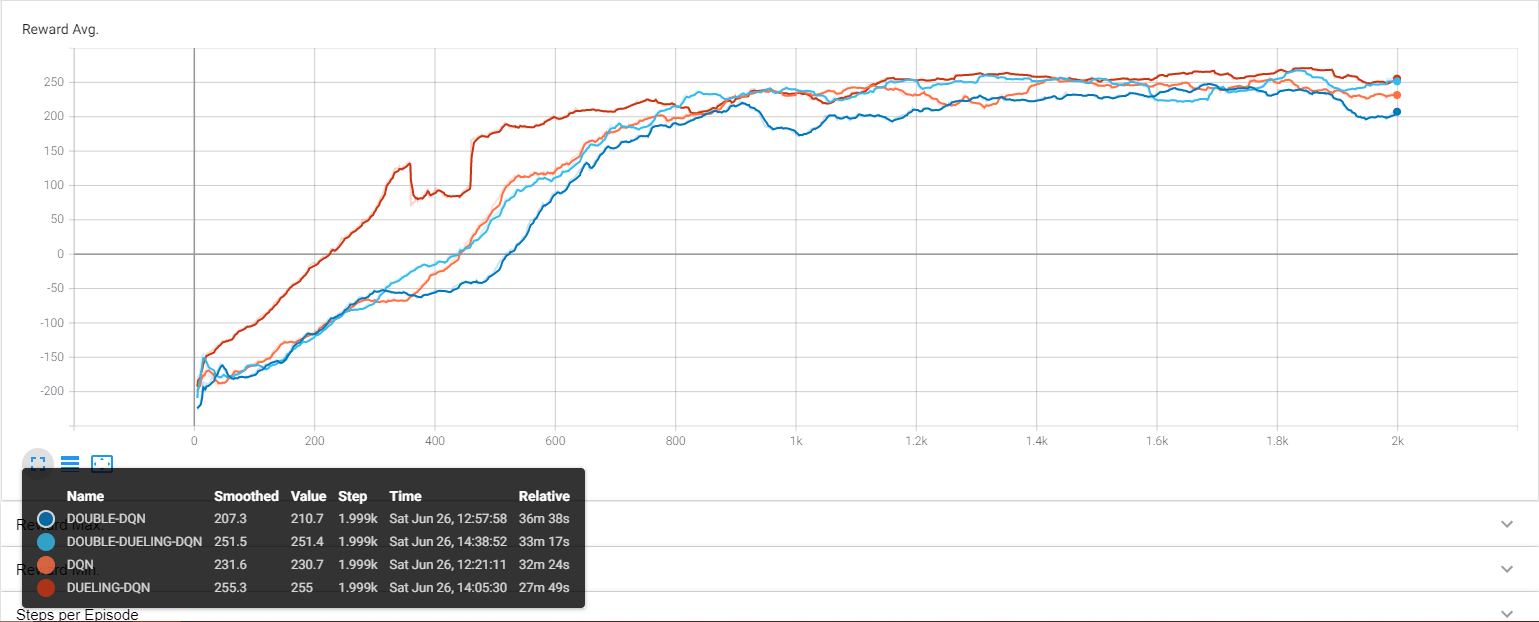

- ✔ DQN + Double DQN + Dueling DQN + Double Dueling DQN

- 🚧 More DQN Projects on the way

This project uses Deep Reinforcement Learning to solve the Lunar Lander environment from OpenAI Gym. Any of the following four methods can be used:

- Deep Q Learning

- Double Deep Q Learning

- Dueling Deep Q Learning

- Double Dueling Deep Q Learning
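The four variants differ in two independent choices: how the bootstrap target is computed (plain or Double) and how the network produces Q-values (plain or Dueling). The sketch below illustrates both using plain Python lists instead of the project's PyTorch tensors. The function names are hypothetical and the formulas are the standard textbook ones, so they may not match `main.py` line for line.

```python
def dqn_target(reward, next_q_target, gamma=0.99, done=False):
    """Plain DQN target: bootstrap from the largest target-network Q-value."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN target: the online network selects the action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


def dueling_q_values(state_value, advantages):
    """Dueling head: Q(s, a) = V(s) + A(s, a) - mean over actions of A(s, .)."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + adv - mean_advantage for adv in advantages]


if __name__ == "__main__":
    # Hypothetical Q-values for the four Lunar Lander actions in the next state.
    q_online = [1.0, 3.0, 2.0, 0.5]
    q_target = [4.0, 1.5, 2.5, 0.0]
    print(dqn_target(1.0, q_target))
    print(double_dqn_target(1.0, q_online, q_target))
    print(dueling_q_values(2.0, [1.0, -1.0, 0.0, 0.0]))
```

"Double Dueling DQN" simply combines the two: a dueling network produces the Q-values and the Double DQN rule builds the target from them.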

Demo clips (images not preserved): Before training, DQN, Double DQN, Dueling DQN, Double Dueling DQN.

The Agent's interaction with the Environment is based on the following four discrete actions:

- 0: Do Nothing

- 1: Fire Left Orientation Engine

- 2: Fire Main Engine

- 3: Fire Right Orientation Engine

The Environment returns a state vector of length 8 with continuous values in the range [-1, +1]:

- 0: X coordinate of Lander

- 1: Y coordinate of Lander

- 2: Horizontal Velocity of Lander

- 3: Vertical Velocity of Lander

- 4: Angle of Lander

- 5: Angular Velocity of Lander

- 6: Left Lander Leg contact with Ground

- 7: Right Lander Leg contact with Ground
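As a small illustration of the action and state layout, the sketch below maps action indices to names and unpacks an observation into labelled fields. The names `ACTIONS`, `STATE_FIELDS`, and `describe_state` are hypothetical helpers, not part of the project. The field order is an assumption based on the Gym LunarLander-v2 source, which places horizontal velocity before vertical velocity.

```python
# Hypothetical lookup table for the four discrete actions.
ACTIONS = {
    0: "do nothing",
    1: "fire left orientation engine",
    2: "fire main engine",
    3: "fire right orientation engine",
}

# Assumed order of the 8 state entries (Gym LunarLander-v2 ordering).
STATE_FIELDS = (
    "x",
    "y",
    "vx",
    "vy",
    "angle",
    "angular_velocity",
    "left_leg_contact",
    "right_leg_contact",
)


def describe_state(obs):
    """Return the observation as a dict keyed by field name."""
    if len(obs) != len(STATE_FIELDS):
        raise ValueError(f"expected {len(STATE_FIELDS)} values, got {len(obs)}")
    state = dict(zip(STATE_FIELDS, obs))
    # The two leg entries act as contact flags, so expose them as booleans.
    state["left_leg_contact"] = bool(state["left_leg_contact"])
    state["right_leg_contact"] = bool(state["right_leg_contact"])
    return state


if __name__ == "__main__":
    example = [0.1, 0.9, 0.0, -0.3, 0.05, 0.0, 0.0, 1.0]
    print(describe_state(example))
    print(ACTIONS[2])
```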

More information is available on the OpenAI Gym LunarLander-v2 page or in the Gym GitHub repository.

The episode finishes if the lander crashes or comes to rest. Normally, LunarLander-v2 defines "solving" as reaching an average reward of 200 over 100 consecutive episodes. To improve the efficiency of the agent, an extra penalty was added to the reward:

    # main.py at line 36
    # Reward penalty for moving horizontally away from zero
    # Range is [-1.00 to +1.00] with center at zero to maximize efficiency
    reward -= abs(obs[0]) * 0.05

`obs` is the state received from the environment. The absolute value is taken so that drifting away from the center in either direction is penalized, scaled by a small factor.
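To make the penalty and the "solved" criterion concrete, here is a minimal sketch. `shaped_reward` mirrors the one-line penalty above, and `is_solved` checks the average over the last 100 episode scores. Both function names and their parameters are hypothetical; the project's own bookkeeping in `main.py` may differ.

```python
def shaped_reward(reward, obs, scale=0.05):
    """Subtract a small penalty proportional to the distance of
    obs[0] (the X coordinate) from the center at zero."""
    return reward - abs(obs[0]) * scale


def is_solved(scores, window=100, threshold=200.0):
    """True once the mean of the last `window` episode scores
    reaches `threshold`; False while fewer than `window` scores exist."""
    if len(scores) < window:
        return False
    recent = list(scores)[-window:]
    return sum(recent) / window >= threshold


if __name__ == "__main__":
    obs = [0.5, 0.8, 0.0, -0.2, 0.0, 0.0, 0.0, 0.0]
    print(shaped_reward(1.0, obs))
    print(is_solved([210.0] * 100))
```

Because the penalty depends only on `abs(obs[0])`, it is symmetric: a lander at x = -0.5 is penalized exactly as much as one at x = +0.5.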

NOTE: Replay Buffer Code in utils.py is taken from Udacity's Course Code.
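For readers unfamiliar with experience replay, the following is a generic sketch of a fixed-size replay buffer. It is not the code in `utils.py` and not Udacity's code; the class and field names are assumptions, and only the defaults (`max_mem_size=100000`, `batch_size=64`) are taken from the command-line options documented in this README.

```python
import random
from collections import deque, namedtuple

# One stored transition; the field names are illustrative.
Experience = namedtuple(
    "Experience", ["state", "action", "reward", "next_state", "done"]
)


class ReplayBuffer:
    """Fixed-size buffer that drops the oldest transition when full."""

    def __init__(self, max_mem_size=100_000, batch_size=64, seed=0):
        self.memory = deque(maxlen=max_mem_size)
        self.batch_size = batch_size
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.memory.append(Experience(state, action, reward, next_state, done))

    def sample(self):
        """Draw `batch_size` distinct transitions uniformly at random."""
        return self.rng.sample(list(self.memory), k=self.batch_size)

    def __len__(self):
        return len(self.memory)


if __name__ == "__main__":
    buffer = ReplayBuffer(max_mem_size=3, batch_size=2)
    for step in range(5):
        buffer.add(state=step, action=0, reward=1.0, next_state=step + 1, done=False)
    print(len(buffer))
    print(buffer.sample())
```

Sampling uniformly from past transitions breaks the correlation between consecutive steps, which is the usual motivation for using a replay buffer with DQN-style agents.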

The Project uses the following libraries:

- PyTorch

- Tensorboard

- OpenAI-Gym

- OpenCV-Python

- NumPy

- Box2D-py (for Google Colab)

Use the requirements.txt file to install the required libraries for this project.

    pip install -r requirements.txt

Then run the project:

    python main.py

The available command-line options are:

    usage: main.py [-h] [-a ] [-n ] [-t ] [--seed ] [--scores_window ] [--n_games ] [--limit_steps ] [--gamma ]
                   [--epsilon ] [--eps_end ] [--eps_dec ] [--batch_size ] [--n_epochs ] [--update_every ]
                   [--max_mem_size ] [--tau ] [--lr ] [--fc1_dims ] [--fc2_dims ]

    Solving Lunar Lander using Deep Q Network

    optional arguments:
      -h, --help            show this help message and exit
      -a [], --agent_mode []
                            Enter Agent Type: SIMPLE or DOUBLE (default: SIMPLE)
      -n [], --network_mode []
                            Enter Network Type: SIMPLE or DUELING (default: SIMPLE)
      -t [], --test_mode []
                            Enter Mode Type: TEST or TRAIN (default: TEST)
      --seed []             Initialize the Random Number Generator (default: 0)
      --scores_window []    Length of scores window for evaluation (default: 100)
      --n_games []          Number of games to run on (default: 2000)
      --limit_steps []      Number of steps per run (default: 1000)
      --gamma []            Discount Factor for Training (default: 0.99)
      --epsilon []          Exploration Parameter Initial Value (default: 0.9)
      --eps_end []          Exploration Parameter Minimum Value (default: 0.01)
      --eps_dec []          Exploration Parameter Decay Value (default: 0.995)
      --batch_size []       Number of samples per learning step (default: 64)
      --n_epochs []         Number of epochs per learning step (default: 5)
      --update_every []     Number of steps to Update the Target Network (default: 5)
      --max_mem_size []     Memory size of Replay Buffer (default: 100000)
      --tau []              Parameter for Soft Update of Target Network (default: 0.001)
      --lr []               Learning Rate for Training (default: 0.0005)
      --fc1_dims []         Number of Nodes in First Hidden Layer (default: 64)
      --fc2_dims []         Number of Nodes in Second Hidden Layer (default: 64)

NOTE: Unless overridden on the command line, `--epsilon` and `--n_games` default to 1.0 and 2000 in training mode, and to 0.0 and 5 in testing mode.
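Two of the hyperparameters above are easier to understand with a worked example: the exploration schedule (`--epsilon`, `--eps_dec`, `--eps_end`) and the soft target update (`--tau`). The sketch below assumes a multiplicative epsilon decay applied once per episode and the usual Polyak-style soft update; both are assumptions about how `main.py` uses these flags, and the function names are hypothetical.

```python
def decay_epsilon(epsilon, eps_dec=0.995, eps_end=0.01):
    """Assumed schedule: multiply by eps_dec, never dropping below eps_end."""
    return max(eps_end, epsilon * eps_dec)


def soft_update(target_params, online_params, tau=0.001):
    """Blend each target parameter towards its online counterpart:
    target <- tau * online + (1 - tau) * target."""
    return [
        tau * online + (1.0 - tau) * target
        for target, online in zip(target_params, online_params)
    ]


if __name__ == "__main__":
    # Count how many decay steps it takes to reach the floor from 1.0.
    epsilon, steps = 1.0, 0
    while epsilon > 0.01:
        epsilon = decay_epsilon(epsilon)
        steps += 1
    print(steps, epsilon)

    # With a small tau the target parameters move only slightly per update.
    print(soft_update([0.0, 1.0], [1.0, 1.0], tau=0.001))
```

With a small `tau`, the target network trails the online network slowly, which is intended to keep the bootstrap targets stable between updates.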

To train an agent:

    # For Basic DQN
    python3 main.py --test_mode=TRAIN --agent_mode=SIMPLE --network_mode=SIMPLE

    # For Double DQN
    python3 main.py --test_mode=TRAIN --agent_mode=DOUBLE --network_mode=SIMPLE

    # For Dueling DQN
    python3 main.py --test_mode=TRAIN --agent_mode=SIMPLE --network_mode=DUELING

    # For Double Dueling DQN
    python3 main.py --test_mode=TRAIN --agent_mode=DOUBLE --network_mode=DUELING

To test a trained agent:

    # For Basic DQN
    python3 main.py --test_mode=TEST --agent_mode=SIMPLE --network_mode=SIMPLE

    # For Double DQN
    python3 main.py --test_mode=TEST --agent_mode=DOUBLE --network_mode=SIMPLE

    # For Dueling DQN
    python3 main.py --test_mode=TEST --agent_mode=SIMPLE --network_mode=DUELING

    # For Double Dueling DQN
    python3 main.py --test_mode=TEST --agent_mode=DOUBLE --network_mode=DUELING

Average Reward (plot image not preserved)

    tensorboard --logdir=logs

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.