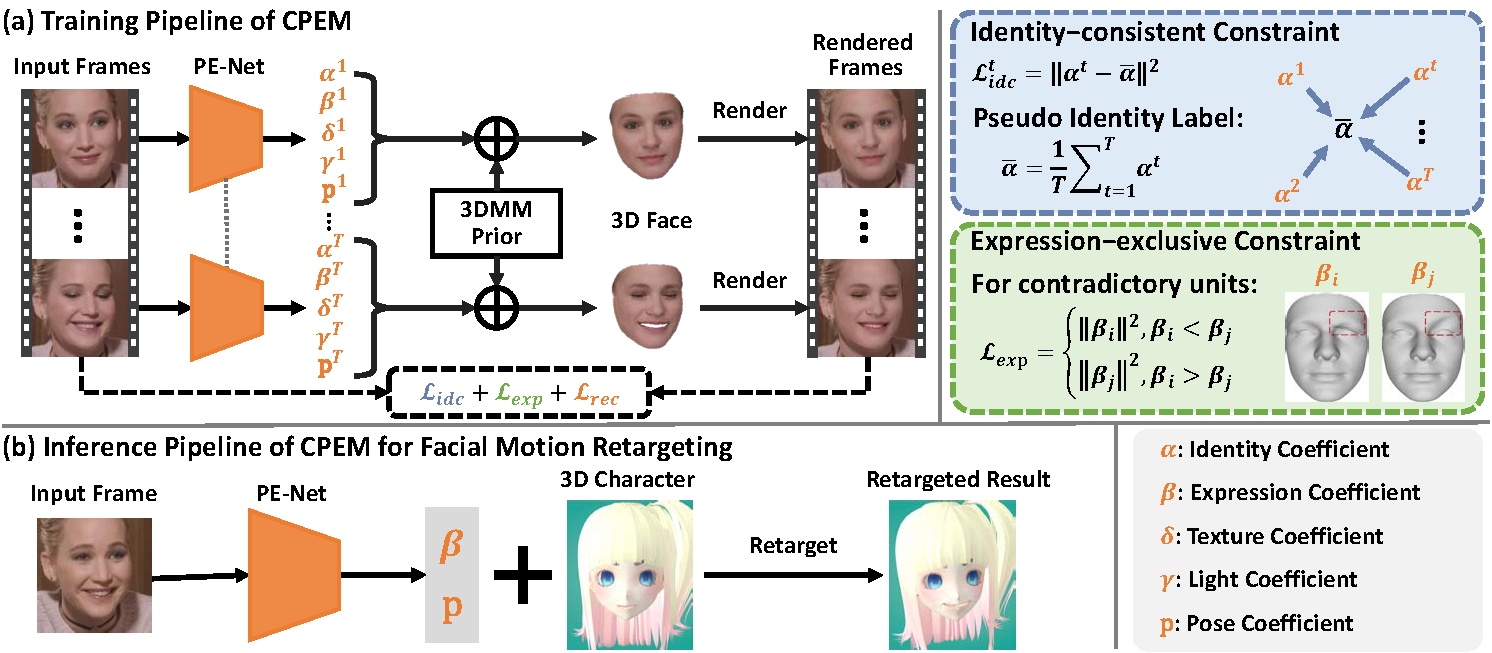

This repository contains the code and supplementary material for our AAAI paper: "Towards Accurate Facial Motion Retargeting with Identity-Consistent and Expression-Exclusive Constraints".

A demo showing facial motion retargeting results of our CPEM.

Towards Accurate Facial Motion Retargeting with Identity-Consistent and Expression-Exclusive Constraints

[Supplementary material]

Langyuan Mo, Haokun Li, Chaoyang Zou, Yubing Zhang, Ming Yang, Yihong Yang, Mingkui Tan

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), 2022

- pytorch>=1.7.0

- pytorch3d

- face_alignment

- facenet_pytorch

- opencv-python

- numpy

- pyyaml

- scipy
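A quick way to confirm the environment is ready is to check that each dependency can be imported. The sketch below assumes the usual import names for these packages (`torch` for pytorch, `cv2` for opencv-python, `yaml` for pyyaml). The helper name `find_missing` is illustrative and not part of this repo, and the check does not verify the `pytorch>=1.7.0` version constraint.

```python
import importlib.util

# Import names assumed for the packages listed above.
REQUIRED_MODULES = [
    "torch", "pytorch3d", "face_alignment", "facenet_pytorch",
    "cv2", "numpy", "yaml", "scipy",
]


def find_missing(modules):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All dependencies are importable.")
```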

- Clone this repo:

      git clone https://github.com/deepmo24/CPEM.git
      cd CPEM/

- Install the dependencies above.

- Install the face detector FaceBoxes:

      cd FaceBoxes/
      sh ./build_cpu_nms.sh
      pip install onnxruntime  # if use onnxruntime to speed up inference

- 3DMM model + pretrained model

  - We use the BFM09 model processed by Deep3DFaceReconstruction as the face identity and texture model. The expression model is obtained by using deformation transfer to transfer the expression blendshapes from FaceWarehouse to BFM09.

  - Download link: Dropbox or BaiduNetdisk (extraction code: 7i7u)

  - Put the 3DMM model in the `./data/BFM` directory.

  - Put the pretrained model in the `./data/pretrained_model` directory.
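Before running anything, it can help to confirm that the downloaded files ended up where the scripts expect them. This is a minimal sketch: the function name `check_model_files` is hypothetical, and the checkpoint file name is the one used in this README's test commands. The contents of `./data/BFM` are not checked because the README does not list them.

```python
from pathlib import Path


def check_model_files(data_root="./data"):
    """Report whether the 3DMM directory and the pretrained checkpoint exist."""
    root = Path(data_root)
    expected = {
        "3DMM model directory": root / "BFM",
        # Checkpoint name taken from the test commands in this README.
        "pretrained checkpoint": root / "pretrained_model" / "resnet50-id-exp-300000.ckpt",
    }
    return {label: path.exists() for label, path in expected.items()}


if __name__ == "__main__":
    for label, found in check_model_files().items():
        print(f"{label}: {'found' if found else 'MISSING'}")
```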

- Run general demo:

      python test.py --mode demo \
          --checkpoint_path ./data/pretrained_model/resnet50-id-exp-300000.ckpt \
          --image_path <path-to-image> --save_path <path-to-save>

- Run facial motion retargeting:

      CUDA_VISIBLE_DEVICES=1 python test.py --mode retarget \
          --checkpoint_path ./data/pretrained_model/resnet50-id-exp-300000.ckpt \
          --source_coeff_path <path-to-coefficient> --target_image_path <path-to-image> \
          --save_path <path-to-save>

- Render 3D face shape on the input image:

  - Install the rendering library Sim3DR:

        cd Sim3DR/
        sh ./build_sim3dr.sh

  - Render the shape on the image plane:

        python test.py --mode render_shape \
            --checkpoint_path ./data/pretrained_model/resnet50-id-exp-300000.ckpt \
            --image_path <path-to-image> --save_path <path-to-save>
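Conceptually, retargeting with a 3DMM of this kind keeps the target's identity coefficients and swaps in the source's expression coefficients. The sketch below illustrates the idea with a generic linear model (vertices = mean + identity basis × α + expression basis × β). The toy dimensions, random bases, and function names are placeholders chosen for illustration; this is not the code path used by `test.py`.

```python
import numpy as np


def reconstruct_shape(mean_shape, id_basis, exp_basis, id_coeff, exp_coeff):
    """Generic linear 3DMM: flattened vertices = mean + id_basis @ alpha + exp_basis @ beta."""
    return mean_shape + id_basis @ id_coeff + exp_basis @ exp_coeff


def retarget(target_id_coeff, source_exp_coeff):
    """Pair the target's identity coefficients with the source's expression coefficients."""
    return target_id_coeff, source_exp_coeff


rng = np.random.default_rng(0)
n_vertices, n_id, n_exp = 5, 4, 3  # toy sizes, far smaller than a real face model

# Random stand-ins for the model's mean shape and bases (assumptions, not BFM09 data).
mean_shape = rng.normal(size=3 * n_vertices)
id_basis = rng.normal(size=(3 * n_vertices, n_id))
exp_basis = rng.normal(size=(3 * n_vertices, n_exp))

# Coefficients as they might be predicted for a source face and a target face.
source_id, source_exp = rng.normal(size=n_id), rng.uniform(size=n_exp)
target_id, target_exp = rng.normal(size=n_id), rng.uniform(size=n_exp)

id_coeff, exp_coeff = retarget(target_id, source_exp)
retargeted = reconstruct_shape(mean_shape, id_basis, exp_basis, id_coeff, exp_coeff)
```

Because the model is linear, the retargeted face differs from the target's neutral face only by the source's expression offset, which is why identity-consistent and expression-exclusive coefficients matter for the result.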

- We train our model with three datasets: VoxCeleb2, Feafa (access must be applied for), and 300W-LP.

- Download the above datasets.

- Construct the dataset as below:

      <train dataset>
      ├── data
      │   └── <person_id>/<video_clips>/<images>
      ├── face_mask
      │   └── <person_id>/<video_clips>/<images>
      ├── landmarks
      │   └── <person_id>/<video_clips>/<landmarks>
      ├── landmarks2d
      │   └── <person_id>/<video_clips>/<landmarks>
      └── front_face_flag.csv

  - The `data` folder contains the raw images.
  - The `face_mask` folder contains the face skin masks w.r.t. images, which can be generated using face-parsing.PyTorch.
  - The `landmarks` folder contains the 3D facial landmarks w.r.t. images, which can be generated using face_alignment.
  - The `landmarks2d` folder contains the 2D facial landmarks w.r.t. images, which can be generated using dlib.
  - The `front_face_flag.csv` file saves the front face flag, which can be generated using `preprocess/cal_yaw_angle.py`.

- We supply a demo dataset in `./data/demo_dataset` to help prepare your own datasets.
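The layout described above can be checked with a few lines of Python before training starts. This is a sketch only: `missing_entries` is a hypothetical helper, and it checks the top-level folders and the CSV file, not the per-person, per-clip contents.

```python
from pathlib import Path

# Top-level entries taken from the dataset layout described in this README.
EXPECTED_DIRS = ["data", "face_mask", "landmarks", "landmarks2d"]
EXPECTED_FILES = ["front_face_flag.csv"]


def missing_entries(dataset_root):
    """Return the expected top-level folders and files that are absent."""
    root = Path(dataset_root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing
```

For example, `missing_entries("data/demo_dataset/voxceleb2")` should return an empty list if the demo dataset follows the same layout.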

- (Try) training the model using the demo dataset:

      python train.py --result_root results/cpem_demo \
          --voxceleb2_root data/demo_dataset/voxceleb2 \
          --lp_300w_root data/demo_dataset/300w_lp

- Training the model using the full dataset:

      python train.py --result_root results/<experiment-name> \
          --voxceleb2_root <path-to-voxceleb2> \
          --feafa_root <path-to-feafa> \
          --lp_300w_root <path-to-300w_lp>
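When launching several experiments, it may be convenient to assemble the training command programmatically. The sketch below uses only the flags shown in the commands above; `build_train_command` is a hypothetical helper. It omits `--feafa_root` when no path is given, mirroring the demo command, which runs without it.

```python
import shlex


def build_train_command(result_root, voxceleb2_root, lp_300w_root, feafa_root=None):
    """Build the train.py argument list from the flags shown in this README."""
    cmd = [
        "python", "train.py",
        "--result_root", result_root,
        "--voxceleb2_root", voxceleb2_root,
    ]
    if feafa_root is not None:
        # Feafa requires an access application, so it is treated as optional here.
        cmd += ["--feafa_root", feafa_root]
    cmd += ["--lp_300w_root", lp_300w_root]
    return cmd


demo_cmd = build_train_command(
    "results/cpem_demo",
    "data/demo_dataset/voxceleb2",
    "data/demo_dataset/300w_lp",
)
print(shlex.join(demo_cmd))
```

The resulting list can be passed to `subprocess.run(demo_cmd, check=True)` from the repository root.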

- We supply the FACS names of the expression blendshapes here.

If this work is useful for your research, please star our repo and cite our paper.

    @inproceedings{mo2022cpem,
      title={Towards Accurate Facial Motion Retargeting with Identity-Consistent and Expression-Exclusive Constraints},
      author={Mo, Langyuan and Li, Haokun and Zou, Chaoyang and Zhang, Yubing and Yang, Ming and Yang, Yihong and Tan, Mingkui},
      booktitle={Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)},
      year={2022}
    }